THE LAB #15: Deep diving into the Apify world

Let's use the new Python SDK to get to know the Apify ecosystem better

This article is sponsored by Apify, your one-stop shop for web scraping, data extraction, and RPA.

For all our readers Apify gives the discount code CLUBSPECIAL30 to save 30% on all paid plans.

Note: the sponsorship doesn’t affect the content of the article, which is totally mine and is not influenced by anyone at Apify.

As we have seen in the latest news recap, Apify just released its Python SDK for its scraping environment and, to celebrate our new partnership, I thought it could be interesting to use this space to test the waters in their environment.

If you’re already an Apify user, this post probably won’t add much to your knowledge, but if you, just like me, don’t have any experience in this ecosystem, it’s a good way to start.

What is Apify?

Apify is a web scraping platform that supports developers through the whole life cycle of a scraper. For the coding phase, it has developed Crawlee, an open-source Node.js library for web scraping. On the platform, you can then run and monitor your scrapers and, finally, sell them in the Apify store.

Basically, the code of your scraper is “Apified” by incorporating it within an Actor, which is a serverless cloud program running on the Apify platform that can perform our scraping operations.

But let’s look at each phase in more detail.

Writing a scraper with Scrapy and Apify actor

As mentioned, Apify developed Crawlee, its open-source Node.js library for web scraping. It’s available on GitHub, where its repo has more than 7k stars, a sign of the good job done.

But being a Pythonista, I prefer using Scrapy and the Apify command-line interface to get started.

Let’s start with the basics

First, let’s install the Apify CLI and then create a ready-to-use template:

npm -g install apify-cli

apify create ecommerce-scraper -t=python-scrapy

After the last command, a Python virtual environment is launched and all the requirements are installed.

When this step ends, the ecommerce-scraper folder contains the generated project files.

We see the requirements file, the scrapy.cfg one, a storage folder where all the output of the executions will be saved, and the src folder with the spider’s code.

If you’re familiar with Scrapy, in the src folder you will find the usual files, already modified to integrate the scraper with its Actor.

Because the output of the scraper is handled by the actor, the items.py file is basically useless: Scrapy normally uses it to define the output data model, which is now defined in the scraper itself.

The pipelines.py and settings.py files are customized but still standard for Scrapy, while a new file called main.py is created, and this is where our execution starts.

Let’s say in our example we want to gather product prices, names and URLs for two product categories on the Lululemon website.

First of all, let’s modify the scraper so that it starts from the site we want, by editing the starting URL in the main.py file in this way.

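from apify import Actor
from scrapy.crawler import CrawlerProcess
from scrapy.utils.project import get_project_settings

# Relative imports as laid out by the template (assumed); adjust if your project differs
from .pipelines import ActorDatasetPushPipeline
from .spiders.title_spider import TitleSpider


async def main():
    async with Actor:
        actor_input = await Actor.get_input() or {}
        max_depth = actor_input.get('max_depth', 1)
        # Default entry point used when the Actor input has no start_urls
        default_start_urls = [{'url': 'https://www.eu.lululemon.com/'}]
        start_urls = [
            start_url.get('url')
            for start_url in actor_input.get('start_urls', default_start_urls)
        ]

        settings = get_project_settings()
        # Send every scraped item to the Actor's dataset
        settings['ITEM_PIPELINES'] = {ActorDatasetPushPipeline: 1}
        settings['DEPTH_LIMIT'] = max_depth

        process = CrawlerProcess(settings, install_root_handler=False)

        # If you want to run multiple spiders, call `process.crawl` for each of them here
        process.crawl(TitleSpider, start_urls=start_urls)

        process.start()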
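To make the input handling easier to follow, here is a minimal, standalone sketch of how the list of starting URLs could be resolved from the Actor input, with two category pages as the fallback. The function name and the two category URLs are placeholders I made up for illustration, not real paths on the website.

```python
# Placeholder category URLs (hypothetical paths, replace with the real ones).
DEFAULT_START_URLS = [
    {"url": "https://www.eu.lululemon.com/category-one-placeholder"},
    {"url": "https://www.eu.lululemon.com/category-two-placeholder"},
]


def resolve_start_urls(actor_input, defaults=DEFAULT_START_URLS):
    """Return the URL strings to crawl, falling back to the defaults."""
    # A missing or empty 'start_urls' entry means "use the defaults".
    entries = (actor_input or {}).get("start_urls") or defaults
    # Skip malformed entries that have no 'url' key.
    return [entry["url"] for entry in entries if entry.get("url")]


if __name__ == "__main__":
    print(resolve_start_urls({}))
    print(resolve_start_urls({"start_urls": [{"url": "https://example.com/a"}]}))
```

The same shape of input (a list of objects with a url key) is what the template code above expects, so a helper like this could replace the list comprehension without changing anything else.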

While in this file I’ve only changed the starting URL, in the scraper file I’ve rewritten the scraper entirely to match my style.

I’ve used XPath selectors, declared the two categories I want to scrape and the data model, and included the Scrapy Request to handle pagination. It’s nothing different from an ordinary Scrapy spider.

After increasing the max depth option in the main file (we are following links more than one level deep), we can run our scraper with the command

apify run

We’ll get the first 20 items, since the robots.txt orders to stop there.

Anyway, this is just an exercise: the spider works and we can see the results in the directory storage/datasets/results, where we’ll find one JSON file per item returned.
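Since every item lands in its own file, a few lines of standard-library Python are enough to load them all back into a single list. This is only a sketch: the function name is mine, and skipping files whose name starts with a double underscore is a precaution, assuming the folder might also contain metadata files.

```python
import json
from pathlib import Path


def load_items(dataset_dir="storage/datasets/results"):
    """Load every per-item JSON file of a local dataset into one list."""
    items = []
    for path in sorted(Path(dataset_dir).glob("*.json")):
        # Precaution (assumption): ignore metadata-like files such as __metadata__.json.
        if path.name.startswith("__"):
            continue
        with path.open(encoding="utf-8") as handle:
            items.append(json.load(handle))
    return items


if __name__ == "__main__":
    # Only meaningful after a local run has produced the dataset folder.
    if Path("storage/datasets/results").is_dir():
        print(len(load_items()), "items loaded")
```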

Raising the bar with the Python SDK

With the Python SDK, starting from this basic example, we can add more and more features to fully benefit from the Apify environment and its integrations.

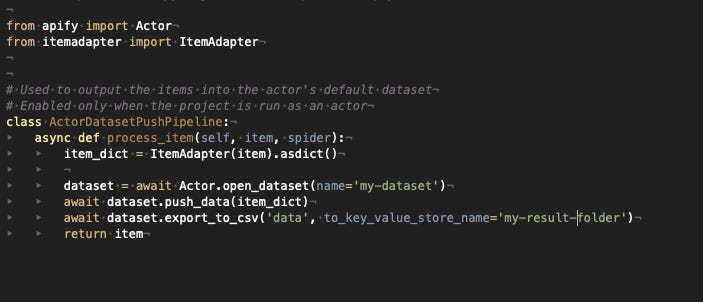

We can ask the actor to create a dataset file, in CSV format, containing all the items instead of a bunch of single files.

With some simple modifications to the pipelines.py file, we can make this happen.

In the directory storage/key_value_stores/my-result-folder/ I’ll find my data.csv file containing the results of the scraper.
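To give an idea of the kind of change involved, here is a simplified sketch of a pipeline that collects the items and renders them as one CSV document. It’s not my actual pipelines.py: the class name and the field list are assumptions, and the final step of saving the string into the key-value store through the SDK is deliberately left out, so check the SDK documentation for the exact call.

```python
import csv
import io


class CsvCollectorPipeline:
    """Collects scraped items in memory and renders them as a single CSV."""

    # Assumed data model: the three fields gathered in this example.
    fieldnames = ["name", "price", "url"]

    def open_spider(self, spider):
        self.rows = []

    def process_item(self, item, spider):
        # Keep only the known fields, filling the missing ones with "".
        self.rows.append({key: item.get(key, "") for key in self.fieldnames})
        return item

    def to_csv(self):
        buffer = io.StringIO()
        writer = csv.DictWriter(buffer, fieldnames=self.fieldnames, lineterminator="\n")
        writer.writeheader()
        writer.writerows(self.rows)
        # In the Actor, this string would be stored as data.csv when the spider closes.
        return buffer.getvalue()


if __name__ == "__main__":
    pipeline = CsvCollectorPipeline()
    pipeline.open_spider(spider=None)
    pipeline.process_item({"name": "A", "price": "10", "url": "https://example.com/a"}, spider=None)
    print(pipeline.to_csv())
```

Buffering everything in memory is fine for a small exercise like this one; for a large crawl, writing rows incrementally would be a safer choice.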

Of course, the advanced features don’t end here: there’s proxy management (using Apify proxies or your own), the capability of making multiple Actors interact with each other, webhooks, and so on.

For all the documentation needed, you have the official page of the Python SDK.

Monitoring your actor’s executions

Now that we have a working actor, we want to run it every day and monitor its executions using a dashboard.

As we said, Apify offers a monitoring solution and we’re going to see now how easy it is to deploy actors on the cloud.

First of all, you need an Apify account and an active personal API token. This is created by default when you open an account and you can find it in the account settings, under the integration tab.

Once you have retrieved the token, you only need to log in to the CLI using the command

apify login

and paste your token when prompted.

All you need to do now is launch, from the project’s folder, the command

apify push

and you will see your actor in your Apify dashboard.

From this interface, you can launch your scraper, edit its code, schedule it, and get the results and logs in just a few clicks.

Monetizing your web scraping knowledge

One of the distinctive aspects of Apify is the chance to make some money from the actors you develop.

Once your actor is deployed on the platform, you can ask to publish it on the Apify store, where it can be found by people who want the data without the web scraping hassle and who pay for its usage.

Final remarks

We have seen together a general introduction to the Apify environment. As mentioned at the beginning, I’m completely new to it, so this is a high-level overview based on what I could study in the past weeks.

If I had to pick the most interesting aspects, I would say:

The CLI is very straightforward and the templates created, at least for my Scrapy spider, were very helpful

The dashboard is clear and I appreciate the chance to see the cost per execution of my scrapers

The Actor marketplace is a great idea: it gives web scraping professionals the chance to create an additional income

The aspects I have mixed feelings about are instead:

The total cost of running a large-scale web project on Apify. Depending on the execution times of the scrapers and the resources allocated, the price could be a problem in these projects (but I’m sure there are special prices for large customers that mitigate this issue).

The overhead of code needed to integrate a working scraper with the actors can be painful, but I understand that integrating such a great number of tools in one architecture isn’t easy. In any case, the documentation and the Apify community are there to help you.
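To reason about the first point, a back-of-the-envelope estimate can help. The sketch below assumes that usage is billed in compute units, with one unit taken as 1 GB of memory used for one hour; the function name and the price per unit are placeholders of mine, not Apify’s actual pricing, so plug in the figures of your own plan.

```python
def estimate_monthly_cost(runs_per_month, minutes_per_run, memory_mb, price_per_cu):
    """Rough monthly cost, assuming 1 compute unit = 1 GB of memory for 1 hour."""
    cu_per_run = (memory_mb / 1024) * (minutes_per_run / 60)
    return runs_per_month * cu_per_run * price_per_cu


if __name__ == "__main__":
    # Illustrative numbers only: 30 runs of 30 minutes each with 2048 MB,
    # at a placeholder price of 0.25 per compute unit.
    print(estimate_monthly_cost(30, 30, 2048, 0.25))  # prints 7.5
```

Doubling either the memory or the run time doubles the bill, which is why long-running scrapers on large websites are the case to watch.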

Overall the experience has been great and I suggest giving it a try, especially if you’re already proficient in web scraping and want to make some more money from your knowledge.
