Fine-Tuning LLMs for Industry-Specific Scraping

Beyond General AI: A Deep Dive into Fine-Tuning Language Models for Web Data

Recently, OpenAI released ChatGPT 5.0. So let me guess, the old question is back: “How do I use LLMs in my scraping workflows?”

I know, I know. The hype out there is very high, although, given the latest results, it may be slowing down. Still, as humans, we love trying to automate things so that automation works for us and makes us rich overnight. This is how we picture LLMs, right?! Something that does everything for us so that we can sit on a Caribbean beach, sipping a Mojito.

Yeah, well… despite the hype, I’m pretty sure it won’t end like that. So, in this article, I’ll discuss:

How you can use LLMs in your scraping activities to gain the best results for you, as per the current state of the technology.

What is fine-tuning in the context of LLMs, and why you may want to do so.

Why you should fine-tune LLMs for industry-specific scraping.

How you can actually fine-tune LLMs.

Let’s dive into it!

Discussing The Use of LLMs in Web Scraping

Before discussing LLMs’ fine-tuning and its potential utility in the web scraping industry, I want to bring some clarity out of the fog that exists around certain web scraping concepts and processes.

The Process of Web Scraping: How It Works And Where to Integrate LLMs

When talking about web scraping, we refer to the process of extracting data from the web. However, I wonder: have you ever thought of how the whole process works? When you want to retrieve data from a website, the steps you have to take are the following:

Extract the HTML from the DOM.

Parse the HTML.

Save the parsed data (into a CSV file, a database, or any storage you use).

Each of these steps has its challenges to overcome. For example, when you extract the HTML, you are battling against anti-bots. Anyway, you must accomplish all three steps to complete the entire web scraping process.
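To make the three steps concrete, here is a minimal sketch that uses only Python's standard library. The sample HTML, the class names, and the `ListingParser` helper are all made up for illustration, and the extraction step is replaced by a hard-coded string so the example runs offline. A real scraper would fetch the page with an HTTP client or a headless browser.

```python
import csv
import io
from html.parser import HTMLParser

# Step 1 (extract): in a real scraper this HTML would come from an HTTP
# client or a headless browser; a hard-coded sample keeps the sketch offline.
SAMPLE_HTML = """
<ul>
  <li class="listing"><span class="title">Loft</span><span class="price">$1,200</span></li>
  <li class="listing"><span class="title">Studio</span><span class="price">$900</span></li>
</ul>
"""


class ListingParser(HTMLParser):
    """Step 2 (parse): collect the text of the title and price spans."""

    FIELDS = {"title", "price"}

    def __init__(self):
        super().__init__()
        self.rows = []       # one dict per listing
        self._field = None   # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "listing":
            self.rows.append({})
        elif tag == "span" and css_class in self.FIELDS:
            self._field = css_class

    def handle_data(self, data):
        if self._field and self.rows:
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def parse_listings(html):
    parser = ListingParser()
    parser.feed(html)
    return parser.rows


def to_csv(rows):
    """Step 3 (save): serialize the parsed rows as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = parse_listings(SAMPLE_HTML)
print(to_csv(rows))
```

Notice how much of the code is tied to this specific page layout. That coupling is exactly what makes the parsing step a natural candidate for LLM support.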

The reason I wanted to point out how the process works is simple: as of today, using LLMs in web scraping mainly concerns the parsing step. In a recent post called “Beyond the DOM: A Practical Guide to Web Data Extraction with LLMs and GPT Vision,” I discussed ways you can use LLMs in the extraction phase. However, at the time of this writing, those are more or less the only options available for that phase.

So, if you were thinking: “Oh, there’s AI out there. Let’s just get some LLM, maybe fine-tune it, and web scraping is easily done”, I have bad news for you. I can easily say that, as of today, LLMs are not replacing traditional scrapers. Sorry for interrupting the hype, but someone has to do it sometimes.

So, before going into fine-tuning, let’s briefly discuss why data parsing is important in the web scraping process.

The Importance of Data Parsing

In the context of web scraping, data parsing refers to the process of transforming a sequence of unstructured data (raw HTML) into structured data that is easier to read, understand, and use.

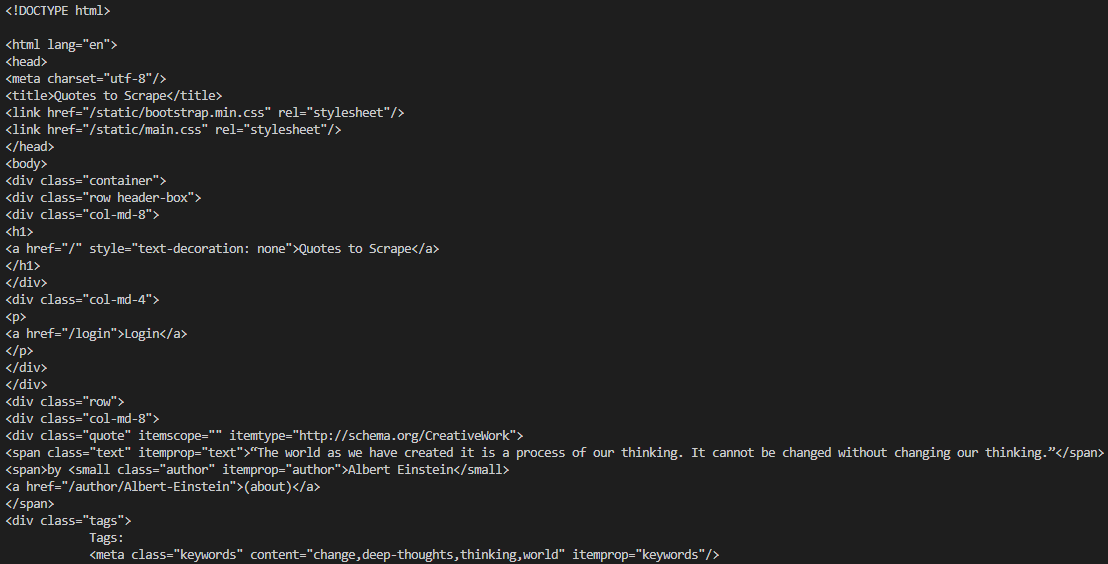

In fact, if you just scrape the (raw) HTML from a webpage without parsing it, this is what you will receive:

As the image shows, the result is neither understandable nor usable. This is where parsing comes in: you target specific parts of the HTML structure, extract the data you need, and transform it into something readable and usable. For example, you might iterate over all the elements that share a given class. In other words, the parser cleans and formats the data and arranges it in a structured format that contains the information you need. After that, you can export and save the parsed data into JSON, CSV, or any other format.

And here is the part where, at the time of this writing, you can get the best out of integrating LLMs into the process: just before saving the data. In fact, in the data parsing process, LLMs can be used to:

Convert raw HTML into a structured format.

Clean the data.

Change or manipulate the cleaned data.
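As a rough sketch of the first use in the list above, the snippet below asks an LLM to turn HTML into JSON and then validates the answer before trusting it. The `complete` argument is a hypothetical callable (prompt in, text out) that stands in for whichever LLM client you use, and `fake_llm` is a stub so the example runs without any API. The prompt wording and the required keys are assumptions for illustration only.

```python
import json

REQUIRED_KEYS = {"title", "price"}

PROMPT_TEMPLATE = (
    "Extract every listing from the HTML below. "
    "Reply with a JSON array of objects with the keys "
    '"title" and "price" and nothing else.\n\nHTML:\n{html}'
)


def parse_with_llm(html, complete):
    """Ask an LLM to structure the HTML, then validate what comes back.

    `complete` is a hypothetical callable (prompt -> str) wrapping your
    LLM client of choice; it is not part of any specific library.
    """
    raw = complete(PROMPT_TEMPLATE.format(html=html))
    try:
        records = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"LLM did not return valid JSON: {exc}") from exc
    if not isinstance(records, list):
        raise ValueError("Expected a JSON array of listings")
    for record in records:
        if not isinstance(record, dict):
            raise ValueError("Each listing must be a JSON object")
        missing = REQUIRED_KEYS - set(record)
        if missing:
            raise ValueError(f"Listing is missing keys: {sorted(missing)}")
    return records


def fake_llm(prompt):
    # Stand-in for a real model so the sketch runs offline.
    return '[{"title": "Loft", "price": "$1,200"}]'


print(parse_with_llm("<li>Loft $1,200</li>", fake_llm))
```

The validation step matters because nothing guarantees that a model returns well-formed output, so the structured data should be checked before it is saved.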

However, pre-trained LLMs are general-purpose models and can lack domain-specific skills. So, let’s move towards the heart of this article: fine-tuning LLMs.

What Is Fine-Tuning and Why Do It

Let’s start with the definition. In “AI Engineering: Building Applications with Foundation Models”, Chip Huyen defines fine-tuning as “the process of adapting a model to a specific task by further training the whole model or part of the model. Fine-tuning can enhance various aspects of a model. It can improve the model’s domain-specific capabilities, such as coding or medical question answering, and can also strengthen its safety”.

What’s important to underline here is that the primary goal of fine-tuning is not to expand a model’s knowledge of a particular field or industry. You can achieve that too, but it’s a secondary goal. When you only need to give a model access to additional knowledge, the usual technique is RAG (Retrieval-Augmented Generation), which retrieves relevant documents at query time instead of changing the model’s parameters.

So, again using Huyen’s words, fine-tuning “is most used to improve the model’s instruction-following ability, particularly to ensure it adheres to specific output styles and formats”.

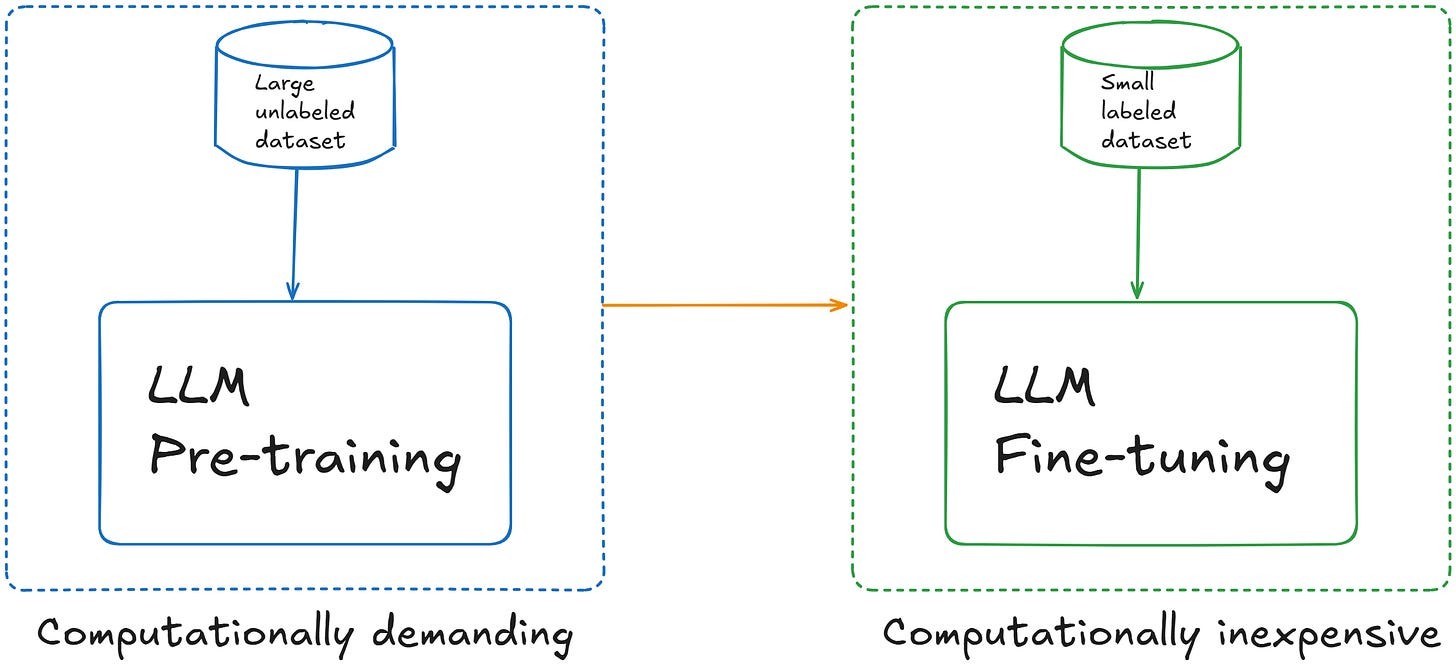

So, to summarize, in the context of LLMs, fine-tuning means modifying a pre-trained model’s parameters (aka, re-training the model) so that it gets new abilities:

Note that, while the image above says that fine-tuning is “computationally inexpensive”, this is only true compared to pre-training. In fact, fine-tuning can be very expensive, depending on the model you want to fine-tune (particularly, its total number of parameters) and on the dataset you have. Also, much of the actual expense comes from building a labeled dataset you can actually use for fine-tuning. This is why web scraping is often used to build the fine-tuning dataset.

There are several ways to perform fine-tuning, some more computationally expensive than others. A classical approach that fits our needs in web scraping is called “instruction fine-tuning”. It (re)trains the LLM on examples that demonstrate how the model should respond to a query. In other words, you feed the LLM pairs of the form (prompt + text; example response). This way, given a prompt, the model learns the response you’d expect.

For example, suppose you want to fine-tune a model to improve its summarization skills. In that case, you should create a dataset of examples that has the instruction to summarize a text plus the text itself, followed by its summary. This way, the model learns precisely how to behave when it receives new, unseen summarization prompts with related text to summarize.
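The (prompt + text; summary) pairs described above could be stored as one JSON object per line (JSONL). The sketch below uses a chat-style "messages" layout, which is the shape some hosted fine-tuning services accept; check the exact format your provider or training library expects. The instruction text and the two examples are invented for illustration.

```python
import json

INSTRUCTION = "Summarize the following text in one sentence."

# Hypothetical examples; a real dataset needs many more pairs.
examples = [
    {
        "text": "The apartment has two bedrooms, a balcony, and is five "
                "minutes from the metro. Rent includes heating.",
        "summary": "Two-bedroom apartment with balcony near the metro, heating included.",
    },
    {
        "text": "This detached house sits on a large lot with a garage "
                "and was renovated last year.",
        "summary": "Recently renovated detached house with garage on a large lot.",
    },
]


def to_chat_record(example):
    """Turn one (text, summary) pair into a prompt/response training record."""
    return {
        "messages": [
            {"role": "user", "content": f"{INSTRUCTION}\n\n{example['text']}"},
            {"role": "assistant", "content": example["summary"]},
        ]
    }


def to_jsonl(items):
    """Serialize all records, one JSON object per line."""
    return "\n".join(
        json.dumps(to_chat_record(item), ensure_ascii=False) for item in items
    )


print(to_jsonl(examples))
```

Each line carries both the instruction and the expected answer, which is what lets the model learn the response style you want.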

As is understandable, this approach is time-consuming for a simple reason: you need several pairs of (prompt + text; summary) examples before the model can reach good performance. However, spending time and money doesn’t mean anything if you don’t know your industry well. Every process must have an ROI, and you have to understand if, in your industry, it’s worth the effort (and, in web scraping, it could really be the case!).

Why Fine-tune LLMs for Industry-Specific Scraping

After all this discussion, I think you have gained an understanding of how to use LLMs in web scraping and why you might fine-tune them. So here’s the big question: Does it make any sense to fine-tune LLMs for scraping industry-specific data?

The answer can be subdivided into two:

On the side of technicalities: Yes, it makes sense.

On the side of money and time spent: It depends on the ROI you expect to gain.

I’ll leave it to you to estimate the ROI of fine-tuning LLMs for your specific applications. So, let’s discuss the technicalities.

Reasons And Examples of LLMs’ Fine-tuning for Industry-Specific Scraping

First, you may think: “Why in the world would I fine-tune an LLM if I can extract the raw HTML, paste it all into an LLM, and get my results?” Well, it’s not that simple, for three main reasons:

Any LLM has limitations on the number of tokens it can get as input. So, if your HTML is very long, it can’t ingest it all.

After parsing data, a common practice is to save the data into JSON. And here’s the point: LLMs are generally not so good at structuring data into JSON, particularly when there’s a lot of data. In fact, there are also specialized tools available that return data in JSON.

LLMs aren’t great at understanding raw HTML. Here you have two possibilities (if the HTML is short enough):

You can convert the HTML to Markdown, which is a format LLMs understand way better (for example, you could use a service like Code Beautify).

You could fine-tune an LLM to understand raw HTML, and here you are with a first use case! However, this will still be limited by the number of tokens the model can ingest. So I wouldn’t suggest it unless you only ever need to scrape a reasonably sized part of the HTML for each website.
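To get a feel for the token limit mentioned above, here is a small sketch that strips tags, scripts, and styles from the HTML, estimates the token count, and splits the text into chunks. The figure of four characters per token is only a rough rule of thumb, not a real tokenizer, and plain-text extraction is used here as a simpler stand-in for a full HTML-to-Markdown conversion. All names are illustrative.

```python
from html.parser import HTMLParser

CHARS_PER_TOKEN = 4  # rough rule of thumb (assumption), not a real tokenizer


class TextExtractor(HTMLParser):
    """Keep visible text and drop tags, scripts, and styles."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip_depth:
            self.parts.append(text)


def html_to_text(html):
    extractor = TextExtractor()
    extractor.feed(html)
    return "\n".join(extractor.parts)


def estimate_tokens(text):
    return len(text) // CHARS_PER_TOKEN + 1


def chunk_text(text, max_tokens):
    """Split on line boundaries, starting a new chunk when the budget is hit.

    A single line that is larger than the budget still becomes its own chunk.
    """
    chunks, current = [], ""
    for line in text.splitlines():
        candidate = f"{current}\n{line}" if current else line
        if current and estimate_tokens(candidate) > max_tokens:
            chunks.append(current)
            current = line
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


page = (
    "<html><head><style>p{color:red}</style><script>var x = 1;</script></head>"
    "<body><h1>Loft</h1><p>2 beds</p></body></html>"
)
text = html_to_text(page)
print(text, estimate_tokens(page), estimate_tokens(text))
```

Even on this tiny page, most of the characters are markup rather than data, which is why reducing the HTML before sending it to a model is usually worth the effort.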

So, considering the limitations that pre-trained LLMs show even on the generic parts of the parsing process in web scraping, it actually makes sense to fine-tune an LLM for industry-specific cases.

Let’s consider a specific example. Say you want to scrape data from the following Zillow page:

In this case, you have several difficulties while scraping it:

The structure of the HTML is not simple to scrape and parse. The data in the red rectangles shows that it has (at least) two tables. Plus, the data in the top bar is displayed only when you move the mouse down: one more complication (yes: JavaScript management!).

You may want to feed an LLM with the parsed data to make it perform some analysis. In this case, the LLM may not know what “5 bds” or “3ba” actually means. To reduce hallucinations, you need to train the model on this information. Also, the fact that the property has two acres means that it is sold with land. But is that land a garden or undeveloped terrain? This is something an LLM needs to understand if you prompt it with something like “Extract all the houses with a garden” and want it to return the correct ones.
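One possible way to teach a model this shorthand is to generate training pairs in which the abbreviated summary is the input and an explicit JSON object is the expected output. The sketch below builds such pairs with a regular expression. The vocabulary in `UNIT_FIELDS`, the field names, and the prompt wording are all assumptions for illustration, not Zillow's actual schema.

```python
import json
import re

# Maps listing shorthand to explicit field names (assumed vocabulary).
UNIT_FIELDS = {
    "bd": "bedrooms",
    "bds": "bedrooms",
    "ba": "bathrooms",
    "sqft": "square_feet",
    "acre": "lot_acres",
    "acres": "lot_acres",
}

# A number (with optional thousands separators and decimals) followed by a unit.
PATTERN = re.compile(
    r"(\d[\d,]*(?:\.\d+)?)\s*(bds|bd|ba|sqft|acres|acre)\b", re.IGNORECASE
)


def expand_listing_summary(summary):
    """Turn text like '5 bds 3ba' into explicit, named fields."""
    fields = {}
    for number, unit in PATTERN.findall(summary):
        value = float(number.replace(",", ""))
        fields[UNIT_FIELDS[unit.lower()]] = int(value) if value.is_integer() else value
    return fields


def make_training_pair(summary):
    """Build one (prompt, response) example for instruction fine-tuning."""
    return {
        "prompt": f"Convert this listing summary to JSON: {summary}",
        "response": json.dumps(expand_listing_summary(summary), sort_keys=True),
    }


print(make_training_pair("5 bds 3ba 2,400 sqft 2 acres"))
```

A rule like this only covers the mechanical part. Whether the two acres are a garden or undeveloped land cannot be read from the shorthand, so those labels would still have to come from the listing description or from manual annotation.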

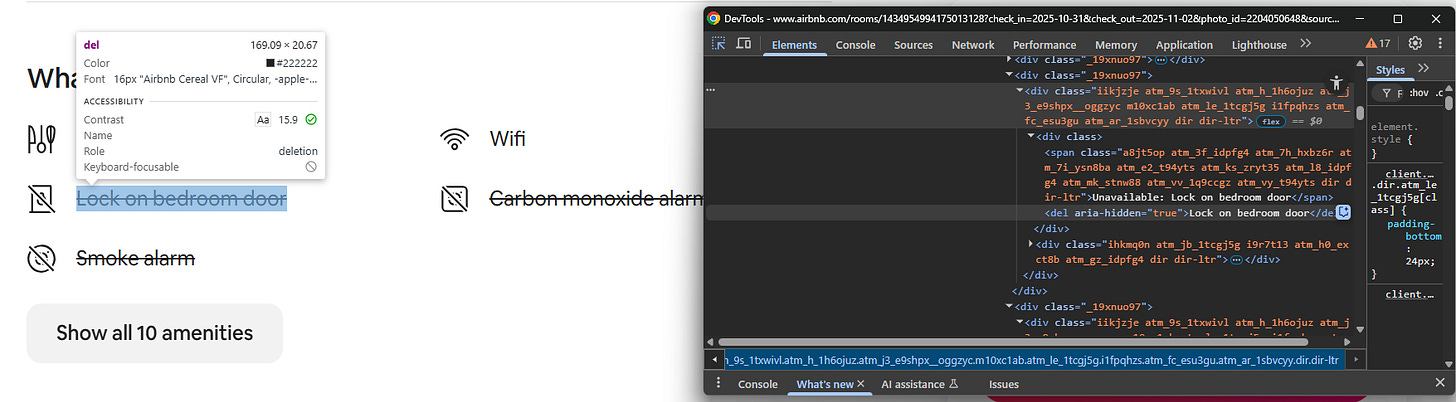

Let’s make another example in the real estate industry. Suppose you want to scrape data from Airbnb and consider the following:

The question is: How can a model understand when a host doesn’t provide a particular “amenity”? Well, one solution is to fine-tune a model so that, when it finds the aria-hidden attribute in the HTML structure, it understands that the amenity is not available from the host.

So, as you can see, specific industries can have a lot of nuances. Fine-tuning a model to learn them therefore makes sense, at least on the technical side.

Methods to Fine-Tune LLMs

So, I said that industry-specific fine-tuning can be beneficial in web scraping. But how do you actually do so? Well, you have mainly two major ways of doing it:

Writing custom code: This requires choosing an LLM and writing all the needed code for fine-tuning it. Also, in this case, you need to manage all the infrastructure. This means that, unless you use a “small” LLM that can be fine-tuned on your local machine, you need to set up a cloud environment that allows you to use multiple GPUs. To understand all the nuances of this process, you can read how I fine-tuned Llama 4 using scraped data. Note that this process requires highly specialized AI skills.

Using an automation tool: In this case, you can take advantage of automation tools that can integrate with the LLM of your choice, whether it is ChatGPT, Gemini, or whatever you are using. Commonly used solutions are n8n, Dify, and Zapier, but there are others on the market. The process in this case is easier on the technical side: you connect the nodes of the automation tool via drag and drop, you feed the LLM with the fine-tuning dataset, and you make a POST request to the LLM’s API, which takes care of fine-tuning. No coding skills are required for fine-tuning. However, all that glitters is not gold. So, if you want to know more about how to do so, you can read my article on how I fine-tuned ChatGPT using n8n.
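For reference, the POST request that such a workflow sends can be sketched in a few lines of Python. The endpoint and the two payload fields below follow OpenAI's fine-tuning jobs API as I understand it, so verify them against the current documentation before relying on them. The API key, the file ID, and the model name are placeholders, and the request is only built here, not sent.

```python
import json
import urllib.request

# Assumed endpoint of OpenAI's fine-tuning jobs API; check the current docs.
FINE_TUNING_URL = "https://api.openai.com/v1/fine_tuning/jobs"


def build_fine_tuning_request(api_key, training_file_id, base_model):
    """Build (but do not send) the POST request that starts a fine-tuning job."""
    payload = {"training_file": training_file_id, "model": base_model}
    return urllib.request.Request(
        FINE_TUNING_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values: the dataset must already be uploaded to the provider,
# which returns the file ID used here.
request = build_fine_tuning_request(
    "sk-placeholder", "file-placeholder", "base-model-placeholder"
)
print(request.get_method(), request.full_url)
# To actually start the job: urllib.request.urlopen(request)
```

An automation tool wraps this same call in a visual node, which is why the skills required are lower while the underlying steps stay the same.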

Below is the comparison summary of those two fine-tuning approaches, based on my experience:

Coding the fine-tuning process:

Technical skills required: Very high.

Time required: About a full working day.

Initial cost: About $20-25.

Flexibility: Low. Every model you fine-tune needs custom code.

Fine-tuning via automation tools:

Technical skills required: Low. Basic IT knowledge is enough.

Time required: About half a working day.

Initial cost: About $25-30.

Flexibility: Very high. Every time you need to modify something, you just need to rerun the workflow without modifying the code (as you don’t need to write code!).

In the end, they are comparable. However, using automation tools to fine-tune LLMs is more suitable for teams that don’t have deep AI knowledge.

Conclusion

In conclusion, in this article, I covered fine-tuning LLMs. I discussed where, as of today, LLMs give their best results in the scraping process, whether it makes sense to fine-tune LLMs for industry-specific scraping, and how to perform fine-tuning.

Apart from the technicalities that I covered, the most important thing to always consider and validate is ROI. As you’ve discovered, the initial cost for fine-tuning is affordable. And so is the expected time required, regardless of the method you use. However, what you have to carefully evaluate is the time (and money) needed to extract and prepare the dataset for fine-tuning the LLM. You really have to understand if retrieving and preparing the fine-tuning dataset will give you an ROI that makes sense in the long run.

So, time for a discussion: Are you fine-tuning LLMs to improve your scraping activities? Let me know in the comments!