Are LLMs capable of replacing traditional scrapers?

What I’ve learned so far about scraping with LLMs

Nowadays, it seems you can’t hear the word scraping without also hearing AI in the same sentence. While this is probably true for many other subjects, the web scraping industry in particular is trying to figure out whether LLMs can solve its age-old problem: scalability.

I’ve just returned from the PragueCrawl event, organized by Apify and Massive: great audience, perfect organization, and engaging talks, definitely worth attending. Of course, here too the main topic was AI in scraping, and I had the opportunity to add my two cents from the stage.

This post is an extended version of my talk, containing concepts already expressed in previous posts, along with some additions and experiments from the past weeks.

Before proceeding, let me thank NetNut, the platinum partner of the month. They have prepared a juicy offer for you: up to 1 TB of web unblocker for free.

Traditional web scraping and its limits

When I talk about traditional web scraping, I’m referring to all the tools and techniques used before the advent of LLMs (and still used nowadays).

We can split scraping tasks into two areas: bypassing anti-bot protections to retrieve the HTML, and parsing the HTML.

The amount of time each step takes, as you can imagine, varies from website to website, and it can be summarized with this chart.

If a website has no anti-bot protection, we’ll spend all our time finding the selectors in the code to scrape all the fields and the links to other in-scope pages.

The more complex the anti-bot protection becomes, the more time we spend bypassing it, until this becomes the predominant task in cases of websites that are hard to scrape.

Unless we’re working on a few edge cases, for every new website we need to scrape, we have to create a dedicated web scraper to add to our codebase.

Every time we add a new scraper to the codebase, we’re adding a new piece of software that, sooner or later, is likely to break and need fixing. These chances add up across all the other scrapers in our codebase, so the more scrapers we maintain, the more people we need to maintain them. That’s precisely the scalability issue mentioned earlier.
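To put rough numbers on this compounding effect, here is a minimal sketch. It assumes, purely for illustration, that every scraper breaks independently and with the same probability in a given month; `p_break` and the fleet sizes are hypothetical values, not measurements.

```python
def expected_breakages(n_scrapers: int, p_break: float) -> float:
    """Expected number of scrapers that need a fix in one period."""
    return n_scrapers * p_break


def prob_at_least_one_breaks(n_scrapers: int, p_break: float) -> float:
    """Chance that at least one scraper breaks, assuming independence."""
    return 1 - (1 - p_break) ** n_scrapers


p_break = 0.05  # hypothetical: 5% chance that a given scraper breaks in a month
for n in (10, 100, 1000):
    print(n, expected_breakages(n, p_break), round(prob_at_least_one_breaks(n, p_break), 3))
```

Under these assumptions the expected maintenance workload grows linearly with the number of scrapers, which is why the team has to grow with the codebase.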

Thanks to the gold partners of the month: Smartproxy, IPRoyal, Oxylabs, Massive, Rayobyte, Scrapeless, SOAX, ScraperAPI, and Syphoon. They’re offering great deals to the community. Have a look yourself.

What LLMs promise

With the advent of LLMs, the first and most obvious use case the scraping community had in mind was to use them for the HTML parsing layer when creating a scraper.

The dream application, in a world where the anti-bot bypass is done or not needed, would be self-healing scrapers that automatically map the data to the desired output structure.

We soon understood that LLMs are not a silver bullet for all web scraping cases, for three main reasons:

They’re inaccurate and unreliable

They’re expensive

They’re slow

Let’s look at each of these reasons in detail.

LLMs are inaccurate and unreliable

We all know that LLMs can hallucinate, even in cases where they just need to extract information from HTML code.

The web has a long tail of website layouts and structures that can trick LLMs. A typical example: given an e-commerce product page, the model returns data from a suggested product instead of the main one.

And even if we get the right data from a page, if we send the same prompt with the same HTML code 1,000 times, we can’t be 100% sure that we’ll get exactly the same response every time.

This is because, at the end of the day, LLMs guess the most probable next word to use in the response, with a pinch of randomness. And the most probable answer might not be the correct one.
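The "pinch of randomness" can be illustrated with a toy model of next-token sampling. This is only a sketch: the candidate tokens and their scores are made up, and real LLMs work over a vocabulary of many thousands of tokens with additional sampling settings.

```python
import math
import random


def sample_next_token(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick the next token from raw scores; temperature 0 always takes the top one."""
    if temperature <= 0:
        return max(logits, key=logits.get)
    # Softmax with temperature: higher values flatten the distribution.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())
    weights = {tok: math.exp(s - top) for tok, s in scaled.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]


# Hypothetical scores for the token that follows "price": the main product's
# price is the most likely candidate, a suggested product's price is close behind.
logits = {"129.90": 2.0, "89.90": 1.5, "N/A": 0.2}
rng = random.Random(42)
greedy = {sample_next_token(logits, 0.0, rng) for _ in range(1000)}
sampled = {sample_next_token(logits, 1.0, rng) for _ in range(1000)}
print(greedy)   # a single value: always the top-scoring token
print(sampled)  # typically several different values across 1000 runs
```

In this toy setting, the wrong price is simply a slightly less probable token, so some share of the sampled runs return it even though prompt and input never changed.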

LLMs are expensive

When running a standard scraper, parsing a single page to extract data is essentially free. This is no longer true if we’re using LLMs: every time we pass HTML code to a prompt for analysis, it is split into tokens, together with the rest of the prompt. Every commercial LLM is priced per token, for both input and output, and the bill can get expensive.

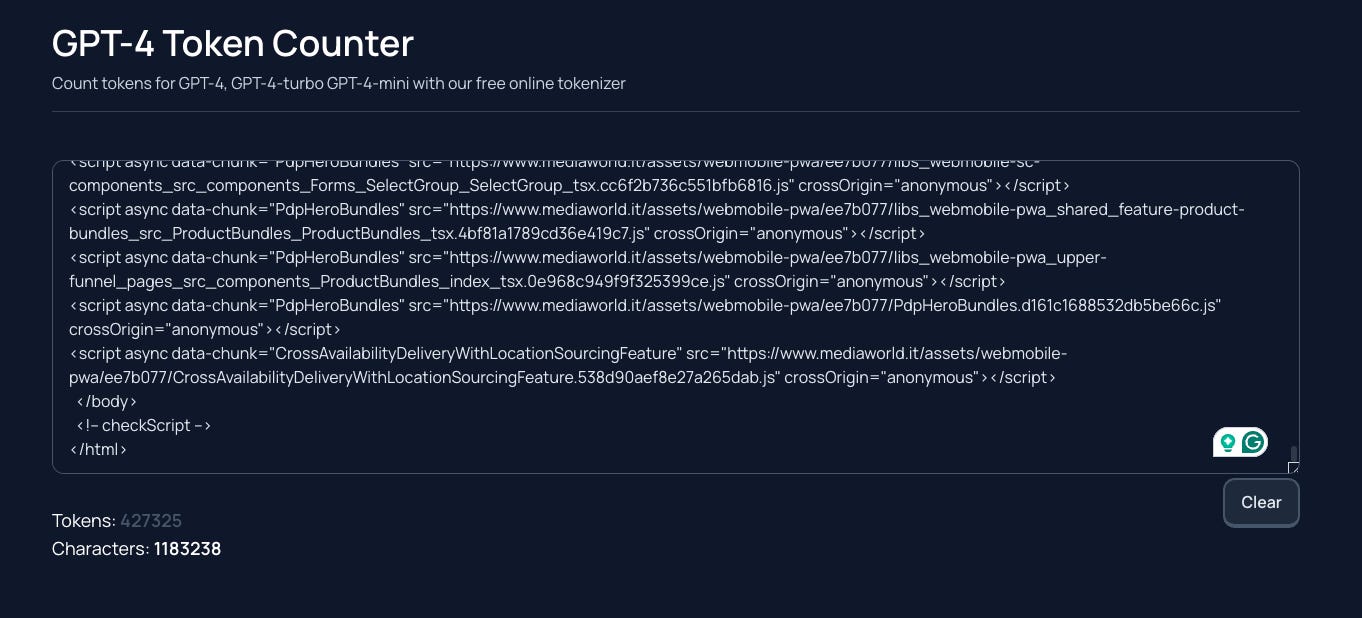

This is a standard web page from a well-known Italian e-commerce website. If we pass its HTML code to a tokenizer, the function that splits the text into tokens, we’ll discover that just reading this page will cost us more than 400k tokens.

To understand what this means in dollars, we can plug this figure into this online pricing comparator for just one API call.

You can see that prices range from a few cents to $60. In any case, this is too much if we want to scrape millions of pages.
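The arithmetic behind such a comparator is simple enough to sketch. The per-million-token prices and the 500 output tokens below are hypothetical values picked to span a wide range; they are not taken from any provider's price list.

```python
def call_cost_usd(input_tokens: int, output_tokens: int,
                  usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Cost of one API call when prices are quoted per million tokens."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


# Hypothetical price points for a 400k-token page and a short structured answer.
cheap = call_cost_usd(400_000, 500, usd_per_m_input=0.10, usd_per_m_output=0.40)
pricey = call_cost_usd(400_000, 500, usd_per_m_input=150.0, usd_per_m_output=600.0)
print(f"one page: ${cheap:.2f} to ${pricey:.2f}")
print(f"one million pages at the cheap rate: ${cheap * 1_000_000:,.0f}")
```

Even at the cheap end, multiplying a few cents by millions of pages gives a bill in the tens of thousands of dollars, with the input tokens dominating the cost.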

LLMs are slow

When chatting with ChatGPT, we can see that there is a delay of a few seconds between the request and the response. This is not an issue in a conversational UX, but it certainly is when scraping millions of pages.

This can be mitigated by calling an API from several threads, but all the LLM providers rate-limit the number of calls we can make in a given timeframe. This means that there is a hard limit on the scalability of any scraping project based on LLMs.
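A back-of-the-envelope sketch of that hard limit: the run time is bounded below by whichever is slower, the latency spread over the concurrent workers or the provider's rate limit. The latency, worker counts, and rate limit used here are hypothetical figures.

```python
def min_hours(n_pages: int, latency_s: float, concurrency: int, max_calls_per_min: int) -> float:
    """Lower bound on wall-clock hours; the slower of the two constraints wins."""
    latency_bound_s = n_pages * latency_s / concurrency
    rate_limit_bound_s = n_pages / max_calls_per_min * 60
    return max(latency_bound_s, rate_limit_bound_s) / 3600


# Hypothetical figures: 5 seconds per call, 500 calls per minute allowed.
for workers in (1, 50, 500):
    print(workers, round(min_hours(1_000_000, 5.0, workers, 500), 1))
```

With these numbers, going from 50 to 500 workers changes nothing: past a certain point the rate limit, not the latency, decides how long a million pages take.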

Best use cases for LLMs in scraping

Despite all these issues, with a proper tool and in certain circumstances, LLMs can help improve our productivity in web scraping projects dramatically.

In this small experiment, available to free readers on GitHub at this link, I’ve created a scenario where we need to “scale horizontally,” which means scraping a large number of websites with just a few URLs each.

In traditional scraping, this is the worst use case: we would have to create a separate scraper for each website, just to scrape a few URLs.

In our case, I’ve selected 100 URLs from 33 different e-commerce websites and asked the ScrapeGraphAI API to map the data for me, using this output schema:

```python
from pydantic import BaseModel, Field


class PDPEcommerceSchema(BaseModel):
    """Fields to extract from a single e-commerce product page."""

    ProductCode: str = Field(description="Unique code that describes the product")
    ProductFullPrice: float = Field(description="Full price of the product, before any discount applied. If there's no discount applied, it's the final product price")
    ProductFinalPrice: float = Field(description="The final sale price of the product, after discounts.")
    CurrencyCode: str = Field(description="ISO3 currency code")
    BrandName: str = Field(description="The name of the product's manufacturer")
    ProductUrl: str = Field(description="URL of the product page")
    MainImageURL: str = Field(description="URL of the main image of the product")
    ProductMainCategory: str = Field(description="The main category of the product, usually the first item of the breadcrumb")
    ProductSubcategory: str = Field(description="The product subcategory, usually the second level of the breadcrumb")
```

The ScrapeGraphAI API is the commercial implementation of the famous open-source project that enables you to use any LLM available to scrape data from a web page.

In the API, given an output structure and a URL, the service retrieves the HTML for you, preprocesses it, and then passes it to an LLM to return the parsed data.
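To give an idea of why a preprocessing step matters, here is a generic sketch that strips scripts and styles before the page reaches a model. It is not ScrapeGraphAI's actual preprocessing, which is not described here; the `VisibleTextExtractor` class and the `shrink_html` helper are hypothetical names, and only Python's standard library is used.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text nodes, skipping tags that carry no data for the reader."""

    SKIPPED_TAGS = {"script", "style", "noscript", "svg"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def shrink_html(html: str) -> str:
    """Return only the visible text of a page, one chunk per line."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)


page = ("<html><head><style>p{color:red}</style><script>var a=1;</script></head>"
        "<body><h1>Red shoes</h1><p>129.90 EUR</p></body></html>")
print(shrink_html(page))
```

A real preprocessor would have to be more careful than this: the sketch throws away attributes such as `href` and `src`, which fields like `ProductUrl` and `MainImageURL` depend on.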

The results of my experiment were quite good:

72% success rate, where the output fields were populated correctly

13% errors in fetching the content. They're not related to LLMs but mainly to anti-bots.

15% HTML parsing errors, concentrated on five websites (no price retrieved or, in just one case, wrong prices)

The whole scraping run cost me around 4 USD (1000 tokens of the paid starter plan)

The process took 20 minutes to finish, simply because I requested the URLs sequentially, without any form of parallelism. It could be much faster, on the order of 3-4 minutes, if I pushed it to the rate limit of my plan.
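Twenty minutes for 100 URLs is roughly 12 seconds per URL, so about five concurrent requests would bring the run down to around four minutes, provided the plan's rate limit allows it. A minimal sketch of that parallelism follows; `scrape_one` is a hypothetical stand-in for the real API call, and `max_workers=5` is an assumption rather than the actual limit of any plan.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def scrape_one(url: str) -> dict:
    """Hypothetical stand-in for one blocking API call; replace with the real request."""
    time.sleep(0.01)  # simulated network latency
    return {"ProductUrl": url}


def scrape_all(urls: list[str], max_workers: int = 5) -> list[dict]:
    """Run the calls concurrently; results come back in the same order as `urls`."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(scrape_one, urls))


urls = [f"https://example.com/product/{i}" for i in range(100)]
results = scrape_all(urls)
print(len(results))
```

Threads are enough here because the work is I/O-bound: each worker spends almost all its time waiting for the remote service to answer.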

It may seem expensive and slow compared to a simple Scrapy script that crawls 100 URLs, but the reality is that we saved the time and the money of developing 33 different spiders.

In these circumstances, where development time would be high because of the number of scrapers to create and the list of URLs to query is not excessive, using LLMs seems to be a perfect fit. We could develop ad hoc scrapers just for the few websites that are not correctly parsed (or even try custom prompts), saving weeks compared to the traditional way of operating.
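Spotting the websites that deserve an ad hoc scraper can be automated with a few sanity checks on the returned records. The sketch below is one possible way to do it, not the method used in the experiment: the validation rules, the 50% threshold, and the sample records are all made up for illustration.

```python
from collections import defaultdict
from urllib.parse import urlparse


def is_valid(record: dict) -> bool:
    """Basic sanity checks on the two price fields of the output schema."""
    full, final = record.get("ProductFullPrice"), record.get("ProductFinalPrice")
    if not isinstance(full, (int, float)) or not isinstance(final, (int, float)):
        return False
    return 0 < final <= full


def domains_needing_scraper(records: list[dict], max_failure_rate: float = 0.5) -> list[str]:
    """Return the domains whose share of invalid records exceeds the threshold."""
    total, failed = defaultdict(int), defaultdict(int)
    for record in records:
        domain = urlparse(record["ProductUrl"]).netloc
        total[domain] += 1
        if not is_valid(record):
            failed[domain] += 1
    return sorted(d for d in total if failed[d] / total[d] > max_failure_rate)


# Made-up records: one clean result, one with missing prices, one with
# a final price higher than the full price.
records = [
    {"ProductUrl": "https://shop-a.example/p/1", "ProductFullPrice": 100.0, "ProductFinalPrice": 80.0},
    {"ProductUrl": "https://shop-b.example/p/1", "ProductFullPrice": None, "ProductFinalPrice": None},
    {"ProductUrl": "https://shop-b.example/p/2", "ProductFullPrice": 50.0, "ProductFinalPrice": 70.0},
]
print(domains_needing_scraper(records))
```

Checks like these catch missing or implausible prices, but not a plausible price taken from the wrong product, which still needs a manual comparison on a sample of pages.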

How to use LLMs for massive scraping instead?

Well, we’ve already seen that LLMs cannot be used for parsing a significant number of URLs due to their limits. In these cases, we can bring them into our web scraping pipeline in a different role: speeding up the development of scrapers.

I started thinking about it some weeks ago and, if you remember, I wrote about this approach in this article.

From the first tests I made, the results are encouraging, but there’s still a lot to do, including adding new cases and implementing a correct crawling strategy for each type of scraper.
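One way to picture this shift from "LLM on every page" to "LLM at development time" is to ask the model for selectors once per website and reuse them on every page of that site. The sketch below is an assumption about how such a pipeline could look, not the approach from the article mentioned above; `SelectorCache` and `fake_llm` are hypothetical names, and the XPath value is made up.

```python
from typing import Callable
from urllib.parse import urlparse

Selectors = dict[str, str]  # field name -> selector (XPath, CSS, ...)


class SelectorCache:
    """Asks the LLM for selectors once per domain, then reuses the answer."""

    def __init__(self, propose: Callable[[str], Selectors]) -> None:
        self._propose = propose  # hypothetical LLM-backed function
        self._by_domain: dict[str, Selectors] = {}
        self.llm_calls = 0

    def selectors_for(self, url: str, sample_html: str) -> Selectors:
        domain = urlparse(url).netloc
        if domain not in self._by_domain:
            self.llm_calls += 1
            self._by_domain[domain] = self._propose(sample_html)
        return self._by_domain[domain]


def fake_llm(sample_html: str) -> Selectors:
    """Stub standing in for a real LLM call; the XPath value is made up."""
    return {"ProductFinalPrice": "//span[@class='price']/text()"}


cache = SelectorCache(fake_llm)
for i in range(1000):
    cache.selectors_for(f"https://shop-a.example/p/{i}", "<html>...</html>")
print(cache.llm_calls)
```

In this setup the token cost is paid once per website instead of once per page, and the pages themselves are parsed by a traditional, deterministic scraper.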