Build your web scraping assistant with Claude and Cursor

Boost your productivity with MCP, Rules and a lot of patience

Web scraping is never dull, but it can certainly be repetitive. If you're a freelancer, your customers ask you to scrape different websites from various industries, each with its own needs. If you work for a company, though, you'll probably face the same challenges over and over.

In my experience at Re Analytics, we had to set up hundreds of e-commerce scrapers, all with the same three or four data structures. Once they were running, we had to maintain them, which meant:

change selectors/navigation logic

upscale/downscale the proxy type

change scraper settings

move from Scrapy to Playwright or other tools if nothing else works

Sometimes this can be challenging, especially the last point, but at the end of the day, 90% of the work is not rocket science. What is difficult, instead, is doing it while keeping costs low, which means a thin proxy bill and a slim headcount. On the first point, while proxy adoption grew over the past few years, prices dropped significantly as more players entered the market. If you want an updated view of current proxy prices, have a look at the Proxy Pricing Benchmark Tool and, if you want to save even more, at our Club Deals page.

As for the headcount, what we could do was create tools, best practices, and standardized processes to remove every possible source of friction when onboarding new websites or maintaining existing ones.

Today, a huge opportunity comes from using AI as an assistant that understands your processes and knowledge base and gives you a head start when creating or fixing a scraper.

This topic fascinates me: web scraping is a time-consuming activity, and there's huge room for improvement. For this article, I started building my web scraping assistant using Cursor as the IDE, combining a custom MCP server (an improved version of the one created in this article) with Cursor rules. As the LLM, I used the new Claude 3.7 Sonnet.

Before proceeding, let me thank NetNut, the platinum partner of the month. They have prepared a juicy offer for you: up to 1 TB of web unblocker for free.

What is Cursor, and why did we choose Claude?

Cursor is an “AI-first” code editor built on the familiar foundation of VS Code. In practice, it feels like having an AI pair programmer living inside your IDE. You can write code using plain-language instructions, ask the editor to fix errors or refactor sections, and even generate new modules by describing what you need.

To be honest, I haven't run a proper benchmark of Cursor against alternatives like Windsurf yet, but I plan to do so soon.

Cursor integrates a chat-driven assistant that can edit files, generate code, and explain changes all within your editor.

The feature that impressed me the most when I first saw it was that, after indexing my codebase of scrapers, whenever I started writing a new line of code, the autocomplete suggestion was not only correct but also exactly what I would have written.

This is because, as mentioned before, our codebase is standardized and all the scrapers are very similar, so it's easy for an LLM to predict the next line of code I'm going to write.

Speaking of LLMs, while Cursor can work with different AI models, after using GPT-4o for a long time, I chose Claude 3.7 Sonnet as the assistant for our web scraping tasks. Claude is a leading large language model developed by Anthropic, known for its strong coding abilities and large context window. Many in the industry consider Claude one of the most capable models for coding; in fact, the CEO of Cursor noted that Claude handled real-world coding tasks so well that it became the default model for all Cursor users.

In my (short) testing for this article, I found Claude more reliable and predictable in its output than GPT-4o. Given the MCP servers and the rules, it behaves as expected in most cases.

Thanks to the gold partners of the month: Smartproxy, IPRoyal, Oxylabs, Massive, Rayobyte, Scrapeless, SOAX and ScraperAPI. They’re offering great deals to the community. Have a look yourself.

What are MCP servers?

Modern AI assistants like Claude can't natively browse websites or fetch live data on their own; they rely on their training data or on what the user provides. This is why you can't get up-to-date code for your scraper from the LLM's built-in knowledge alone.

MCP (Model Context Protocol) is a solution to this limitation. MCP is an open protocol (introduced by Anthropic) that standardizes how AI models connect to external tools and data sources. Think of MCP as a "universal adapter" that lets an AI plug into various services in a consistent way. It defines a common language, based on JSON-RPC 2.0 messages, for the AI to request something and get back results, no matter the underlying tool.

MCP follows a simple client-server model. On one side, you have an MCP client built into the AI’s environment (for example, Claude Desktop or Cursor can act as an MCP client). On the other side, an MCP server is a program that exposes a particular capability or data source to the AI.

To make things even simpler, think of MCP as a way to send commands to your machine via JSON (at the moment, servers should be installed locally). You can start your browser, call an API, run a bash script, and so on. The parameters of these commands are inferred by the LLM from the prompt used in the chat. If we say in a prompt: "I need to browse the website X", the LLM knows it has to call the tool that opens the browser, then use it to go to website X (and possibly return its HTML).
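To make the "commands via JSON" idea concrete, here is a toy, stdlib-only sketch of the message flow. MCP messages are JSON-RPC 2.0, and a client invokes a tool with the `tools/call` method, passing the tool name and its arguments. The `fetch_html` tool and the dispatcher below are hypothetical stand-ins: a real server would be built with an MCP SDK, handle the initialization handshake and `tools/list`, and communicate over stdio or HTTP instead of plain function calls.

```python
import json


def fetch_html(url: str) -> str:
    """Hypothetical tool. A real MCP server would drive a browser here;
    this stub returns fixed HTML so the sketch runs offline."""
    return f"<html><body>Stub page for {url}</body></html>"


# Registry of tools the toy server exposes, keyed by tool name.
TOOLS = {"fetch_html": fetch_html}


def make_tools_call(request_id: int, tool: str, arguments: dict) -> str:
    """Build the JSON-RPC 2.0 request an MCP client sends to invoke a tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


def handle_message(raw: str) -> str:
    """Toy server side: decode the request and dispatch it to a local function."""
    msg = json.loads(raw)
    if msg.get("method") != "tools/call":
        return json.dumps({"jsonrpc": "2.0", "id": msg.get("id"),
                           "error": {"code": -32601, "message": "Method not found"}})
    params = msg["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        return json.dumps({"jsonrpc": "2.0", "id": msg["id"],
                           "error": {"code": -32602,
                                     "message": f"Unknown tool: {params['name']}"}})
    text = tool(**params.get("arguments", {}))
    # Tool results are returned as a list of content items; plain text uses type "text".
    return json.dumps({"jsonrpc": "2.0", "id": msg["id"],
                       "result": {"content": [{"type": "text", "text": text}]}})


if __name__ == "__main__":
    request = make_tools_call(1, "fetch_html", {"url": "https://example.com"})
    print(handle_message(request))
```

The point of the sketch is the shape of the exchange: the LLM only has to fill in `name` and `arguments` from your prompt, and the server decides what actually runs on your machine.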

In the context of web scraping, MCP servers become the bridge between Claude and the live web. For example, Apify provides an Apify Actors MCP server that allows Claude to run any of Apify’s pre-built scrapers (called Actors) via a simple API call.

Likewise, the open-source Firecrawl MCP server gives Claude the ability to crawl websites and extract content on the fly. Firecrawl is a comprehensive web scraping tool that can even handle JavaScript rendering and perform web searches, all through an MCP interface.

We built our own MCP server a few weeks ago; today, we're using it together with Cursor rules.

What are Cursor rules?

One of Cursor’s most useful features is the ability to define rules that guide the AI assistant. Cursor rules are structured instructions you write for the AI, acting like a persistent guide or framework for its responses.

You can create project-specific rules (stored in a .cursor/rules/ folder in your repo) or global rules that apply to all your projects. Every time the AI in Cursor is generating code for you, it will take these rules into account. In essence, it’s like programming the assistant with your personal best practices.
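As a rough illustration of what lives in that folder, the sketch below scaffolds a project rule from Python. It assumes Cursor's `.mdc` rule format: a Markdown file with a short frontmatter block (`description`, `globs`, `alwaysApply`) followed by the instructions. The rule name, glob, and body are hypothetical examples inspired by the steps described later in this article, so check Cursor's documentation for the exact fields your version supports.

```python
import tempfile
from pathlib import Path

# Assumed .mdc layout: frontmatter with description/globs/alwaysApply, then the rule body.
RULE_TEMPLATE = """---
description: {description}
globs: {globs}
alwaysApply: {always_apply}
---
{body}
"""


def write_rule(project_root: Path, name: str, description: str, globs: str,
               body: str, always_apply: bool = False) -> Path:
    """Write a project rule to <project_root>/.cursor/rules/<name>.mdc and return its path."""
    rules_dir = project_root / ".cursor" / "rules"
    rules_dir.mkdir(parents=True, exist_ok=True)
    path = rules_dir / f"{name}.mdc"
    path.write_text(RULE_TEMPLATE.format(
        description=description,
        globs=globs,
        always_apply=str(always_apply).lower(),  # frontmatter expects true/false
        body=body.strip(),
    ), encoding="utf-8")
    return path


if __name__ == "__main__":
    # Write into a throwaway directory so the example leaves no files behind.
    with tempfile.TemporaryDirectory() as tmp:
        rule = write_rule(
            Path(tmp),
            name="scrapy-plp",  # hypothetical rule name
            description="Conventions for PLP e-commerce spiders",
            globs="**/spiders/*.py",
            body="- Look for embedded JSON before writing CSS/XPath selectors.\n"
                 "- Stop if Kasada or DataDome cookies are detected.",
        )
        print(rule.read_text(encoding="utf-8"))
```

In practice you would simply write these files by hand; the script just makes the structure explicit: metadata that tells Cursor when to apply the rule, and plain-language instructions the model reads.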

Just like our best practices and knowledge base are there to be consulted every time we work on something (and I'm not sure we actually do it every time), rules are there to force the LLM to follow predetermined paths when coding.

They allow you to encode all the reusable steps and conventions that you, as an expert, always want to see in a scraper.

Here's a great collection on GitHub that shows how rules are implemented for different use cases.

I used the Scrapy file as a starting point for the example we’re seeing now.

Building a Scrapy coding assistant

LLMs are not built to figure out the best approach for scraping a website (looking for internal APIs, using the mobile app instead of the website, and so on), which is also the most interesting part of the job. So I preferred to focus on the boring part: the creation (and, in the future, the maintenance) of Scrapy spiders.

Given that we need to scrape a website's HTML to get the data, I wanted to automate the creation of the scraper from scratch, with a predefined output data structure, navigation logic, correct selectors, and so on. While this seems trivial, it means breaking the process and its logic down into smaller steps and explaining them to the LLM so that it can follow them and make the right decisions.
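A predefined output data structure is what keeps generated spiders consistent with each other. The sketch below shows what a PLP (product listing page) item and a PDP (product detail page) item could look like as Python dataclasses, which Scrapy accepts as items. The field names are hypothetical examples, not the schema used in the project's repository.

```python
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class PLPItem:
    """One product as seen on a catalog (listing) page. Fields are hypothetical."""
    product_url: str
    name: str
    price: Optional[float]
    currency: Optional[str]
    category_url: str
    position: int  # rank of the product within the listing page


@dataclass
class PDPItem:
    """Full product details from a product detail page. Fields are hypothetical."""
    product_url: str
    name: str
    price: Optional[float]
    currency: Optional[str]
    sku: Optional[str] = None
    brand: Optional[str] = None
    description: Optional[str] = None
    image_urls: list[str] = field(default_factory=list)


def missing_fields(item) -> list[str]:
    """Return the names of fields that are still empty: a cheap completeness check."""
    return [k for k, v in asdict(item).items() if v in (None, "", [])]


if __name__ == "__main__":
    item = PLPItem("https://example.com/p/1", "Sneaker", 79.9, "EUR",
                   "https://example.com/c/shoes", 1)
    print(missing_fields(item))  # []
```

Fixing the schema up front gives the LLM a concrete target for selector mapping, and a check like `missing_fields` makes it easy to spot which fields the generated selectors failed to fill.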

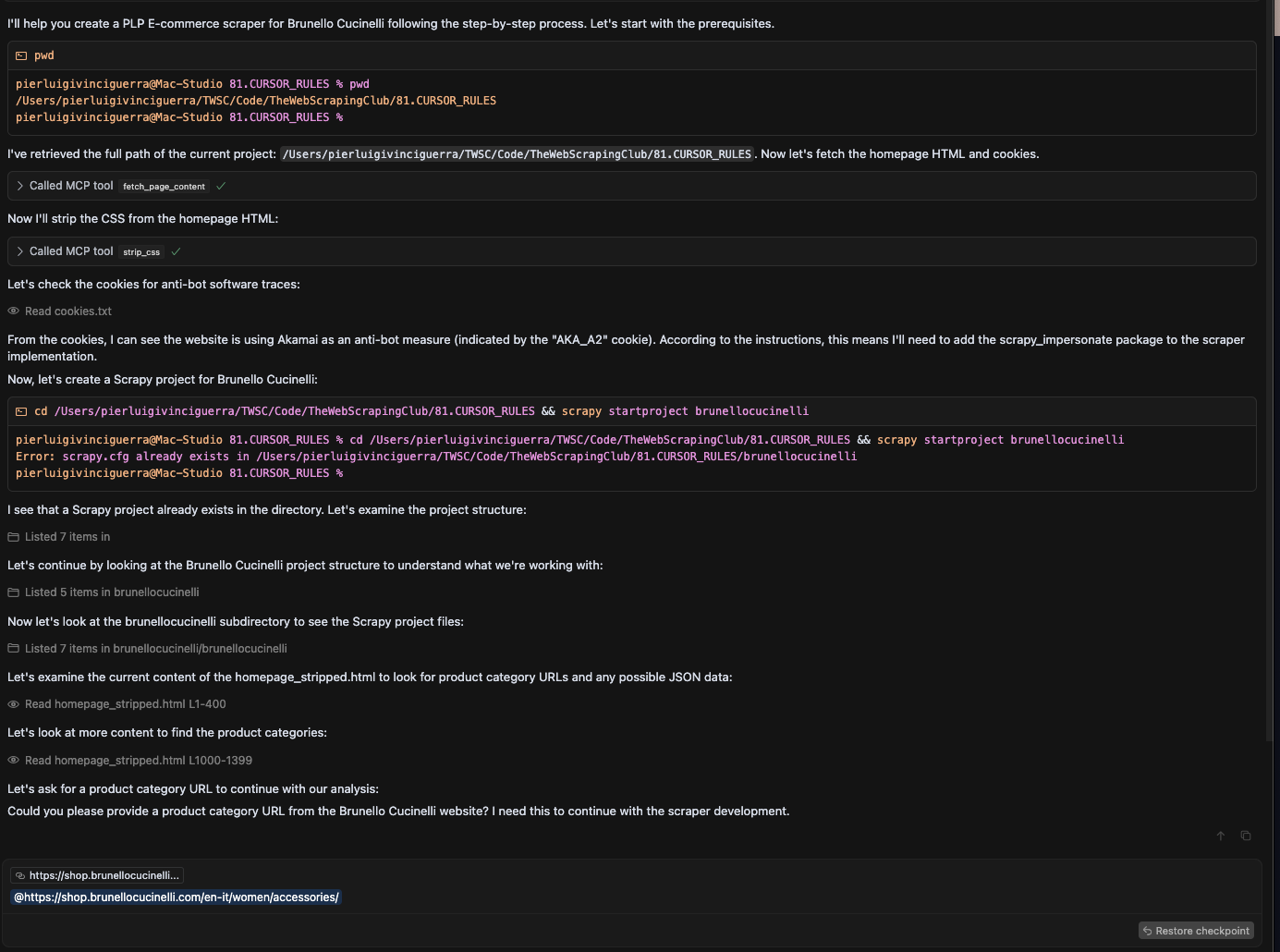

Here are the details of what I'd like to achieve, given a prompt like: "Create a PLP E-commerce scraper for website X":

First, the assistant should understand what a "PLP E-commerce scraper" means in terms of output and scraping process. This particular type of scraper crawls the catalog pages of an e-commerce website without visiting the detail page of each product (the PDP). A "PDP E-commerce scraper", instead, receives a list of product URLs and scrapes all the details from their detail pages. These are the only two scraper types I've implemented in the rules so far, but others can be added.

After understanding the scraper type, the assistant should create a proper Scrapy project with all the files in the correct folders.

Using an MCP server, the assistant should browse to the starting URL of the scraper and download both the HTML and the stored cookies. If it's a PLP E-commerce scraper, it should also ask for the URL of a product category page.

After that, it should analyze both HTML files, looking for embedded JSON first, to map the fields to the best available selectors, both for navigating the categories and for the output items.

Then, it should read the cookies file to check for a known anti-bot protection. If it finds Kasada or DataDome, the process should stop, since Scrapy alone will not be enough to bypass them. If Akamai is found, it should implement scrapy_impersonate in the scraper.

Next, it should optimize the settings.py file with common configurations such as request headers and the user agent.

Finally, it should write the spider and launch it.
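The analysis steps (embedded JSON first, then the anti-bot check) carry most of the decision logic the rules encode. Here is a stdlib-only sketch of what that logic could look like, under loudly stated assumptions: JSON-LD and Next.js `__NEXT_DATA__` blocks are just two common places where product data hides; the cookie-name fingerprints (`KP_UIDz` for Kasada, `datadome` for DataDome, `_abck`/`bm_sz`/`ak_bmsc`/`bm_sv` for Akamai) are heuristics assumed here, not an official or exhaustive list; and the `scrapy_impersonate` settings mirror that package's README as I understand it, so verify them against the version you install.

```python
import json
import re
from html.parser import HTMLParser


class _ScriptJSONCollector(HTMLParser):
    """Collect the raw text of <script> tags that usually carry structured data."""

    def __init__(self):
        super().__init__()
        self._capture = False
        self.blobs: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        # JSON-LD and Next.js page data are two common spots for product data.
        if tag == "script" and (a.get("type") == "application/ld+json"
                                or a.get("id") == "__NEXT_DATA__"):
            self._capture = True
            self.blobs.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._capture = False

    def handle_data(self, data):
        if self._capture:
            self.blobs[-1] += data  # script text may arrive in several chunks


def extract_embedded_json(html: str) -> list:
    """Return every parseable JSON blob found in JSON-LD or __NEXT_DATA__ scripts."""
    parser = _ScriptJSONCollector()
    parser.feed(html)
    found = []
    for blob in parser.blobs:
        try:
            found.append(json.loads(blob))
        except json.JSONDecodeError:
            pass  # malformed JSON: fall back to CSS/XPath selectors for these fields
    return found


# Assumed cookie-name fingerprints; vendors change them, so treat them as heuristics.
ANTIBOT_COOKIES = {
    "kasada": re.compile(r"^KP_UIDz"),
    "datadome": re.compile(r"^datadome$", re.IGNORECASE),
    "akamai": re.compile(r"^(_abck|bm_sz|ak_bmsc|bm_sv)$"),
}


def detect_antibot(cookie_names) -> set[str]:
    """Map the cookie names saved from the browser session to anti-bot vendors."""
    return {vendor for vendor, pattern in ANTIBOT_COOKIES.items()
            for name in cookie_names if pattern.search(name)}


def choose_strategy(vendors: set[str]) -> dict:
    """Apply the rule: stop on Kasada/DataDome, impersonate on Akamai, else plain Scrapy."""
    blocking = vendors & {"kasada", "datadome"}
    if blocking:
        return {"action": "stop", "reason": f"Scrapy alone is not enough: {sorted(blocking)}"}
    if "akamai" in vendors:
        handler = "scrapy_impersonate.ImpersonateDownloadHandler"
        return {"action": "impersonate", "settings": {
            "DOWNLOAD_HANDLERS": {"http": handler, "https": handler},
            "TWISTED_REACTOR": "twisted.internet.asyncioreactor.AsyncioSelectorReactor",
        }}
    return {"action": "plain_scrapy"}
```

For example, `choose_strategy(detect_antibot(["_abck", "session"]))` returns the `impersonate` action with the settings to merge into settings.py, while a cookie jar containing `datadome` stops the process before any spider is written.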

I’ve spent hours tweaking the rules and creating scrapers, and we’re far from perfection, but the results are not that bad.

I won’t describe in detail what’s inside the rules and the MCP server in this article since I’m releasing all this work as an open-source project. You can visit the repository at this link.

Feel free to add your contributions so that we can implement more and more rules and features for our assistant.

Conclusion

This AI-assisted setup for web scraping is not yet a fully autonomous system that you can "set and forget", and that's by design.

It's a collaboration between you and the AI. What it does is dramatically improve efficiency on repetitive tasks. Instead of spending hours writing code or tweaking selectors, you can let Claude (via Cursor) do most of that work under your supervision. Of course, with LLMs there's also the risk of spending hours prompting until you get the desired result.

To mitigate this, it's important to keep the rules as simple and clear as possible, so the LLM doesn't go off the rails.