Best Practices for Ethical Web Scraping

A discussion on the golden rules for scraping websites ethically

If you have been scraping for a while, you know that retrieving data from websites and managing it must be done carefully and respectfully. We live in a world that, while interconnected, is subject to local and national laws, and data is no exception.

Aside from laws, there are several rules you should follow to scrape websites gracefully: you do not want your IP address to be banned for making too many requests, do you?!

So, in this article, I have collected the rules I consider golden if you want to be an ethical scraping professional.

Ready? Let’s dive into it!

Best Practice #1: Respect The Robots.txt File

I know you already know it! But, hey, we talk about web scraping here, so I cannot skip it. The robots.txt file, which implements the Robots Exclusion Protocol, is a text file placed in the root directory of a website (for example, https://www.example.com/robots.txt).

The purpose of this file is to give instructions to automated web crawlers, spiders, and bots about which pages or sections of the site they should not access or process. In other words, it is the website owner’s way of saying:

Welcome, automated visitor. Here are the house rules.

A typical robots.txt file looks something like the following example:

    User-agent: *
    Disallow: /admin/
    Disallow: /private/
    Disallow: /search

    User-agent: Googlebot
    Allow: /search

    Sitemap: https://www.example.com/sitemap.xml

Let’s break it down:

User-agent: This specifies which bot the rules apply to. The asterisk (*) is a wildcard that means “all bots”. You can also have specific rules for bots like Googlebot (Google’s crawler).

Disallow: This directive tells the bot not to crawl the specified URL path. In the example, it is telling all bots not to enter any URL whose path starts with /admin/, /private/, or /search.

Allow: This explicitly permits a path and is typically used to carve out an exception to a Disallow rule. In the example, all bots are disallowed from /search, while Googlebot, which has its own group of rules, is explicitly allowed.

Sitemap: This is an optional but common directive that points bots to the website’s sitemap. It helps them discover all the pages the owner wants them to find.
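To see how these directives interact, the example file above can be fed to Python’s standard urllib.robotparser module without touching the network. This is only a minimal sketch: the bot name MyAwesomeScraper, the helper is_allowed, and the example.com URLs are placeholders, and the results reflect how this particular parser interprets the rules, which may differ slightly from other crawlers.

```python
from urllib.robotparser import RobotFileParser

# The example robots.txt from above, as a string (no network access needed)
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
Disallow: /search

User-agent: Googlebot
Allow: /search

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(user_agent: str, path: str) -> bool:
    """Hypothetical helper: ask the parser about a path on example.com."""
    return parser.can_fetch(user_agent, f"https://www.example.com{path}")


# A generic bot falls under the "*" group
generic_search = is_allowed("MyAwesomeScraper", "/search?q=python")
generic_blog = is_allowed("MyAwesomeScraper", "/blog/")

# Googlebot matches its own group, which allows /search
googlebot_search = is_allowed("Googlebot", "/search?q=python")

print(generic_search, generic_blog, googlebot_search)
```

With this parser, the generic bot is refused on /search but allowed on /blog/, while Googlebot is allowed on /search, so the script prints False True True.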

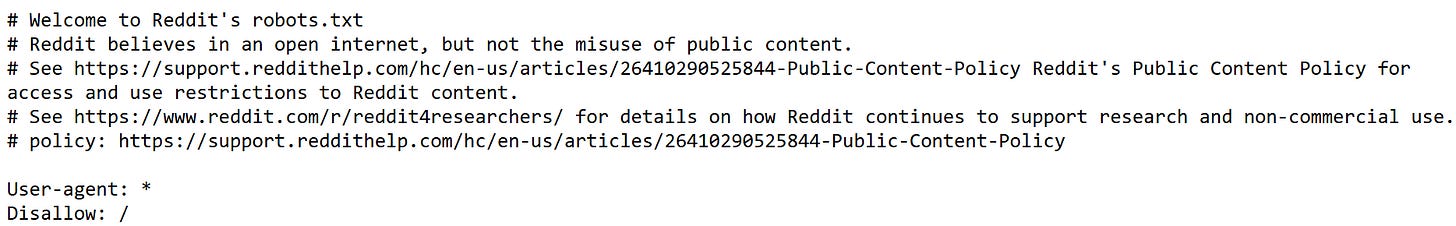

Let’s consider an actual example, for instance, from the Reddit website:

As you can see, Reddit’s robots.txt file is very strict, as it disallows crawling from any user agent. Looking directly at the robots.txt file is one way of verifying it, but what if you want to create an automation that parses it? In this case, you could use urllib.robotparser, a module in Python’s standard library that tells you whether or not a particular user agent can fetch a URL on the target website.

For instance, you can write the following code:

    from urllib.robotparser import RobotFileParser

    # The user-agent you will use for scraping the target website
    user_agent = "MyAwesomeScraper/1.0"

    # The target URL you want to scrape
    url_to_scrape = "https://www.reddit.com/"

    # Initialize the parser and read the robots.txt file
    parser = RobotFileParser()
    parser.set_url("https://www.reddit.com/robots.txt")
    parser.read()

    # Check if your user-agent is allowed to fetch the URL
    if parser.can_fetch(user_agent, url_to_scrape):
        print(f"Scraping is allowed for {url_to_scrape}")
    else:
        print(f"Scraping is NOT allowed for {url_to_scrape}")

Which results in:

    Scraping is NOT allowed for https://www.reddit.com/

Since Reddit’s robots.txt file is strict, the script returns a denial, as expected.

But here is the question: Do you really have to respect the robots.txt file? Well, the point is that respecting the robots.txt file is purely voluntary. Still, respecting it is essential if you want to be an ethical professional, as it defines the rules of the house. A later section of this article discusses this matter further.

Best Practice #2: Set a Descriptive User-Agent

The User-Agent is a string in the HTTP header that identifies your client to the web server. So, every time your browser, a scraping script, or any other client requests a web page, it includes a User-Agent header that tells the web server what kind of software is making the request.

For example, when you visit a website using Chrome on a Windows computer, your browser sends a User-Agent string that looks something like the following:

    Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36

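This string tells the server that:

Mozilla/5.0 is a historical compatibility token that almost every browser still sends; it does not mean you are using a Mozilla browser.

Your operating system is 64-bit Windows (Windows NT 10.0 covers both Windows 10 and Windows 11).

AppleWebKit/537.36 and Safari/537.36 are further compatibility tokens kept by convention.

Your browser is Chrome, version 123.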

When you visit a website with your browser, it automatically sends your User-Agent to the server. But when it comes to web scraping, you can set the User-Agent of your crawler yourself. There are two main reasons to set it:

Avoid immediate blocks: Many websites automatically block or deny requests that have no User-Agent. A request without one is a red flag that suggests it is coming from a poorly configured script. Similarly, using the default User-Agent of a common scraping library (like python-requests/2.28.1) is another way for a site to identify and block your scraper.

Blend in: To successfully scrape many websites, the goal is to make your requests look like they are coming from a real human using a standard web browser. By setting your scraper’s User-Agent to a common browser string (like the Chrome example above), you can often bypass basic anti-bot measures.

But what about ethics, in this case? Well, let’s be honest: mimicking a browser is a common tactic for getting data. But if you want to be ethical, then you should lean towards a descriptive User-Agent.

A descriptive User-Agent does not pretend to be a browser. It clearly identifies your bot and provides a way for the website administrator to contact you. A good descriptive User-Agent should include information like:

The name of your bot.

A version number.

A URL or email address where the site administrator can find more information or get in touch with you.

Below is an example of a descriptive User-Agent:

    # Define user agent
    headers = {
        "User-Agent": "MyAwesomeScraper/1.0 (http://www.myawesomescraper.com/bot.html; bot@myawesomescraper.com)"
    }

Providing a descriptive User-Agent is ethical for the following reasons:

Accountability and transparency: You are not hiding. You are openly stating who you are and that you are running an automated process. This builds trust and shows you are acting in good faith.

Provides a communication channel: This is the most important reason. If your scraper has a bug and starts sending too many requests, it could harm the website’s performance. A responsible administrator can use the URL or email in your User-Agent to contact you and ask you to fix your bot before banning it. Without this, their only option is to block your IP address, which is a much blunter solution.

Respects the website owner: It gives the website owner control. They can see your bot’s traffic in their logs and, if they wish, create special rules for it in their robots.txt file.
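As a rough illustration of how such a header could be assembled and attached to a request, here is a minimal sketch using only Python’s standard library. The helper names build_user_agent and build_request are hypothetical, and the bot identity simply mirrors the placeholder values from the example above; no request is actually sent.

```python
from urllib.request import Request


def build_user_agent(name: str, version: str, info_url: str, contact: str) -> str:
    """Compose a descriptive User-Agent: bot name, version, info URL, contact."""
    return f"{name}/{version} ({info_url}; {contact})"


def build_request(url: str, user_agent: str) -> Request:
    """Create a request object that carries the descriptive User-Agent header."""
    return Request(url, headers={"User-Agent": user_agent})


# Placeholder identity, mirroring the example above
ua = build_user_agent(
    "MyAwesomeScraper",
    "1.0",
    "http://www.myawesomescraper.com/bot.html",
    "bot@myawesomescraper.com",
)
request = build_request("https://www.example.com/", ua)

# urllib stores header names in the capitalized form "User-agent"
print(request.get_header("User-agent"))
```

Building the string from its parts keeps the name, version, and contact details in one place, so they stay consistent across every request the scraper makes.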

Best Practice #3: Scrape at a Respectful Rate

Bombarding a server with too many requests in a short period of time is the fastest way to get your IP address banned. Anti-bot systems respond like this because too many requests can slow down or even crash a server, so they have to protect it to keep the website online.

So, besides being ethical, scraping websites at a respectful rate is also a way to avoid getting banned. Below is a list of actions you can take to scrape websites politely:

Introduce delays between requests: If you have to perform multiple requests, adding a delay between them lets the server breathe. In other words, it gives the server enough time to handle your requests, and also the ones from other users and bots, without crashing.

Manage concurrent requests: Sending many requests to the same target website in parallel multiplies the load you put on it. To respect the server, cap the number of simultaneous requests and let each batch complete before launching the next one. In Python, for example, the asyncio library gives you the tools to do that. Managing concurrency is also a way to scrape high-traffic websites gracefully.

Use proxies to distribute request load: Proxies are something of a holy grail in web scraping. You can use them to solve several technical challenges, and distributing the request workload is one of these. If you are new to the world of proxies, read our comparison between residential and mobile proxies to learn more about them.

Test first, scale later: If you need to scrape dozens or hundreds of pages, fetching all the data straight away is not the right approach. Even if you are an experienced professional in the industry, the first runs of a new script are always tests. So, the best way to be an ethical professional is to first verify that the code works on a few pages, then scale it to more. This way, you do not overload the server with faulty requests.
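The delay and concurrency ideas from the list above can be sketched together with asyncio. This is a minimal sketch under stated assumptions: fetch is a hypothetical stand-in that only simulates a network call, and MAX_CONCURRENT and DELAY_SECONDS are illustrative values (a real scraper would normally pause for seconds, not fractions of a second).

```python
import asyncio

MAX_CONCURRENT = 2   # assumed cap on simultaneous requests to one site
DELAY_SECONDS = 0.1  # assumed pause after each request (kept short for the demo)


async def fetch(url: str) -> str:
    """Hypothetical stand-in for a real HTTP call."""
    await asyncio.sleep(0.05)  # simulate network latency
    return f"fetched {url}"


async def crawl(urls: list[str]) -> tuple[list[str], int]:
    """Fetch all URLs while never exceeding MAX_CONCURRENT requests in flight."""
    semaphore = asyncio.Semaphore(MAX_CONCURRENT)
    in_flight = 0
    peak = 0

    async def polite_fetch(url: str) -> str:
        nonlocal in_flight, peak
        async with semaphore:
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                result = await fetch(url)
            finally:
                in_flight -= 1
            # Hold the slot a little longer to space out requests
            await asyncio.sleep(DELAY_SECONDS)
            return result

    results = await asyncio.gather(*(polite_fetch(u) for u in urls))
    return list(results), peak


urls = [f"https://www.example.com/page/{i}" for i in range(1, 6)]
results, peak = asyncio.run(crawl(urls))
print(len(results), "pages fetched, peak concurrency:", peak)
```

The semaphore is what enforces the cap: however many URLs are queued, at most MAX_CONCURRENT of them hold a slot at any moment, and the extra sleep inside the slot spaces the requests out.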

Scraping at a respectful rate also means retrieving only the data that you need, without occupying a server with requests for data you do not need. A Python example of how to ethically scrape only the pages of interest, introducing a delay between requests, is the following:

    import requests
    import time

    # Define URLs to scrape
    urls = [
        "http://httpbin.org/get?page=1",
        "http://httpbin.org/get?page=2",
        "http://httpbin.org/get?page=3",
    ]

    # Loop for scraping with a delay between pages
    for url in urls:
        # Get response from target URL (the timeout avoids hanging forever)
        response = requests.get(url, timeout=10)
        print(f"Scraped {url}, status code: {response.status_code}")

        # Wait for 2 seconds before the next request
        time.sleep(2)

The output is the following:

    Scraped http://httpbin.org/get?page=1, status code: 200
    Scraped http://httpbin.org/get?page=2, status code: 200
    Scraped http://httpbin.org/get?page=3, status code: 200

Best Practice #4: Handle Errors Gracefully

A well-built and ethical scraper should be able to handle errors and changes to the website’s structure. This includes:

Checking HTTP status codes: Your scraper should be able to handle codes like 404 Not Found, 503 Service Unavailable, and 429 Too Many Requests.

Handling website changes: Websites change their HTML structure all the time. Your scraper should be designed to be resilient to these changes, for example, by preferring robust selectors over brittle, position-based ones, or by using approaches that rely on LLMs and vision models (such as GPT vision) to locate the data.

Managing errors gracefully also goes hand in hand with managing retries. The reason why this is ethical is simple: you do not want to overload the server with too many automated retry requests (which could get you banned by a rate limiter) just because your scraper does not handle errors.
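One common way to keep retries polite is exponential backoff that honors the server’s Retry-After header when present. The following is a minimal sketch, not a definitive implementation: fetch_with_backoff and its fetch argument are hypothetical (fetch is any callable returning a status code and a headers dictionary), the retry limits are illustrative, and only the seconds form of Retry-After is handled. The demo uses a fake server so that nothing is actually requested.

```python
import time
from typing import Callable, Optional

# Status codes that usually signal a temporary problem worth retrying
RETRYABLE = {429, 503}


def fetch_with_backoff(
    fetch: Callable[[str], tuple[int, dict]],
    url: str,
    max_retries: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[int]:
    """Call fetch(url), retrying temporary failures with growing delays.

    Returns the final status code, or None if the retries were exhausted.
    """
    for attempt in range(max_retries + 1):
        status, headers = fetch(url)
        if status not in RETRYABLE:
            return status  # success, or a permanent error such as 404
        if attempt == max_retries:
            break
        # Prefer the server's Retry-After hint (seconds form only);
        # otherwise back off exponentially: 1s, 2s, 4s, ...
        retry_after = headers.get("Retry-After", "")
        if retry_after.isdigit():
            delay = float(retry_after)
        else:
            delay = base_delay * 2 ** attempt
        sleep(delay)
    return None


# Demo with a fake server: 429 (with Retry-After), then 503, then 200
responses = iter([(429, {"Retry-After": "5"}), (503, {}), (200, {})])
waits: list[float] = []
final_status = fetch_with_backoff(
    lambda url: next(responses),
    "https://www.example.com/",
    sleep=waits.append,  # record the delays instead of really sleeping
)
print(final_status, waits)
```

In the demo, the scraper waits 5 seconds after the 429 (as the fake server asked), then 2 seconds after the 503, and stops as soon as it gets a 200. A 404 would be returned immediately, since retrying a missing page only adds load.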

Best Practice #5: Mind The Data You Scrape and The Goal

As a last best practice for ethical scraping, let’s discuss something that often stays under the radar: the kind of data you scrape and the goal you are scraping it for.

Let’s be honest, as scraping professionals, a common thought we often have is: “Well, the data is publicly available on the Internet, so there’s no reason why I should fear scraping it”. On one hand, this thought makes sense; on the other hand, it is not that easy.

The main issue is that when you scrape data, you are managing it and reusing it for other purposes (otherwise, why would you care about scraping it?). So, first of all, your subsequent activity may be subject to laws like the European GDPR or the Californian CPRA.

So, for example, if you are scraping people’s email addresses from social media, or any sensitive personal information, you must follow the applicable privacy rules, even though that kind of data was put on social media by the owners themselves.

Another example: while it is generally acceptable to scrape the text of blog articles to analyze them, republishing them without permission is generally a copyright violation. This is nothing more than copy-pasting without permission. But in a discussion about ethics, it is worth mentioning.

Finally, another aspect to take into account for ethical scraping is data sovereignty. Data sovereignty is the concept that data generated within a country’s borders is subject to that country’s laws and regulations. It means a nation has the authority to control and govern how data is collected, processed, stored, and accessed, ensuring local control and protecting citizen privacy and national security.

This is something that generally applies to companies that manage data in distributed systems across the world. However, it also applies to scraping. The main idea is that if you scrape data generated in the US, migrating and managing it in the EU can be a violation of data sovereignty.

Why Ethical Web Scraping is Important

So far in this article, the discussion has been around the best practices for ethical scraping. But why is ethical scraping important for professionals? Well, the reasons can be the following:

Professionalism: As with any profession, web scraping is about the reputation of the professional. Also, since scraping has to do with data, the quality of the data matters too. So how you gather data says a lot about you (and your organization, if you work for one). You do not want to be remembered as a professional who breaks the GDPR just for the sake of scraping data, do you?!

Sustainability: If you care about your profession, chances are that you want to scrape tomorrow, not just today. So, getting your IP banned from websites is not a badge of honor. Being an ethical professional, on the other hand, allows you to be a sustainable professional who can have a long career.

Risk mitigation: Avoiding legal and financial trouble is probably everyone’s dream. The thought that you might have broken the law can keep you up at night, because the consequences of unethical scraping can include legal summons and hefty fines. Being an ethical scraper lets you sleep soundly, knowing you are far less likely to end up in court.

On the Robots.txt File, Laws, and What Happened Recently in The Industry

All right, all seems fantastic. Just be a good professional, follow the best practices, and everything will be good, right?!

Well, as usual, reality is often different from theory. For example, in recent months, companies like OpenAI and Anthropic have been taken to court in several jurisdictions over scraping activities used to train their LLMs. While these legal cases concerned the use of copyrighted material and compliance with robots.txt, outcomes have varied by jurisdiction and by the specific claims. In other words, judges have not always treated the robots.txt file as a decisive factor when ruling on whether scraping a website was permissible. They have weighed it along with other technical or commercial issues in each specific case. So, it seems that, at least for now, there is no universal rule of thumb for judges regarding the robots.txt file.

Of course, the idea is not that professionals should ignore the robots.txt file. They have to care about it! But what happens in courts should not be taken for granted.

Conclusion

In this article, the discussion focused on best practices for ethical web scraping and its importance.

So, let’s move forward with the discussion in the comments: What do you think is the most important best practice? Did we forget something?