Using Internal API Calls for Web Scraping More Efficiently

Snoop and replicate API calls to retrieve data straight away

Traditional web scraping can quickly turn into a headache—whether due to page structure changes, anti-scraping measures, IP bans, or other issues. But have you ever stepped back and thought about this?

Most of the data you see on a page is actually retrieved by the browser through API calls. So why not connect directly to those endpoints? That’s exactly what API web scraping is all about!

Follow me as I show you how that technique works and walk you through a real-world API scraping scenario.

What Is API Scraping?

API scraping is the process of collecting data by making requests to a website’s public (though usually undocumented) API endpoints. The idea is to inspect the AJAX requests a web page makes while rendering in the browser and replicate them in your script to access the data.

By calling these endpoints, you can get structured data efficiently without having to render the entire page. Unlike traditional web scraping, which relies on parsing HTML, API web scraping takes advantage of the structured data exposed through API endpoints.

The API-based approach to web data retrieval is usually faster and more reliable, and it eliminates the need to handle complex HTML parsing.

Before proceeding, let me thank Decodo, the platinum partner of the month, and their Scraping API. Scraping made simple - try Decodo’s All-In-One Scraping API free for 7 days.

When Is It Possible to Apply API Scraping?

You can apply API scraping whenever a web page retrieves data dynamically through public endpoints (typically via AJAX). After all, most modern sites load content by making RESTful or GraphQL API calls during page rendering. Since any visitor’s browser can reach those endpoints, you can usually call them directly to access the same data.

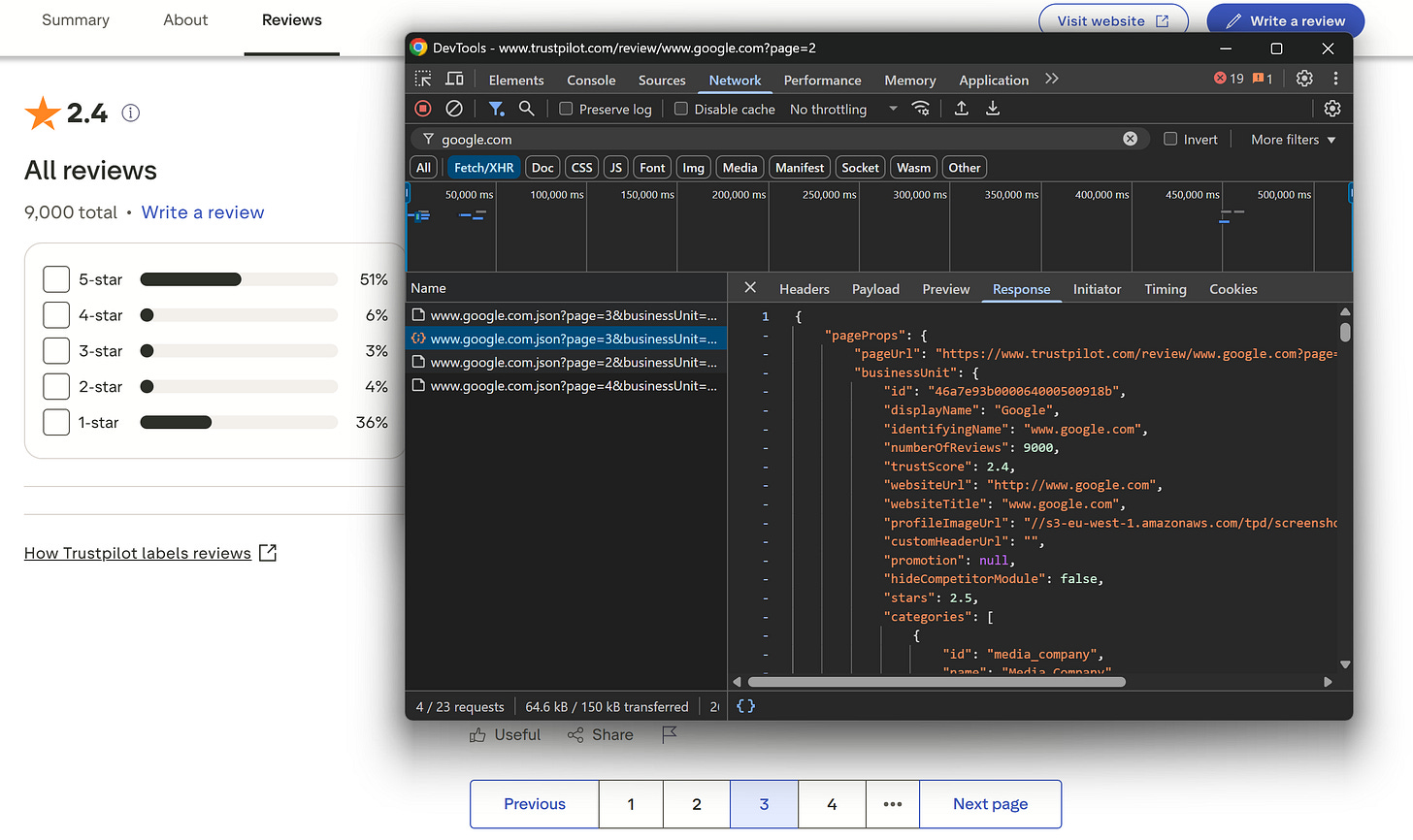

All you have to do is capture them in the browser’s Network tab. For example, Trustpilot’s review pages use a pagination API to fetch additional reviews as users click a page number on the pagination element:

The API response contains reviews, ratings, and other metadata. That’s precisely the same data the browser displays on the page after rendering.
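If you replicate a pagination API like that, collecting every page usually comes down to a loop over the page parameter. Here’s a minimal sketch of that loop. The fetch_page callable is hypothetical: it stands in for whatever request you captured (Trustpilot’s actual endpoint and parameters aren’t shown here), and the sketch assumes the API returns an empty list once you go past the last page, which you should verify for your target.

```python
from typing import Callable, Iterator


def iterate_pages(
    fetch_page: Callable[[int], list],
    first_page: int = 1,
    max_pages: int = 100,
) -> Iterator:
    """Yield items from a paginated API until a page comes back empty.

    fetch_page is hypothetical: it should replicate the captured API call
    for the given page number and return the parsed list of items.
    """
    for page in range(first_page, first_page + max_pages):
        items = fetch_page(page)
        if not items:
            # An empty page is assumed to mean we went past the last one
            break
        yield from items


# Usage with a fake fetcher standing in for the real API call
fake_pages = {1: [{"id": 1}, {"id": 2}], 2: [{"id": 3}]}
reviews = list(iterate_pages(lambda page: fake_pages.get(page, [])))
print(reviews)  # [{'id': 1}, {'id': 2}, {'id': 3}]
```

The max_pages cap is a safety net: if the assumption about empty pages turns out to be wrong, the loop still stops instead of hammering the endpoint forever.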

Now, when is API scraping generally viable? First, look at the Network tab and monitor requests made by the browser. As a rule of thumb, you should also look out for these scenarios:

“Load More” buttons

In-page filtering options

In-page pagination elements

Single-page applications (SPAs)

Any user interaction that loads new data onto the page

By inspecting the network requests triggered by those interactions, you can identify public API endpoints. Next, work out the required query parameters and/or request body, and reproduce the API calls in your scraping script.
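When the captured request is a GET, much of that work is pulling the query string apart into an endpoint and a dictionary of parameters. A minimal sketch using only the standard library (split_request_url is a hypothetical helper, and the example URL is made up for illustration):

```python
from urllib.parse import parse_qsl, urlsplit, urlunsplit


def split_request_url(captured_url: str) -> tuple[str, dict[str, str]]:
    """Split a URL copied from DevTools into its endpoint and decoded query params."""
    parts = urlsplit(captured_url)
    # Rebuild the endpoint without the query string and fragment
    endpoint = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    # parse_qsl percent-decodes values (e.g. %7C -> |) and keeps empty ones
    params = dict(parse_qsl(parts.query, keep_blank_values=True))
    return endpoint, params


endpoint, params = split_request_url(
    "https://www.example.com/api/reviews?page=2&filters=stars%7Cdate"
)
print(endpoint)  # https://www.example.com/api/reviews
print(params)    # {'page': '2', 'filters': 'stars|date'}
```

Converting to a dict keeps only the last value of a repeated parameter; if an API repeats keys, work with the list returned by parse_qsl instead.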

Note: Stay away from authenticated API calls (e.g., requests made by pages in sections accessible only to logged-in users). Those endpoints may expose private/confidential data, and targeting them could create legal issues. Also, when you authenticate a request (e.g., via your auth token), you’re linking the activity to your account, so the backend server can track your actions. In simpler terms, they know what you’re doing!

…and When It’s Not

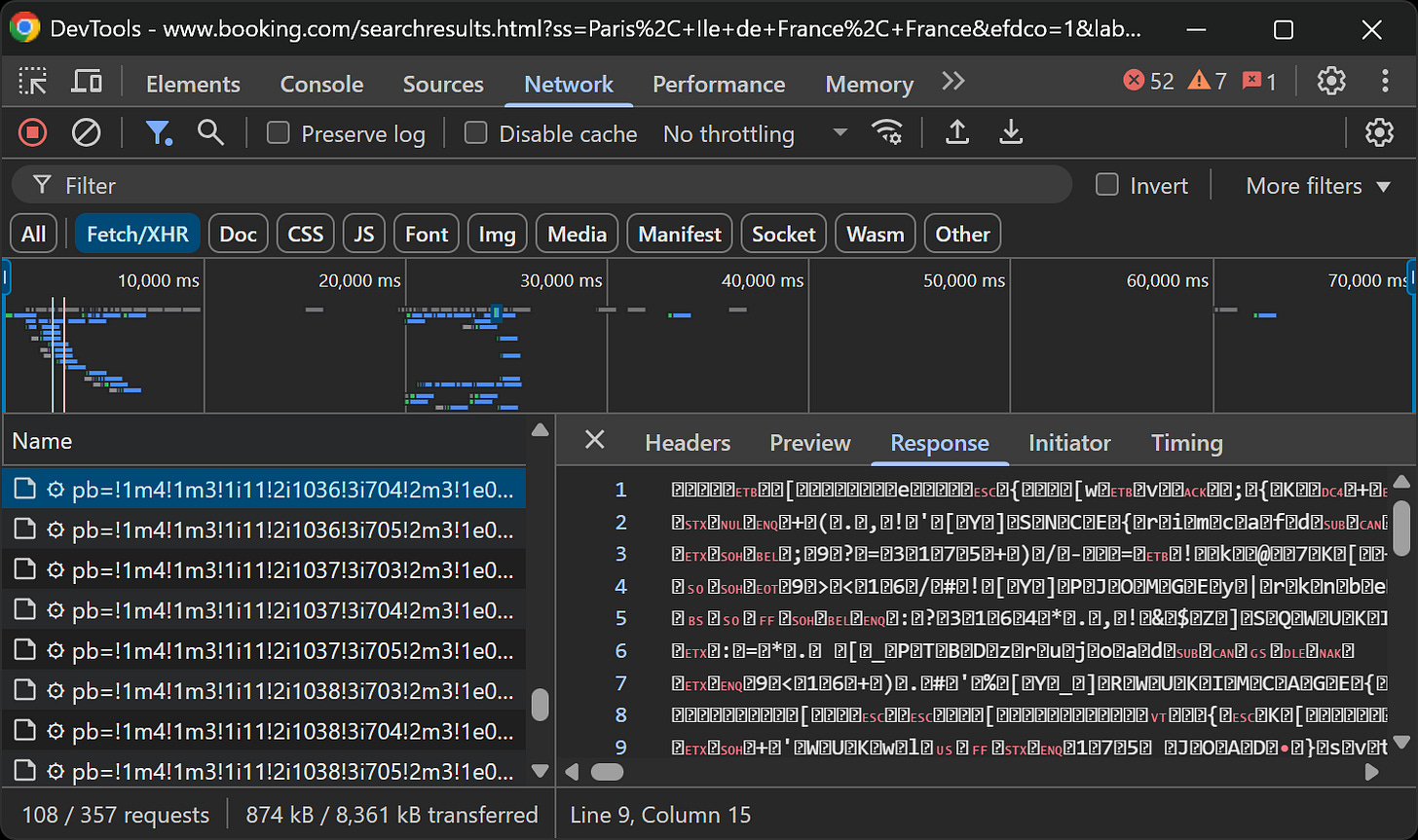

Unfortunately, although rare in my experience, there are situations where the API body, query parameters, or response are obfuscated or encrypted. For example, that is the case with Google Maps API calls from Booking.com:

Note how both the API query parameters and the response are encrypted.

In this case, the only way forward is to reverse-engineer how the data encryption/decryption works, which is rarely worth the effort. In such situations, it’s usually better to rely on traditional web scraping.

Another situation where you may not be able to apply API scraping is when the backend uses fingerprinting systems to block forged requests that don’t originate from a real browser on the target site. But, as you’re about to learn, there’s a solution for that…

This episode is brought to you by our Gold Partners. Be sure to have a look at the Club Deals page to discover their generous offers available for the TWSC readers.

💰 - 1 TB of web unblocker for free at this link

💰 - 50% Off Residential Proxies

💰 - Use TWSC for 15% OFF | $1.75/GB Residential Bandwidth | ISP Proxies in 15+ Countries

Required Tools to Implement API Web Scraping

You might think you only have to replicate those API calls with your favorite HTTP client, and that’s it. Well, that’s not always a good idea…

Using the right HTTP method and setting the same headers, cookies, query parameters, and body isn’t always enough. Why? Because of TLS fingerprinting!

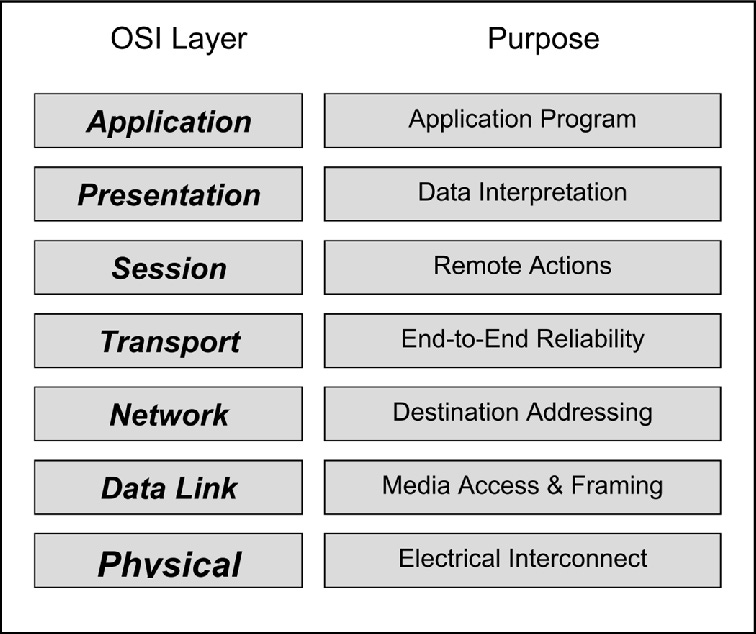

Browsers use TLS libraries that differ from those used by standard HTTP clients. As a result, the fingerprints produced by browsers aren’t the same as those of regular HTTP clients. This means that even if the request appears identical at the application level (same method, headers, cookies, and body), the API backend can still flag it as illegitimate by inspecting lower-level details, such as the TLS handshake that takes place before any HTTP data is exchanged:
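To make this more concrete, one widely used way to summarize a TLS ClientHello is JA3: it joins the TLS version, cipher suites, extensions, elliptic curves, and point formats into a string and hashes it with MD5. The sketch below is a simplified illustration of that idea, not a description of how any particular anti-bot vendor works, and the field values are made up for the example:

```python
import hashlib


def is_grease(value: int) -> bool:
    """GREASE values (RFC 8701) are 0x0A0A, 0x1A1A, ..., 0xFAFA; JA3 ignores them."""
    return (value & 0x0F0F) == 0x0A0A and (value >> 8) == (value & 0xFF)


def ja3(version: int, ciphers: list[int], extensions: list[int],
        curves: list[int], point_formats: list[int]) -> tuple[str, str]:
    """Return the JA3 string and its MD5 hex digest for the given ClientHello fields."""
    def join(values: list[int]) -> str:
        # Decimal values, dash-separated, in the order the client sent them
        return "-".join(str(v) for v in values if not is_grease(v))

    ja3_string = ",".join(
        [str(version), join(ciphers), join(extensions), join(curves), join(point_formats)]
    )
    return ja3_string, hashlib.md5(ja3_string.encode()).hexdigest()


# Two made-up clients: same TLS version and extensions, different cipher order
client_a = ja3(771, [0x0A0A, 4865, 4866, 4867], [0, 23, 65281], [29, 23, 24], [0])
client_b = ja3(771, [4866, 4867, 4865], [0, 23, 65281], [29, 23, 24], [0])

print(client_a[0])                 # 771,4865-4866-4867,0-23-65281,29-23-24,0
print(client_a[1] == client_b[1])  # False
```

Two clients that send identical HTTP headers but offer ciphers in a different order end up with different fingerprints, which is exactly the kind of lower-level mismatch a backend can flag. Real systems typically combine several such signals rather than relying on one hash.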

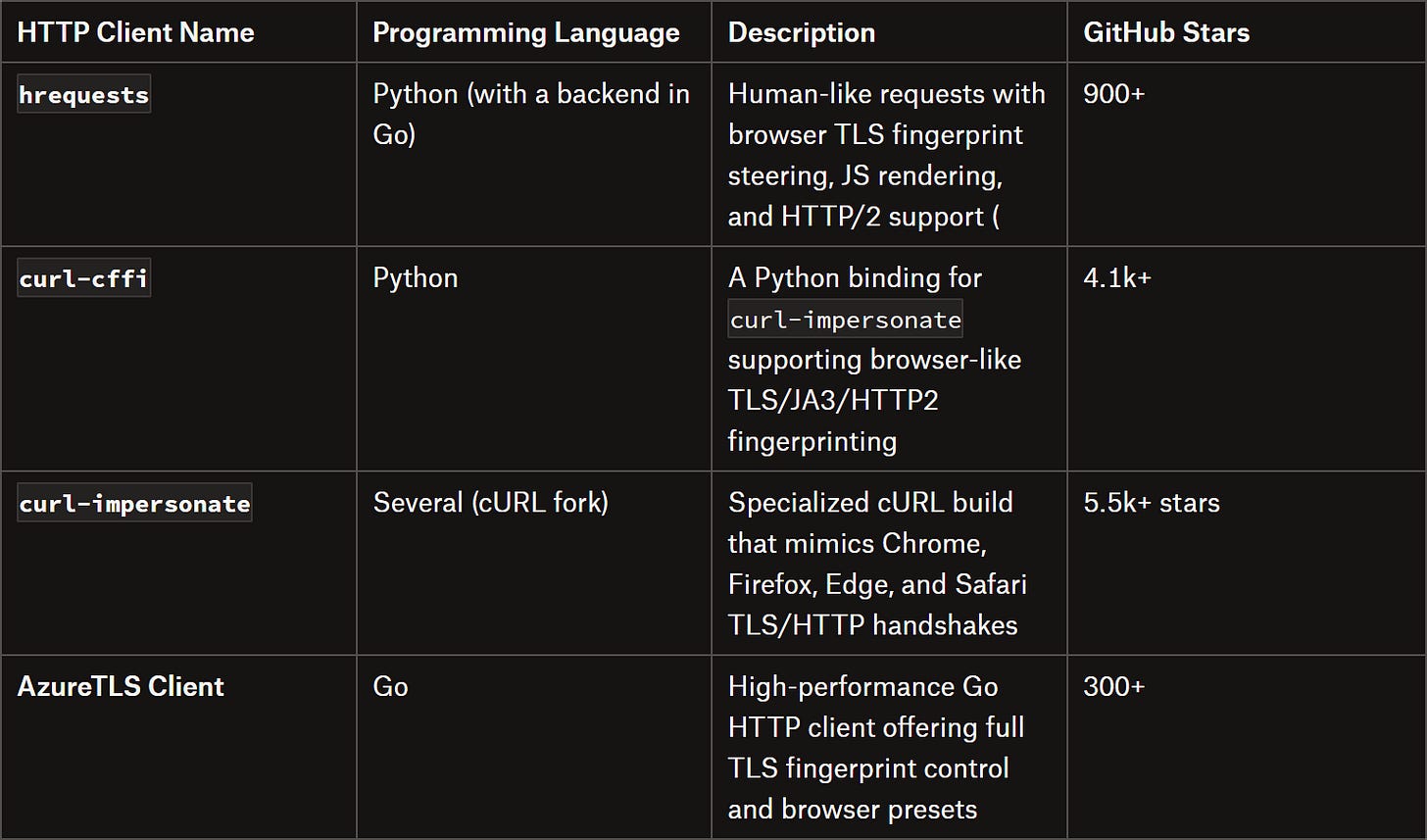

Long story short, when replicating calls in API scraping, it's best to use HTTP clients that support TLS fingerprint spoofing, like these:

Note: I didn’t mention Node.js libraries in the table because spoofing TLS fingerprints in Node.js is hard. The workaround is either tweaking cipher settings or using curl-impersonate-based clients.

Before continuing with the article, I wanted to let you know that I've started my community in Circle. It’s a place where we can share our experiences and knowledge, and it’s included in your subscription. Enter the TWSC community at this link.

Applying API Scraping Against a Real-World Site

Enough theory! Follow the steps below to learn how to apply API web scraping against a real website. The target site will be Yahoo Finance, which exposes historical stock data through an API.

Note: I’m going to use hrequests for API web scraping in Python, but you can easily adapt the snippet to any of the other HTTP clients I mentioned earlier.

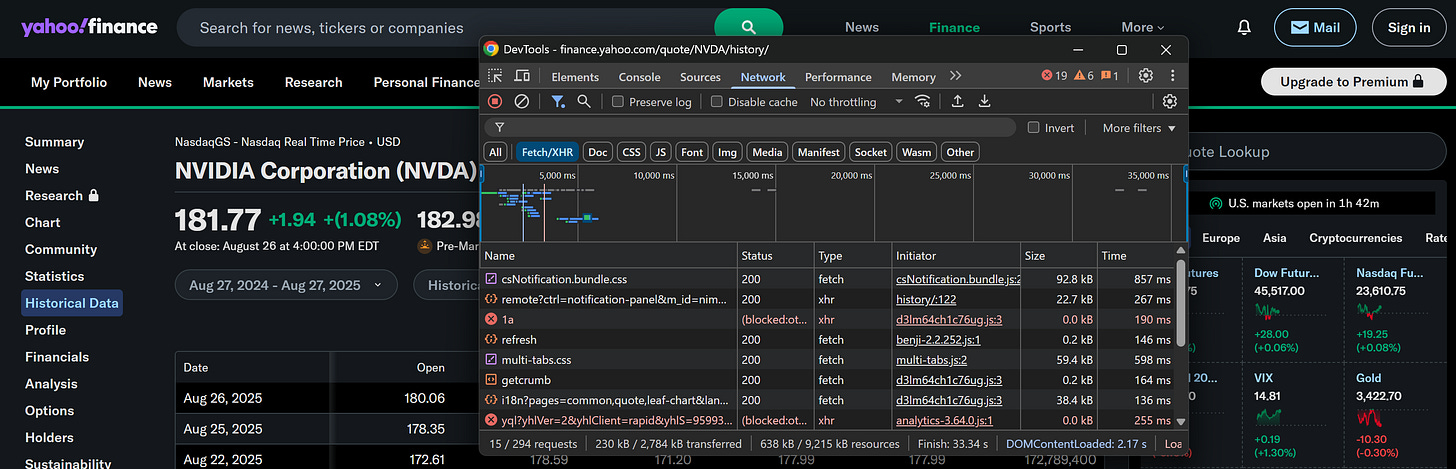

Step #1: Get Familiar with the Target Page and API Endpoints

Assume you’re interested in historical NVDA stock data from the following Yahoo Finance page:

Open DevTools, go to the Network tab, and reload the page:

You won’t notice any significant AJAX requests. That doesn’t mean the data you want isn’t exposed by an API, though.

Now, click on the “1Y” filter:

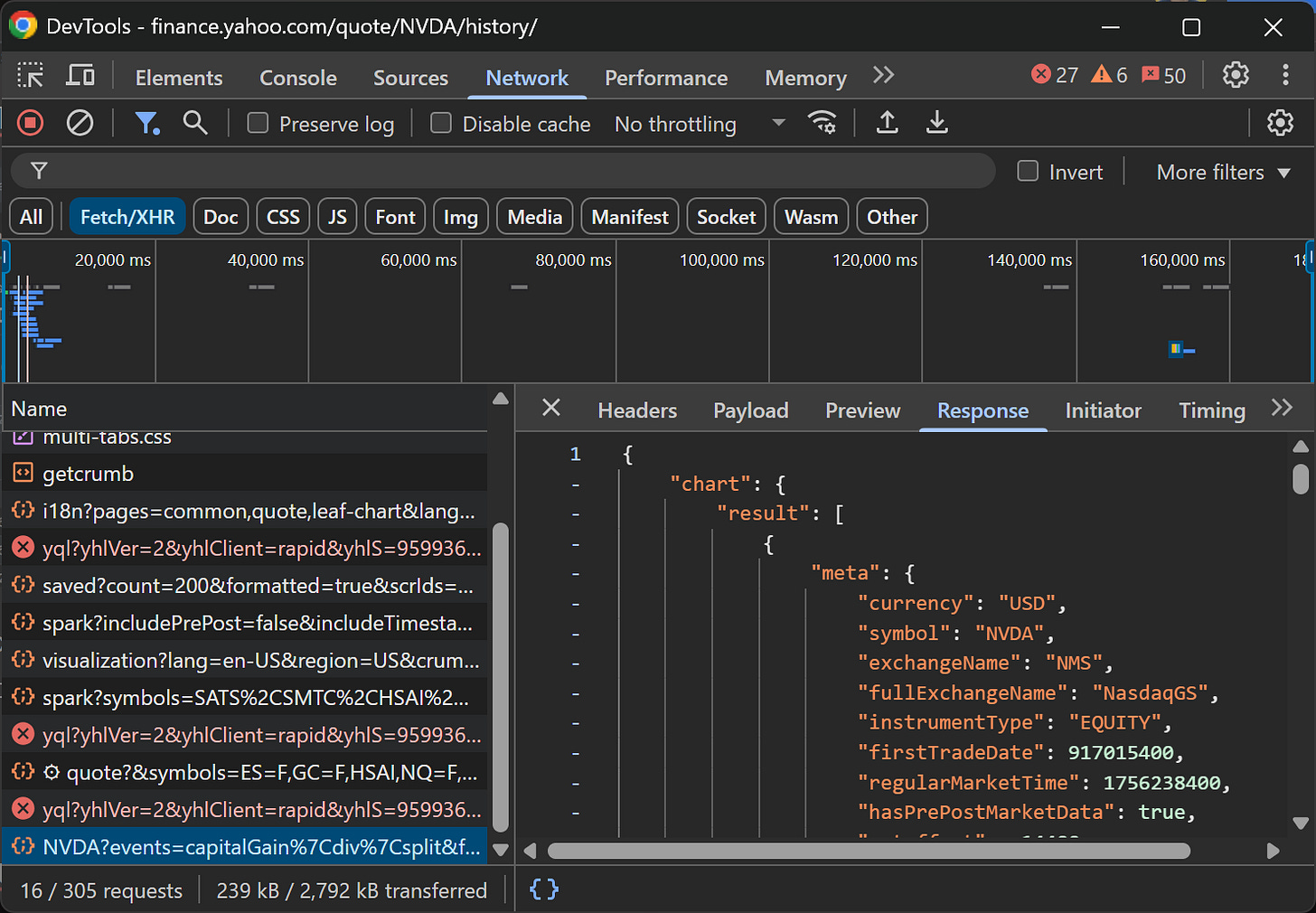

Check the Network tab again, and you’ll see a juicy API call:

Bingo!

Step #2: Replicate the API Call in hrequests

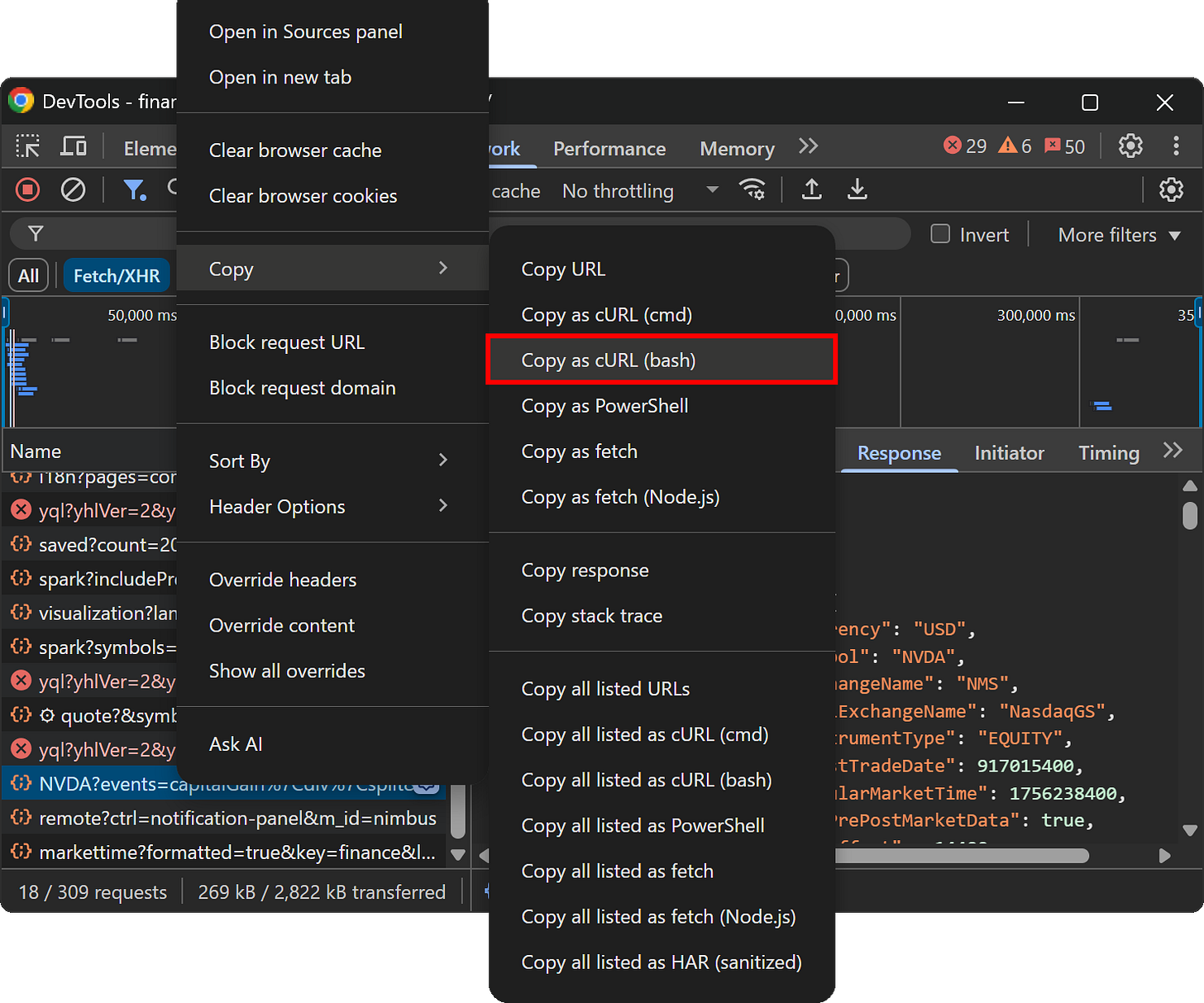

To reproduce the API request, you can either manually inspect the request headers and body and set them in your code or, more quickly, right-click on the request in DevTools and select the “Copy > Copy as cURL (bash)” option:

Here’s what you should get:

```bash
curl 'https://query1.finance.yahoo.com/v8/finance/chart/NVDA?events=capitalGain%7Cdiv%7Csplit&formatted=true&includeAdjustedClose=true&interval=1d&period1=1724759447&period2=1756295297&symbol=NVDA&userYfid=true&lang=en-US&region=US' \
  -H 'accept: */*' \
  -H 'accept-language: en-US,en;q=0.9' \
  -b 'A1=d=AQABBDvwrmgCEDschUoeY0qzKdahyIPpxIMFEgEBAQFBsGi4aNxH0iMA_eMCAA&S=AQAAAhiyU2WpXz79jNkMwXrUn4M; A3=d=AQABBDvwrmgCEDschUoeY0qzKdahyIPpxIMFEgEBAQFBsGi4aNxH0iMA_eMCAA&S=AQAAAhiyU2WpXz79jNkMwXrUn4M; A1S=d=AQABBDvwrmgCEDschUoeY0qzKdahyIPpxIMFEgEBAQFBsGi4aNxH0iMA_eMCAA&S=AQAAAhiyU2WpXz79jNkMwXrUn4M; PRF=t%3DNVDA; cmp=t=1756295232&j=0&u=1YNN; gpp=DBAA; gpp_sid=-1; _cb=Bfh7GUCUFa7R-WI0; _cb_svref=https%3A%2F%2Fwww.google.com%2F; _SUPERFLY_lockout=1; fes-ds-session=pv%3D3; _chartbeat2=.1756295232562.1756295298782.1.DFVJCt9HJ7_DuXFzQhUuwpCyV8fx.3' \
  -H 'origin: https://finance.yahoo.com' \
  -H 'priority: u=1, i' \
  -H 'referer: https://finance.yahoo.com/quote/NVDA/history/?period1=1724759447&period2=1756295297' \
  -H 'sec-ch-ua: "Not;A=Brand";v="99", "Google Chrome";v="139", "Chromium";v="139"' \
  -H 'sec-ch-ua-mobile: ?0' \
  -H 'sec-ch-ua-platform: "Windows"' \
  -H 'sec-fetch-dest: empty' \
  -H 'sec-fetch-mode: cors' \
  -H 'sec-fetch-site: same-site' \
  -H 'user-agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/139.0.0.0 Safari/537.36'
```

This makes it much easier to understand how the API call is structured. In most cases, with an HTTP client that supports browser-like TLS fingerprinting, it’s enough to set realistic User-Agent and Referer headers. Other times, you may need to set cookies, along with other special headers.
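If you’d rather not copy each header by hand, you can turn the copied command into Python dictionaries. Here’s a minimal sketch, assuming the command looks like the Chrome DevTools output above (a quoted URL followed by -H and -b options). parse_curl is a hypothetical helper, not part of any library, and it simply skips other flags such as --data-raw:

```python
import shlex


def parse_curl(command: str) -> dict:
    """Extract the URL, headers, and cookies from a 'Copy as cURL (bash)' command.

    Hypothetical helper: handles only the bare URL, -H/--header, and -b/--cookie
    options; any other flag (e.g. --data-raw, --compressed) is skipped.
    """
    # Drop bash line continuations, then let shlex deal with the quoting
    tokens = shlex.split(command.replace("\\\n", " "))
    url, headers, cookies = None, {}, {}
    i = 1  # tokens[0] is "curl"
    while i < len(tokens):
        token = tokens[i]
        if token in ("-H", "--header") and i + 1 < len(tokens):
            name, _, value = tokens[i + 1].partition(":")
            headers[name.strip()] = value.strip()
            i += 2
        elif token in ("-b", "--cookie") and i + 1 < len(tokens):
            # Cookie string format: "name1=value1; name2=value2"
            for pair in tokens[i + 1].split(";"):
                name, _, value = pair.strip().partition("=")
                if name:
                    cookies[name] = value
            i += 2
        elif not token.startswith("-") and url is None:
            url = token
            i += 1
        else:
            i += 1
    return {"url": url, "headers": headers, "cookies": cookies}


sample = """curl 'https://query1.finance.yahoo.com/v8/finance/chart/NVDA?interval=1d' \\
  -H 'accept: */*' \\
  -b 'A1=d=abc&S=xyz; PRF=t%3DNVDA' \\
  -H 'user-agent: Mozilla/5.0'"""

parsed = parse_curl(sample)
print(parsed["headers"])  # {'accept': '*/*', 'user-agent': 'Mozilla/5.0'}
print(parsed["cookies"])  # {'A1': 'd=abc&S=xyz', 'PRF': 't%3DNVDA'}
```

From there, you can drop headers and cookies one at a time and re-send the request to find the minimal set the endpoint actually requires.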

This is how you can reproduce the Yahoo Finance historical data API call with hrequests:

```python
# pip install hrequests
import hrequests

# API endpoint
url = "https://query1.finance.yahoo.com/v8/finance/chart/NVDA"

# Query params
params = {
    "events": "capitalGain|div|split",
    "formatted": "true",
    "includeAdjustedClose": "true",
    "interval": "1d",
    "period1": "1724759447",
    "period2": "1756295297",
    "symbol": "NVDA",
    "userYfid": "true",
    "lang": "en-US",
    "region": "US",
}

# Set the required headers
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/139.0.0.0 Safari/537.36",
    "Referer": "https://finance.yahoo.com/quote/NVDA/history/",
}

# Initialize a session for cookie management
with hrequests.Session() as session:
    # Make the GET HTTP request
    response = session.get(url, params=params, headers=headers)
    # JSON response parsing
    data = response.json()

# Print the data retrieved via API scraping
print(data)
```

Keep in mind that TLS fingerprinting is handled automatically in hrequests, so you don’t need to set any special arguments or options.
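The period1 and period2 query parameters appear to be Unix timestamps (seconds since the epoch) delimiting the requested range: in the captured call they’re 1724759447 and 1756295297, just under 365 days apart, which matches the “1Y” filter. Assuming that’s all they are, here’s a sketch for building them from a custom date range (period_params is a hypothetical helper):

```python
from datetime import datetime, timezone


def period_params(start: datetime, end: datetime) -> dict[str, str]:
    """Build period1/period2 query params for a date range.

    Assumes both values are Unix timestamps in seconds, as in the captured call.
    Naive datetimes are treated as UTC so the result doesn't depend on the
    machine's local timezone.
    """
    def to_unix(dt: datetime) -> int:
        if dt.tzinfo is None:
            dt = dt.replace(tzinfo=timezone.utc)
        return int(dt.timestamp())

    return {"period1": str(to_unix(start)), "period2": str(to_unix(end))}


print(period_params(datetime(2024, 1, 1), datetime(2025, 1, 1)))
# {'period1': '1704067200', 'period2': '1735689600'}
```

You could then merge the result into the params dictionary from the snippet above with params.update(period_params(...)) before sending the request.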

Step #3: Access the Scraped Data

Execute the Python script, and you’ll get a result similar to this:

```python
{
    'chart': {
        'result': [
            {
                'meta': {
                    'currency': 'USD',
                    'symbol': 'NVDA',
                    'exchangeName': 'NMS',
                    'fullExchangeName': 'NasdaqGS',
                    'instrumentType': 'EQUITY',
                    # ... other fields ...
                    'priceHint': 2,
                    'currentTradingPeriod': {
                        'pre': {'timezone': 'EDT', 'start': 1756281600, 'end': 1756301400, 'gmtoffset': -14400},
                        'regular': {'timezone': 'EDT', 'start': 1756301400, 'end': 1756324800, 'gmtoffset': -14400},
                        'post': {'timezone': 'EDT', 'start': 1756324800, 'end': 1756339200, 'gmtoffset': -14400}
                    },
                    'dataGranularity': '1d',
                    'range': '',
                    'validRanges': ['1d', '5d', '1mo', '3mo', '6mo', '1y', '2y', '5y', '10y', 'ytd', 'max']
                },
                'timestamp': [
                    1724765400, 1724851800, 1724938200, 1725024600, 1725370200,
                    # ... more timestamps ...
                ],
                'events': {
                    'dividends': {
                        '1726147800': {'amount': 0.01, 'date': 1726147800},
                        # ... more dividends ...
                    }
                },
                'indicators': {
                    'quote': [
                        {
                            'low': [
                                123.87999725341797, 122.63999938964844, 116.70999908447266,
                                # ... more lows ...
                            ],
                            'open': [
                                125.05000305175781, 128.1199951171875, 121.36000061035156,
                                # ... more opens ...
                            ],
                            'volume': [
                                303134600, 448101100, 453023300,
                                # ... more volumes ...
                            ],
                            'close': [
                                128.3000030517578, 125.61000061035156, 117.58999633789062,
                                # ... more closes ...
                            ]
                        }
                    ]
                }
            }
        ]
    }
}
```

Suppose you want to get a list of dictionaries containing the low, open, volume, and close values associated with each timestamp. You can achieve this by processing the data from the API response with:

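```python
from datetime import datetime

# API data retrieval...

# Access the main data fields
result = data["chart"]["result"][0]
timestamps = result["timestamp"]
quote = result["indicators"]["quote"][0]

# Combine into a list of dictionaries with a readable time
processed_data = []
for i, timestamp in enumerate(timestamps):
    processed_data.append({
        # fromtimestamp() converts to the machine's local timezone
        "time": datetime.fromtimestamp(timestamp).strftime("%Y-%m-%d %H:%M:%S"),
        "open": quote["open"][i],
        "low": quote["low"][i],
        "close": quote["close"][i],
        "volume": quote["volume"][i],
    })
```

Note that fromtimestamp() uses your machine’s local timezone: the first timestamp, 1724765400, corresponds to 13:30 UTC (the 9:30 a.m. Eastern market open), so the 15:30 values below reflect a machine set to UTC+2. At the end of the for loop, processed_data will contain: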

```python
[
    {'time': '2024-08-27 15:30:00', 'open': 125.05000305175781, 'low': 123.87999725341797, 'close': 128.3000030517578, 'volume': 303134600},
    {'time': '2024-08-28 15:30:00', 'open': 128.1199951171875, 'low': 122.63999938964844, 'close': 125.61000061035156, 'volume': 448101100},
    {'time': '2024-08-29 15:30:00', 'open': 121.36000061035156, 'low': 116.70999908447266, 'close': 117.58999633789062, 'volume': 453023300},
    # ... other values ...
    {'time': '2025-08-26 15:30:00', 'open': 180.05999755859375, 'low': 178.80999755859375, 'close': 181.77000427246094, 'volume': 167926400}
]
```

Great! The scraped data is now much easier to analyze and use.
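If you want to keep the rows for later analysis, the standard library’s csv module is enough. A minimal sketch (write_rows_csv is a hypothetical helper name), demonstrated with an in-memory buffer and a row copied from the output above:

```python
import csv
import io


def write_rows_csv(rows: list[dict], stream) -> None:
    """Write same-shaped dictionaries (like processed_data) as CSV with a header row."""
    if not rows:
        return
    writer = csv.DictWriter(stream, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)


# Demo with an in-memory buffer instead of a real file
buffer = io.StringIO()
write_rows_csv(
    [{"time": "2024-08-27 15:30:00", "open": 125.05000305175781, "low": 123.87999725341797,
      "close": 128.3000030517578, "volume": 303134600}],
    buffer,
)
print(buffer.getvalue())
```

To write to disk instead, pass a file opened with open("nvda_history.csv", "w", newline="", encoding="utf-8"); the csv documentation recommends newline="" for files used with csv writers, and the file name is just an example.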

Main Benefits and Disadvantages of API Scraping

Let me summarize the main pros and cons of API scraping based on my experience.

👍 Pros:

Data is already structured, usually in JSON format.

Accessing the API directly may help you bypass some anti-bot measures, like browser fingerprinting or CAPTCHA.

Requires only an HTTP client (which typically includes a JSON parser).

👎 Cons:

APIs may not provide all the data rendered on the page, sometimes requiring a hybrid scraping approach (API web scraping + traditional web scraping).

Some APIs return encrypted or obfuscated data (e.g., some Booking.com APIs).

API endpoints can change more frequently than DOM page structures, requiring frequent updates to scraping or field-accessing logic.
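Since field-accessing logic is what breaks when an undocumented endpoint changes, it helps to fail with a clear message that points at the missing field rather than a bare KeyError deep in your loop. A minimal sketch (get_path and ApiShapeError are my own illustrative names, not part of any library), using a trimmed-down version of the Yahoo Finance response shape shown earlier:

```python
from typing import Any, Union


class ApiShapeError(RuntimeError):
    """Raised when the API response no longer has the structure we expect."""


def get_path(data: Any, *path: Union[str, int]) -> Any:
    """Follow a path of dict keys / list indexes, reporting exactly where it breaks."""
    current = data
    for depth, key in enumerate(path):
        try:
            current = current[key]
        except (KeyError, IndexError, TypeError) as exc:
            walked = " -> ".join(str(step) for step in path[: depth + 1])
            raise ApiShapeError(f"Unexpected API response shape at: {walked}") from exc
    return current


response = {"chart": {"result": [{"timestamp": [1724765400, 1724851800]}]}}

print(get_path(response, "chart", "result", 0, "timestamp"))  # [1724765400, 1724851800]

try:
    get_path(response, "chart", "result", 0, "indicators", "quote")
except ApiShapeError as err:
    print(err)  # Unexpected API response shape at: chart -> result -> 0 -> indicators
```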

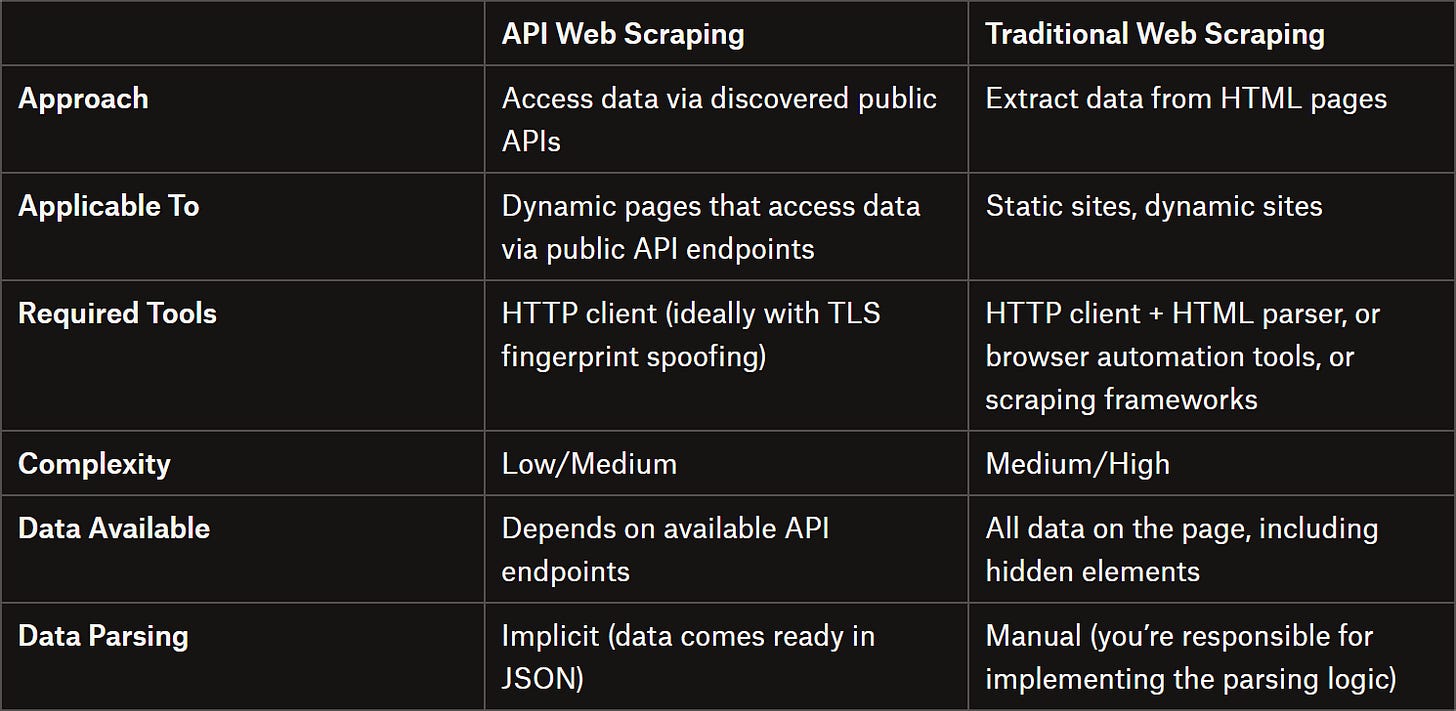

API Web Scraping vs Traditional Web Scraping

Conclusion

The goal of this post was to explain the API web scraping approach for automated online data retrieval. The idea is to tap into the public API endpoints that a site exposes, instead of relying on traditional HTML data parsing methods.

As I showed here, this method is generally straightforward, but it does have some pitfalls, which I have discussed and addressed.

I hope you found this article useful. Feel free to share your thoughts, questions, or experiences in the comments—looking forward to hearing from you!