What is Scrapy?

Scrapy is an open-source web crawling framework for Python used for extracting data from websites, developed and maintained by Zyte.

As described on Wikipedia, the Scrapy framework offers powerful features such as auto-throttling, rotating proxies, and rotating user agents, along with several other useful options. Because it is highly modular, it can be extended with many additional features useful for web scraping.
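To give an idea of what enabling some of these options looks like, here is a minimal sketch of a project's settings.py. The setting names are standard Scrapy settings, but the values, the user agent string, and the example.com contact URL are only illustrative assumptions. Proxy rotation is not shown, since as far as I know it usually needs a custom middleware or a plugin.

```python
# settings.py (fragment): illustrative values, tune them for your target site

# Let the AutoThrottle extension adapt the crawl speed to server latency.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 5           # initial download delay, in seconds
AUTOTHROTTLE_MAX_DELAY = 60            # upper bound for the delay, in seconds
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0  # average parallel requests per remote site

# Default User-Agent header sent with every request (hypothetical value).
USER_AGENT = "my-crawler (+https://example.com/contact)"
```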

How to use it

To use Scrapy, you need a basic knowledge of Python programming. The framework can be installed with pip, the package manager for Python. Once it is installed, you can create a new Scrapy project with the Scrapy CLI and start writing the spiders that scrape the data you need.

Scrapy has several built-in features that make it easy to use and effective. It includes support for handling HTTP requests and responses, following links, handling cookies and sessions, and processing data in the desired format. It also provides tools for logging, managing duplicate requests, and handling exceptions.
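Processing the scraped data usually happens in an item pipeline. The sketch below is a hypothetical pipeline, not something shipped with Scrapy: it assumes items are plain dicts and simply trims whitespace from string fields, using the long-standing `process_item(self, item, spider)` signature.

```python
class CleanTextPipeline:
    """Hypothetical pipeline that trims whitespace from string fields."""

    def process_item(self, item, spider):
        # Scrapy calls this method once for every item a spider yields.
        for key, value in item.items():
            if isinstance(value, str):
                item[key] = value.strip()
        # Return the item so that later pipelines (or the exporter) receive it.
        return item
```

To enable it, you would add something like `ITEM_PIPELINES = {"myproject.pipelines.CleanTextPipeline": 300}` to settings.py, where `myproject` is a placeholder for your project name.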

If you want a better understanding of how to create your first scraper, you can have a look at the official documentation or see my post about it.

Scrapy’s architecture

Again from the Scrapy documentation, we can get a deeper understanding of how Scrapy works by looking at its architecture.

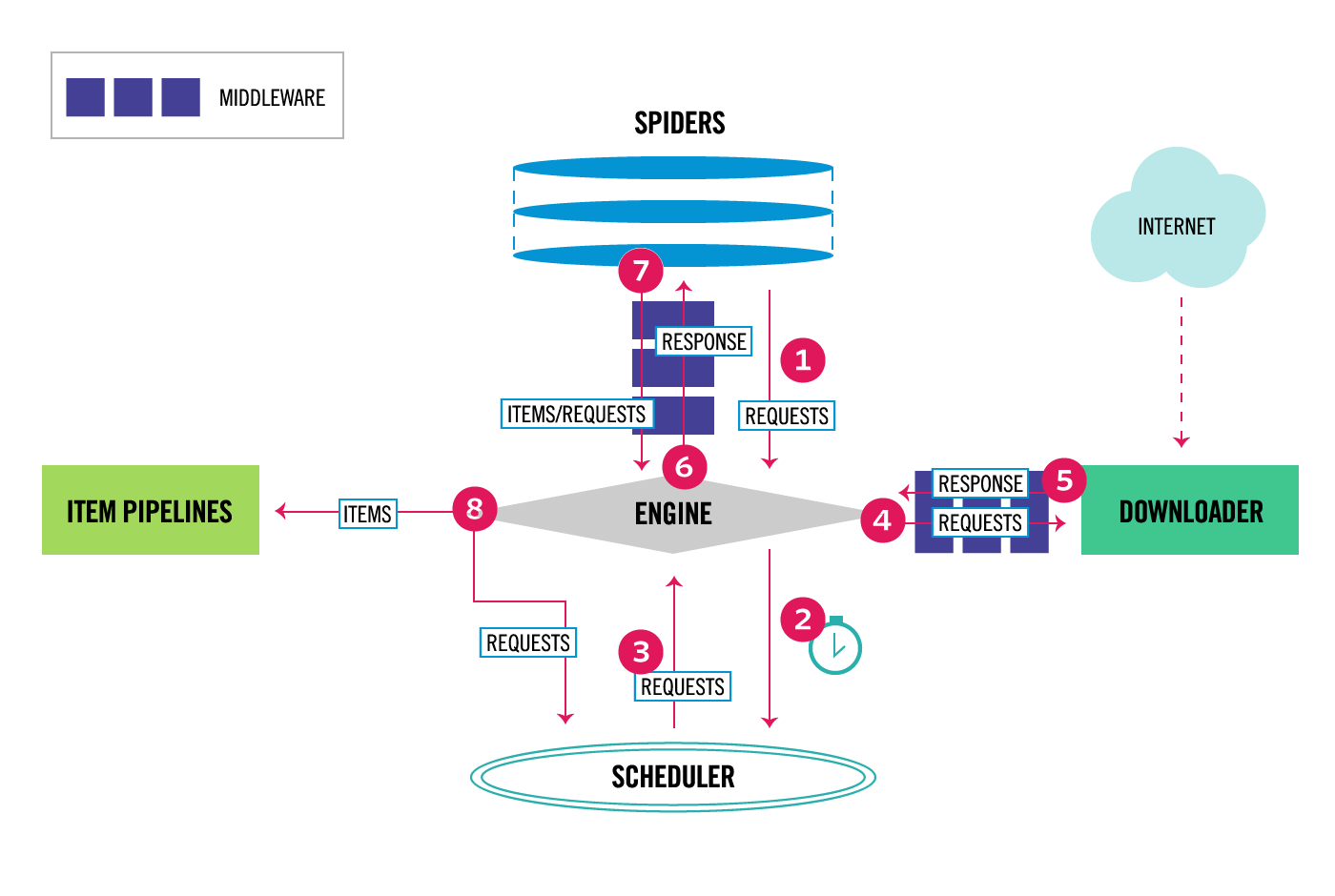

The data flow in Scrapy is controlled by the execution engine, and goes like this:

1. The Engine gets the initial Requests to crawl from the Spider.

2. The Engine schedules the Requests in the Scheduler and asks for the next Requests to crawl.

3. The Scheduler returns the next Requests to the Engine.

4. The Engine sends the Requests to the Downloader, passing through the Downloader Middlewares (see process_request()).

5. Once the page finishes downloading, the Downloader generates a Response (with that page) and sends it to the Engine, passing through the Downloader Middlewares (see process_response()).

6. The Engine receives the Response from the Downloader and sends it to the Spider for processing, passing through the Spider Middleware (see process_spider_input()).

7. The Spider processes the Response and returns scraped items and new Requests (to follow) to the Engine, passing through the Spider Middleware (see process_spider_output()).

8. The Engine sends processed items to Item Pipelines, then sends processed Requests to the Scheduler and asks for possible next Requests to crawl.

9. The process repeats (from step 3) until there are no more requests from the Scheduler.
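To make the data flow above more concrete, here is a toy simulation in plain Python. It is not how Scrapy is implemented: real Scrapy is asynchronous and has middlewares between the components, which are left out here. Every name in it (`FAKE_WEB`, `downloader`, `spider_parse`, `run_engine`) is hypothetical and only mirrors the roles of the Scheduler, Downloader, Spider, and Item Pipelines.

```python
from collections import deque

# Toy stand-in for the web: URL -> (page text, links found on that page).
FAKE_WEB = {
    "page-1": ("first page", ["page-2"]),
    "page-2": ("second page", []),
}


def downloader(url):
    """Downloader role: turn a request (here just a URL) into a response."""
    text, links = FAKE_WEB[url]
    return {"url": url, "text": text, "links": links}


def spider_parse(response):
    """Spider role: yield one scraped item, then new requests to follow."""
    yield {"item": response["text"]}
    for link in response["links"]:
        yield {"request": link}


def run_engine(start_urls):
    """Engine role: move requests, responses, and items between components."""
    scheduler = deque(start_urls)  # initial requests are scheduled first
    seen = set(start_urls)         # simple duplicate-request filter
    pipeline = []                  # stands in for the Item Pipelines

    while scheduler:                   # repeat until the scheduler is empty
        url = scheduler.popleft()      # scheduler hands over the next request
        response = downloader(url)     # downloader produces a response
        for result in spider_parse(response):  # spider processes the response
            if "item" in result:
                pipeline.append(result["item"])      # items go to the pipeline
            elif result["request"] not in seen:
                seen.add(result["request"])
                scheduler.append(result["request"])  # requests go back to the scheduler
    return pipeline


print(run_engine(["page-1"]))  # prints ['first page', 'second page']
```

The loop ends exactly when the scheduler has nothing left to return, which is the same stopping condition described in the last step above.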

If you want to see Scrapy in action, I recommend this video from John Watson Rooney with a tutorial for beginners.

This post is written by Pierluigi Vinciguerra (pier@thewebscraping.club)