The Lab #56: Bypassing PerimeterX 3

Testing the latest PerimeterX version by scraping Crunchbase public data

PerimeterX (which was acquired by Human Security some time ago) is one of the most important anti-bot solutions, together with Cloudflare, Datadome, and Kasada, as recognized by Forrester in their industry report.

Before diving into the technical details of the solution I’ve worked on, let’s try to understand more about PerimeterX.

What is PerimeterX and how can you detect it?

PerimeterX Bot Defender (a.k.a. HUMAN Bot Defender), as mentioned before, is one of the best-known anti-bot solutions, used by websites like Crunchbase, Zillow, SSense, and many others.

By navigating the documentation, we can understand that its architecture consists of three different components:

HUMAN Sensor: A JavaScript snippet inserted on your website that loads the HUMAN Sensor to your browser. The Sensor collects and sends data to analyze user and device behavior, as well as network activities. It assesses the authenticity of the device and application, and tracks user behavior and interaction.

HUMAN Detector: A cloud-based component that evaluates sensor and enforcer data in real-time using machine learning and behavioral analytics to create a risk score. This risk score identifies whether a user is malicious or not and is sent back to the user's device in a secure and encrypted token.

HUMAN Enforcer: A lightweight module installed on your choice of web application, load balancer, or CDN. It is responsible for the enforcement functionality of the HUMAN solution.

Each installation can be configured with different layers of security and options.

In my experience, one of the most commonly enabled options is the Human Challenge, the big “Press and Hold” button typical of PerimeterX.

As an alternative, Google reCAPTCHA can be used on the website.

In both cases, PerimeterX Bot Defender throws invisible challenges at the browser in order to detect any red flags in the scraper’s configuration or behavior.

If the website requires additional protection on a limited portion of the scope (let’s say some rare items on sale or the checkout process), a more powerful bot detection system can be activated, called Hype Sale.

In this case, more resource-consuming (and slower to solve) challenges are used to detect bots, which is also why this approach can’t be applied to the whole website without heavily penalizing the user experience.

How can we detect a website using PerimeterX?

The easiest way, as always, is to use the Wappalyzer browser extension, although its results should always be double-checked, since its database may be out of date.

Detecting PerimeterX is quite easy, both by having a look at the website’s cookies and at the network calls it makes.

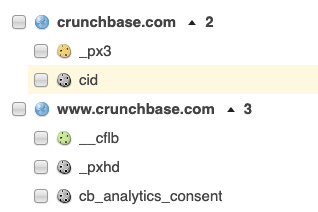

Also from the PerimeterX documentation website, here’s the list of the cookies you should see on a website using it.

Another way, a bit more difficult, is opening the network tab in the Developer’s tools and looking for requests made to PerimeterX-related domains like:

perimeterx.net

px-cdn.net

px-cloud.net

pxchk.net

px-client.net

like in the following real-world case.
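To automate the same check, here is a minimal sketch that flags request URLs whose host matches one of the domains listed above. The function names are hypothetical, and the list of URLs is assumed to come from wherever you record network traffic (a HAR export or a browser automation hook, for example).

```python
from urllib.parse import urlparse

# Domains listed above as PerimeterX-related.
PX_DOMAINS = (
    "perimeterx.net",
    "px-cdn.net",
    "px-cloud.net",
    "pxchk.net",
    "px-client.net",
)


def is_perimeterx_url(url: str) -> bool:
    """Return True if the URL's host is one of PX_DOMAINS or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in PX_DOMAINS)


def perimeterx_requests(urls):
    """Keep only the request URLs that point to PerimeterX-related hosts."""
    return [u for u in urls if is_perimeterx_url(u)]
```

Matching on the exact domain or a dot-prefixed suffix avoids false positives from unrelated hosts that merely end with the same characters.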

Now that we have seen how to detect PerimeterX, let’s understand how we can bypass it to scrape public data.

First tests with the browser

Let’s look for a website that’s protected only by PerimeterX 3, so we can be sure about our solution.

Ideally, we should get the _px3 cookie, and no other anti-bot software should be installed.
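As a small sketch of that check, the helper below looks for the _px3 cookie in a list of cookie dictionaries. The function name is hypothetical, and the input shape (dictionaries with a "name" key) is assumed to match what Playwright's `context.cookies()` returns in Python.

```python
def has_px3_cookie(cookies) -> bool:
    """Return True if any cookie in the list is named _px3.

    `cookies` is assumed to be a list of dicts with a "name" key,
    such as the list returned by Playwright's BrowserContext.cookies().
    """
    return any(cookie.get("name") == "_px3" for cookie in cookies)
```

With a Playwright browser context at hand, this could be called as `has_px3_cookie(context.cookies())` after the page has loaded.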

After some searching, the designated target website has been selected: Crunchbase!

Since the website has an open section and more content behind a login page, the scope of our tests will be only the public information available without logging in. I strongly discourage you from using these (or other) techniques for scraping paywalled data: you might run into issues related to violating the Terms of Service and intellectual property rights.

With these premises in place, let’s study what could work and what could not by simply browsing the website in incognito mode.

The first test consists of directly opening a company page in Incognito mode: as an example, we can open the Databoutique.com page, but we get blocked by the Human Challenge.

So, the second test is to open the Crunchbase home page and then the Databoutique page.

In this case, we’re successful!

When we create the scraper, we must consider that we cannot start a brand-new browser session and go directly to a Crunchbase company profile page: we should at least open the home page first (well, SPOILER: we’ll see that it won’t be enough).

What doesn’t work

I started with the easiest way possible: with a Scrapy spider (also adding the scrapy-impersonate package), I tried to open Google, then the Crunchbase home page, and then a company page, but with no success.

    from scrapy import Request

    # Methods of the spider class; self.HEADER holds the request headers.
    def start_requests(self):
        # Step 1: open Google first
        url = 'https://www.google.com/'
        yield Request(url, callback=self.get_home_page, headers=self.HEADER, meta={'impersonate': 'chrome110'}, dont_filter=True)

    def get_home_page(self, response):
        # Step 2: open the Crunchbase home page
        url = 'https://www.crunchbase.com/'
        yield Request(url, callback=self.read_company_page, headers=self.HEADER, meta={'impersonate': 'chrome110'}, dont_filter=True)

    def read_company_page(self, response):
        # Step 3: open a company page
        url = 'https://www.crunchbase.com/organization/luma-ai'
        yield Request(url, callback=self.end_test, headers=self.HEADER, meta={'impersonate': 'chrome110'}, dont_filter=True)

    def end_test(self, response):
        print("Test ended")

Unless you’re using an unblocker with JS rendering, you won’t be able to bypass the challenges thrown by the website with a simple Scrapy scraper. We need a browser automation tool like Playwright.
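To tell whether a response from a spider like this one was actually blocked, a rough heuristic can help. The sketch below is an assumption and does not come from the PerimeterX documentation: it treats an HTTP 403 status, or a body containing markers such as `px-captcha` or the "Press & Hold" text, as a sign of the challenge page. The function name and the marker list are illustrative and may need adjusting for a given website.

```python
# Illustrative markers (assumption): strings that may appear in a challenge page.
BLOCK_MARKERS = ("px-captcha", "press & hold")


def looks_blocked(status: int, body: str) -> bool:
    """Heuristically decide whether a response is a PerimeterX challenge page."""
    text = body.lower()
    return status == 403 or any(marker in text for marker in BLOCK_MARKERS)
```

In a Scrapy callback this could be used as `looks_blocked(response.status, response.text)`. Keep in mind that Scrapy, by default, does not pass non-2xx responses to callbacks, so the spider would also need something like `handle_httpstatus_list = [403]` to see the blocked response at all.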

As always, if you want to have a look at the code, you can access the GitHub repository, available for paying readers. You can find this example inside the folder 56.PERIMETERX3.

If you’re one of them but don’t have access to it, please write me at pier@thewebscraping.club to get it.

My first try with Playwright on a local machine

The first thing I tried was to load a Crunchbase company page straight from its URL, using my default Playwright configuration.

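    from playwright.sync_api import sync_playwright

    def run_chrome(playwright):
        CHROMIUM_ARGS = [
            '--no-first-run',
            '--disable-blink-features=AutomationControlled',
            '--start-maximized'
        ]
        # Launch the installed Chrome in headed mode, without the automation flag
        browser = playwright.chromium.launch(channel="chrome", headless=False, slow_mo=200, args=CHROMIUM_ARGS, ignore_default_args=["--enable-automation"])
        # no_viewport=True lets the page use the full maximized window
        context = browser.new_context(
            no_viewport=True
        )
        page = context.new_page()
        # Go straight to the company page, without visiting the home page first
        page.goto('https://www.crunchbase.com/organization/databoutique-com', wait_until="commit")

    with sync_playwright() as playwright:
        run_chrome(playwright)

And guess what?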

Press and hold again!

It was not a matter of fingerprint, since I was starting my scraper from my own Mac, nor a matter of IP, since I was at home, using a clean one.

So what could be the issue?

And then, I remembered the behavior when testing the website with my browser.

My second try with Playwright on a local machine