The Great Web Unblocker Benchmark: Kasada edition

Let's squeeze our Unblockers to see who's bypassing Kasada

Welcome to a new episode of the Great Web Unblocker Benchmark, our series where we compare the performance of the best-known unblocker solutions on the market.

After the first introductory episode in March, today we’re going into more detail by testing them against Kasada, one of the hardest anti-bots to bypass.

Testing methodology and disclaimers

During our web scraping projects, we often encounter anti-bot solutions protecting the target website.

In this case, we have two options: improve our scraper to bypass them, or delegate the task to a third-party solution like a Web Unblocker. Both options have pros and cons. Building your own bypass takes development time and will probably require a browser automation tool, which is slower and more expensive to run. Depending on the size of your scraping scope, and once development time is taken into account, delegating the task to an unblocker could be the cheaper option in the long run.

Web Unblockers work like an API, so we can plug them into our browserless scrapers. For this reason, all the tests in this article are run with a simple Scrapy spider.
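
As a rough illustration of what "works like an API" can mean in practice, many unblockers are exposed as an authenticated proxy endpoint that a plain HTTP client points at. The sketch below uses only Python’s standard library. The host, port, and credentials are placeholders, not any vendor’s real values, and each provider documents its own endpoint and options. In Scrapy, the same kind of URL is typically passed through `request.meta["proxy"]`.

```python
from urllib.parse import quote
from urllib.request import OpenerDirector, ProxyHandler, build_opener


def unblocker_proxy_url(user: str, password: str, host: str, port: int) -> str:
    """Build a proxy URL with percent-encoded credentials."""
    return f"http://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}"


def build_unblocker_opener(proxy_url: str) -> OpenerDirector:
    """Return an opener that sends HTTP and HTTPS traffic through the proxy."""
    handler = ProxyHandler({"http": proxy_url, "https": proxy_url})
    return build_opener(handler)


# Placeholder values (hypothetical): use the endpoint from your provider's dashboard.
PROXY = unblocker_proxy_url("USERNAME", "p@ss:word", "unblocker.example.com", 60000)
opener = build_unblocker_opener(PROXY)
# opener.open("https://www.example.com/", timeout=120) would perform the request.
```

No browser is involved on our side: the scraper sends a normal HTTP request and the unblocker is expected to deal with the anti-bot before returning the page.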

For this episode, I’ve created a scraper that reads the homepage and then 100 different product URLs of an e-commerce website protected by Kasada, canadagoose.com.

The Unblockers will be tested according to three measures:

a score from 0 to 100 for their success rate in scraping data from the website. The score is 0 if the Unblocker could not scrape the homepage or any URL from the list. Every URL from the list scraped successfully adds one point, up to a maximum of 100.

the time spent scraping these URLs, only for those Unblockers that were able to bypass the homepage

the cost for the scraping, considering only the pages scraped successfully
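
The first measure can be sketched as a small function. This is only an illustration of the scoring rule described above, not the code used for the benchmark. Treating a failed homepage as an automatic 0 is my reading of the rule, and the function name is hypothetical.

```python
def unblocker_score(homepage_ok: bool, product_results: list[bool]) -> int:
    """Score from 0 to 100: one point per product URL scraped successfully.

    Assumption: if the homepage fails, the run stops there and the score is 0.
    """
    if not homepage_ok:
        return 0
    return min(sum(product_results), 100)


# Example: homepage passed, 96 product pages out of 100 parsed correctly.
example_score = unblocker_score(True, [True] * 96 + [False] * 4)
```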

Given these premises, let’s see the engagement rules:

All the unblockers are tested using the same Scrapy spider, with different options or setups that are specific to each solution

To be considered successfully scraped, a request should not only return status code 200 but also be parsed successfully by the scraper. In fact, Kasada may return an empty HTML page, and this case must be counted as an error.

Results are not shown to vendors in advance, to avoid any influence on the final result. This could also lead to errors on my side: I might have missed an option that would get past the anti-bot. If this is the case, I’ll update this article, so keep an eye on it even after publication.

The scraping time I’ve entered in the benchmark is calculated by Scrapy itself and it’s the elapsed time of the first successful run.

This is not a paid review of unblockers, but a quantitative test. If your company sells a web unblocker not listed here and wants to participate in the next editions of the test, please write to me at pier@thewebscraping.club

This test is created for educational purposes, to help readers understand which tool best fits their needs when scraping public data. None of these tools should be used to damage the target website’s business.
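
Going back to the success rule in the list above (a 200 status code is not enough, the page must also be parseable), here is a minimal sketch of such a check using only the standard library. Using a non-empty `<h1>` as the sign of a real product page is an assumption made for the example; the actual spider extracts its own fields.

```python
from html.parser import HTMLParser


class _H1Collector(HTMLParser):
    """Collects the text found inside <h1> tags."""

    def __init__(self) -> None:
        super().__init__()
        self._in_h1 = False
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self._in_h1 = True

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        if self._in_h1:
            self.chunks.append(data)


def is_successful(status: int, body: str) -> bool:
    """True only if the status is 200 and a non-empty <h1> can be extracted."""
    if status != 200 or not body.strip():
        return False
    parser = _H1Collector()
    parser.feed(body)
    parser.close()
    return bool("".join(parser.chunks).strip())
```

With this kind of check, a 200 response carrying an empty or skeleton page is counted as a failure, the same as a 429.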

Who’s participating in this round?

In this edition we’ll see, in strict alphabetical order: Bright Data, Infatica, NetNut, Oxylabs, Smartproxy, ZenRows, and Zyte.

While their ultimate goal (bypassing anti-bots) is the same, the technology under the hood differs from provider to provider, and so do their pricing models.

Smartproxy and Oxylabs have a pay-per-GB pricing model, which is convenient when you’re scraping API endpoints but gets expensive when you scrape pure HTML code, like in these tests.

Bright Data, ZenRows, and Zyte, instead, have a pay-per-request model, with some differences: Bright Data charges 3 USD per 1,000 requests, which becomes 6 USD when scraping a domain included in their premium list. In our case, the website we’re testing is not on that list.

ZenRows and Infatica use a credit system: you buy credits and every basic request costs one credit. ZenRows sells 250k credits, enough for 250k basic requests, for 69 EUR; Infatica sells 250k credits for 25 USD.

Zyte API has dynamic pricing calculated internally, so I could get the exact scraping cost from their dashboard.
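
To make the three pricing models easier to compare, here is a small sketch of the arithmetic. The Bright Data, ZenRows, and Infatica figures are the ones quoted above. The page size and the per-GB price in the last example are hypothetical, chosen only to show how a per-GB bill scales with HTML weight, and one GB is assumed to be 10^9 bytes.

```python
def per_request_cost(requests: int, price_per_1000: float) -> float:
    """Pay-per-request: every request costs the same, whatever the page size."""
    return requests * price_per_1000 / 1000


def per_gb_cost(total_bytes: int, price_per_gb: float) -> float:
    """Pay-per-GB: the bill grows with the size of the responses."""
    return total_bytes / 1_000_000_000 * price_per_gb


def credit_unit_price(pack_price: float, pack_credits: int) -> float:
    """Credit system: price of one credit, i.e. of one basic request."""
    return pack_price / pack_credits


# Figures quoted in this article (homepage + 100 product pages = 101 requests).
bright_data_run = per_request_cost(101, 3.0)        # USD, standard domain
bright_data_premium = per_request_cost(101, 6.0)    # USD, premium-list domain
zenrows_credit = credit_unit_price(69.0, 250_000)   # EUR per basic request
infatica_credit = credit_unit_price(25.0, 250_000)  # USD per basic request

# Hypothetical figures: 101 pages of 1.5 MB each at an assumed 10 USD per GB.
html_run = per_gb_cost(101 * 1_500_000, 10.0)
```

Note that a credit price only tells you the cost of a basic request: providers usually charge more credits for heavily protected websites, so the real cost per page depends on the options enabled.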

Let’s see how they behave against Kasada, in alphabetical order. I can anticipate it will be a tough test, and not all the Unblockers will nail it. Kasada is a strong anti-bot solution but still not so widespread, so it’s not the first one on the radar of companies developing Unblockers. In fact, some Unblockers could not bypass it at all.

Who got blocked? ❌

I wanted to test the Unblockers with Kasada since they’re an incumbent player with great technology, but still not mainstream.

While Cloudflare is the undisputed leader and it’s the first solution any company is targeting when creating Unblockers, given the number of websites protected by it, Kasada probably is not on the radar of most of these companies.

This is fully understandable: following the Pareto Principle, everyone focuses on the biggest threat first. But the web is made of a long tail of websites and anti-bot solutions that need to be bypassed, and I think it’s just a matter of time before these Unblockers are able to handle them too.

As of today, the following unblockers failed to scrape the target website:

Infatica stopped at the home page with a 429 error, which is the hallmark of Kasada.

The requests made with Zyte API, instead, returned a 200 code for every page, but the API was not able to retrieve the HTML code. When testing the product pages in the playground on their website, the API is (sometimes) able to get a screenshot but cannot return the HTML, which is a pity.

There are five APIs remaining, so let’s see which one performed better.

Bright Data ✅

The domain is not on Bright Data’s “premium list”, which means we could benefit from the lower pricing tier, 0.003 USD per request, so we’ve spent 0.303 USD for 101 pages.

In the first run, the scraper was able to retrieve 96 product pages out of 100, which is excellent.

The issue in this case was the execution time: 3480 seconds (58 minutes) for only 101 pages.

NetNut ✅

Let’s see how the new NetNut unblocker behaves in this challenge.

The scraper was able to correctly scrape 97 items out of 100, with an execution time of 595 seconds.

It’s a great result, considering that the NetNut Website Unblocker was released just three months ago.

The price of the solution is currently undisclosed, so we cannot compare the cost of this execution with the other ones.

Oxylabs ✅

In the previous episode, Oxylabs was the best choice for bypassing Kasada and also in this case the results are pretty good.

We received 96 valid items back, just like Bright Data, but in only 10 minutes and for a cost of 0.1 USD. Great result!

Smartproxy ✅

Here, in the first run, we got 92 items out of 100, so roughly on the same level as Bright Data, but the huge difference is in the response time: 413 seconds (7 minutes).

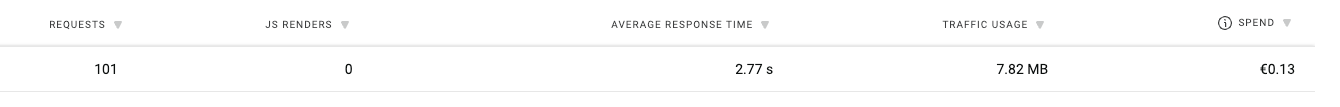

The cost for this extraction is 0.13 EUR, as we can see from their dashboard, which makes it one of the cheapest options at the moment.

ZenRows ✅

Compared to the previous benchmark, ZenRows this time was able to retrieve data from the website, scraping 95 items out of 100.

Since we used 101 requests for heavily protected websites, we spent 0.7 EUR, making this solution the most expensive one.

The response time is also the highest one: 4608 seconds (about 77 minutes).

Final remarks

Here’s the recap of our tests for the unblockers that were able to bypass Kasada.

The score is pretty similar for all five solutions, while the differences in cost and execution time are the main factors that differentiate the solutions.

The NetNut Website Unblocker is the solution with the best success score, 97 out of 100.

The Smartproxy Site Unblocker instead is the best solution in terms of response time, while the Oxylabs Web Unblocker is the cheapest solution.
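
For readers who prefer to see the numbers side by side, the sketch below rebuilds the recap from the figures reported in each section and derives the three winners. Two assumptions are made here: the Oxylabs time is entered as 600 seconds because the text only says "10 minutes", and EUR and USD amounts are compared at face value, without any currency conversion.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Result:
    name: str
    score: int                # product pages scraped out of 100
    seconds: int              # elapsed time of the run
    cost: Optional[float]     # nominal cost of the run; None if undisclosed
    currency: Optional[str]


RESULTS = [
    Result("Bright Data", 96, 3480, 0.303, "USD"),
    Result("NetNut", 97, 595, None, None),
    Result("Oxylabs", 96, 600, 0.10, "USD"),   # "10 minutes" in the text
    Result("Smartproxy", 92, 413, 0.13, "EUR"),
    Result("ZenRows", 95, 4608, 0.70, "EUR"),
]

best_score = max(RESULTS, key=lambda r: r.score)
fastest = min(RESULTS, key=lambda r: r.seconds)
# Assumption: EUR and USD amounts are compared at face value, without conversion.
priced = [r for r in RESULTS if r.cost is not None]
cheapest = min(priced, key=lambda r: r.cost)

for r in sorted(RESULTS, key=lambda r: r.seconds):
    per_page = r.seconds / 101  # homepage + 100 product URLs
    print(f"{r.name:<12} score={r.score:>3}  {r.seconds:>5}s  {per_page:5.1f}s/page")
```

The per-page figure is a simple average over the 101 requests of the run, so it also includes the time spent on pages that ended up failing.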

Thanks to all the companies involved in the test. In the next episode, we’ll test the solutions against another anti-bot.

I hope you liked this second edition of “The Great Web Unblocker Benchmark”. We’ll have the next one in September.
