Web Scraping from 0 to Hero: Selenium

Web Scraping with Selenium and webdrivers

Welcome back to a new episode of “Web Scraping from 0 to Hero”, our biweekly free web scraping course provided by The Web Scraping Club.

Let’s continue our tour of the most popular tools for web scraping, staying within the Python landscape.

After Scrapy and Playwright, today we’re looking at another browser automation framework that is also widely used for web scraping: Selenium.

How the course works

The course is, and always will be, free. As always, I’m here to share and not to make you buy something. If you want to say “thank you”, consider subscribing to this substack with a paid plan. It’s not mandatory but appreciated, and you’ll get access to the whole “The LAB” articles archive, with 40+ practical articles on more complex topics and its code repository.

We’ll cover free-to-use packages and solutions, and if any commercial ones appear, it’s because I’ve already tested them and they solve problems I couldn’t solve otherwise.

At first, I imagined this course as a monthly issue, but as I was writing down the table of contents, I realized it would take years to finish. So it will probably come out biweekly, filling the gaps in the publishing plan without taking too much space away from more in-depth articles.

The collection of articles can be found using the tag WSF0TH and there will be a section on the main substack page.

What is Selenium?

Selenium is an open-source automation tool widely used for web scraping and automated testing, its original and main purpose. It’s a versatile instrument for developers and data analysts, enabling automated navigation, interaction, and data extraction from web pages with precision. Initially developed to streamline and automate web application testing processes, Selenium has evolved into a comprehensive tool that supports programmable web browser control to simulate user interactions efficiently.

The tool's architecture is quite flexible, supporting multiple programming languages such as Python, Java, C#, and Ruby. This wide-ranging support extends its utility across various user groups, from experienced developers to beginners in programming.

On the browser side, Selenium is compatible with the leading web browsers, including Chrome, Firefox, Edge, Internet Explorer, and Safari.

What’s the difference with Playwright?

As mentioned before, Selenium and Playwright are both open-source automation tools designed for web testing and automation, but they differ significantly in their approach, architecture, and capabilities.

Architecture and Language Support:

Selenium operates through a WebDriver protocol, acting as an intermediary between test scripts and the browser. It supports multiple programming languages, including Java, C#, Python, Ruby, and JavaScript, making it versatile for various development environments.

Playwright, developed by Microsoft, interacts with browsers more directly: it talks to Chromium over the Chrome DevTools Protocol and ships patched builds of Firefox and WebKit that expose their own automation protocols. It supports JavaScript/TypeScript, Python, C# and Java. This direct interaction allows for more efficient execution of commands and finer control over the browser.

Browser Support:

Both tools support major browsers, including Chrome, Firefox, and Safari. However, Playwright extends its support to include newer Chromium-based browsers and offers experimental support for mobile browsers through WebKit. This wider range of browser support in Playwright is useful in web scraping since sometimes browsers like Brave are needed to spoof our fingerprint.

Execution Speed and Reliability:

Playwright is designed to be faster and more reliable than Selenium, primarily due to its direct communication with browsers and the ability to run tests in parallel across different contexts. This results in quicker test execution times and improved test suite efficiency.

Selenium may experience slower execution times due to its reliance on the WebDriver protocol, which requires communication back and forth between the test script, the WebDriver, and the browser.

API and Feature Set:

Playwright provides a richer API for browser automation, including features like auto-waiting for elements, network request interception, and built-in support for headless testing. These features are designed to reduce flakiness and improve the reliability of tests.

Selenium, while offering a comprehensive set of features for web automation, may require additional tools or libraries to achieve similar functionality provided out-of-the-box by Playwright, such as handling network requests or integrating visual regression testing.

My two cents: I prefer Playwright, since I find it easier to manage and more flexible for web scraping.

What’s a WebDriver for Selenium?

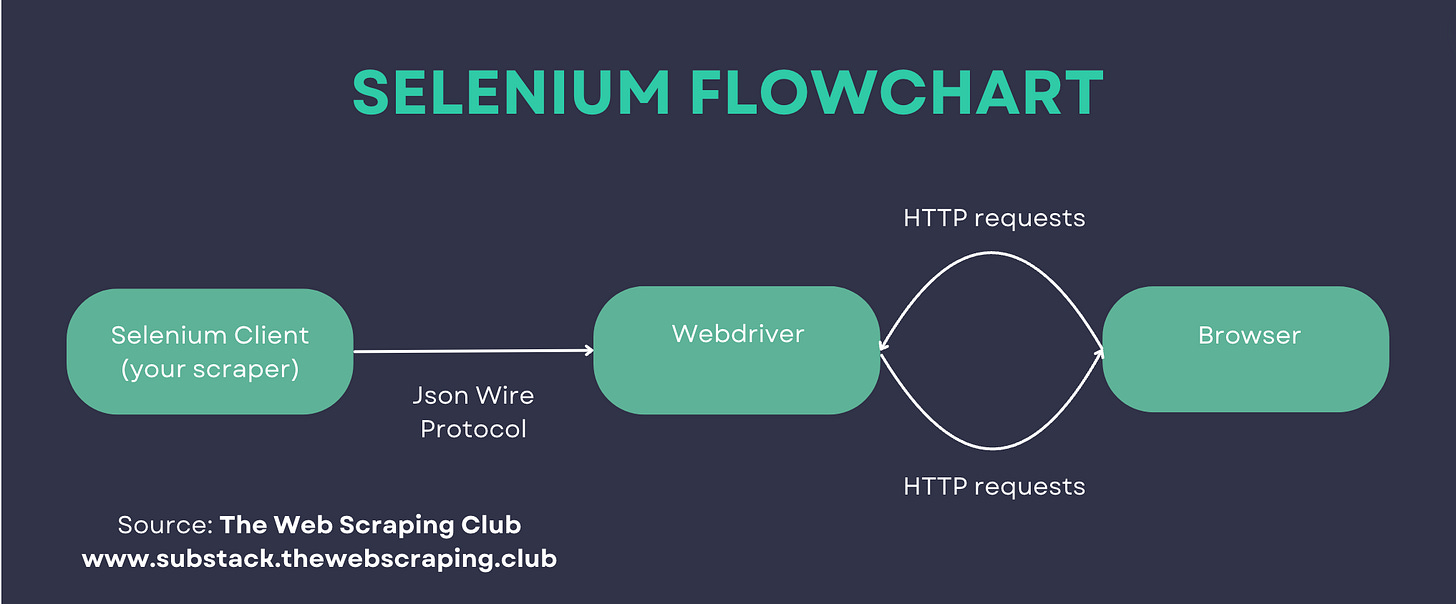

While describing the differences between Playwright and Selenium, we mentioned the WebDriver component. But what is a WebDriver, and how does it work?

A WebDriver in the context of Selenium is a crucial component that acts as a link between Selenium tests and web browsers. It is essentially a browser-specific driver that enables communication between the Selenium script and the browser's native API, allowing for the execution of web automation tasks such as navigating to a URL, clicking on links, filling out forms, and retrieving web content.

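In older Selenium versions, every command mapped to a REST endpoint defined by the JSON Wire Protocol; since Selenium 4, the W3C WebDriver standard has taken its place, keeping the same model of one HTTP endpoint per command.

The protocol carries requests between the client library and the browser driver’s HTTP server through these REST endpoints. Because the driver server cannot work with the client’s native objects (a Python dict, a Java object, and so on), commands are serialized (converting object data to JSON) before being sent, and responses are de-serialized (converting JSON back to objects) when they come back.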
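To make the serialization step concrete, here is a minimal sketch that turns a few commands into the HTTP method, path and JSON body a driver server expects. It follows the W3C WebDriver endpoint layout (`POST /session`, `POST /session/{id}/url`, `POST /session/{id}/element`, `DELETE /session/{id}`); the helper names and the session id are hypothetical, and real client libraries send richer capabilities than shown here.

```python
import json
from typing import Any, Optional, Tuple

# Hypothetical helpers: each returns (HTTP method, path, JSON body or None),
# following the W3C WebDriver endpoint layout.
Request = Tuple[str, str, Optional[str]]

def new_session(browser_name: str) -> Request:
    body = {"capabilities": {"alwaysMatch": {"browserName": browser_name}}}
    return "POST", "/session", json.dumps(body)

def navigate_to(session_id: str, url: str) -> Request:
    return "POST", f"/session/{session_id}/url", json.dumps({"url": url})

def find_element(session_id: str, css: str) -> Request:
    body = {"using": "css selector", "value": css}
    return "POST", f"/session/{session_id}/element", json.dumps(body)

def delete_session(session_id: str) -> Request:
    return "DELETE", f"/session/{session_id}", None

def parse_response(raw: str) -> Any:
    # De-serialization: W3C responses wrap the result in a "value" key
    return json.loads(raw)["value"]

if __name__ == "__main__":
    for method, path, body in (
        new_session("chrome"),
        navigate_to("abc123", "https://example.com"),
        find_element("abc123", "h1"),
        delete_session("abc123"),
    ):
        print(method, path, body)
    print(parse_response('{"value": {"sessionId": "abc123", "capabilities": {}}}'))
```

Whatever language the script is written in, what reaches the driver is just this kind of method, path and JSON body.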

Each major web browser (e.g., Chrome, Firefox, Edge, Safari) has its own implementation of WebDriver, designed to interact with the browser in a way that mimics user actions as closely as possible.

The WebDriver works by implementing a browser-specific protocol. This protocol is a set of rules and commands that the WebDriver uses to communicate with the browser's API. When a Selenium script is executed, the WebDriver translates the Selenium commands into requests that are understood by the browser's API. These requests are then sent to the browser, which performs the requested actions. The browser's response to these actions is sent back to the WebDriver, which then translates it into a format that can be understood and used by the Selenium script.

This interaction between the WebDriver and the browser's API is facilitated through HTTP requests. The WebDriver acts as an HTTP server, receiving commands from the Selenium script (the client) and sending them to the browser. The browser, in turn, executes these commands using its native API and returns the results back to the WebDriver. This process allows for seamless automation of web interactions as if they were being performed by a human user.
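The client/server round trip can be reproduced with nothing but the Python standard library. The sketch below starts a toy HTTP server standing in for the driver (it only understands a navigation command and records the URL instead of driving a real browser), then sends a JSON-serialized command to it the way a client library would. The class and function names and the session id are hypothetical; a real driver such as ChromeDriver implements the full W3C command set.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

class FakeDriverHandler(BaseHTTPRequestHandler):
    """Toy stand-in for a browser driver: accepts POST /session/<id>/url."""
    visited = []  # URLs "navigated" to, shared across requests

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")  # de-serialize the command
        parts = self.path.strip("/").split("/")
        if len(parts) == 3 and parts[0] == "session" and parts[2] == "url":
            # A real driver would now call the browser's native API
            FakeDriverHandler.visited.append(payload["url"])
            self._reply(200, {"value": None})
        else:
            self._reply(404, {"value": {"error": "unknown command"}})

    def _reply(self, status, body):
        data = json.dumps(body).encode()  # serialize the response
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, *args):
        pass  # keep the demo quiet

def send_command(base_url, path, body):
    """Client side: serialize the command to JSON and POST it over HTTP."""
    req = urllib.request.Request(
        base_url + path,
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["value"]

if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), FakeDriverHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = f"http://127.0.0.1:{server.server_port}"
    print(send_command(base, "/session/demo/url", {"url": "https://example.com"}))
    print(FakeDriverHandler.visited)
    server.shutdown()
    server.server_close()
```

Running a server on port 0 lets the operating system pick a free port, which keeps the demo from clashing with a real driver that might already be listening locally.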

The architecture of WebDriver enables it to work independently of the specific language bindings used to write Selenium scripts. This means that whether a script is written in Python, Java, C#, or any other language supported by Selenium, the WebDriver can interpret and execute the commands.

Integrating WebDrivers in a Selenium Scraper

To integrate WebDrivers into a Selenium scraper, follow these steps:

Download the WebDriver: First, identify the browser you intend to automate (e.g., Chrome, Firefox, Edge) and download the corresponding WebDriver. Make sure the version of the WebDriver matches the version of the browser you are using to avoid compatibility issues. Since Selenium 4.6, the bundled Selenium Manager can also download a matching driver automatically when none is found on your PATH.

For Chrome, download ChromeDriver.

For Firefox, download GeckoDriver.

For Edge, download EdgeDriver.

Setting Up the WebDriver: Once downloaded, extract the WebDriver from its archive and place it in a directory on your system. It's recommended to add the directory containing the WebDriver to your system's PATH environment variable. This makes the WebDriver accessible to Selenium scripts from any location on your system.

Configuring Selenium to Use the WebDriver: In your Selenium script, you need to configure Selenium to use the downloaded WebDriver. This is typically done by specifying the path to the WebDriver executable or ensuring it's located in a directory included in your system's PATH.

Here's an example of how to set up Selenium with the ChromeDriver in Python:

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service

# Specify the path to ChromeDriver if it's not in your PATH
driver_path = '/path/to/chromedriver'

# Initialize the WebDriver for Chrome (Selenium 4 passes the driver path
# through a Service object; the old executable_path argument has been removed)
driver = webdriver.Chrome(service=Service(executable_path=driver_path))

# Now you can use `driver` to navigate and interact with web pages
driver.get('https://example.com')
```

Executing Selenium Commands: With the WebDriver initialized, you can now use Selenium commands to interact with the browser. These commands allow you to navigate to web pages, interact with page elements, and extract data as needed.

Closing the Browser: After your automation tasks are complete, it's important to properly close the browser and quit the WebDriver session to free up system resources.

```python
driver.quit()
```
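Two of the steps above (putting the driver on your PATH and matching its version to the browser) are easy to check programmatically. The sketch below uses `shutil.which` to look a driver up on the PATH and compares major version numbers extracted from version strings. The sample strings imitate the output of `chromedriver --version` and `google-chrome --version`, but treat their exact format as an assumption; the function names are hypothetical, and comparing only the major version is a deliberately loose check.

```python
import re
import shutil
from typing import Optional

def find_driver(name: str = "chromedriver") -> Optional[str]:
    """Return the full path of the driver executable if it is on PATH, else None."""
    return shutil.which(name)

def major_version(version_output: str) -> int:
    """Extract the major number from a string like 'ChromeDriver 124.0.6367.91 (...)'."""
    match = re.search(r"(\d+)(?:\.\d+)+", version_output)
    if match is None:
        raise ValueError(f"no version number found in {version_output!r}")
    return int(match.group(1))

def versions_match(driver_output: str, browser_output: str) -> bool:
    """Loose compatibility check: compare major versions only."""
    return major_version(driver_output) == major_version(browser_output)

if __name__ == "__main__":
    print(find_driver())  # a path string, or None if chromedriver is not on PATH
    driver_out = "ChromeDriver 124.0.6367.91 (example build info)"  # assumed format
    browser_out = "Google Chrome 124.0.6367.91"  # assumed format
    print(versions_match(driver_out, browser_out))
```

Running a check like this before starting a long scraping job turns a cryptic "session not created" failure into an explicit message about a missing or mismatched driver.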

Similar to what we’ve seen with Playwright’s connection over CDP to remote sessions, Selenium scrapers can connect to a remote WebDriver (for example, a Selenium Grid) with the following code:

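```python
from selenium import webdriver

options = webdriver.ChromeOptions()
server = 'http://localhost:4444'  # example address of the remote WebDriver server, e.g. a Selenium Grid
driver = webdriver.Remote(command_executor=server, options=options)
```

Now we’re ready to create our first scraper with Selenium, which is something we’ll do in the next post of “Web Scraping From 0 to Hero”.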