Automating LinkedIn Scraping Using Its Hidden APIs

Let’s learn how to scrape LinkedIn job listings in a simplified way by retrieving data from its hidden APIs

LinkedIn is a treasure trove of useful data, but if you’ve ever tried to scrape it, you know how challenging that can be. That’s particularly true given the limitations of publicly accessible pages. Fortunately, there is a solution, which is obtaining data directly from LinkedIn’s hidden APIs.

In this post, I’ll show you how to scrape LinkedIn job listings using its hidden API endpoints with a Python script!

Main Problems with Scraping LinkedIn

Scraping LinkedIn the traditional way, which means using an HTTP client + HTML parser duo (like Requests + Beautiful Soup) or browser automation tools like Playwright or Selenium, may not be the best approach.

Why not? Based on my experience, there are three main LinkedIn-specific reasons:

The infamous LinkedIn login wall: Try visiting LinkedIn in an incognito tab, and you’ll often encounter a login prompt that blocks access to the page. Sometimes it’s just a dismissible pop-up. Other times, it completely replaces the page content, leaving no data to scrape. Now, as long as you're scraping publicly accessible data, you should be fine. But if you’re thinking about automating the login process, keep in mind that this can raise legal concerns (more on that later in the post).

Inconsistent page structures: LinkedIn pages vary wildly. Some include full job descriptions, salary information, and company details—others don’t. Similarly, some public profile pages have sections that others do not. This inconsistency makes programmatic parsing error-prone and fragile, especially at scale. To handle that variability reliably, you might even need AI-powered data parsing.

Lots of moving parts: LinkedIn relies heavily on infinite scroll, client-side rendering, and dynamic pagination. Handling these complex navigation patterns reliably requires sophisticated automation logic, which increases development time and maintenance costs. Plus, it demands advanced scraping skills.

The bottom line is that traditional scraping isn’t always ideal for LinkedIn. A better approach? Getting data directly from LinkedIn’s hidden API endpoints!

Note: Don’t forget about common challenges with web scraping, such as the fact that web pages often change their structure over time—usually much more frequently than APIs do. While API endpoints can sometimes be removed, their responses rarely change drastically overnight (instead, they're typically versioned so that the same endpoint is available in v1, v2, and so forth).

Finding the Hidden LinkedIn API Endpoints

If you’ve ever used LinkedIn, you know that most of its pages are dynamic. As mentioned before, they involve infinite scrolling, client-side rendering, and other dynamic behaviors.

Most of those interactions are implemented using AJAX, a mechanism that lets web pages fetch data dynamically by making HTTP requests to servers (usually, by calling RESTful or GraphQL API endpoints).

For those APIs to be accessible by web pages running in the browser, the endpoints must be public. Also, since they need to work on public pages where users aren’t logged in, they cannot be protected by authentication.

In other words, LinkedIn’s hidden API endpoints are public and free to access. The only challenge is discovering them—and that’s exactly what I’ll show you here!

Step #0: Acknowledge the LinkedIn Login Wall

Suppose you want to scrape LinkedIn job listings. Naturally, the endpoints exposing that data would be part of LinkedIn’s “Jobs” page. So what might you be tempted to do?

You open your browser in incognito mode (to ensure a fresh session where you're not logged in—important for avoiding legal issues), go to Google, and search for “linkedIn jobs”:

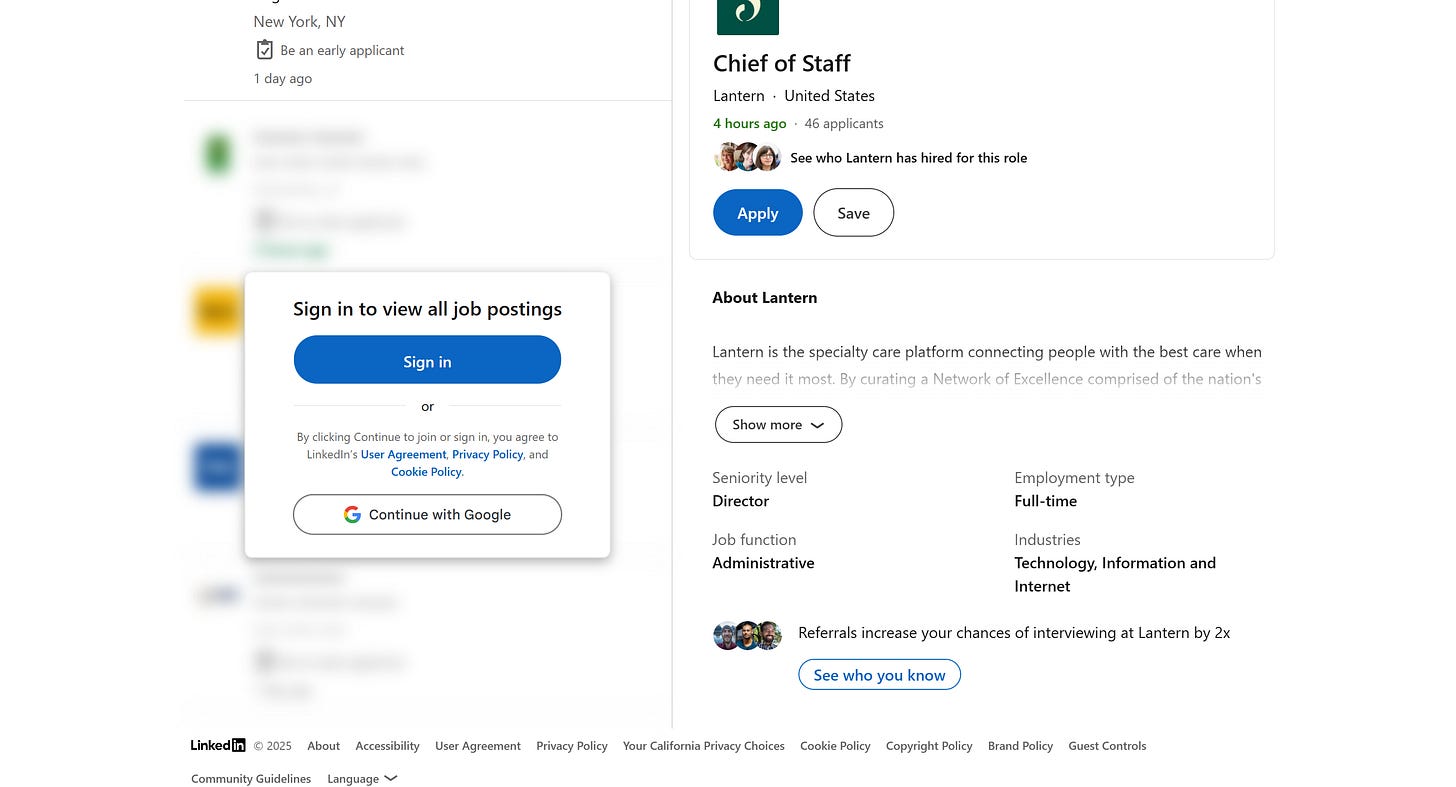

Clicking the first result brings you to the /jobs LinkedIn page. And what do you see? The blocking LinkedIn login wall!

Even if you inspect the raw HTML returned by the server, you’ll see there’s no job data hidden on the page. That means the login prompt wasn’t loaded dynamically—it is the actual response from LinkedIn’s “Jobs” page.

Now think about it, how does that even make sense?

Well, that happened to me several times, and at first, I assumed there was some kind of random mechanism that occasionally showed the real jobs page and other times returned the login wall. After all, I clearly remembered being able to access a public LinkedIn jobs page in the past.

At that point, I thought scraping LinkedIn was game over. But it’s not. That login wall is just a clever trick LinkedIn started using recently to push visitors toward creating an account. Follow along, as I’ll show you how to legally work around it!

Step #1: Access the Real LinkedIn Jobs Page

During my research, I found a way to get around the LinkedIn login wall on the Jobs page. First, visit the LinkedIn homepage in incognito mode, then click on the “Jobs” link on the top navbar:

This time, you’ll land on this page:

Behind the login modal, you’ll notice the actual LinkedIn “Jobs” page—with the job data you’re after. Strange, right? Why does this work when previous attempts led straight to a full login wall?

Here’s the trick: look closely at the URL. It’ll be something like:

https://www.linkedin.com/jobs/search?trk=guest_homepage-basic_guest_nav_menu_jobs

That’s different from the URL you get when visiting the “Jobs” page via Google search:

https://www.linkedin.com/jobs

As you can tell, these are two separate pages. LinkedIn appears to be prioritizing the /jobs page (the one that shows up on Google) for SEO to steer more users toward logging in, likely to increase conversion rates.

Pro tip: Based on my tests, the trk=guest_homepage-basic_guest_nav_menu_jobs query parameter seems to play a role: with it in the URL, the actual Jobs page loads consistently instead of the full login wall.

Step #2: Get Familiar With the LinkedIn Jobs Search Page

To access the job listings, simply click the “X” to close the login prompt modal. You’ll now see the full LinkedIn job search page:

From here, you can perform any job search. However, as you scroll down, you’ll notice that infinite scrolling (which loads more job postings) doesn’t work unless you log in:

Fortunately, as you’re about to see, you can find more job postings by tapping directly into LinkedIn’s hidden APIs—another huge benefit of scraping LinkedIn this way.

Step #3: Discover the LinkedIn Jobs API Endpoint

To get the LinkedIn Jobs API endpoint, right-click anywhere on the page and select the “Inspect” option to open your browser’s DevTools. From there, go to the “Network” tab and apply the “Fetch/XHR” filter to view AJAX requests made by the page.

Now, reload the page, perform a job search (e.g., for “software engineer” positions in New York), and try to trigger a dynamic loading event—by scrolling down or adding filters to your search.

Watch the API calls appearing in the DevTools panel. Look for the one responsible for loading the job postings dynamically.

However, as of this writing, there’s a major caveat: LinkedIn appears to have removed dynamic loading sections from the public version of the Jobs Search page.

In other words, it's currently hard (or, maybe, not even possible) to trigger an interaction that reveals the useful public API endpoints. But from my experience, I know that wasn’t always the case—so, there's still hope!

Instead of giving up, you can use the Wayback Machine to access an older version of the target page. For instance, visit the snapshot of the LinkedIn Jobs Search page taken on August 15, 2024, at 1:32:38 PM, which you can find at this URL:

https://web.archive.org/web/20240815133238/https://www.linkedin.com/jobs/search/?position=1&pageNum=0

Once the page loads, scroll down to trigger infinite loading while keeping DevTools open:

In the “Fetch/XHR” section of the “Network” tab, you’ll notice an endpoint like this:

Bingo! That’s the LinkedIn Jobs API endpoint. Specifically, it’s a RESTful GET endpoint:

https://www.linkedin.com/jobs-guest/jobs/api/seeMoreJobPostings/search?position=1&pageNum=0&start=25

Be sure to remove the "https://web.archive.org/web/…/" prefix added by the Wayback Machine so you’re using the raw LinkedIn API URL. Despite coming from a 2024 snapshot, this endpoint still works today—meaning you can use it programmatically to access fresh LinkedIn job data.

I’ll show you exactly how in the next chapter!
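As a side note on the Wayback Machine prefix mentioned above: if you collect several endpoint URLs from snapshots, you may want to strip that prefix programmatically. Here's a minimal sketch. The strip_wayback_prefix name and the regular expression are illustrative assumptions about the snapshot URL format (a numeric timestamp, optionally followed by a short modifier such as if_), so treat it as a starting point rather than a complete parser:

```python
import re

# Matches "https://web.archive.org/web/<timestamp><optional modifier>/"
# Assumption: the timestamp is numeric and the modifier is lowercase letters/underscores
_WAYBACK_PREFIX = re.compile(r"^https?://web\.archive\.org/web/\d+[a-z_]*/")


def strip_wayback_prefix(url):
    """Return the original URL embedded in a Wayback Machine snapshot URL.

    URLs that don't start with the Wayback prefix are returned unchanged.
    """
    return _WAYBACK_PREFIX.sub("", url, count=1)


snapshot_url = (
    "https://web.archive.org/web/20240815133238/"
    "https://www.linkedin.com/jobs-guest/jobs/api/seeMoreJobPostings/search"
    "?position=1&pageNum=0&start=25"
)
print(strip_wayback_prefix(snapshot_url))
# https://www.linkedin.com/jobs-guest/jobs/api/seeMoreJobPostings/search?position=1&pageNum=0&start=25
```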

How to Scrape LinkedIn via Its Hidden API Endpoints

Now, I’ll guide you through the process of connecting to the LinkedIn API to scrape data directly from it. The code snippets below are written in Python, but you can easily adapt them to any other programming language.

Step #1: Study the Endpoint

Based on my research and past experience on this topic, the LinkedIn Job Search API has the following GET endpoint:

https://www.linkedin.com/jobs-guest/jobs/api/seeMoreJobPostings/search?keywords=<keyword>&location=<location>&start=<start>

Where:

<keyword> is the job title you’re searching for (e.g., “Software Engineer”).

<location> is the location of the job search (e.g., “New York”).

<start> controls pagination (e.g., 0 starts from the first job, 20 skips the first 20 results, and so on).

Note: The position and pageNum query parameters are present in the original endpoint URL seen earlier, but in practice, they don’t seem to affect the results and can be ignored.

One of the downsides of scraping hidden APIs like this is the lack of official documentation. You need to experiment, reverse-engineer the requests, and test various parameters to understand how to control the API’s output.
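To make the parameter mapping concrete, here's a small sketch that builds the request URL from the three query parameters described above. The build_jobs_search_url helper is a hypothetical name used for illustration. It relies only on Python's standard library, so you can experiment with different values without sending any request:

```python
from urllib.parse import urlencode

# Endpoint taken from the article; the helper below is illustrative
BASE_URL = "https://www.linkedin.com/jobs-guest/jobs/api/seeMoreJobPostings/search"


def build_jobs_search_url(keyword, location, start=0):
    """Return the full GET URL for the given search parameters."""
    # urlencode percent-encodes special characters and turns spaces into "+"
    query = urlencode({"keywords": keyword, "location": location, "start": start})
    return f"{BASE_URL}?{query}"


url = build_jobs_search_url("Software Engineer", "New York", start=10)
print(url)
# https://www.linkedin.com/jobs-guest/jobs/api/seeMoreJobPostings/search?keywords=Software+Engineer&location=New+York&start=10
```

Printing the URL before calling it makes it easier to compare your request with the one captured in DevTools.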

If you call the API for “Software Engineer” positions in New York using curl, you’ll see that it returns a raw HTML response:

Step #2: Call the API Endpoint

Create a function that utilizes Requests to call the hidden LinkedIn Job Search endpoints:

import requests

def fetch_linkedin_jobs(keyword, location, start=0):
    """
    Sends a GET request to LinkedIn's hidden job search API endpoint.

    Args:
        keyword (str): The job title or keywords to search for.
        location (str): The location where to search for jobs.
        start (int): The pagination offset, i.e., how many results to skip (0 for the first page).

    Returns:
        str: The raw HTML content of the job listings.
    """
    # LinkedIn Jobs Search hidden API endpoint
    base_url = "https://www.linkedin.com/jobs-guest/jobs/api/seeMoreJobPostings/search"
    params = {
        "keywords": keyword,
        "location": location,
        "start": start
    }

    response = requests.get(base_url, params=params)
    # Raise an error if the request fails
    response.raise_for_status()

    # Return the raw HTML
    return response.text

This snippet performs a GET request to the target LinkedIn endpoint and retrieves the HTML response from the server.

You can now call the function like this:

job_postings_html = fetch_linkedin_jobs("Software Engineer", "New York")

If you print job_postings_html, you’ll get something like:

<!DOCTYPE html>
<html>
  <body>
    <ul>
      <li>
        <div
          class="base-card relative w-full hover:no-underline focus:no-underline
                 base-card--link
                 base-search-card base-search-card--link job-search-card"
          data-entity-urn="urn:li:jobPosting:3625991287"
          data-impression-id="jobs-search-result-0"
          data-reference-id="RgELK5m6YdhWvuLtkYySTw=="
          data-tracking-id="qMqbfqQV25CLfykQN+Cgng=="
          data-column="1"
          data-row="1"
        >
          <a
            class="base-card__full-link absolute top-0 right-0 bottom-0 left-0 p-0 z-[2] outline-offset-[4px]"
            href="https://www.linkedin.com/jobs/view/full-stack-engineer-at-porter-3625991287?position=1&pageNum=0&refId=RgELK5m6YdhWvuLtkYySTw%3D%3D&trackingId=qMqbfqQV25CLfykQN%2BCgng%3D%3D"
            data-tracking-control-name="public_jobs_jserp-result_search-card"
            data-tracking-client-ingraph
            data-tracking-will-navigate
          >
            <span class="sr-only">Full Stack Engineer</span>
          </a>
          <!-- Job details omitted for brevity -->
        </div>
      </li>
      <li>
        <!-- Other job position -->
      </li>
      <!-- More job listings... -->
    </ul>
  </body>
</html>

Time to parse that HTML and extract job data from it!

Step #3: Parse the Resulting HTML

Render the response HTML in a browser, and you'll see that the API returns up to 10 (fewer on the last page of pagination) job listing elements per page:

Each job listing entry generally contains:

The URL of the detailed job posting page

The job title

The company that posted the job

The time it was published

The job location

Associated tags

Other optional information
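If you'd rather check the number of job cards programmatically instead of rendering the HTML in a browser, here is a small sketch that uses only Python's standard library. It assumes each job card is an <li> element, as in the sample response shown earlier. JobCardCounter and count_job_cards are illustrative names:

```python
from html.parser import HTMLParser


class JobCardCounter(HTMLParser):
    """Counts <li> start tags, assuming one <li> per job card."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        # HTMLParser passes tag names in lowercase
        if tag == "li":
            self.count += 1


def count_job_cards(html):
    """Return the number of <li> elements found in the given HTML string."""
    counter = JobCardCounter()
    counter.feed(html)
    return counter.count


# Tiny stand-in for the API response
sample_html = "<ul><li><div>Job 1</div></li><li><div>Job 2</div></li></ul>"
print(count_job_cards(sample_html))  # 2
```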

You can use Beautiful Soup to parse the HTML and iterate over all job entries. Then, apply the appropriate scraping logic to extract the relevant information:

from bs4 import BeautifulSoup

def parse_job_postings(job_postings_html):
    # Where to store the scraped data
    job_postings = []

    # Parse the input HTML string
    soup = BeautifulSoup(job_postings_html, "html.parser")

    # Select all <li> elements (each one is a job card)
    job_li_elements = soup.select("li")

    for job_li_element in job_li_elements:
        # Extract the job URL, if present
        link_element = job_li_element.select_one(
            'a[data-tracking-control-name="public_jobs_jserp-result_search-card"]'
        )
        link = link_element["href"] if link_element else None

        # Extract the job title, if present
        title_element = job_li_element.select_one("h3.base-search-card__title")
        title = title_element.text.strip() if title_element else None

        # Extract the company name, if present
        company_element = job_li_element.select_one("h4.base-search-card__subtitle")
        company = company_element.text.strip() if company_element else None

        # Extract the job location, if present
        location_element = job_li_element.select_one("span.job-search-card__location")
        location = location_element.text.strip() if location_element else None

        # Extract the date the job was posted, if present
        publication_date_element = job_li_element.select_one("time.job-search-card__listdate")
        publication_date = (
            publication_date_element["datetime"] if publication_date_element else None
        )

        # Create a dictionary for this job posting
        job_posting = {
            "url": link,
            "title": title,
            "company": company,
            "location": location,
            "publication_date": publication_date,
        }

        # Append it to the top-level list
        job_postings.append(job_posting)

    return job_postings

Note that you should always check whether the selected HTML element exists and is valid, as each job posting entry can contain different or missing data.

Step #4: Put It All Together

Combine all steps into a working Python script that scrapes and processes LinkedIn job data from its hidden API:

# pip install requests beautifulsoup4

import requests
from bs4 import BeautifulSoup


def fetch_linkedin_jobs(keyword, location, start=0):
    """
    Sends a GET request to LinkedIn's hidden job search API endpoint.

    Args:
        keyword (str): The job title or keywords to search for.
        location (str): The location where to search for jobs.
        start (int): The pagination offset, i.e., how many results to skip (0 for the first page).

    Returns:
        str: The raw HTML content of the job listings.
    """
    # LinkedIn Jobs Search hidden API endpoint
    base_url = "https://www.linkedin.com/jobs-guest/jobs/api/seeMoreJobPostings/search"
    params = {
        "keywords": keyword,
        "location": location,
        "start": start
    }

    response = requests.get(base_url, params=params)
    # Raise an error if the request fails
    response.raise_for_status()

    # Return the raw HTML
    return response.text


def parse_job_postings(job_postings_html):
    """
    Parses the job listings HTML returned by LinkedIn and extracts relevant data.

    Args:
        job_postings_html (str): The raw HTML content of job listings.

    Returns:
        list[dict]: A list of dictionaries, each representing a job posting.
    """
    # Where to store the scraped data
    job_postings = []

    # Parse the input HTML string
    soup = BeautifulSoup(job_postings_html, "html.parser")

    # Select all <li> elements (each one is a job card)
    job_li_elements = soup.select("li")

    for job_li_element in job_li_elements:
        # Extract the job URL, if present
        link_element = job_li_element.select_one(
            'a[data-tracking-control-name="public_jobs_jserp-result_search-card"]'
        )
        link = link_element["href"] if link_element else None

        # Extract the job title, if present
        title_element = job_li_element.select_one("h3.base-search-card__title")
        title = title_element.text.strip() if title_element else None

        # Extract the company name, if present
        company_element = job_li_element.select_one("h4.base-search-card__subtitle")
        company = company_element.text.strip() if company_element else None

        # Extract the job location, if present
        location_element = job_li_element.select_one("span.job-search-card__location")
        location = location_element.text.strip() if location_element else None

        # Extract the date the job was posted, if present
        publication_date_element = job_li_element.select_one("time.job-search-card__listdate")
        publication_date = (
            publication_date_element["datetime"] if publication_date_element else None
        )

        # Create a dictionary for this job posting
        job_posting = {
            "url": link,
            "title": title,
            "company": company,
            "location": location,
            "publication_date": publication_date,
        }

        # Append it to the top-level list
        job_postings.append(job_posting)

    return job_postings


if __name__ == "__main__":
    # Fetch and parse jobs for "Software Engineer" in New York
    job_postings_html = fetch_linkedin_jobs("Software Engineer", "New York")
    job_postings = parse_job_postings(job_postings_html)

    # Export to CSV/JSON and/or store it in a database and/or process it...

Execute the above LinkedIn API scraping script, and job_postings should contain a data structure like:

[
  {
    "url": "https://www.linkedin.com/jobs/view/full-stack-engineer-at-porter-3625991287?position=1&pageNum=0&refId=699dcvdd0c%2F08b60IrUMRw%3D%3D&trackingId=qZ%2BIrXaXY3oRQEJXbrOwYg%3D%3D",
    "title": "Full Stack Engineer",
    "company": "Porter",
    "location": "New York, NY",
    "publication_date": "2023-06-05"
  },
  // omitted for brevity...
  {
    "url": "https://www.linkedin.com/jobs/view/software-engineer-c%2B%2B-%E2%80%93-2025-grads-at-hudson-river-trading-4002177189?position=10&pageNum=0&refId=699dcvdd0c%2F08b60IrUMRw%3D%3D&trackingId=cvj2EXBjikFs3Jafymiwzg%3D%3D",
    "title": "Software Engineer (C++) – 2025 Grads",
    "company": "Hudson River Trading",
    "location": "New York, NY",
    "publication_date": "2025-05-16"
  }
]

Et voilà! As I promised at the beginning of this article, I showed you how to scrape LinkedIn via its hidden APIs.
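To go beyond the first page and save the results, you could wrap the fetch and parse functions in a pagination loop and write the output to CSV. The sketch below is one possible approach under a few assumptions: scrape_pages, write_csv, the page_size of 10 (based on the number of results per page observed earlier), the max_pages cap, and the pause between requests are all illustrative choices. The fetch and parse steps are passed in as arguments, and stand-in data is used here so the example runs offline:

```python
import csv
import io
import time

# Keys produced by parse_job_postings() in the script above
FIELDNAMES = ["url", "title", "company", "location", "publication_date"]


def scrape_pages(fetch_page, parse_page, page_size=10, max_pages=5, delay_seconds=1.0):
    """Collect postings page by page until a page comes back empty.

    fetch_page(start) returns raw HTML; parse_page(html) returns a list of dicts.
    """
    all_postings = []
    for page_index in range(max_pages):
        postings = parse_page(fetch_page(page_index * page_size))
        if not postings:
            break  # treat an empty page as the end of the results
        all_postings.extend(postings)
        if delay_seconds:
            time.sleep(delay_seconds)  # pause between requests (arbitrary default)
    return all_postings


def write_csv(job_postings, file_obj):
    """Write the postings to an open text file object as CSV."""
    writer = csv.DictWriter(file_obj, fieldnames=FIELDNAMES)
    writer.writeheader()
    writer.writerows(job_postings)  # missing keys are written as empty cells


# Stand-in data so the sketch runs without touching the network
fake_pages = {0: ["Job A", "Job B"], 10: ["Job C"]}
postings = scrape_pages(
    fetch_page=lambda start: fake_pages.get(start, []),
    parse_page=lambda titles: [{"title": title} for title in titles],
    delay_seconds=0,
)

buffer = io.StringIO()
write_csv(postings, buffer)
print(buffer.getvalue())
```

With the real functions, the call would look like scrape_pages(lambda start: fetch_linkedin_jobs("Software Engineer", "New York", start), parse_job_postings), and you'd pass write_csv a file opened with open("jobs.csv", "w", newline="", encoding="utf-8").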

hiQ Labs vs LinkedIn: Is It Legal to Scrape LinkedIn?

When it comes to web scraping, there are some commonly recommended best practices to avoid legal issues (such as scraping only publicly available data and not violating the target site's terms and conditions).

Yet, since the legal landscape around web scraping can be complex, it's always wise to look at precedents from previous court cases to understand how to comply with the law.

Regarding LinkedIn scraping, the most notable legal case is hiQ Labs vs. LinkedIn. In this case, while the CFAA (Computer Fraud and Abuse Act) claims about accessing public data largely favored hiQ, LinkedIn also pursued a breach of contract claim based on hiQ's behavior.

Specifically, it was revealed that hiQ used "turkers" (crowdsourced workers) to create fake LinkedIn accounts, which they then used to log in and scrape data behind the login wall. This went beyond simply viewing publicly available profiles and directly violated LinkedIn’s terms of service that users agree to when creating and using accounts.

The takeaway: Avoid scraping data behind paywalls or login walls (and hidden APIs help you with that!), and never use fake or fraudulent accounts to bypass restrictions.

Conclusion

The goal of this post was to show how to scrape job data from LinkedIn by tapping into its hidden API. As you’ve seen, discovering these API endpoints wasn’t too easy, but using them offers several advantages over traditional scraping techniques.

Please note that all the code provided is for educational purposes only. Use it responsibly and always respect LinkedIn’s terms of service.

I hope you found this technical guide helpful and learned something new today. Feel free to share your thoughts or experiences in the comments—until next time!
