THE LAB #17: Creating a dataset for investors - Tesla (TSLA)

The creation process for a dataset for stock market analysts: Tesla

In the previous post, we looked at the alternative data landscape and the role of web scraping in the financial industry.

Just as a recap, data for the financial market is subject to a strict compliance check and due diligence to avoid any possible legal issues for the fund using it in its analysis.

This means that no personal information should be contained and that the scraping activity should be done in an ethical and legal manner.

We have also seen that different types of investors, fundamental or quantitative, need different data: if the data is going to be ingested by a machine learning algorithm, we should create a dataset that covers many stocks and has a long history, while for fundamental investors a meaningful dataset on a single stock could be enough, if truly valuable.

Given this, let’s try to create, just for fun, a dataset for investors that allows us to analyze one of the most popular stocks: Tesla.

Why Tesla?

It has had tremendous growth in the past years, but in 2023 it still has not reached its 2021 peak after the 2022 crash, so it could be interesting to have a deeper look at its fundamentals.

Investors already have a large variety of data to rely on when studying Tesla stock: EV market data, estimates on orders, data on its supply chain, and so on.

Now we’ll create a dataset that could tell us how well Tesla models hold their value over time, which could give us a taste of the appeal of the brand. Monitoring this could be very interesting: does a Tesla keep its value over time like a BMW, or is it more like an Opel?

Where to get data?

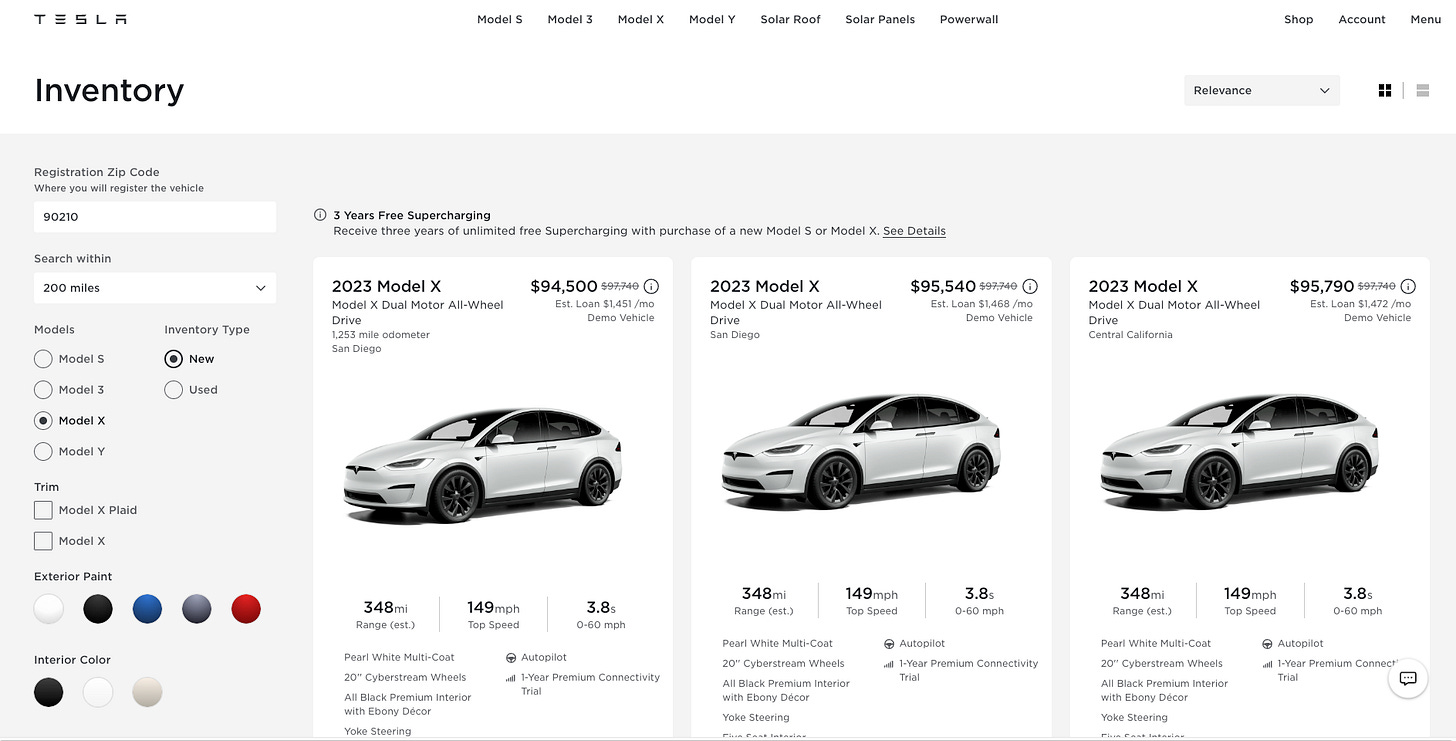

On its US website, Tesla has a list of models on sale across the USA, both new and used.

Comparing apples with apples, we’ll see how each model and configuration behaves over time.
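To make the "apples with apples" idea concrete, here is a minimal sketch of how listings could be grouped by configuration and snapshot date before comparing prices. The field names (`snapshot_date`, `model`, `trim`, `year`, `price`) and the sample rows are made up for illustration; they are not the fields returned by Tesla's website.

```python
from collections import defaultdict
from statistics import median


def median_price_by_config(listings):
    """Return the median price for each (snapshot_date, model, trim, year) group.

    `listings` is an iterable of dicts; the keys used here are hypothetical.
    """
    groups = defaultdict(list)
    for row in listings:
        key = (row["snapshot_date"], row["model"], row["trim"], row["year"])
        groups[key].append(row["price"])
    # The median is less sensitive than the mean to a single odd listing.
    return {key: median(prices) for key, prices in groups.items()}


# Made-up sample rows, only to show the shape of the input.
sample = [
    {"snapshot_date": "2023-05-01", "model": "Model 3", "trim": "Long Range", "year": 2021, "price": 40000},
    {"snapshot_date": "2023-05-01", "model": "Model 3", "trim": "Long Range", "year": 2021, "price": 42000},
    {"snapshot_date": "2023-06-01", "model": "Model 3", "trim": "Long Range", "year": 2021, "price": 39000},
]

medians = median_price_by_config(sample)
for key in sorted(medians):
    print(key, medians[key])
```

Comparing the same key across snapshot dates is what gives the value-retention signal: only cars with the same model, trim, and year are ever compared with each other.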

You can see that this list is filtered by Zip Code, so first of all, I’ll need a list of them before starting the scraping phase.

Then, analyzing the website, I see that the data shown comes from an internal API, so all we need to do is call this API once for every zip code and store the results.

Let’s code this!
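Before touching the real website, the overall loop can be sketched roughly like this. Everything here is an assumption made for illustration: `fetch_listings` is a hypothetical function that would wrap the call to the internal API (its URL and parameters are deliberately left out), the `id` field is a made-up unique identifier, and the de-duplication step assumes that nearby zip codes may return the same car.

```python
import json
import time


def collect_listings(zip_codes, fetch_listings, pause_seconds=1.0):
    """Call `fetch_listings(zip_code)` for every zip code and merge the results.

    `fetch_listings` is a hypothetical callable returning a list of dicts,
    each with a unique "id" key (an assumption, not the real API schema).
    """
    seen = {}
    for index, zip_code in enumerate(zip_codes):
        if index > 0:
            time.sleep(pause_seconds)  # be gentle with the target website
        for item in fetch_listings(zip_code):
            # Keep the first occurrence of each listing and remember
            # which zip code it was found under.
            seen.setdefault(item["id"], {**item, "zip_code": zip_code})
    return list(seen.values())


def save_jsonl(records, path):
    """Store one JSON object per line, so later runs can be appended easily."""
    with open(path, "w", encoding="utf-8") as handle:
        for record in records:
            handle.write(json.dumps(record) + "\n")


def fake_fetch(zip_code):
    """Stand-in for the real API call, returning made-up data."""
    data = {
        "10001": [{"id": "A1", "price": 40000}, {"id": "B2", "price": 52000}],
        "94105": [{"id": "B2", "price": 52000}, {"id": "C3", "price": 61000}],
    }
    return data.get(zip_code, [])


records = collect_listings(["10001", "94105"], fake_fetch, pause_seconds=0)
print(len(records), "unique listings")
```

Passing the fetch function as a parameter keeps the loop testable without hitting the website, and it means the scraping logic can be swapped in later without changing how results are merged and stored.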