Web scraping and alternative data for financial markets

What are alternative data and how to use web scraping to build datasets for financial markets?

This post is sponsored by Bright Data, award-winning proxy networks, powerful web scrapers, and ready-to-use datasets for download. Sponsorships help keep The Web Scraping Free and it’s a way to give back to the readers some value.

In this case, for all The Web Scraping Club Readers, using this link you will have an automatic free top-up. It means you get a free credit of $50 upon depositing $50 in your Bright Data account.

We have seen in many posts how to scrape the web under several circumstances, like when there’s a Cloudflare-protected website or a mobile app. Still, we didn’t investigate much on which sectors are benefitting the most from the data just scraped.

Some of them are pretty obvious, I think we all know that each e-commerce who is selling online is trying to understand what its competitors are selling and the same applies to delivery apps and many other markets.

But there’s one sector that is always hungry for data since having a new and reliable dataset can lead to millions of dollars in benefits and it’s the financial one. With my current company, Re Analytics, we’ve made (and still making) experience on the ground in this industry so in this post and in the next The Lab (scheduled for the 27th of April) we’ll make a deep dive inside the world of Alternative Data.

What does it mean for Alternative Data?

In the financial sector, data is key for investors to take decisions about their investment strategy. Michael Bloomberg created an empire by selling financial data and news to investors. Jim Simmons, an illustrious mathematician, created the Reinassance Hedge Fund and he was one of the first people to use what today we would call data science in finance, to extract some competitive edge against other funds and close better deals. Medallion, the main fund which is closed to outside investors, has earned over $100 billion in trading profits since its inception in 1988. This translates to a 66.1% average gross annual return or a 39.1% average net annual return between 1988 – 2018, as Wikipedia states.

As the world’s economy becomes more and more digitalized, new sources of information are becoming available. So while traditional financial data sources, like financial statements, balances, and historical financial market data keep their importance, different sources from the digital world are becoming more and more interesting for the financial industry and they are called alternative data. According to the official definition, alternative data are information about a particular company that is published by sources outside of the company, which can provide unique and timely insights into investment opportunities.

What types of alternative data exist?

Since alternative data are data that don’t come from inside the company, this definition includes a wide range of possibilities.

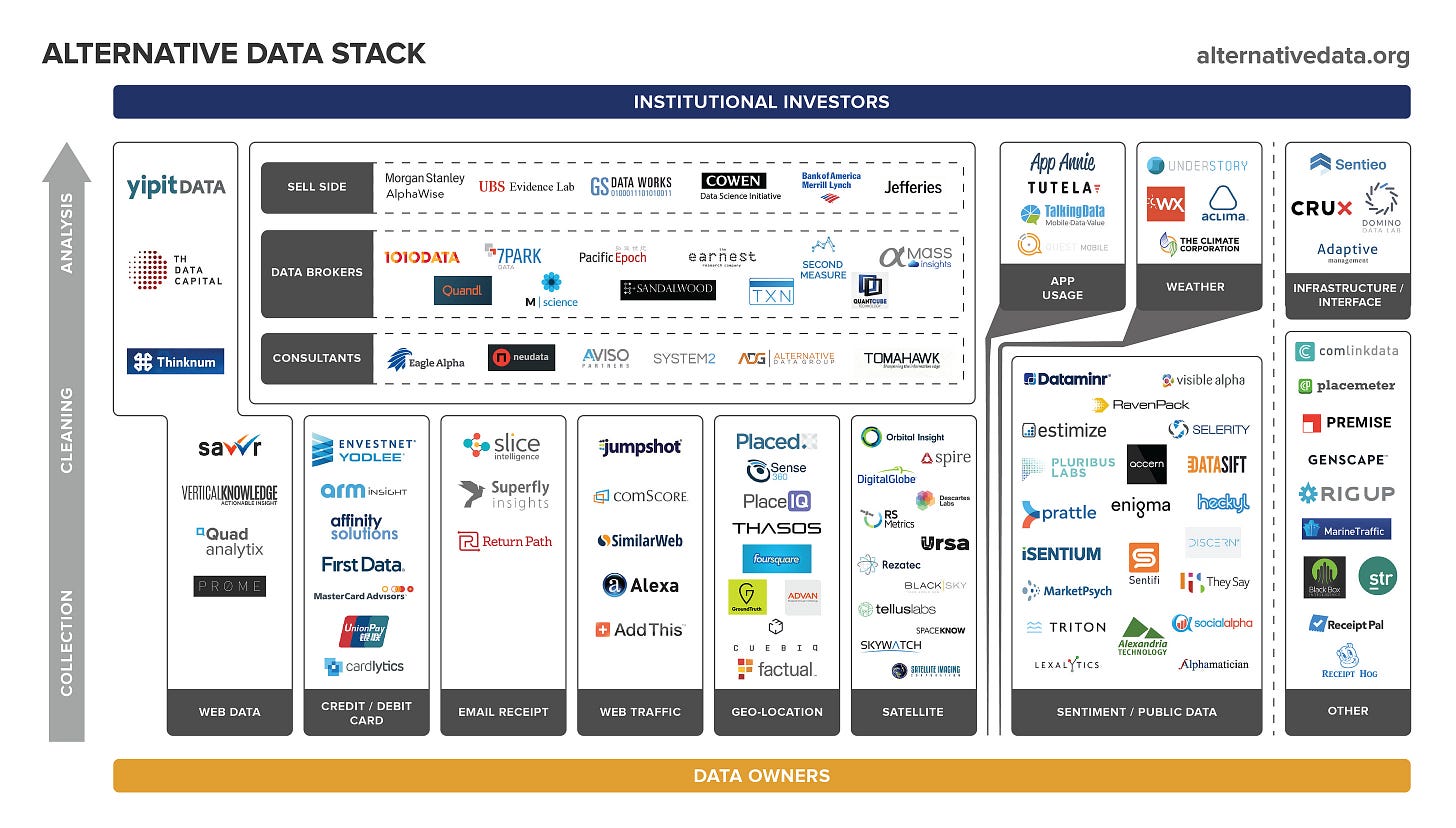

As we can see from this slide from alternativedata.org, in addition to web data, we have satellite images, credit card transactions, sentiment data, and so on.

In this 2010 article we can understand better how satellite images can be used for financial purposes: a company called Remote Sensing Metrics LLC, using satellite images, monitored 100 Walmart parking lots as a representative sample and counted, month by month, the cars parked outside, to get an estimate of the quarterly revenues by counting the people flow.

As you can easily understand, you probably won’t get the right amount with a single dollar precision but, with a proper model, as soon as the quarter ends you might have an estimate of the revenues months before the public data is available to everyone, for a publicly traded stock.

Why web scraping is important in the alternative data landscape?

As we have seen from the slide before, alternative data providers can be divided in two: who owns or rework other’s data (in the satellite image example made before, the firm acquired the images from providers and tailored its data product to Walmart revenue estimator) and who extract data from public sources and derive insights from it (web data category but also sentiment).

An example of the second category can be a company that, starting from online reviews, extracts the sentiment of the customers and understand if the targeted company is losing its grip on its customers. Or scraping e-commerce data, we can understand if a brand is much more on sales than its direct competitors, this can be a sign of a product or sales issues.

What are the attention points for web-scraped data in the financial industry?

Financial markets are strictly regulated to avoid fraud and so-called insider trading, the trading of stocks based on non-public information about the company, and other types of legal issues. In fact, using data collected in an improper way can have legal repercussions for the fund itself and its managers.

For this reason, if you want to sell data to hedge funds and investors, be prepared for a lot of paperwork.

A good article by Zyte explains in detail what you need to prove to funds to demonstrate that you collected data properly. As stated in the article:

Generally speaking, the risks associated with alternative data can be broken into four categories:

Exclusivity & Insider Trading

Privacy Violations

Copyright Infringement

Data Acquisition

Let’s briefly summarize the risks related to the four points before.

Exclusivity & Insider Trading

As we said before, the practice of insider trading is when data non publicly available is used for trading stocks. This means that data behind a paywall are generally not allowed since are not publicly available for everyone but only for paying users of the target websites. Also, if a scraper needs to log in to the target website for getting some data, it raises some reds flag and you must be sure that you don’t break any TOS doing so.

Privacy violations

This applies when scraping personal data from the web, since in the last few years privacy regulations around the globe, especially in Europe with GDPR, have become more and more restrictive.

For this reason, scraping personal data is generally a no-go in every project, unless you can anonymize it.

Copyright Infringement

Scraping and reselling data which is protected by a copyright, like photos and aricles is not a great idea, especially in this case. Of course it’s a big NO.

Data acquisitions

Funds for sure will want to understand if the whole data acquisition process was executed in the fairest way possible or if it could have caused some harm to the target websites.

For this reasons, the Investment Data Standards Organization released a checklist with best practices to follow for web scraping.

You can find all the points in the linked file, but just to give an idea, here are some points:

6 A data collector should assess a website according to the terms of its robots.txt.

7 A data collector should access websites in a way that the access does not interfere with or impose an undue burden on their operation.

8 A data collector should not access, download or transmit non-public website data.

9 A data collector should not circumvent logins or other assess control restrictions such as captcha.

10 A data collector should not utilize IP masking or rotation to avoid website restrictions.

11 A data collector should respect valid cease and desist notices and the website’s right to govern the terms of access to the website and data.

12 A data collector should respect all copyright and trademark ownership and not act so as to obscure or delete copyright management information.

As you can see, the guidelines are very strict to avoid any possible issue for the data provider and the fund itself.

Key features for web-scraped data in the financial industry

Given all this information and premises, what are the features required by the financial industry to consider a dataset an interesting one?

Well, first of all, we need to understand that funds have their own strategy for studying the markets and this impacts what they are looking for.

Over simplifying, we can divide the funds into two macro-categories, quantitative and fundamentals, knowing that many funds fall in the shades between these two poles, mixing the two strategies.

Basically, fundamental investors study economics and learn about the economics, the business model, and risk factors for a single company, in a sort of bottom-up approach. Quants try to elaborate complex machine learning models fueled by a lot of data, trying to spot correlations between data ingested and the stock market, in a top-down approach.

As you can imagine, the two approaches require different types of data. For fundamentals, the dataset can be very specific to a stock ticker but it should tell something valuable about the business model of the target stock. For quants instead, since they need data for training some ML models, it should have some history (typically some years, how many depends on the market you’re covering and their model needs) and many stocks covered, otherwise, it could be inefficient to add your data to the model for only few stocks.

Final remarks

With this article, I wanted to introduce the Alternative Data landscape, since it’s one of the growing sectors in the data world where web scraping has a significant role.

It’s a complex environment, where there are some strict rules on data sourcing, and a provider must prove its accountability, so it’s very difficult for a freelancer to enter, while it could be easier for established companies.

Depending on the investors you might have different requirements in timeframe and specs of data but if you can build up a great data product it could be a rewarding field to enter.

In the next The Lab episode we’ll try to build a dataset for the financial investors as a fun experiment.

I enjoyed reading your article on web scraping and alternative data. It was informative and well-written, and the examples you provided were helpful. Thanks for sharing your insights!