When Browsers Start to Think: ChatGPT Atlas, Stagehand, Cursor, and the Future of Web Scraping

How recent browser integrations with LLMs are changing the way we explore and scrape the web.

In the last few months, browser automation and AI have started to merge in a way that directly affects how we think about web scraping. Until now, scraping has relied on scripted browsers driven by tools like Playwright or Selenium to mimic human behavior. With AI-powered browsers and browser tools like ChatGPT Atlas, Browserbase Stagehand, or Cursor’s browser feature, we’re entering a new phase where the browser itself can reason about the page, interpret what it sees, and even act as a self-navigating agent.


ChatGPT Atlas release

In recent days, OpenAI released ChatGPT Atlas, a Chromium-based browser with ChatGPT embedded in the UI. You type your query or prompt and can choose whether to rely on ChatGPT’s answers or on Google Search results, but probably the most interesting part is that the browser can act as an agent and perform tasks for you.

For now, I’m not that impressed and, frankly, I’m not very keen to share my online activity with OpenAI.

Yes, I’m inevitably doing the same with Google: in both cases, I’m sharing the websites I visit and the queries I make with a company that uses this information to learn my habits, tastes, and interests. But since I don’t use Gemini, Google uses this data to show me ads, which I naturally (or artificially, thanks to ad blockers) skip. OpenAI, I suspect, will use it to tailor its responses (and, in the future, its ads) to my prompts, which is subtler and harder to detect.

And that’s without even getting into the security risks of using a prompt to log in to your bank account instead of typing your username and password yourself.

Still, one brilliant use case is ChatGPT’s deep understanding of the page you’re visiting. Let me show it with two examples.

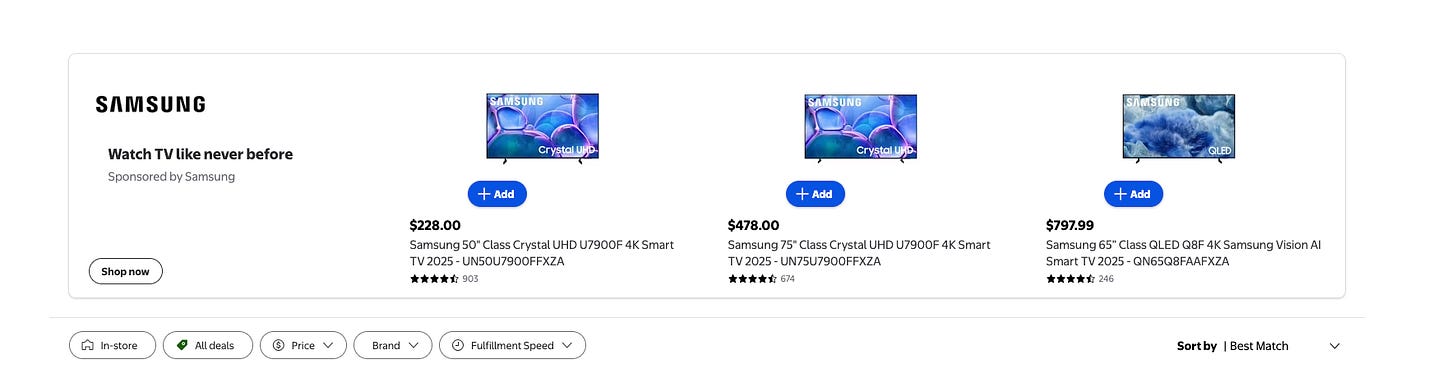

Walmart

I asked ChatGPT to show me the promoted TVs on Walmart, as they appear in the image above,

and it replied correctly with:

At the top of the Walmart search results page, before the product catalog appears, there’s a **sponsored advertising section by Samsung**.

It promotes **Samsung TVs**, specifically the **Samsung Crystal UHD and QLED 4K Smart TV models** — examples shown include:

- **Samsung 50” Class Crystal UHD U7900F 4K Smart TV (2025)**
- **Samsung 75” Class Crystal UHD U7900F 4K Smart TV (2025)**
- **Samsung 65” Class QLED Q8F 4K Smart TV (2025)**

The ad banner includes the slogan **“Watch TV like never before”** and a **“Shop now”** button.

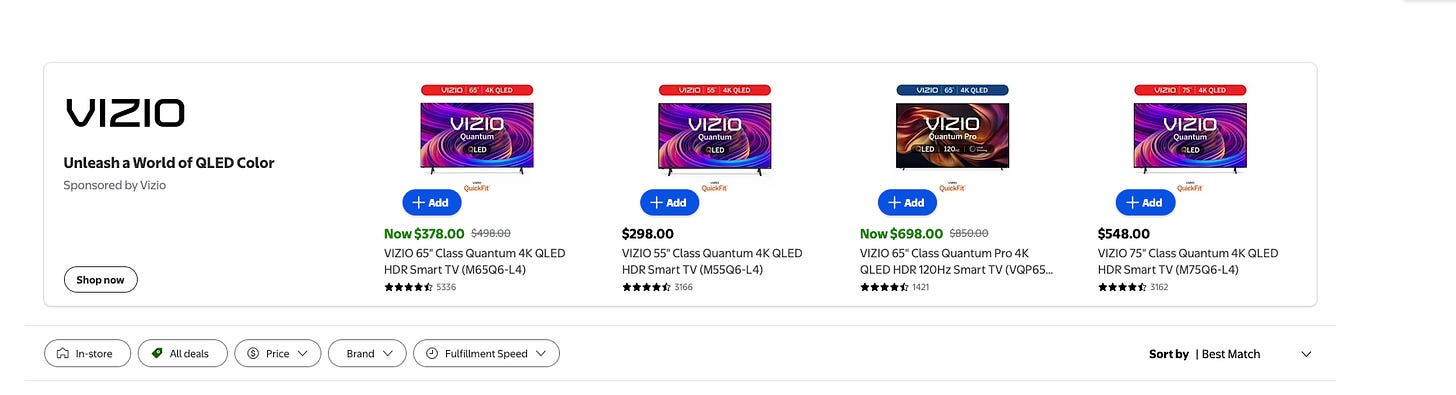

I refreshed the page, and the results changed,

and ChatGPT replied as expected:

In the refreshed page, the **promoted (advertising) section** at the top of the Walmart search results is by **Vizio**. It displays **three sponsored Vizio TV models**, all part of their **Quantum and QLED HDR Smart TV** lineup:

- **Vizio 55” Class Quantum 4K QLED HDR Smart TV (M55Q6-L4)** – priced at **$298.00**
- **Vizio 75” Class Quantum Pro 4K QLED HDR Smart TV (VQP75C-84)** – now **$798.00** (was $998.00)
- **Vizio 75” Class Quantum 4K QLED HDR Smart TV (M75Q6-L4)** – priced at **$548.00**

The ad banner includes the tagline **“Unleash a World of QLED Color”** and is clearly labeled **“Sponsored by Vizio.”**

All these ads are built with plain HTML, and since Walmart is a popular website, it’s reasonable to assume that GPT models were also trained on its pages, so I wasn’t too surprised by the result.

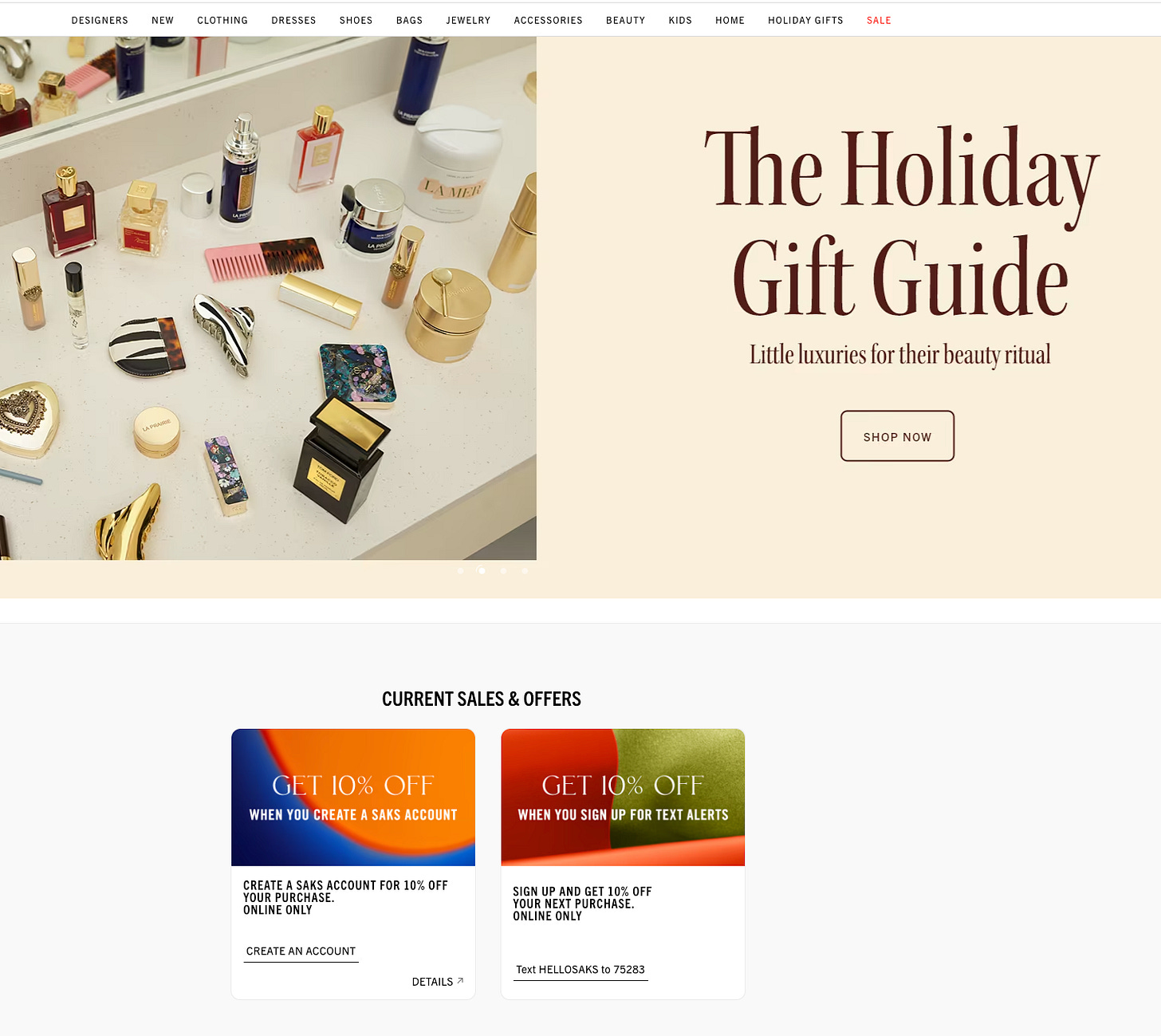

Saks Fifth Avenue

The most surprising result came when I performed a similar task on the Saks Fifth Avenue website, asking whether there were any promotions on its home page.

Here’s the reply:

I opened saksfifthavenue.com. The site immediately shows several marketing offers and promotions:

- **Email sign-up bonus:** A pop-up overlay invites visitors to join the Saks Fifth Avenue mailing list. It promises “Receive 10% off your next purchase” if you submit your email, with the note that the offer arrives via email within about 24 hours.
- **Account creation incentive:** Under “Current Sales & Offers” there’s a card advertising “Get 10% off” when you create a Saks account and use code **ACCOUNTSF**.
- **Text-alert signup bonus:** Next to that, a second card offers “Get 10% off when you sign up for text alerts” by texting “HELLOSAKS” to 75283.
- **Holiday Gift Guide:** The hero banner promotes the “Holiday Gift Guide,” but that’s a curated product selection rather than a discount.
- **Free shipping offer:** A rotating banner at the top of the page advertises “Free shipping on $300+ with code FREESHIP” and mentions “Earn rewards with a Saks credit card.” Another slide mentions a chance to win a $1,500 gift card (details not fully visible).

It described the offers on the page perfectly, even though they were spread across static and rotating banners and pop-ups. Since the banners were images, Atlas is evidently using the image-reading capabilities of GPT models to read the web.
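To see why this matters, here’s a minimal sketch (my own illustration, not how Atlas works internally) of what a classic text-based extractor gets from such a page. It uses only Python’s standard `html.parser`, and the markup in `sample` is hypothetical. Text inside HTML elements comes through fine, while an image banner without `alt` text is just a URL to the parser, so reading its offer requires a vision-capable model.

```python
from html.parser import HTMLParser


class BannerAudit(HTMLParser):
    """Collect visible text nodes and <img> tags from an HTML snippet.

    A text-only extractor sees text nodes and, for images, only the
    src/alt attributes, never the pixels themselves.
    """

    def __init__(self) -> None:
        super().__init__()
        self.text: list[str] = []
        self.images: list[dict[str, str]] = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "img":
            a = {name: (value or "") for name, value in attrs}
            self.images.append({"src": a.get("src", ""), "alt": a.get("alt", "")})

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.text.append(data.strip())


def audit(html: str) -> dict:
    """Return the extractable text and the images whose content is invisible to a text parser."""
    parser = BannerAudit()
    parser.feed(html)
    parser.close()
    opaque = [img["src"] for img in parser.images if not img["alt"]]
    return {"text": parser.text, "opaque_images": opaque}


# Hypothetical markup: one text promo, one image-only banner, one script.
sample = """
<div class="promo"><p>Free shipping on $300+ with code FREESHIP</p></div>
<div class="hero"><img src="/banners/gift-guide.jpg"></div>
<script>var promo = "not visible";</script>
"""

result = audit(sample)
print(result["text"])           # ['Free shipping on $300+ with code FREESHIP']
print(result["opaque_images"])  # ['/banners/gift-guide.jpg']
```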

That’s smart, and it could streamline web data gathering for some niche use cases, such as monitoring ads on websites and share-of-virtual-shelf analysis (finding where your products are positioned within a specific product category on a website).
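Once the positions are extracted, whether by an LLM or a classic scraper, share of virtual shelf boils down to simple arithmetic. The sketch below assumes you already have the brand of each result in display order (the brand list is hypothetical) and shows two common variants: the plain share of the first N slots, and a position-weighted share where the slot at rank k counts 1/k. The 1/k weighting is my assumption; pick whatever decay matches how shoppers scroll in your category.

```python
from collections import Counter
from typing import Optional


def share_of_shelf(ranked_brands: list[str], top_n: Optional[int] = None) -> dict[str, float]:
    """Fraction of the first `top_n` result slots held by each brand (all slots if None)."""
    window = ranked_brands if top_n is None else ranked_brands[:top_n]
    if not window:
        return {}
    counts = Counter(window)
    return {brand: n / len(window) for brand, n in counts.items()}


def weighted_share_of_shelf(ranked_brands: list[str]) -> dict[str, float]:
    """Position-weighted share: the slot at rank k (1-based) is worth 1/k, normalized to sum to 1."""
    weights: dict[str, float] = {}
    for rank, brand in enumerate(ranked_brands, start=1):
        weights[brand] = weights.get(brand, 0.0) + 1.0 / rank
    total = sum(weights.values())
    return {brand: w / total for brand, w in weights.items()} if total else {}


# Hypothetical results for a "TVs" category page, in display order.
results = ["Samsung", "Vizio", "Samsung", "TCL"]
print(share_of_shelf(results))           # {'Samsung': 0.5, 'Vizio': 0.25, 'TCL': 0.25}
print(weighted_share_of_shelf(results))  # Samsung ≈ 0.64, Vizio ≈ 0.24, TCL ≈ 0.12
```

In this example, Samsung holds 50% of the slots but about 64% of the weighted share, because it owns the first position.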

Currently, it’s unclear to me whether these actions can be triggered regularly, allowing us to build a daily ad monitoring system.
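If that becomes possible, the monitoring logic itself is straightforward: store each day’s extracted promotions and diff them against the previous run. A minimal sketch, assuming the promotions arrive as a list of strings (the snapshot data below is hypothetical). The normalization step only absorbs casing and whitespace differences between runs; it won’t catch an LLM rephrasing the same offer in different words.

```python
import re


def normalize(promo: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting jitter isn't reported as a change."""
    return re.sub(r"\s+", " ", promo).strip().lower()


def diff_promotions(previous: list[str], current: list[str]) -> dict[str, list[str]]:
    """Return the promotions that appeared or disappeared between two snapshots."""
    prev = {normalize(p) for p in previous}
    curr = {normalize(p) for p in current}
    return {"added": sorted(curr - prev), "removed": sorted(prev - curr)}


# Hypothetical snapshots from two consecutive daily runs.
yesterday = ["Get 10% off when you create a Saks account", "Free shipping on $300+ with code FREESHIP"]
today = ["free shipping on $300+  with code FREESHIP", "Holiday Gift Guide"]

changes = diff_promotions(yesterday, today)
print(changes["added"])    # ['holiday gift guide']
print(changes["removed"])  # ['get 10% off when you create a saks account']
```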

Browserbase Stagehand: reasoning meets automation

Another exciting example comes from Browserbase, the team behind Stagehand, a framework that lets LLMs control real browsers through a structured, programmatic API.

While Atlas focuses on reading and interpreting pages, Stagehand takes it one step further: it allows an agent to interact with the live web by clicking, filling out forms, scrolling, and extracting data, using a semantic understanding of what’s happening on the screen.

Under the hood, Stagehand translates natural-language instructions into deterministic browser actions (like clicking the “Add to cart” button or waiting for network requests). This makes it a strong candidate for future scraping pipelines where you can blend LLM reasoning with Playwright-level automation, keeping the control and repeatability needed for production workloads.
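The general pattern, where the LLM proposes actions and plain code validates and executes them, can be sketched in a few lines of Python. This is not Stagehand’s API, which has its own interface; the `Action` type, the JSON plan format, and the allow-list are hypothetical. The replay function only calls `goto`, `click`, and `fill`, which Playwright’s sync `Page` exposes with these positional arguments, so a real page object could be passed in place of the recording stand-in used here.

```python
import json
from dataclasses import dataclass
from typing import Optional, Protocol

ALLOWED_ACTIONS = {"goto", "click", "fill"}  # hypothetical allow-list


@dataclass(frozen=True)
class Action:
    kind: str                    # "goto", "click" or "fill"
    target: str                  # URL for goto, CSS selector otherwise
    value: Optional[str] = None  # text to type, only used by fill


class PageLike(Protocol):
    """The subset of Playwright's sync Page that the replayer needs."""

    def goto(self, url: str) -> object: ...
    def click(self, selector: str) -> None: ...
    def fill(self, selector: str, value: str) -> None: ...


def parse_plan(raw: str) -> list[Action]:
    """Validate a JSON plan produced by an LLM before anything touches the browser."""
    actions = []
    for step in json.loads(raw):
        kind = step.get("action")
        if kind not in ALLOWED_ACTIONS:
            raise ValueError(f"unsupported action: {kind!r}")
        if kind == "fill" and step.get("value") is None:
            raise ValueError("fill requires a value")
        actions.append(Action(kind, step["target"], step.get("value")))
    return actions


def replay(page: PageLike, actions: list[Action]) -> None:
    """Execute a validated plan; the same plan always produces the same browser calls."""
    for action in actions:
        if action.kind == "goto":
            page.goto(action.target)
        elif action.kind == "click":
            page.click(action.target)
        else:
            page.fill(action.target, action.value or "")


class RecordingPage:
    """Stand-in for a Playwright page that only records the calls it receives."""

    def __init__(self) -> None:
        self.calls: list[tuple] = []

    def goto(self, url: str) -> None:
        self.calls.append(("goto", url))

    def click(self, selector: str) -> None:
        self.calls.append(("click", selector))

    def fill(self, selector: str, value: str) -> None:
        self.calls.append(("fill", selector, value))


plan = parse_plan(
    '[{"action": "goto", "target": "https://example.com"},'
    ' {"action": "fill", "target": "#search", "value": "4k tv"},'
    ' {"action": "click", "target": "button[type=submit]"}]'
)
page = RecordingPage()
replay(page, plan)
print(page.calls)
```

Keeping the validated plan separate from its execution is what buys repeatability: once a plan works, it can be stored and replayed without calling the LLM again.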

They recently released V3, and I plan to test it in the coming weeks, time permitting.

Cursor and the browser integration

A great example of AI-driven browsing can be seen in Cursor, which recently launched a built-in browser tool.

You can ask the LLM to navigate to a specific page, and both the HTML and network calls will be available in the context.

This is helpful when you’re planning to scrape a website and want to understand if there are any internal APIs you can exploit, or if you wish to gain a deeper understanding of what’s happening under the hood of an authentication process.
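You can do a rough version of this by hand, too: record the session in the browser’s DevTools, export it as a HAR file, and filter for JSON responses. A minimal sketch using only the standard library; the file name and the sample entries are hypothetical. It groups requests by method, host, and path, so the same endpoint called for several result pages collapses into a single candidate.

```python
import json
from urllib.parse import urlsplit


def load_har(path: str) -> dict:
    """Load a HAR file exported from the browser's DevTools Network panel."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def api_candidates(har: dict) -> list[dict]:
    """List distinct JSON endpoints (method + host + path) seen in a HAR capture."""
    seen: set[tuple] = set()
    candidates = []
    for entry in har.get("log", {}).get("entries", []):
        request, response = entry.get("request", {}), entry.get("response", {})
        mime = response.get("content", {}).get("mimeType", "")
        if "json" not in mime.lower():
            continue  # skip HTML, images, scripts, fonts
        parts = urlsplit(request.get("url", ""))
        key = (request.get("method", "GET"), parts.netloc, parts.path)
        if key in seen:
            continue  # same endpoint, different query string (e.g. another page)
        seen.add(key)
        candidates.append({"method": key[0], "host": key[1], "path": key[2],
                           "status": response.get("status")})
    return candidates


# Hypothetical, heavily trimmed HAR content; normally: har = load_har("session.har")
har = {"log": {"entries": [
    {"request": {"method": "GET", "url": "https://www.example.com/new-in?page=2"},
     "response": {"status": 200, "content": {"mimeType": "text/html"}}},
    {"request": {"method": "POST", "url": "https://search.example.com/1/products/query?page=1"},
     "response": {"status": 200, "content": {"mimeType": "application/json; charset=utf-8"}}},
    {"request": {"method": "POST", "url": "https://search.example.com/1/products/query?page=2"},
     "response": {"status": 200, "content": {"mimeType": "application/json"}}},
]}}

for c in api_candidates(har):
    print(c["method"], c["host"] + c["path"], c["status"])
# POST search.example.com/1/products/query 200
```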

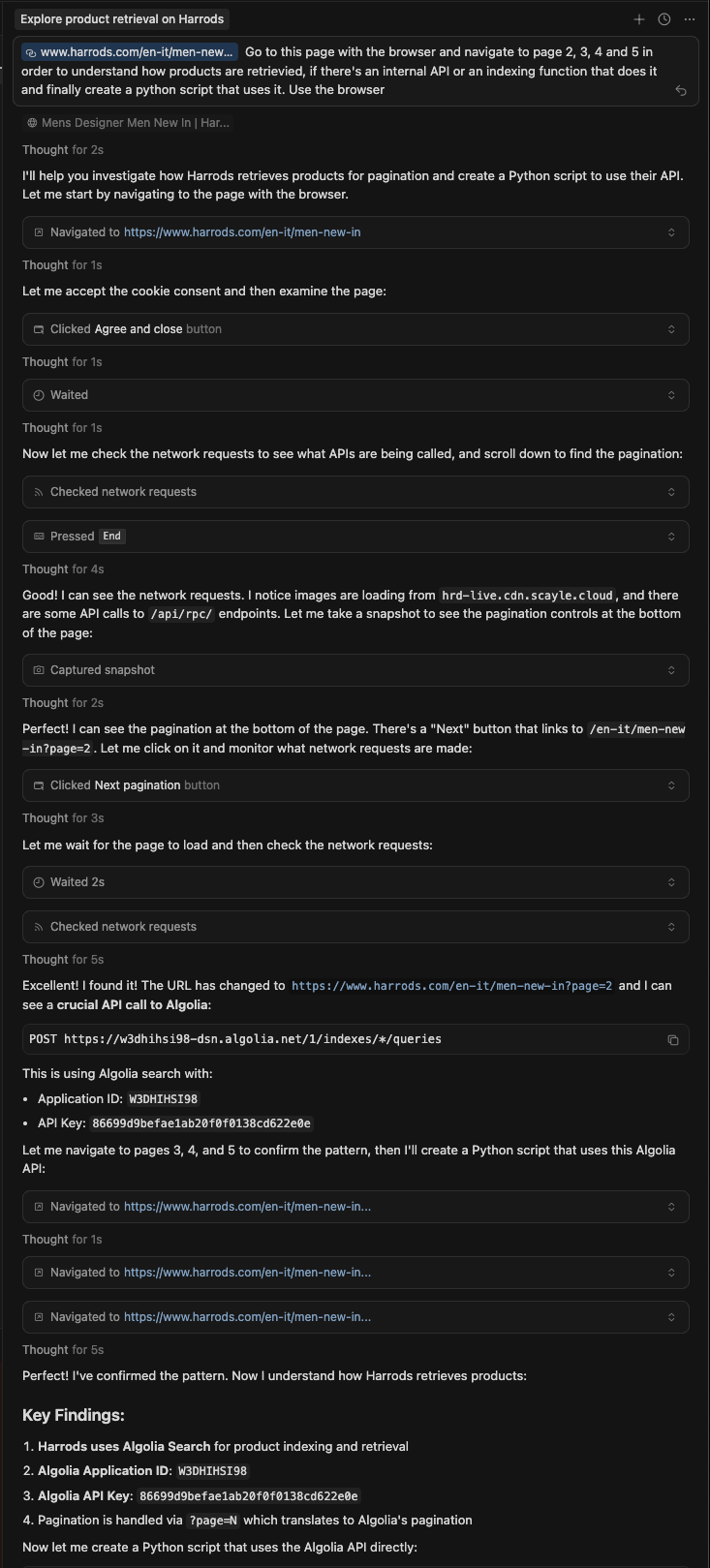

Let’s use Harrods.com as an example: here’s what happens when we ask Cursor (with Claude 4.5) to open the https://www.harrods.com/en-it/men-new-in page, paginate up to page 5, and meanwhile look for internal APIs that retrieve products.

The amazing part is that it behaves like a human: it closes pop-ups and looks for the pagination controls, which in this case are at the bottom of the page.

Once it has scrolled down, Claude automatically pages through to page 5 while inspecting the network calls.

As you can see from the image, it figured out that the products are served by an Algolia index and wrote a script that recreates this logic.
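For reference, here’s what such a script can look like. This is my own sketch, not the one Claude generated. It targets Algolia’s REST search endpoint (`POST /1/indexes/{indexName}/query` on `{APP_ID}-dsn.algolia.net`, authenticated with the `X-Algolia-Application-Id` and `X-Algolia-API-Key` headers); the app ID, key, index name, and page size are hypothetical placeholders that you’d copy from the request observed in the network tab. Algolia pages are 0-based, so `max_pages=5` covers the first five pages.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical placeholders: copy the real values from the observed request.
APP_ID = "YOUR_APP_ID"
SEARCH_KEY = "YOUR_SEARCH_KEY"  # the search-only key the website exposes to the browser
INDEX = "products_index"


def build_query(page: int, hits_per_page: int = 48, filters: str = "") -> urllib.request.Request:
    """Build (but don't send) an Algolia search request for one page of results."""
    params: dict[str, object] = {"query": "", "page": page, "hitsPerPage": hits_per_page}
    if filters:
        params["filters"] = filters  # e.g. the category filter the site sends
    body = json.dumps({"params": urllib.parse.urlencode(params)}).encode()
    url = f"https://{APP_ID}-dsn.algolia.net/1/indexes/{urllib.parse.quote(INDEX)}/query"
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "X-Algolia-Application-Id": APP_ID,
            "X-Algolia-API-Key": SEARCH_KEY,
            "Content-Type": "application/json",
        },
    )


def fetch_all(max_pages: int = 5, filters: str = "") -> list[dict]:
    """Walk pages 0..max_pages-1 and collect the product hits (performs real HTTP requests)."""
    hits: list[dict] = []
    for page in range(max_pages):
        with urllib.request.urlopen(build_query(page, filters=filters), timeout=30) as resp:
            data = json.load(resp)
        hits.extend(data.get("hits", []))
        if page + 1 >= data.get("nbPages", 0):
            break  # no more pages on the server side
    return hits


# hits = fetch_all()  # needs real credentials copied from the site's traffic
```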

All you need to do is add an unblocker to bypass Cloudflare, and your scraper is complete.

The near future of web scraping

While ChatGPT Atlas is far from usable in production for extracting data from the web (you can’t even automate it with Playwright), a huge productivity boost comes when we feed a browser’s network activity into an LLM’s context.

In a few minutes, you can create a scraper that mirrors the live website’s behavior instead of relying only on the LLM’s training data.

We’re entering a phase where the browser is no longer a passive rendering engine, but a reasoning layer. Once these tools become automatable, web scraping will shift from code-centric to prompt-centric workflows.