Web DRAGON - LLM-powered web scraping on a distributed cloud

How generative AI can be used for web scraping, using residential gaming PCs

Welcome to this post made in collaboration with Salad. Its distributed network of residential gaming PCs seemed a perfect fit for bypassing device fingerprinting in web scraping operations. That’s why I’ve asked Bob Miles (Founder and CEO) and Shawn Rushefsky (Senior Generative AI Solutions Architect) to share with The Web Scraping Club how to use generative AI on Salad's architecture to build a web scraping project.

Today’s AI boom is largely powered by web-scraped data - text, images, videos, and audio collected from the public internet. Despite its importance, web scraping at scale typically suffers from two main challenges:

Many websites don’t want to be scraped and have technology in place to prevent automated extraction. Some outright block datacenter IP addresses, which makes scraping from public clouds significantly more difficult.

Websites change formatting and content all the time, and even basic layout changes can break manually coded scrapers, which typically rely on HTML selectors and regular expressions to get the data they’re looking for.

Here we propose an approach to web scraping that leverages Salad’s globally distributed network of residential gaming PCs. The nature of the network points to solutions to both of the main challenges faced by scrapers. Since every Salad node is an actual residential computer, loading a page from these nodes looks like legitimate human traffic to most websites and is much less likely to get blocked. Additionally, these gaming PCs have GPUs capable of running modern Large Language Models (LLMs), providing a potentially much more flexible and resilient option for parsing website content.

Insights

Actually accessing page content was extremely easy on Salad’s distributed cloud: more than 99% of pages were viewed successfully within two attempts. Just as we humans are occasionally presented with CAPTCHAs, our crawlers also ran into CAPTCHAs from time to time. We dealt with this by retrying the URL with a different queue worker (and therefore a different IP in a different location). I believe the remaining CAPTCHAs could be solved with a computer vision model such as YOLOv8.

Once the boilerplate is set up, the process of writing the data extraction layer is a lot simpler and more intuitive than traditional web-scraping techniques, consisting mostly of asking natural language questions. It’s easy to imagine giving this framework a simple web interface that would allow the creation of custom web scrapers with no coding knowledge required at all.

LLMs are not a magic bullet for the problems faced by web scraping. Prompts that work reliably with one website may not work well at all with another. You’ll still need to do some per-site customization.

Setting up a proof-of-concept retrieval-augmented generation (RAG) application is pretty straightforward with off-the-shelf open-source tools. As is the case for most software, developing that proof of concept into something production-ready is non-trivial.

There are a lot of choices to make, knobs to adjust, and variables to tweak when using the LLM approach to data extraction. Expect a significant amount of experimentation before you reliably get the results you want. Everything from which models to use, to how you split your web pages into chunks, to how you write your prompts can have a major impact on both the efficiency and accuracy of data extraction.

Using LLMs and vector databases is much more computationally intensive than traditional methods of web scraping, which rely on highly efficient HTML selectors and other low-cost text processing techniques. In our test run with Harrods.com, each page took about 9s to process on an RTX 3080 Ti, yielding a JSON object like this:

```json
{
  "name": "Moncler 999 x adidas Originals NMD Padded Boots",
  "manufacturer": "Moncler, adidas Originals",
  "price": 637,
  "currency": "USD",
  "description": "The Moncler x adidas Originals NMD Padded Boots are a collaboration between the two renowned brands. These boots are designed with a focus on functionality and style, featuring a unique combination of materials. The lining is made of other materials, providing added comfort and warmth, while the sole is also crafted from other materials. These boots are currently available for personal shopping and in-store purchase only, as they are not currently available for online purchase.",
  "is_available_online": false
}
```

Whether this technique is worth the extra computational cost will depend a lot on your particular use case. At scale, it’s likely that your savings in engineering time would significantly outweigh the additional compute costs, especially given Salad’s low costs compared to traditional clouds.
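The 9s-per-page figure makes it easy to estimate GPU time for a crawl. The sketch below is a back-of-the-envelope calculation only. It assumes each GPU processes one page at a time and ignores crawling time, retries, and model loading. The page and node counts in the usage line are illustrative, not measurements.

```python
SECONDS_PER_PAGE = 9.0  # measured on an RTX 3080 Ti in the Harrods.com test run


def pages_per_gpu_hour(seconds_per_page: float = SECONDS_PER_PAGE) -> float:
    """Pages one GPU can process per hour, assuming pages are processed sequentially."""
    return 3600.0 / seconds_per_page


def gpu_hours_needed(num_pages: int, seconds_per_page: float = SECONDS_PER_PAGE) -> float:
    """Total GPU-hours needed to process num_pages at a fixed per-page cost."""
    return num_pages * seconds_per_page / 3600.0


def wall_clock_hours(num_pages: int, num_nodes: int,
                     seconds_per_page: float = SECONDS_PER_PAGE) -> float:
    """Wall-clock hours if the pages are spread evenly across num_nodes GPUs."""
    return gpu_hours_needed(num_pages, seconds_per_page) / num_nodes


# Illustrative inputs: one million pages spread across 100 nodes
print(pages_per_gpu_hour(), gpu_hours_needed(1_000_000), wall_clock_hours(1_000_000, num_nodes=100))
# 400.0 2500.0 25.0
```

Multiplying the GPU-hours by your per-hour node price gives a rough compute bill to weigh against the engineering time saved.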

The LLM space is developing at a breakneck pace. It’s possible (likely, even) that by the time you’re reading this article, none of the models I’ve chosen are still considered state-of-the-art. The good news is that open-source inference servers like 🤗Text Embeddings Inference and 🤗Text Generation Inference make it very easy to try out new models without changing the rest of your code. Each requires only a single environment variable (MODEL_ID) to select a model from the Hugging Face Hub.

Architecture

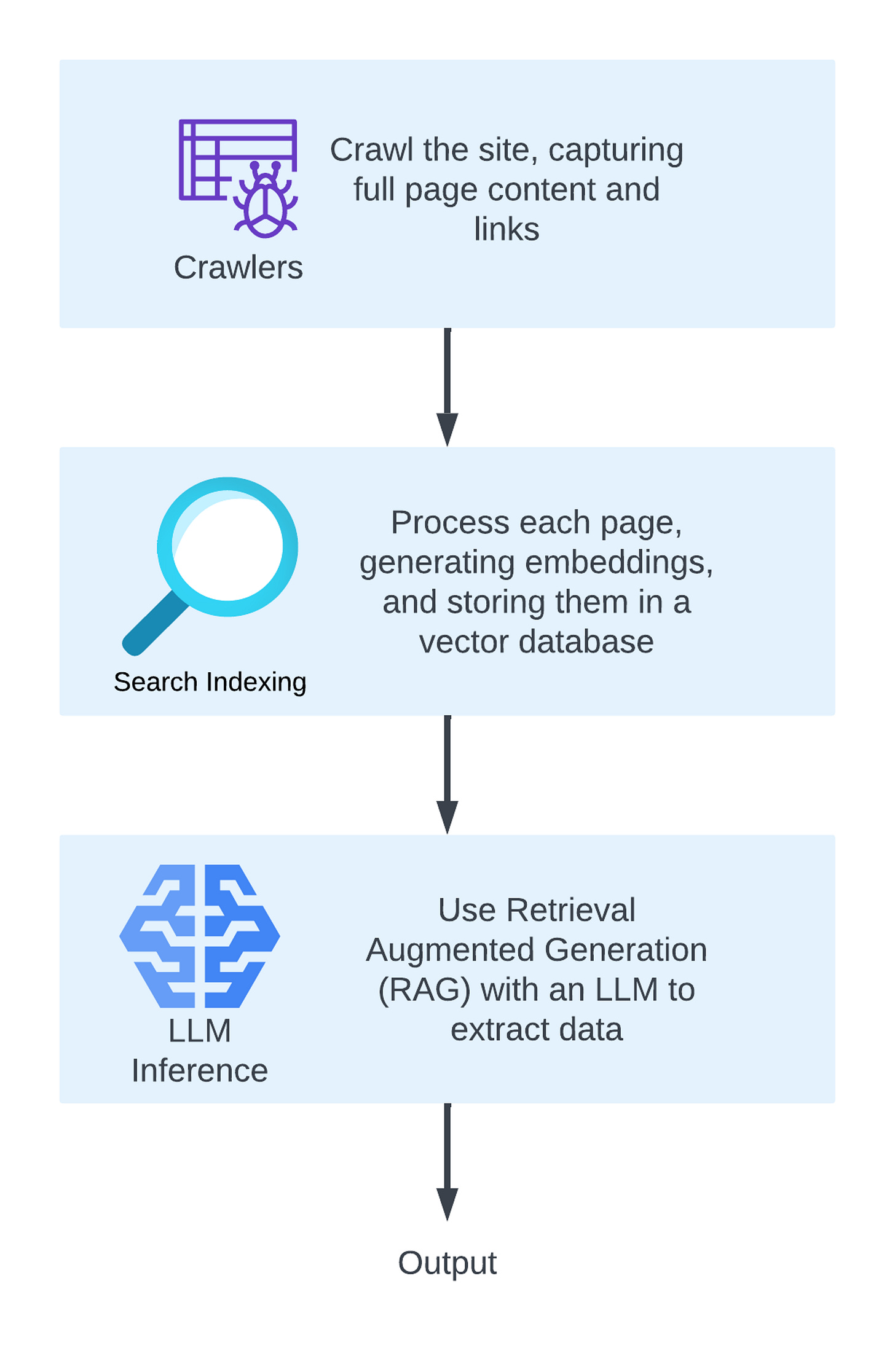

This task can be broken down into three main stages, each feeding into the next: web crawling, search indexing, and LLM inference.

Web Crawling

Crawling Service

The web crawling is coordinated by a crawling service. The crawling service provides an interface for humans to start a crawl, giving an entry URL, maximum depth, and other crawl parameters. Crawlers request jobs from the crawling service, and return page content, along with all links on the page. The crawling service takes care of things like only handing out each URL once and only crawling URLs of the same domain as the entry URL.
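The post doesn't include the crawling service's code. The single-process, in-memory sketch below illustrates the bookkeeping described above. The class name, method names, and retry policy are all hypothetical. A real deployment would expose this over HTTP to the Salad workers and persist its state. The `retry` method mirrors the approach from the Insights section: a URL that hit a CAPTCHA goes back on the queue so another worker (and IP) can try it.

```python
from collections import deque
from typing import Deque, Dict, List, Optional, Set, Tuple
from urllib.parse import urldefrag, urljoin, urlparse


class CrawlCoordinator:
    """Hypothetical in-memory crawl coordinator (a sketch, not Salad's service).

    Hands out each URL once, only follows links on the entry URL's domain,
    stops expanding links at max_depth, and lets failed URLs be retried.
    """

    def __init__(self, entry_url: str, max_depth: int = 2):
        self.domain = urlparse(entry_url).netloc
        self.max_depth = max_depth
        self.queue: Deque[Tuple[str, int]] = deque([(entry_url, 0)])
        self.seen: Set[str] = {entry_url}
        self.attempts: Dict[str, int] = {}
        self.pages: Dict[str, str] = {}

    def next_job(self) -> Optional[Tuple[str, int]]:
        """Return the next (url, depth) for a worker, or None if nothing is left."""
        if not self.queue:
            return None
        url, depth = self.queue.popleft()
        self.attempts[url] = self.attempts.get(url, 0) + 1
        return url, depth

    def submit(self, url: str, depth: int, html: str, links: List[str]) -> None:
        """Store a crawled page and queue any unseen same-domain links."""
        self.pages[url] = html
        if depth >= self.max_depth:
            return
        for link in links:
            # Resolve relative links and drop #fragments so duplicates collapse
            absolute, _fragment = urldefrag(urljoin(url, link))
            if urlparse(absolute).netloc != self.domain or absolute in self.seen:
                continue
            self.seen.add(absolute)
            self.queue.append((absolute, depth + 1))

    def retry(self, url: str, depth: int, max_attempts: int = 2) -> bool:
        """Re-queue a failed URL (e.g. one that hit a CAPTCHA) for another worker.

        Returns False once the URL has used up max_attempts.
        """
        if self.attempts.get(url, 0) >= max_attempts:
            return False
        self.queue.append((url, depth))
        return True


coordinator = CrawlCoordinator("https://www.example.com/", max_depth=1)
url, depth = coordinator.next_job()
coordinator.submit(url, depth, "<html>...</html>", [
    "/products/a",                                 # relative, same domain: queued
    "https://www.example.com/products/a#reviews",  # same page once the fragment is dropped: skipped
    "https://other.example.org/x",                 # different domain: skipped
])
print(coordinator.next_job())  # ('https://www.example.com/products/a', 1)
```

Domain matching here is an exact netloc comparison, so www.example.com and example.com count as different sites. Whether that's what you want depends on the target.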

Crawler

This piece is quite simple since we only need to capture the full page content, and not do any extraction at this stage. We used Playwright with Chromium, along with Playwright Stealth. The worker pulls jobs from the crawling service, and performs a simple page visit:

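```python
from playwright_stealth import stealth_async


async def process_page(browser, url: str) -> dict:
    page = await browser.new_page()
    await stealth_async(page)  # patch common headless-browser fingerprints
    print(f"Processing {url}", flush=True)
    await page.goto(url)
    try:
        # Give client-side rendering a few seconds to settle
        await page.wait_for_load_state("networkidle", timeout=5000)
    except Exception:
        pass  # If we time out, just continue with whatever has rendered
    html = await page.content()
    # Collect every link on the page so the crawling service can queue them
    links = await page.eval_on_selector_all("a", "as => as.map(a => a.href)")
    await page.close()
    return {"html": html, "links": links}
```

Search Indexing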

Chunking

Web pages are generally too large for LLMs to handle within their context limits. This means we have to split each page into chunks so that we can present the LLM with a smaller, more relevant bit of context. There are many ways to do this, and there are likely big improvements to be made over what I present here.

Pull the HTML out of the MHTML

An .mhtml file contains all the assets needed to render the page as it was at the time it was captured. This means the file likely contains media in addition to the HTML content. For this project we only need the HTML, not the media, but you could build image tagging or audio transcription pipelines in a similar way here. Because MHTML is a MIME multipart message, Python’s built-in email library provides the utilities for parsing .mhtml files.
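The `process_page` crawler shown earlier returns the rendered HTML via `page.content()`, while this stage reads .mhtml files. The post doesn't show how the snapshots were saved. One option with Playwright and Chromium is the Chrome DevTools Protocol command `Page.captureSnapshot`, sketched below as an assumption rather than the authors' method. `capture_mhtml` is a hypothetical helper name.

```python
async def capture_mhtml(page) -> str:
    """Return an MHTML snapshot of an open Playwright page (Chromium only).

    Assumption: this relies on the Chrome DevTools Protocol command
    Page.captureSnapshot, which Chromium marks as experimental.
    """
    # CDP sessions are only available for Chromium-based browsers
    cdp = await page.context.new_cdp_session(page)
    try:
        snapshot = await cdp.send("Page.captureSnapshot", {"format": "mhtml"})
    finally:
        await cdp.detach()
    return snapshot["data"]  # the whole MHTML document as a string
```

Inside `process_page`, you might call `mhtml = await capture_mhtml(page)` before `page.close()` and save the result with a `.mhtml` extension for the indexing stage.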

```python
import email
from email import policy
from typing import IO, Any, Optional, Union


def extract_html_from_mhtml(file_path: Union[str, IO[Any]]) -> Optional[str]:
    if isinstance(file_path, str):
        with open(file_path, "rb") as file:
            # Parse the .mhtml file as a MIME message
            message = email.message_from_binary_file(file, policy=policy.default)
    else:
        message = email.message_from_binary_file(file_path, policy=policy.default)

    # Iterate through the message parts
    for part in message.walk():
        # Return the first part that is an HTML document
        if part.get_content_type() == "text/html":
            return part.get_content()
    return None  # No HTML part found
```

Chunk the HTML Content

Beyond the actual text content, many sites have a lot of useful data in <meta> tags, or embedded as JSON scripts. We’re going to capture all of that, and return it in manageable chunks. We’ll use BeautifulSoup and lxml to parse the HTML content.

```python
from typing import List

from bs4 import BeautifulSoup


def chunk_html(html: str) -> List[str]:
    soup = BeautifulSoup(html, "lxml")
    meta = soup.find_all("meta")
    scripts = soup.find_all("script")
    # Keep embedded JSON scripts (e.g. type="application/ld+json") as whole chunks
    json_content = [
        tag.get_text() for tag in scripts if tag.get("type") and "json" in tag["type"]
    ]
    text = soup.get_text(separator="\n", strip=True)
    # JSON scripts and <meta> tags are kept whole; the visible text is chunked
    return json_content + [str(tag) for tag in meta] + chunk_text(text, max_tokens=256, stride=32)
```

Often the plain text content on a website is also quite large, so we want to chunk that too. We’ll use NLTK to tokenize the text by word, letting us specify a maximum number of tokens per chunk.

```python
from typing import List, Optional

import nltk
from nltk.tokenize import word_tokenize
from nltk.tokenize.treebank import TreebankWordDetokenizer

nltk.download("punkt", quiet=True)
nltk.download("punkt_tab", quiet=True)  # newer NLTK releases load this resource instead

detokenizer = TreebankWordDetokenizer()


def chunk_text(text: str, max_tokens: int = 512, stride: Optional[int] = None) -> List[str]:
    """Chunk a text into smaller pieces.

    Args:
        text (str): The text to chunk.
        max_tokens (int, optional): Max tokens per chunk. Defaults to 512.
        stride (int, optional): How many tokens each chunk should overlap with
            adjacent chunks. Defaults to max_tokens // 6.

    Returns:
        List[str]: A list of chunks.
    """
    if stride is None:
        stride = max_tokens // 6
    # Tokenize the text
    tokens = word_tokenize(text)
    # Chunk the tokens, with each chunk having a maximum of `max_tokens`
    # tokens, and overlapping front and back by `stride` tokens
    chunks = []
    for i in range(0, len(tokens), max_tokens - stride):
        chunk = tokens[i : i + max_tokens]
        chunks.append(detokenizer.detokenize(chunk))
    return chunks
```
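The window arithmetic in `chunk_text` is easy to get wrong. The dependency-free sketch below reproduces the same (start, end) token offsets without NLTK. `window_bounds` is a hypothetical helper for illustration. With the settings `chunk_html` uses (`max_tokens=256`, `stride=32`), each window starts 224 tokens after the previous one.

```python
from typing import List, Tuple


def window_bounds(num_tokens: int, max_tokens: int, stride: int) -> List[Tuple[int, int]]:
    """(start, end) token offsets produced by chunk_text's loop."""
    step = max_tokens - stride
    if step <= 0:
        # stride >= max_tokens would give range() a zero or negative step
        raise ValueError("stride must be smaller than max_tokens")
    return [(i, min(i + max_tokens, num_tokens)) for i in range(0, num_tokens, step)]


print(window_bounds(500, max_tokens=256, stride=32))
# [(0, 256), (224, 480), (448, 500)]
print(window_bounds(480, max_tokens=256, stride=32))
# [(0, 256), (224, 480), (448, 480)]
```

In the second case, the last window falls entirely inside the previous one, producing a redundant chunk. Stopping the loop once a window reaches the end of the text would avoid that.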

While it’s tempting to think of tokens as words, there are some key differences, which is why we use word_tokenize instead of simply splitting the string on whitespace.

```python
from nltk.tokenize import word_tokenize

text = "Beyond the actual text content, many sites have a lot of useful data in <meta> tags, or embedded as JSON scripts. We’re going to capture all of that, and return it in manageable chunks. We’ll use BeautifulSoup and lxml to parse the html content."
print(word_tokenize(text))
# ['Beyond', 'the', 'actual', 'text', 'content', ',', 'many', 'sites', 'have', 'a', 'lot', 'of', 'useful', 'data', 'in', '<', 'meta', '>', 'tags', ',', 'or', 'embedded', 'as', 'JSON', 'scripts', '.', 'We', '’', 're', 'going', 'to', 'capture', 'all', 'of', 'that', ',', 'and', 'return', 'it', 'in', 'manageable', 'chunks', '.', 'We', '’', 'll', 'use', 'BeautifulSoup', 'and', 'lxml', 'to', 'parse', 'the', 'html', 'content', '.']
```

Notice how the tokenizer separates out punctuation, special characters, and splits contractions. This is why we also need to use NLTK’s TreebankWordDetokenizer instead of simply re-joining with " ".

Embedding

To make our text snippets searchable with a vector database, we need to generate an embedding for each snippet. An embedding is a vector representation of the text. For this, we’re going to use 🤗Text Embeddings Inference (TEI), a performance-oriented server from Hugging Face for generating text embeddings. It can be used with many different models; we chose BAAI/bge-large-en-v1.5, which ranked very highly on the MTEB leaderboard at the time of this project.

For each chunk of text, we generate an embedding via an HTTP request to the TEI server’s /embed endpoint.

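```python
import os
from typing import List

import aiohttp

embedding_server = os.getenv("TEI_SERVER", "http://localhost:3001")


async def get_embedding(session: aiohttp.ClientSession, text: str) -> List[List[float]]:
    # TEI returns one embedding per input, so a single string yields a list holding one vector
    payload = {"inputs": text, "normalize": True, "truncate": False}
    async with session.post(f"{embedding_server}/embed", json=payload) as response:
        response.raise_for_status()  # surface server errors instead of returning an error body
        return await response.json()
```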

So, to get all of the embeddings, we can concurrently fire off all of these embedding requests:

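```python
import asyncio
from typing import List

import aiohttp


async def get_all_embeddings(chunks: List[str]) -> List[List[List[float]]]:
    async with aiohttp.ClientSession() as session:
        tasks = [get_embedding(session, chunk) for chunk in chunks]
        # gather preserves order, so embeddings[i] corresponds to chunks[i]
        return await asyncio.gather(*tasks)
```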
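Firing off every request at once is fine for a single page. A page with hundreds of chunks, or many pages processed in parallel, can flood the TEI server, though. One common pattern caps the number of in-flight requests with an `asyncio.Semaphore`. This is an option, not what the original project did. `gather_bounded` and `fake_embed` are hypothetical names, and `fake_embed` stands in for `get_embedding` so the sketch runs without a server.

```python
import asyncio
from typing import Awaitable, Callable, List, TypeVar

T = TypeVar("T")


async def gather_bounded(
    items: List[str],
    worker: Callable[[str], Awaitable[T]],
    limit: int = 8,
) -> List[T]:
    """Run worker(item) for every item, with at most `limit` calls in flight.

    Like asyncio.gather, results come back in the same order as `items`.
    """
    semaphore = asyncio.Semaphore(limit)

    async def run(item: str) -> T:
        async with semaphore:  # waits here while `limit` calls are already running
            return await worker(item)

    return await asyncio.gather(*(run(item) for item in items))


# Hypothetical stand-in for get_embedding, so the example runs without a TEI server
in_flight = 0
peak = 0


async def fake_embed(text: str) -> List[List[float]]:
    global in_flight, peak
    in_flight += 1
    peak = max(peak, in_flight)
    await asyncio.sleep(0.01)  # simulate the HTTP round trip
    in_flight -= 1
    return [[float(len(text))]]


chunks = ["a", "bb", "ccc"] * 10
embeddings = asyncio.run(gather_bounded(chunks, fake_embed, limit=4))
print(len(embeddings), peak)
```

Inside `get_all_embeddings`, you would pass `lambda chunk: get_embedding(session, chunk)` as the worker. A sensible limit depends on the GPU and on TEI's own batching settings, so treat 8 as a placeholder.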

Store Embeddings in the Vector Database

A vector database is a type of database designed specifically for handling vector data: arrays of numbers that represent complex items such as images, videos, or text as points in a high-dimensional space. These vectors are typically generated by machine learning models (such as BAAI/bge-large-en-v1.5). They place similar items close together, so they can be easily compared and searched.

One of the primary functions of a vector database is to enable efficient similarity searches. It can quickly find vectors that are closest to a given query vector based on similarity metrics like cosine similarity, Euclidean distance, etc. This is crucial in applications like recommendation systems, image retrieval, and natural language processing.
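We request normalized embeddings from TEI (`"normalize": True`), so every vector has unit length. For unit vectors, squared Euclidean distance and cosine similarity are related by ||a - b||^2 = 2 - 2 cos(a, b). Ranking by smallest Euclidean distance therefore gives the same order as ranking by highest cosine similarity. The check below uses made-up 3-dimensional vectors in place of real 1024-dimensional embeddings.

```python
import math
from typing import Dict, List


def normalize(v: List[float]) -> List[float]:
    """Scale a vector to unit length (L2 normalization)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def euclidean(a: List[float], b: List[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Toy 3-d vectors standing in for 1024-d bge-large-en-v1.5 embeddings
query = normalize([1.0, 0.2, 0.0])
docs: Dict[str, List[float]] = {
    "close": normalize([0.9, 0.3, 0.1]),
    "far": normalize([0.0, 1.0, 1.0]),
}

for vec in docs.values():
    d, c = euclidean(query, vec), cosine(query, vec)
    # For unit vectors, d^2 == 2 - 2c (up to floating-point error)
    assert math.isclose(d * d, 2 - 2 * c, abs_tol=1e-12)

by_distance = sorted(docs, key=lambda k: euclidean(query, docs[k]))  # ascending distance
by_cosine = sorted(docs, key=lambda k: cosine(query, docs[k]), reverse=True)  # descending similarity
print(by_distance, by_cosine)  # ['close', 'far'] ['close', 'far']
```

With normalized embeddings, a collection using `Distance.COSINE` would therefore rank results in the same order as the `Distance.EUCLID` collection used below.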

For our application, we used qdrant, a popular open-source vector database that powers Grok AI on X (formerly Twitter). Inserting all of our vectors is very simple:

```python
import os
from typing import List
from uuid import uuid4

from qdrant_client import QdrantClient
from qdrant_client.models import Distance, PointStruct, VectorParams

qdrant_server = os.getenv("QDRANT_SERVER", "http://localhost:6333")
qdrant_client = QdrantClient(url=qdrant_server)

# bge-large-en-v1.5 produces 1024-dimensional embeddings.
# Note: recreate_collection drops any existing "harrods" collection first.
qdrant_client.recreate_collection(
    collection_name="harrods",
    vectors_config=VectorParams(size=1024, distance=Distance.EUCLID),
)


def index_for_search(
    collection_name: str,
    chunks: List[str],
    embeddings: List[List[List[float]]],
    page_id: str,
):
    points = [
        PointStruct(
            id=str(uuid4()),
            vector=embedding[0],  # each TEI response holds one vector for its one input
            payload={"content": str(chunks[i]), "page_id": page_id},
        )
        for i, embedding in enumerate(embeddings)
    ]
    qdrant_client.upsert(collection_name=collection_name, points=points)
```
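`index_for_search` assigns a random uuid4 to every point. As a result, indexing the same page twice stores each chunk twice, and the duplicates can crowd other context out of the top search results. One alternative, not what the original code does, is to derive a deterministic UUID from the page ID and chunk index with `uuid.uuid5`. Re-indexing a page then overwrites its existing points through `upsert`. The helper name and the namespace string below are illustrative assumptions.

```python
import uuid

# Any fixed namespace works; this one is derived from an arbitrary, illustrative name
CHUNK_NAMESPACE = uuid.uuid5(uuid.NAMESPACE_URL, "web-dragon/chunks")


def chunk_point_id(page_id: str, chunk_index: int) -> str:
    """Stable point ID: the same page and chunk position always map to the same UUID."""
    return str(uuid.uuid5(CHUNK_NAMESPACE, f"{page_id}#{chunk_index}"))


page = "Moncler x adidas Originals NMD Padded Boots _ Harrods US.mhtml"
a = chunk_point_id(page, 0)
b = chunk_point_id(page, 0)
c = chunk_point_id(page, 1)
print(a == b, a == c)  # True False
```

In `index_for_search`, you would pass `id=chunk_point_id(page_id, i)` instead of `str(uuid4())`. If a page later produces fewer chunks, its leftover higher-index points would still need to be deleted.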

Then, when we want to search the database, we convert our query to an embedding, and perform the search:

```python
import requests
from qdrant_client.models import FieldCondition, Filter, MatchValue


def search_page(query: str, collection_name: str, page_id: str, limit: int = 5):
    # Embed the query with the same TEI model used for the chunks
    query_embedding = requests.post(
        f"{embedding_server}/embed",
        json={"inputs": query, "normalize": True, "truncate": False},
    ).json()[0]
    # Search only among chunks that belong to this page
    search_result = qdrant_client.search(
        collection_name=collection_name,
        query_vector=query_embedding,
        query_filter=Filter(
            must=[FieldCondition(key="page_id", match=MatchValue(value=page_id))]
        ),
        limit=limit,
    )
    return search_result
```

LLM Inference

At this stage, all of our web content has been prepared for analysis by the Large Language Model. To serve the model, we’re going to use 🤗Text Generation Inference (TGI), a performance-oriented inference server for text generation, from Hugging Face. The model we’ll use is TheBloke/zephyr-7B-beta-AWQ, a 4-bit quantized version of HuggingFaceH4/zephyr-7b-beta, which is itself a fine-tuned version of mistralai/Mistral-7B-v0.1.

As mentioned before, a full website is generally too large (too many tokens) for an LLM to work with in its entirety. Our challenge is to ask the LLM questions and present it with relevant context that it can use to answer them. This is where our previous efforts to chunk the site and index it come into play. For each field we want to extract, we first query our vector database to pull relevant chunks, and then pass those chunks to the LLM along with our question. This technique is known as Retrieval-Augmented Generation, or RAG.

Retrieving Context

To retrieve the relevant context, we’ll use the search_page function we defined above. The function converts our query into an embedding vector and then uses qdrant to perform a vector similarity search, filtered to the matching page_id so that we only get data from the specific page we’re working with. For example, let’s find the name of a product:

```python
from process_page import search_page

search_results = search_page(
    "What is the name of the product?",
    "harrods",
    "Moncler x adidas Originals NMD Padded Boots _ Harrods US.mhtml",
)
# [ScoredPoint(id='ace2de7f-4a9d-4e5b-a7b4-ec436fac3c40', version=1, score=0.88206077, payload={'content': '<meta content="new" data-react-helmet="true" property="product:condition"/>', 'page_id': 'Moncler x adidas Originals NMD Padded Boots _ Harrods US.mhtml'}, vector=None),
#  ScoredPoint(id='f26f30cd-6145-419f-8412-b874606cd67a', version=1, score=0.89767003, payload={'content': '<meta content="Moncler 999 x adidas Originals NMD Padded Boots . Earn Rewards points when you shop and gain access to exclusive benefits." data-react-helmet="true" name="description"/>', 'page_id': 'Moncler x adidas Originals NMD Padded Boots _ Harrods US.mhtml'}, vector=None),
#  ScoredPoint(id='c5fa7788-d6fa-4d09-8cbe-16354167e14b', version=1, score=0.90550584, payload={'content': '<meta content="#ffffff" name="theme-color"/>', 'page_id': 'Moncler x adidas Originals NMD Padded Boots _ Harrods US.mhtml'}, vector=None),
#  ScoredPoint(id='cc582f7c-8630-4e33-9406-1158b9de3ecf', version=1, score=0.9093299, payload={'content': '<meta content="product" data-react-helmet="true" property="og:type"/>', 'page_id': 'Moncler x adidas Originals NMD Padded Boots _ Harrods US.mhtml'}, vector=None),
#  ScoredPoint(id='99a652d9-82db-456a-b5d9-a3171d776549', version=1, score=0.9097151, payload={'content': '<meta content="Moncler 999 x adidas Originals NMD Padded Boots . Earn Rewards points when you shop and gain access to exclusive benefits." data-react-helmet="true" property="og:description"/>', 'page_id': 'Moncler x adidas Originals NMD Padded Boots _ Harrods US.mhtml'}, vector=None)]
```

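What we get back is a list of ScoredPoint objects, each corresponding to a chunk of text we’ve indexed about this page. The collection was created with Distance.EUCLID, so each score is a distance. Lower means more similar, which is why the scores increase down the list. However, LLMs don’t work with ScoredPoint objects; they work with text, so we need to convert these results into plain text.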

```python
searched_content = [
    search_result.payload["content"] for search_result in search_results
]
context = "\n-----------------\n".join(searched_content)
print(context)
```

```text
<meta content="new" data-react-helmet="true" property="product:condition"/>
-----------------
<meta content="Moncler 999 x adidas Originals NMD Padded Boots . Earn Rewards points when you shop and gain access to exclusive benefits." data-react-helmet="true" name="description"/>
-----------------
<meta content="#ffffff" name="theme-color"/>
-----------------
<meta content="product" data-react-helmet="true" property="og:type"/>
-----------------
<meta content="Moncler 999 x adidas Originals NMD Padded Boots . Earn Rewards points when you shop and gain access to exclusive benefits." data-react-helmet="true" property="og:description"/>
```

Now we have a bit of text content that can be processed by the LLM. Importantly, we can also see that this content does include the correct answer to the question we’re asking.

Prompt Engineering

Prompt engineering is the process of crafting effective input prompts to guide the behavior of AI models. Key aspects of prompt engineering include:

Clear Instructions: Providing detailed, clear instructions in the prompt to guide the AI towards the desired type of response or output. This includes specifying the format, tone, and context of the response.

Contextual Information: Including relevant background information or context in the prompt to help the AI understand the task and generate more accurate and relevant responses.

Creativity and Experimentation: Experimenting with different prompt styles and structures to find what works best for a given model and task. This often involves a trial-and-error approach.

Understanding Model Capabilities: Being aware of the strengths and limitations of the AI model to craft prompts that leverage its capabilities while avoiding known pitfalls.

Optimizing for Desired Outcomes: Adjusting the prompt to optimize for specific outcomes, such as creativity, factual accuracy, brevity, or detailed explanations.

Knowing the prompt format that the model was trained on is critical for getting good results from LLMs. When you’re using 🤗 Transformers directly, a helper function, Tokenizer.apply_chat_template, takes a standardized input format and renders it into the correct prompt format for any given model. Since we aren’t using transformers directly, we will instead render the underlying Jinja template ourselves, the same way transformers would. For Zephyr, a chat model, that template looks like this:

```jinja
{% for message in messages %}
{% if message['role'] == 'user' %}
{{ '<|user|>\n' + message['content'] + eos_token }}
{% elif message['role'] == 'system' %}
{{ '<|system|>\n' + message['content'] + eos_token }}
{% elif message['role'] == 'assistant' %}
{{ '<|assistant|>\n' + message['content'] + eos_token }}
{% endif %}
{% if loop.last and add_generation_prompt %}
{{ '<|assistant|>' }}
{% endif %}
{% endfor %}
```

I’ve got this template saved in ./templates/chat.jinja so I can load it for use like this:

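```python
from jinja2 import Environment, FileSystemLoader, select_autoescape

# trim_blocks/lstrip_blocks match how transformers renders chat templates,
# so the {% ... %} tags don't leave stray newlines in the prompt.
# select_autoescape() only escapes .html/.htm/.xml templates by default,
# so the HTML snippets in our context pass through unescaped.
env = Environment(
    loader=FileSystemLoader("templates"),
    autoescape=select_autoescape(),
    trim_blocks=True,
    lstrip_blocks=True,
)
chat_template = env.get_template("chat.jinja")
```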
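To make the template's output concrete, here is a plain-Python function that mirrors what the Zephyr template above produces when rendered with `trim_blocks` and `lstrip_blocks`, as transformers does. It's a hypothetical helper for checking prompts by eye, not a replacement for the template, and it assumes Zephyr's end-of-sequence token is `</s>`.

```python
from typing import Dict, List


def render_zephyr(
    messages: List[Dict[str, str]],
    add_generation_prompt: bool = True,
    eos_token: str = "</s>",
) -> str:
    """Plain-Python mirror of the Zephyr chat template."""
    parts = []
    for message in messages:
        if message["role"] in ("user", "system", "assistant"):
            # Each turn: role tag, newline, content, EOS token, newline
            parts.append(f"<|{message['role']}|>\n{message['content']}{eos_token}\n")
    if add_generation_prompt and messages:
        # Open an assistant turn so the model knows it should answer next
        parts.append("<|assistant|>\n")
    return "".join(parts)


print(render_zephyr([
    {"role": "system", "content": "Answer with JSON."},
    {"role": "user", "content": "What is the name of the product?"},
]))
```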

For our purposes, we’re looking to craft a prompt that facilitates question answering on our retrieved context:

```python
def get_extraction_prompt(prompt: str, context: str) -> str:
    chat = [
        {
            "role": "system",
            "content": "extract the most relevant product details from web snippets for use by downstream applications. Always answer with ONLY a JSON object that has ONLY a single key. Never include any other information in your answer. Ensure that the content of the answer makes sense in the context of the question.",
        },
        {"role": "user", "content": prompt + "\n\n" + context},
    ]
    # Zephyr's end-of-sequence token is "</s>"
    prompt = chat_template.render(
        messages=chat, add_generation_prompt=True, eos_token="</s>"
    )
    return prompt
```

The first part of our prompt is the system prompt. This gives the LLM general guidelines on how it should behave, how it should present answers, and so on. Here we’ve told it its task: extract the most relevant product details from web snippets for use by downstream applications. We’ve also told it how to return the answer: as a single-key JSON object, e.g. {"name": "Puma Speedcat"}.

The second part of our prompt is the user prompt, the specific task we want the LLM to complete. It is made up of the question being asked and the retrieved context that should contain the answer.

By specifying add_generation_prompt=True, we tell our template to end our prompt with the <|assistant|> tag that lets the LLM know it’s time to respond.

Using our question and context from the previous section, we get the following final prompt:

```text
<|system|>
extract the most relevant product details from web snippets for use by downstream applications. Always answer with ONLY a JSON object that has ONLY a single key. Never include any other information in your answer. Ensure that the content of the answer makes sense in the context of the question.</s>
<|user|>
What is the name of the product?

<meta content="new" data-react-helmet="true" property="product:condition"/>
-----------------
<meta content="Moncler 999 x adidas Originals NMD Padded Boots . Earn Rewards points when you shop and gain access to exclusive benefits." data-react-helmet="true" name="description"/>
-----------------
<meta content="#ffffff" name="theme-color"/>
-----------------
<meta content="product" data-react-helmet="true" property="og:type"/>
-----------------
<meta content="Moncler 999 x adidas Originals NMD Padded Boots . Earn Rewards points when you shop and gain access to exclusive benefits." data-react-helmet="true" property="og:description"/></s>
<|assistant|>
```

Now, we can send this to our TGI server:

```python
import os
from typing import Optional

import requests

generation_server = os.getenv("TGI_SERVER", "http://localhost:3000")


def generate(prompt: str, generate_params: Optional[dict] = None) -> str:
    payload = {"inputs": prompt, "parameters": generate_params or {}}
    response = requests.post(f"{generation_server}/generate", json=payload).json()
    if "generated_text" not in response:
        # TGI returns an error object instead of text, e.g. on a validation error
        raise RuntimeError(f"Unexpected TGI response: {response}")
    return response["generated_text"]


answer = generate(
    prompt,
    generate_params={
        "best_of": 1,
        "stop": ["}"],  # stop as soon as the single-key JSON object is closed
        "temperature": 0.1,
        "max_new_tokens": 64,
    },
)
answer = answer.strip()
```

And we get back the correct answer:

```json
{
  "name": "Moncler 999 x adidas Originals NMD Padded Boots"
}
```

This is great for a simple example, but LLMs are far from perfect, and we can’t assume every answer we get back will be in the exact format we want. We might get back JSON with more than one key, a partial JSON object that doesn’t parse, or any number of other invalid responses. We need a second prompt that rewrites these kinds of outputs as plain prose.

```python
def get_normalize_prompt(prompt: str) -> str:
    chat = [
        {
            "role": "system",
            "content": "Rewrite the content as prose. Include all of the details present. Use a neutral, professional tone, and speak only about the product.",
        },
        {"role": "user", "content": prompt},
    ]
    # Zephyr's end-of-sequence token is "</s>"
    prompt = chat_template.render(
        messages=chat, add_generation_prompt=True, eos_token="</s>"
    )
    return prompt
```
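Some malformed answers are valid JSON surrounded by extra text. Salvaging those first can save a second LLM call. This is an optional addition, not part of the original pipeline. `json.JSONDecoder.raw_decode` parses a JSON value starting at a given index and ignores whatever follows it. `first_json_object` is a hypothetical helper name.

```python
import json
from typing import Optional


def first_json_object(text: str) -> Optional[dict]:
    """Return the first parseable JSON object embedded in text, or None."""
    decoder = json.JSONDecoder()
    start = text.find("{")
    while start != -1:
        try:
            value, _end = decoder.raw_decode(text, start)
            return value  # parsing from "{" can only succeed with a dict
        except json.JSONDecodeError:
            start = text.find("{", start + 1)  # try the next opening brace
    return None


print(first_json_object('Sure! Here it is: {"price": 637} Hope that helps.'))  # {'price': 637}
print(first_json_object('{"name": "Moncler 999'))  # None
```

Because generation stops at the first `}`, nested objects are cut short and won't parse here either. Those cases still need the normalization prompt.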

Putting It Together: LLM Question Answering

We can take everything we’ve built so far in this section to make a new function, ask_question(), that returns the answer to a question.

```python
import json
from typing import Any, Optional


def ask_question(
    question: str,
    page_id: str,
    limit: int = 5,
    max_tokens: int = 256,
    search: Optional[str] = None,
    collection_name: str = "harrods",
) -> Any:
    """Ask a question about a page.

    Args:
        question (str): The question to ask.
        page_id (str): The page ID to ask the question about.
        limit (int, optional): How many search results to use for context. Defaults to 5.
        max_tokens (int, optional): The maximum number of tokens that the answer should contain. Defaults to 256.
        search (str, optional): Optionally, use a separate search query from your LLM question. Defaults to `question`.
        collection_name (str, optional): The name of the collection in qdrant. Defaults to "harrods".

    Returns:
        The answer: the value of the single JSON key if the LLM answered as
        requested, otherwise the answer rewritten as a prose string.
    """
    if search is None:
        search = question
    search_results = search_page(search, collection_name, page_id, limit=limit)
    if len(search_results) == 0:
        return ""
    searched_content = [
        search_result.payload["content"] for search_result in search_results
    ]
    context = "\n-----------------\n".join(searched_content)
    prompt = get_extraction_prompt(question, context)
    raw_answer = generate(
        prompt,
        generate_params={
            "best_of": 1,
            "stop": ["}"],
            "temperature": 0.1,
            "max_new_tokens": max_tokens,
        },
    ).strip()

    try:
        # Hopefully the answer is valid JSON as requested
        answer = json.loads(raw_answer)
    except json.JSONDecodeError:
        answer = None

    if isinstance(answer, dict) and len(answer) == 1:
        # And hopefully it only has one key
        return next(iter(answer.values()))

    # Otherwise (invalid JSON, more than one key, or not an object),
    # normalize the raw answer back into prose
    prompt = get_normalize_prompt(raw_answer)
    return generate(
        prompt,
        generate_params={
            "best_of": 1,
            "temperature": 0.2,
            "max_new_tokens": max_tokens,
        },
    ).strip()
```

This gives us a simple interface for asking questions about a page:

```python
page_id = "Moncler x adidas Originals NMD Padded Boots _ Harrods US.mhtml"
collection_name = "harrods"

name = ask_question(
    "What is the name of the product?",
    page_id,
    max_tokens=64,
)
print(name)
# Moncler 999 x adidas Originals NMD Padded Boots
```

Now, we can just repeat this for all of the information we want to extract.

```python
def extract_from_page(page_id: str) -> dict:
    name = ask_question(
        "What is the name of the product?",
        page_id,
        max_tokens=64,
    )
    # Later questions include the product name to give the search and the LLM more specific context
    price = ask_question(f"What is the price of {name}", page_id, max_tokens=16)
    currency = ask_question(
        f"What currency is the price of {name} ({price})", page_id, max_tokens=16
    )
    description = ask_question(
        f"Description of {name}, or features", page_id, limit=7, max_tokens=512
    )
    is_available_online = ask_question(
        f"Is {name} available to purchase online? Answer only yes or no.",
        page_id,
        max_tokens=16,
    )
    manufacturer = ask_question(
        f"Who is the manufacturer (or manufacturers) of {name}? Answer as a string, even if there's multiple",
        page_id,
        max_tokens=64,
    )
    return {
        "name": name,
        "manufacturer": manufacturer,
        "price": price,
        "currency": currency,
        "description": description,
        "is_available_online": is_available_online,
    }
```

This gives us a nice, JSON-serializable output with all of the data we want:

```json
{
  "name": "Moncler 999 x adidas Originals NMD Padded Boots",
  "manufacturer": "Moncler, adidas Originals",
  "price": 637,
  "currency": "USD",
  "description": "The Moncler x adidas Originals NMD Padded Boots are a collaboration between the two renowned brands. These boots are designed with a focus on functionality and style, featuring a unique combination of materials. The lining is made of other materials, providing added comfort and warmth, while the sole is also crafted from other materials. These boots are currently available for personal shopping and in-store purchase only, as they are not currently available for online purchase.",
  "is_available_online": false
}
```
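Each field comes straight from the LLM, so its type isn't guaranteed. The same yes/no question might come back as `false`, `"No."`, or a short sentence, and a price might arrive as `637` or `"$637.00"`. A small post-processing step can normalize these before the data goes downstream. The helpers below are a hypothetical sketch, not part of the original pipeline, and their matching rules are assumptions you'd tune per site. For example, `to_price` doesn't handle European-style `6.953,00` formatting.

```python
import re
from typing import Any, Optional


def to_bool(value: Any) -> Optional[bool]:
    """Map yes/no-style LLM answers to a bool; None if it can't be decided."""
    if isinstance(value, bool):
        return value
    text = str(value).strip().lower()
    if re.match(r"(yes|true)\b", text):
        return True
    if re.match(r"(no|false)\b", text):
        return False
    return None


def to_price(value: Any) -> Optional[float]:
    """Pull the first number out of answers like 637, "637", or "$6,953.00"."""
    if isinstance(value, (int, float)) and not isinstance(value, bool):
        return float(value)
    match = re.search(r"\d[\d,]*(?:\.\d+)?", str(value))
    return float(match.group().replace(",", "")) if match else None


print(to_bool("No."), to_bool(True), to_price("$6,953.00"))  # False True 6953.0
```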

Final Workflow

With all of our steps wrapped up neatly in functions, we can see the simple data flow outlined by the architecture diagram.

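```python
import asyncio
import json


async def get_embedding_from_mhtml(file_path: str):
    # Assumed implementation: the post uses this helper without showing it,
    # so this simply chains the functions defined above
    html = extract_html_from_mhtml(file_path)
    chunks = chunk_html(html)
    embeddings = await get_all_embeddings(chunks)
    return embeddings, chunks


async def main():
    # We've got all of our page content from the crawl, in .mhtml format
    html_files = [
        "Moncler x adidas Originals NMD Padded Boots _ Harrods US.mhtml",
        "Anita Ko Yellow Gold and Diamond Huggie Hoop Earrings _ Harrods US.mhtml",
    ]

    # We make the content searchable
    for html_file in html_files:
        embeddings, chunks = await get_embedding_from_mhtml("pages/" + html_file)
        index_for_search("harrods", chunks=chunks, embeddings=embeddings, page_id=html_file)

    # Then we extract our data
    for html_file in html_files:
        data = extract_from_page(html_file)
        print(json.dumps(data, indent=2))


asyncio.run(main())
```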

```json
{
  "name": "Moncler 999 x adidas Originals NMD Padded Boots",
  "manufacturer": "Moncler, adidas Originals",
  "price": 637,
  "currency": "USD",
  "description": "The Moncler x adidas Originals NMD Padded Boots are a collaboration between two renowned brands in the fashion and sportswear industries. These boots feature a unique combination of materials for both the lining and sole, which are both made of other materials. The availability of these boots is limited, as they are currently only available for personal shopping in-store, with no online option available at this time. However, customers who prefer to shop in person can still find these boots at select retail locations.",
  "is_available_online": false
}
```

```json
{
  "name": "Anita Ko Yellow Gold Yellow Gold and Diamond Huggie Hoop Earrings",
  "manufacturer": "Anita Ko",
  "price": 6953,
  "currency": "USD",
  "description": "The Anita Ko Yellow Gold and Diamond Huggie Hoop Earrings are a luxurious accessory crafted from high-quality materials. Made of 18kt yellow gold, these earrings feature sparkling pear-cut diamonds with a total weight of 0.70ct. As a valued customer, you can enjoy the benefits of earning Rewards points with every purchase and gain access to exclusive benefits. The Anita Ko brand is renowned for its exquisite jewelry designs, and these earrings are a testament to their commitment to excellence.",
  "is_available_online": true
}
```

About Salad Cloud

Salad is the world’s most affordable GPU cloud. Our platform lets PC gamers around the world rent out their PCs when not in use. By utilizing this massive distributed pool of latent computing power, we can run AI and other compute-intensive workloads at much lower prices than legacy cloud providers. The distributed nature of the network is also a perfect fit for use cases such as web scraping, where device fingerprinting is a concern.
