Hands On #4: Testing the new Smartproxy Site Unblocker

Is the new Smartproxy's Site Unblocker capable to defeat Cloudflare, Datadome and other anti-bot solutions?

Hi everyone, this is a new series of posts from The Web Scraping Club, where I will try out products related to web scraping and make a sort of review about it. I hope this helps you to evaluate products before spending some money and time on testing them. Feel free to write me at pier@thewebscraping.club with any feedback and if you want me to test other products or solutions.

These Hands On episodes are not sponsored and the ideas expressed are my own, backed by quantitative tests, which change from the kind of product I’m testing. There might be some affiliate links in the article, which helps The Web Scraping Club be free and able to test even paid solutions.

July is the Smartproxy month on The Web Scraping Club. For the whole month, following this link, you can use the discount code SPECIALCLUB and get a massive 50% off on any proxy and scraper subscription.

During the past weeks, Smartproxy added to the available services also a brand new API, called Site Unblocker, which promises to make our life easier when it comes to web scraping. Let’s see together how it works and if it passes our anti-bot tests.

What is Site Unblocker

Smartproxy, following the latest trend in the industry, launched its “super API”, called Site Unblocker. Basically, with only one API call, you have in your scrapers:

proxy management and rotation

geo-targeting options

javascript rendering

fingerprint management

sessions management

Let’s stress it to find out its potential!

Our testing methodology

As we did to the other “unblocker” API, we’ll use a plain Scrapy spider that retrieves 10 pages from 5 different websites, one per each anti-bot solution tested (Datadome, Cloudflare, Kasada, F5, PerimeterX). It returns the HTTP status code, a string from the page (needed to check if the page was loaded correctly), the website, and the anti-bot names.

The base scraper is unable to retrieve any record correctly, so the benchmark is 0.

As a result of the test, we’ll assign a score from 0 to 100, depending on how many URLs are retrieved correctly on two runs, one in a local environment and the other one from a server. A score of 100 means that the anti-bot was bypassed for every URL given in input in both tests, while our starting scraper has a score of 0 since it could not avoid an anti-bot for any of the records.

You can find the code of the test scraper in our GitHub repository open to all our readers.

Preparing for the test

First of all, you need to create an account on Smartproxy’s website and then look for the Site Unblocker plans. As mentioned before, since this is the Smartproxy Month, you’ll get a 50% off discount on every plan using the code SPECIALCLUB.

After the plan is activated, you can start playing around on the website to test some URLs manually.

Clicking on the “Advanced parameters” you have more details and options to customize your request and see, at the bottom, how the request changes accordingly.

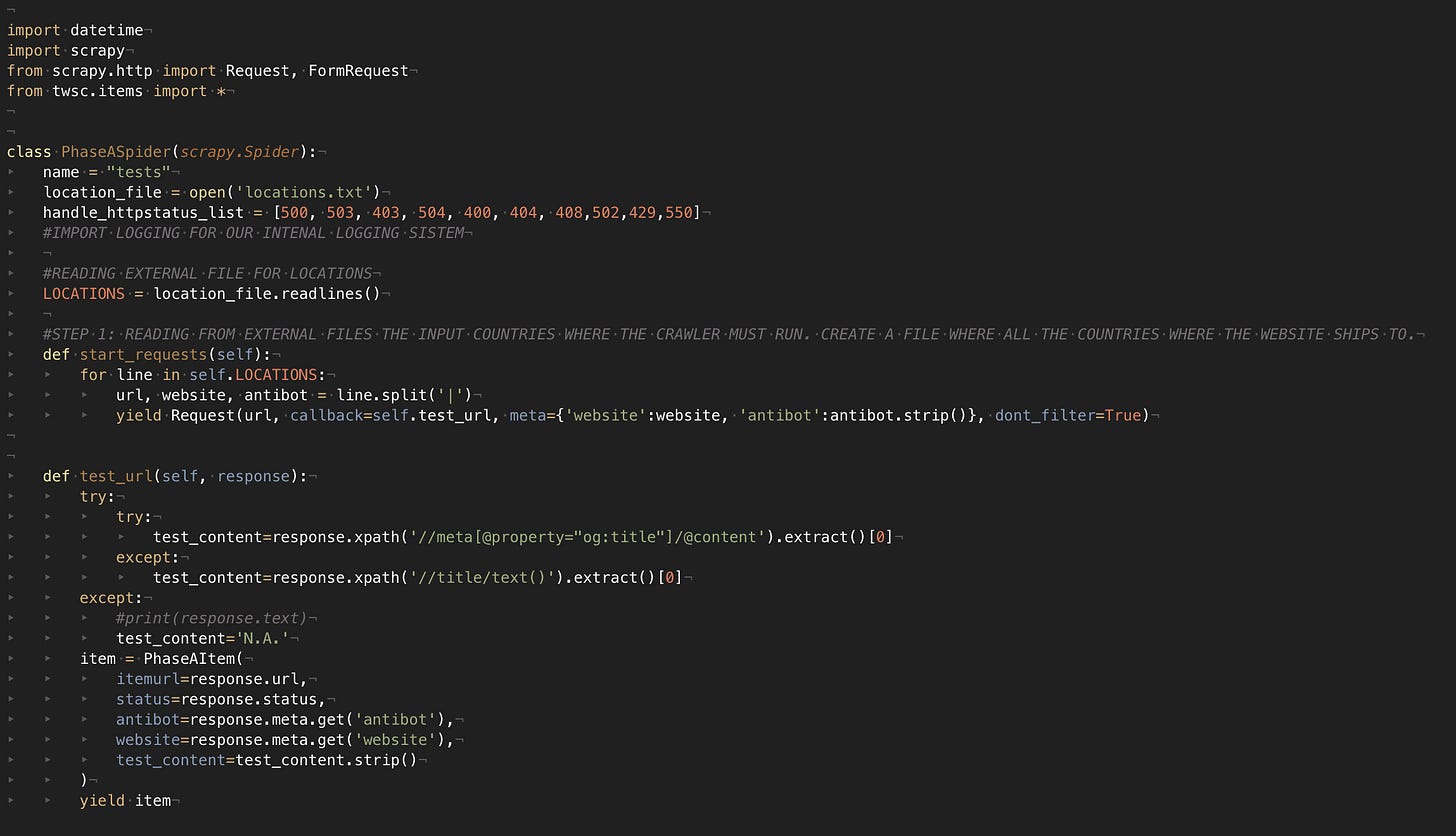

Setting up the Scrapy scraper

As said before, I’ve manually chosen fifty URLs, ten per website, as a benchmark and input for our Scrapy spider.

The scraper basically returns the Antibot and website names, given in input, the return code of the request, and a field populated with an Xpath selector, to be sure that we entered the product page and were not blocked by some challenge.

There’s no particular configuration to apply to the scraper, only the call to a proxy in the settings.py file.

You can find anyway the full code of the scraper on the free GitHub repository of The Web Scraping Club.

First run: no Smartproxy’s Site Unblocker

With this run, we’re setting the baseline, so we’re running a Scrapy spider without the site unblocker.

As expected, the results after the first run are the following.

Basically, every website returned errors except Nordstrom, which returned the code 200 but without showing the product we requested.

Second run: using the Site Unblocker with only raw HTML requested

In this run, we’re trying the Site Unblocker without any additional headers to see if this is enough to bypass all the anti-bot challenges.

Having made all the setup in the settings.py file, the scraper is pretty basic.

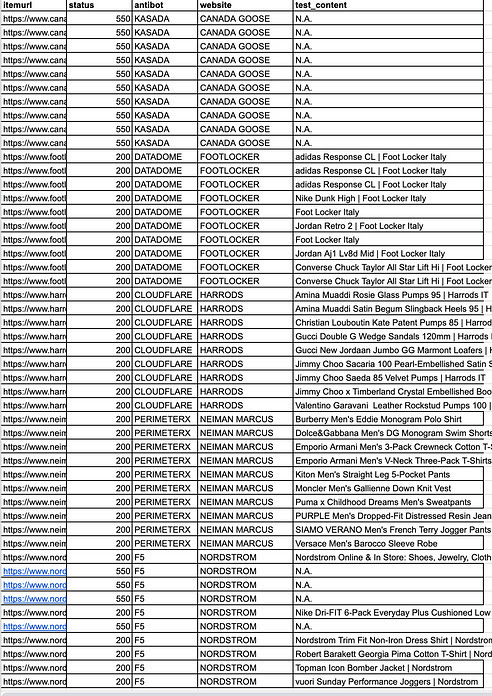

Here are the results.

While the solution works at 100% against Datadome, Cloudflare, and PerimeterX, we have mixed results against F5, but it’s something that can be solved with some retries, while Kasada seems that cannot be bypassed.

Third run: using Site Unblocker with Javascript rendering

To enable the browser rendering, the only thing to do is to review the requests parameter as follows.

Here are the results, similar to the previous run.

Final remarks

Smartproxy’s site unblocker has just been released and has margins for improvements but it’s already performing well. Except for Kasada, all major anti-bots are easily bypassed with this solution

Pros

Integration with Scrapy is straight-forward

100% success rate versus most famous anti-bots

Tweaking headers you can use advanced options like sticky sessions, geotargeting of the IPs, or custom cookies.

The price of 12$ per GB is one of the lowest for this kind of solution

Cons

Kasada actually not supported

There’s no pay-per-usage plan but only subscriptions, even if they start from really low, like 28$ per month for two GB.

Rating

The Smartproxy Site Unblocker is a brand new solution and the sins of its youth could be forgiven. It’s not that easy to bypass all the most known anti-bot solutions right from the start and the tweaks for scraping Kasada could come in the next months.

For today, its score is 80/100, a good result! In case you’re interested, you can try it on Smartproxy’s website and, using the code SPECIALCLUB you can get a 50% off discount.