How Can Multi-Accounting Browsers Help with Web Scraping?

A real-world use case with Octo Browser

Today, with our partner Octo Browser, we’re talking more about the benefits of using an anti-detect browser in web scraping operations.

Fingerprinting, along with other anti-scraping techniques such as rate limiting, geo-blocking, WAFs, challenges, and CAPTCHAs, exists to protect websites from automated interactions. An anti-detect multi-accounting browser with a sophisticated fingerprint spoofing system helps bypass these protection systems. As a result, the efficiency of web scraping improves: data collection becomes faster and more reliable.

Together with the Octo Browser Team, let’s talk about how multi-accounting browsers work, how they can assist in web scraping, and what additional tools they are best used with.

What is a Fingerprint?

Many websites and services identify users based on information about their device, browser, and connection. This combined dataset is called a digital fingerprint. Based on the fingerprint information, website protection systems determine if a user should be treated as suspicious.

The specific set of analyzed parameters can vary depending on the protection system, but a browser can provide quite a lot of information if asked for it.

You can also ask the browser to create a simple 2D or 3D image, and generate a hash based on the information about how the device performed this task. This hash will distinguish this device from other website visitors: this is how hardware fingerprinting through Canvas and WebGL works.
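The hashing step can be sketched in Python. This is a simplified illustration, not how any particular protection system works: the pixel buffers are made-up stand-ins for what a real Canvas or WebGL render would return, and `render_hash` is a hypothetical helper.

```python
import hashlib


def render_hash(pixels: bytes) -> str:
    """Return a short hex digest of the raw pixel data of a rendered image."""
    return hashlib.sha256(pixels).hexdigest()[:16]


# Hypothetical render output for the same drawing commands on two devices.
# Device B's GPU/driver rounds one anti-aliased pixel value differently.
device_a = bytes([255, 0, 0, 255, 128, 128, 128, 255])
device_b = bytes([255, 0, 0, 255, 128, 127, 128, 255])

# The same device produces the same hash on every visit...
print(render_hash(device_a) == render_hash(device_a))
# ...while a one-value difference in the render yields a different hash.
print(render_hash(device_a) == render_hash(device_b))
```

Even a tiny rendering difference changes the whole digest, which is why such hashes are useful for telling devices apart.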

You can find out what your browser fingerprint looks like using special checkers, such as Pixelscan, BrowserLeaks, Whoer, and CreepJS.

Changing only a few of the parameters that your browser reports to a website's security system will not prevent it from recognizing an already familiar user. You can change your browser, timezone, or screen resolution, but unless you change all of them at once, the likelihood of identification remains high.
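To see why a partial change is not enough, here is a minimal sketch that compares two fingerprints attribute by attribute. The attribute names and values are invented for illustration, and real protection systems use far more parameters and more sophisticated matching than this simple share of equal fields.

```python
def matching_share(known: dict, seen: dict) -> float:
    """Fraction of attributes in `known` that have the same value in `seen`."""
    same = sum(1 for key, value in known.items() if seen.get(key) == value)
    return same / len(known)


# Hypothetical fingerprint of a visitor the site has already seen.
known_visitor = {
    "user_agent": "Chrome/124 Windows",
    "timezone": "Europe/Berlin",
    "screen": "1920x1080",
    "canvas_hash": "a3f1",
    "webgl_renderer": "ANGLE (NVIDIA)",
    "fonts_hash": "9c2e",
}

# The same device comes back with only the timezone changed.
returning_visitor = dict(known_visitor, timezone="America/New_York")

# Five of the six attributes still match, so the visitor remains recognizable.
print(matching_share(known_visitor, returning_visitor))
```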

How do multi-accounting browsers work?

To successfully scrape any website on a large scale, you will need three levels of masking your actions. You will need to:

Substitute your IP address for one that will not raise suspicions;

Spoof your digital fingerprint to hide the fact that the work is being performed on a single device;

Create a parser bot that will not trigger the security systems with its behavior or actions.

The role of multi-accounting (also known as anti-detect) browsers in bypassing website security systems lies in spoofing (substituting) the digital fingerprint. Using such browsers, you can create multiple browser profiles, virtual copies of the browser, isolated from each other and having their own sets of characteristics and settings: cookies, browser history, extensions, proxies, and fingerprint parameters. Each browser profile appears as a separate user to website security systems.
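Conceptually, a browser profile can be thought of as a self-contained record. The sketch below is only a mental model: the `BrowserProfile` class and its fields are hypothetical and do not reflect the actual profile format of Octo Browser or any other product.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class BrowserProfile:
    """Illustrative model of an isolated browser profile (hypothetical)."""
    name: str
    proxy: Optional[str] = None
    fingerprint: dict = field(default_factory=dict)
    cookies: list = field(default_factory=list)
    extensions: list = field(default_factory=list)


profile_a = BrowserProfile(
    name="profile-a",
    proxy="http://user:pass@us.proxy.example:8000",  # placeholder address
    fingerprint={"timezone": "America/Chicago", "screen": "1920x1080"},
)
profile_b = BrowserProfile(
    name="profile-b",
    proxy="http://user:pass@de.proxy.example:8000",  # placeholder address
    fingerprint={"timezone": "Europe/Berlin", "screen": "1366x768"},
)

# State stored in one profile does not leak into the other.
profile_a.cookies.append({"name": "session", "value": "abc"})
print(profile_b.cookies)  # profile_b's cookie list is still empty
```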

Spoofing in multi-accounting browsers can be implemented in various ways. Many low-quality anti-detection tools perform spoofing by injecting JavaScript initialization scripts into the browser context, which makes profiles created in such browsers easier to notice. More professional tools, such as Octo Browser, perform spoofing at the browser kernel level, which makes them significantly harder to detect.

How to Do Web Scraping with Multi-Accounting Browsers?

Anti-detection browsers typically offer automation capabilities through the Chrome DevTools Protocol (CDP), which lets you automate the actions required for web scraping through programming interfaces. For convenience, you can use open-source libraries and frameworks such as Puppeteer, Playwright, Selenium, and others.
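As a rough sketch of what this looks like in practice, the snippet below attaches Playwright to an already running browser profile over CDP. It assumes that the profile exposes a local debugging port (9222 in the usage note is just a placeholder) and that Playwright is installed; how you start a profile and obtain its endpoint depends on the browser's own API, which is not shown here. The helper names `cdp_http_endpoint` and `fetch_title` are hypothetical.

```python
def cdp_http_endpoint(port: int, host: str = "127.0.0.1") -> str:
    """Build the local CDP endpoint URL for a running profile (assumed layout)."""
    return f"http://{host}:{port}"


def fetch_title(endpoint: str, url: str) -> str:
    """Attach to a running browser over CDP, open `url`, and return its title."""
    # Imported here so the module loads even where Playwright is not installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.connect_over_cdp(endpoint)
        # Reuse the profile's existing context so that its cookies, proxy,
        # and fingerprint settings apply to the new page.
        context = browser.contexts[0] if browser.contexts else browser.new_context()
        page = context.new_page()
        page.goto(url)
        title = page.title()
        page.close()
        return title
```

With a profile listening on the placeholder port, `fetch_title(cdp_http_endpoint(9222), "https://example.com")` would return the page title. Attaching to the running profile, rather than launching a fresh browser, is what keeps the spoofed fingerprint in effect.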

To choose a suitable multi-accounting browser, you need to test it on the platforms you will be working with. Many such browsers offer a trial period or free profiles.

Octo Browser does not offer a trial period, but specifically for the readers of this article, we will provide a 4-day trial period of the Base subscription with the promo code DATABOUTIQUE. You can find all the necessary documentation to get started here and detailed instructions for working with the API here.

When choosing a multi-accounting browser for web scraping, pay attention to the following characteristics:

Spoofing quality, which you can test yourself or with checkers;

Availability of detailed documentation;

Browser stability and reliability;

Frequency and promptness of browser kernel updates;

API capabilities;

Costs of profiles;

Availability of technical support.

Depending on your tasks, you might also need to consider the browser's functionality, price, ease of use, the service's reputation, and its general applicability for scraping: whether an API is available and whether one-time or persistent profiles can be created.

If scraping needs to run on a server, it is advisable to use a Linux distribution. Running on a server may be more efficient than running on home machines, as data centers have higher network bandwidth and more resources.

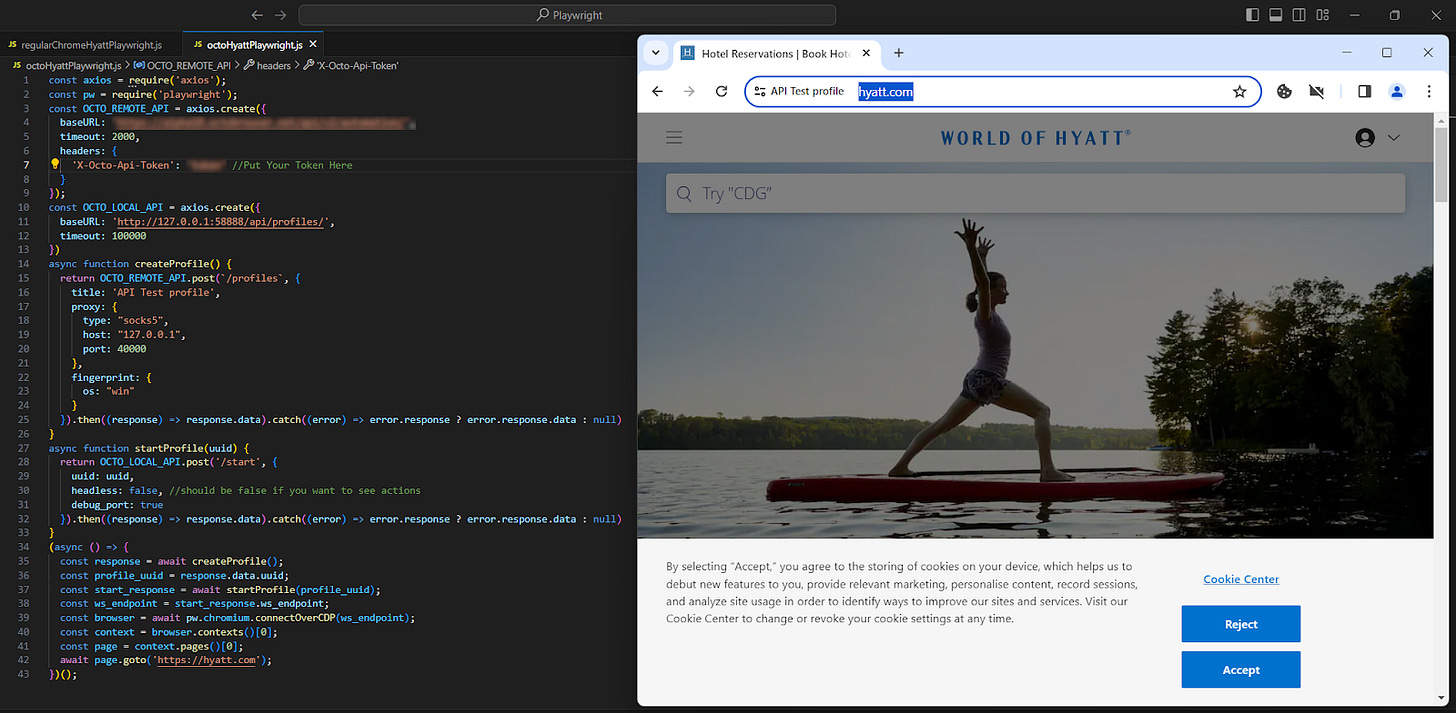

Here is an example of Octo Browser unblocking the hyatt.com site. This task is challenging, as the site is protected by the Kasada anti-bot system, which detects automation tools like Playwright or Puppeteer. Attempting to scrape data from this site with regular Chrome will result in a block.

Currently, Octo is the only well-known anti-detect browser capable of bypassing this protection on hyatt.com. The site is inaccessible via regular Chrome and Playwright:

Here is the result with Octo:

Will a Multi-Accounting Browser Help Reduce Scraping Costs?

Multi-accounting browsers can both increase and decrease scraping costs, depending on resources and conditions.

Costs go down because there are fewer blocks to deal with and because manual tasks get automated. To support this, multi-accounting browsers include a profile manager and automatic synchronization of profile data.

An increase in costs is mainly caused by purchasing a license for the required number of profiles.

All other things being equal, using a multi-accounting browser saves resources and reduces scraping costs in the long run.

What Else Do I Need for Quality Web Scraping?

High-quality proxies play a very important role when working with a multi-accounting browser. Choose providers with a good reputation, and among those, the best price. Proxies should fit your scraping strategy (for example, the geolocation you need) and have low spam/abuse/fraud scores. Proxy speed also matters: residential proxies, for example, may have high latency, which slows scraping down.
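These criteria can be applied as a simple filter over a proxy pool. The sketch below is illustrative only: the `Proxy` fields, the 0 to 100 fraud score scale, and the thresholds are assumptions, and in practice the scores and latency would come from your own measurements or an IP reputation service.

```python
from dataclasses import dataclass


@dataclass
class Proxy:
    url: str
    country: str
    fraud_score: int   # assumed scale: 0 (clean) to 100 (abusive)
    latency_ms: float  # measured round-trip latency


def pick_proxies(pool, country, max_fraud_score=30, max_latency_ms=800):
    """Keep proxies in the target country with acceptable score and latency,
    fastest first."""
    suitable = [
        p for p in pool
        if p.country == country
        and p.fraud_score <= max_fraud_score
        and p.latency_ms <= max_latency_ms
    ]
    return sorted(suitable, key=lambda p: p.latency_ms)


# Placeholder pool with made-up values.
pool = [
    Proxy("http://proxy1.example:8000", "US", fraud_score=10, latency_ms=450),
    Proxy("http://proxy2.example:8000", "US", fraud_score=75, latency_ms=120),
    Proxy("http://proxy3.example:8000", "DE", fraud_score=5, latency_ms=90),
    Proxy("http://proxy4.example:8000", "US", fraud_score=20, latency_ms=300),
]

for proxy in pick_proxies(pool, "US"):
    print(proxy.url, proxy.latency_ms)
```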

Don't forget about simulating natural human behavior: clicks, scrolling, mouse movements, initial cursor coordinates, and timings. Unnatural behavior raises suspicion and can land you on a greylist.
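One simple way to approximate this is to randomize pauses and to move the cursor along a slightly noisy curve rather than a straight line. The helpers below are a minimal sketch with arbitrary default values; they are not guaranteed to satisfy any particular anti-bot system.

```python
import random


def human_delay(mean=1.2, spread=0.4, minimum=0.2, rng=random):
    """Return a randomized pause in seconds, never shorter than `minimum`."""
    return max(minimum, rng.gauss(mean, spread))


def mouse_path(start, end, steps=25, jitter=3.0, rng=random):
    """Return points along a quadratic Bezier curve from `start` to `end`.

    A random control point bends the curve, and small jitter is added to the
    intermediate points so the movement is not perfectly smooth.
    """
    (x0, y0), (x2, y2) = start, end
    cx = (x0 + x2) / 2 + rng.uniform(-100, 100)
    cy = (y0 + y2) / 2 + rng.uniform(-100, 100)
    points = []
    for i in range(steps + 1):
        t = i / steps
        x = (1 - t) ** 2 * x0 + 2 * (1 - t) * t * cx + t ** 2 * x2
        y = (1 - t) ** 2 * y0 + 2 * (1 - t) * t * cy + t ** 2 * y2
        if 0 < i < steps:  # keep the exact start and end coordinates
            x += rng.uniform(-jitter, jitter)
            y += rng.uniform(-jitter, jitter)
        points.append((x, y))
    return points


path = mouse_path((0, 0), (300, 200))
print(len(path), path[0], path[-1])
print(round(human_delay(), 2))
```

In a Playwright script, for instance, each point could be passed to `page.mouse.move(x, y)` with a short pause between steps.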

In addition to a multi-accounting anti-detect browser, high-quality proxies, and a well-thought-out script with human behavior simulation, you may also need an automatic CAPTCHA solver. OSS solutions, manual CAPTCHA solving services like 2captcha and anti-captcha, and automatic solvers like Capmonster are all suitable for this purpose.

Conclusions

To engage in web scraping and bypass anti-bot systems, it's necessary to simulate user behavior, use clean IP addresses, and spoof your digital fingerprint. You can use anti-detection multi-accounting browsers for fingerprint spoofing. The most advanced of them spoof fingerprints at the browser kernel level (and not at the JavaScript level) and also use real user fingerprints for spoofing.