The Lab #40: start a web data monetization project with Data Boutique

Buying and selling high-quality web data has never been so easy.

In this episode of The Lab, the premium article series from The Web Scraping Club, we’re tying together some concepts we’ve covered in past articles. In particular, we’ll see how we can monetize our web scraping skills by selling datasets on databoutique.com (disclaimer: I’m one of the co-founders of the website).

We’ll take a closer look at how Data Boutique works and how web scraping professionals can use the platform to generate a new revenue stream by selling datasets. Last but not least, we’ll add a dataset with product prices from Canada Goose, a website that’s hard to scrape since it’s protected by Kasada. For this, we’ll use the Kameleo anti-detect browser.

What is Data Boutique and how does it work?

Data Boutique is a marketplace for legally collected web-scraped data. Buyers can find fresh, ready-to-use datasets that can be bought in a few clicks, at a fraction of the cost of a web scraping project.

While the idea of a data marketplace is not new and there are already several players on the market (AWS Data Exchange, Snowflake Data Marketplace, and so on), they’re generic and host all kinds of data, from transactions to weather. In most cases, even if you find web-scraped data there, you need to contact the vendor, ask for the price of the dataset, and start a negotiation.

How cool would it be to have a marketplace where you could buy a dataset in fewer than three clicks and get it on your device immediately? Well, that’s Data Boutique.

We focused on the portion of web data that is 100% legal to collect (no personal information, no copyrighted material, no data behind a login), managing its storage, checking its quality, enabling on-platform payment, and delivering it directly to the buyer.

But the most important difference from other marketplaces is that the platform’s interests are aligned with those of sellers and buyers. Data Boutique makes money when data is bought on the platform, by taking a transaction fee. We want buyers to be happy when they find affordable, high-quality web-scraped data, so they’ll buy more. We want sellers to make money by selling it. This is a radical shift from traditional freelancing gigs, especially in the pricing model, and it can be scary to think about selling datasets for 10 or 20 euros, but this price level is one of the key factors for wider adoption of web data. It’s what happened in many industries, as Walmart and Ikea showed: sell for less to sell to more people, expand the potential user base, and thanks to larger volumes, make more money overall.

Lowering the final price for the customer while keeping high quality standards means:

- eliminating your first competitor: potential buyers who try to scrape the data themselves.

- enabling more potential buyers to use your data. How many times have you seen a proposal rejected because the price was too high?

- encouraging recurring purchases of your dataset. Since Data Boutique uses pay-per-download pricing, buyers can subscribe to a monthly, weekly, or even daily refresh of your dataset, as long as the single-download price is low enough.

The more data is sold, the better it is for sellers and for Data Boutique, since the platform takes a fee on every purchase. So our mission is to bring more buyers to the platform and make sure they want to buy more by maintaining high quality standards for the data provided.
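To put rough numbers on the volume argument, here’s a back-of-the-envelope sketch comparing a traditional one-off gig with a cheap dataset bought on a monthly refresh. The fee rate, prices, and buyer counts are hypothetical placeholders picked for illustration, not Data Boutique’s actual terms.

```python
def yearly_seller_revenue(price_per_download: float,
                          downloads_per_year: int,
                          buyers: int,
                          platform_fee_rate: float) -> float:
    """Estimate a seller's yearly net revenue for a single dataset.

    platform_fee_rate is the fraction of each sale kept by the marketplace.
    It's a hypothetical input here, not a published Data Boutique figure.
    """
    if not 0 <= platform_fee_rate < 1:
        raise ValueError("platform_fee_rate must be in [0, 1)")
    gross = price_per_download * downloads_per_year * buyers
    return gross * (1 - platform_fee_rate)


# Hypothetical scenario: a 500-euro one-off gig with no platform fee vs.
# a 10-euro dataset refreshed monthly for ten buyers with an assumed 20% fee.
one_off = yearly_seller_revenue(500, downloads_per_year=1, buyers=1, platform_fee_rate=0.0)
recurring = yearly_seller_revenue(10, downloads_per_year=12, buyers=10, platform_fee_rate=0.2)
print(f"one-off: {one_off:.2f} EUR, recurring: {recurring:.2f} EUR")
```

The point isn’t the exact figures but the shape of the formula: revenue grows with both refresh frequency and number of buyers, which is exactly what a low single-download price tries to unlock.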

Ensuring data quality

If you’ve been in the web scraping industry for some time, you know that data quality is one of the critical factors that determine whether a project succeeds.

On the platform, there are some built-in data quality checks on fixed columns, depending on the data schema used for the dataset. But of course, this isn’t enough: that’s why we also have a Peer Review quality program. Sellers are occasionally asked to count how many items of a given brand or category are available on a randomly chosen website that they don’t sell themselves. In this way, every dataset available for sale is not only reviewed by its seller but also cross-checked by others.
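As an illustration of the idea behind the peer review, here’s a minimal sketch of a count cross-check: compare the item counts per brand or category in a seller’s dataset with the counts reported by a peer, and flag the ones that differ by more than a tolerance. The function name, the 5% tolerance, and the category names are assumptions made for the example, not the platform’s actual implementation.

```python
def cross_check_counts(seller_counts: dict[str, int],
                       peer_counts: dict[str, int],
                       tolerance: float = 0.05) -> dict[str, tuple[int, int]]:
    """Return {key: (seller_count, peer_count)} for every key counted by the
    peer whose seller count deviates by more than `tolerance`, relative to
    the peer count. Keys missing from the seller's data count as zero."""
    mismatches = {}
    for key, peer in peer_counts.items():
        seller = seller_counts.get(key, 0)
        if peer == 0:
            # A peer count of zero only matches a seller count of zero.
            off = seller != 0
        else:
            off = abs(seller - peer) / peer > tolerance
        if off:
            mismatches[key] = (seller, peer)
    return mismatches


# Hypothetical counts for a few categories of one website.
seller = {"Parkas": 120, "Vests": 40}
peer = {"Parkas": 118, "Vests": 55, "Hats": 10}
print(cross_check_counts(seller, peer))
```

Here "Parkas" is within tolerance, while "Vests" and the missing "Hats" category would be flagged for a closer look.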

This also meant making some difficult choices: data can only be uploaded following predefined schemas. This makes the data easier to check and makes datasets from different sellers that share the same schema stackable in the same data structure, but it also means you won’t find custom data models on the platform.
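To show why fixed schemas make automatic checks and stacking straightforward, here’s a minimal sketch in plain Python. The column list is a hypothetical subset made up for the example; the real E0001 schema is defined on Data Boutique and will differ.

```python
# Hypothetical columns: NOT the actual E0001 definition.
REQUIRED_COLUMNS = ["product_code", "brand", "product_name", "price", "currency", "country"]


def validate_rows(rows: list[dict[str, str]]) -> list[str]:
    """Return human-readable errors for rows that don't fit the schema."""
    errors = []
    for i, row in enumerate(rows):
        missing = [c for c in REQUIRED_COLUMNS if not row.get(c)]
        if missing:
            errors.append(f"row {i}: missing {', '.join(missing)}")
            continue
        try:
            if float(row["price"]) < 0:
                errors.append(f"row {i}: negative price")
        except ValueError:
            errors.append(f"row {i}: price is not a number")
    return errors


def stack(*datasets: list[dict[str, str]]) -> list[dict[str, str]]:
    """Concatenate already-validated datasets, keeping only schema columns."""
    return [{c: row[c] for c in REQUIRED_COLUMNS} for data in datasets for row in data]
```

Because every seller’s rows carry the same columns, stacking is just a concatenation; with custom data models, each pair of datasets would need its own mapping.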

We believe that by creating several standard data schemas, we’ll be able to cover most use cases in the future. But since we know that every website has its peculiarities and additional information that may not fit any of them, buyers can contact sellers directly from Data Boutique for custom work and agree on an off-platform task.

How to sell data on Data Boutique?

I hope I didn’t bore you with all this theory; now we’re finally getting our hands dirty with a cool project.

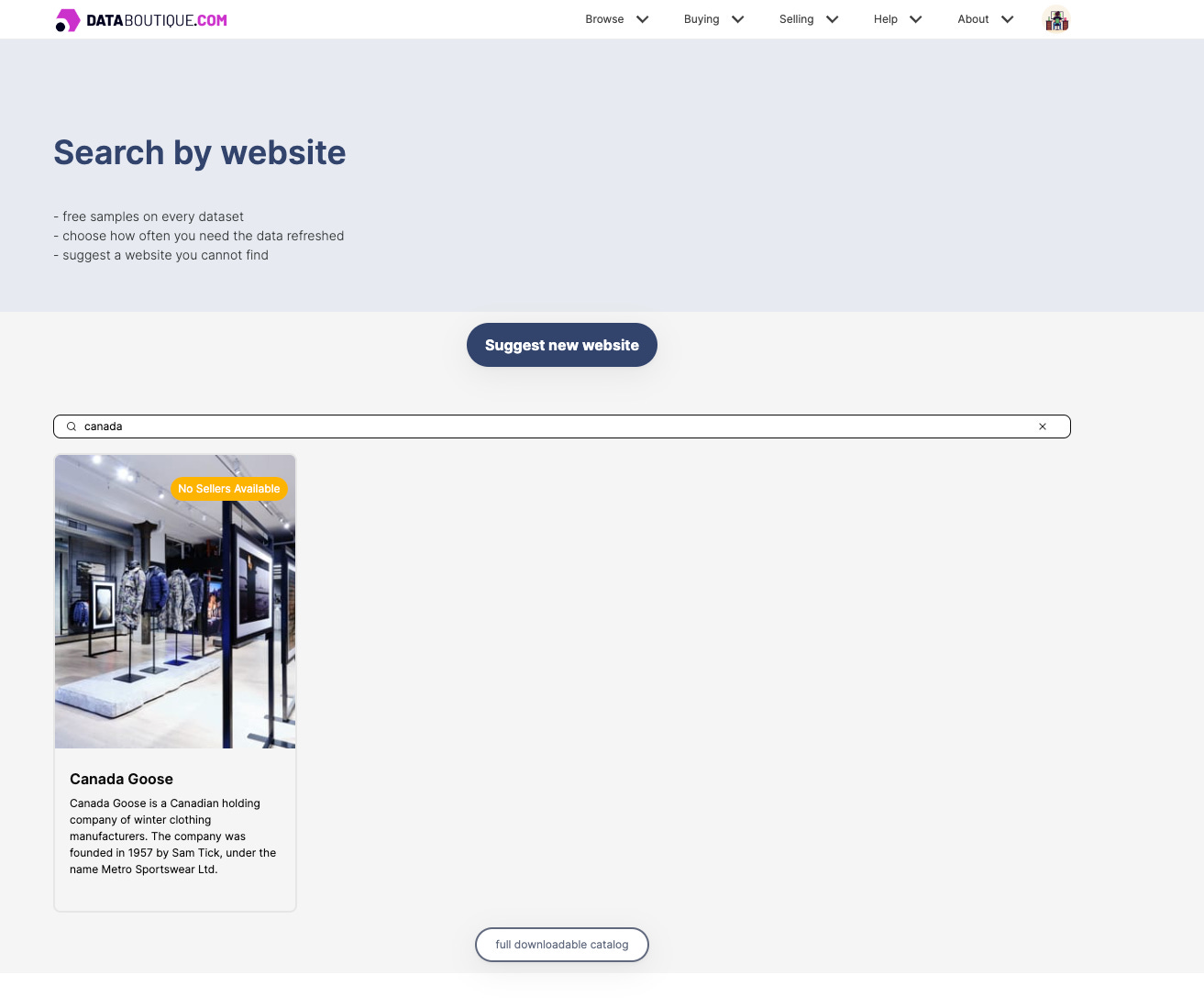

I noticed that one of the websites listed on Data Boutique, Canada Goose, didn’t have any sellers yet.

Since it’s a tough website protected by Kasada, it’s a good opportunity to try Kameleo, a tool we’ve already used against Cloudflare, and see whether it also works in this case.

After signing up and completing our profile, we can open the Canada Goose page, where we can choose among different countries to scrape. Let’s start by picking the USA dataset.

On the Canada Goose USA page, we see that no one is currently listed as selling this data, so we can apply to sell it by clicking the red button.

We can also apply to sell datasets already offered by other sellers if we think we can provide better quality or a lower price.

After completing the Due Diligence questionnaire on the website, with some required information about the legal aspects of the operation, the accessibility of the data, and the price we’re going to set per single download, we’ll be redirected to our contracts page.

By opening the contract, we’ll have all the information needed to proceed with our scraper.

We applied for a website with the E0001 schema, which stands for E-commerce 0001, a basic data structure for e-commerce products (you can find all the currently available schemas here).

The contract then lists the contract and seller IDs, the S3 delivery directory where we send the file once it’s scraped, the AWS credentials generated at signup, and some documentation about the delivery method and the data structure.

Basically, once we’ve scraped the data from the website, we just need to upload the results to an S3 bucket: if the file passes the validation checks, the contract goes live and the dataset can be bought.
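Here’s a minimal sketch of the delivery step, assuming the delivery directory from the contract is an `s3://bucket/prefix/` URI and that you upload with a boto3 S3 client. The helper names `parse_s3_uri` and `deliver_file` are hypothetical.

```python
from pathlib import Path
from urllib.parse import urlparse


def parse_s3_uri(uri: str) -> tuple[str, str]:
    """Split 's3://bucket/some/prefix/' into ('bucket', 'some/prefix')."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an S3 URI: {uri!r}")
    return parsed.netloc, parsed.path.strip("/")


def deliver_file(s3_client, delivery_uri: str, local_path: str) -> str:
    """Upload a local file into the contract's delivery directory.

    `s3_client` is expected to be a boto3 S3 client, whose
    upload_file(Filename, Bucket, Key) does the actual transfer.
    Returns the object key that was written.
    """
    bucket, prefix = parse_s3_uri(delivery_uri)
    name = Path(local_path).name
    key = f"{prefix}/{name}" if prefix else name
    s3_client.upload_file(local_path, bucket, key)
    return key
```

In practice, the client would be created with the credentials shown in the contract, for example `boto3.client("s3", aws_access_key_id=..., aws_secret_access_key=...)`, and passed to `deliver_file` along with the delivery directory and the path of the scraped file. Passing the client in, rather than creating it inside the function, keeps the helper testable without touching AWS.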

Bypassing Kasada: the technical solution

Once we have all the information we need, we can finally start coding our scraper.

We already mentioned that Canada Goose is protected by Kasada, so we’ll need a headful browser to bypass it. Since I was pleasantly surprised by how easy Kameleo was to use last time, I decided to test it again, this time against Kasada.

In the GitHub repository, available to paying readers, you’ll find all the code for this example.

If you’re a paying reader but don’t have access, please write to me at pier@thewebscraping.club with your GitHub username.

The first step is to install and launch the Kameleo client on a Windows machine. I set it up on a t3.medium instance on AWS, and it works like a charm.
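Since the scraper talks to Kameleo over the network (port 5050 by default), it’s worth checking that the port is reachable from wherever the scraper runs before debugging anything else; on EC2 this also depends on the instance’s security group allowing inbound traffic on that port. A minimal sketch using only the standard library; `kameleo_reachable` is a hypothetical helper, not part of Kameleo’s client.

```python
import socket


def kameleo_reachable(host: str, port: int = 5050, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    This only proves that something is listening on the port (hopefully
    Kameleo.CLI); it doesn't check that the service is actually Kameleo.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures.
        return False
```

For example, `kameleo_reachable("YOURCLIENTIP")` returning False usually points to a firewall or security group issue rather than a problem in the scraper code.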

Next, we need to programmatically create a new profile and save its ID to use later in the scraper. To do so, the repository contains a script called kameleo_API.py that prints the ID of the newly created profile at the end of its execution.

```python
from kameleo.local_api_client import KameleoLocalApiClient
from kameleo.local_api_client.builder_for_create_profile import BuilderForCreateProfile

# This is the port Kameleo.CLI is listening on. Default value is 5050,
# but it can be overridden in the appsettings.json file
kameleo_port = 5050

# Replace YOURCLIENTIP with the address of the machine running Kameleo
client = KameleoLocalApiClient(
    endpoint=f'http://YOURCLIENTIP:{kameleo_port}',
    retry_total=0
)

# Search Chrome Base Profiles
base_profiles = client.search_base_profiles(
    device_type='desktop',
    browser_product='chrome',
    os_family='windows'
)

# Create a new profile with recommended settings
# Choose one of the Base Profiles
create_profile_request = BuilderForCreateProfile \
    .for_base_profile(base_profiles[0].id) \
    .set_recommended_defaults() \
    .build()
profile = client.create_profile(body=create_profile_request)

# Start the browser profile
client.start_profile(profile.id)

# Print the profile ID so it can be reused by the scraper
print(profile.id)
```

In this case, we’re simulating a Windows desktop machine with the Chrome browser installed.
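Since kameleo_API.py only prints the profile ID, one simple way to hand it over to the scraper is to write it to a small JSON file and read it back in canadagoose.py. This is a minimal sketch under that assumption; the file name `kameleo_profile.json` and both helpers are hypothetical and not part of the repository.

```python
import json
from pathlib import Path

# Hypothetical file name: the original script just prints the ID.
PROFILE_FILE = Path("kameleo_profile.json")


def save_profile_id(profile_id: str, path: Path = PROFILE_FILE) -> None:
    """Store the Kameleo profile ID so the scraper can reuse it later."""
    path.write_text(json.dumps({"profile_id": profile_id}), encoding="utf-8")


def load_profile_id(path: Path = PROFILE_FILE) -> str:
    """Read back the profile ID written by save_profile_id."""
    return json.loads(path.read_text(encoding="utf-8"))["profile_id"]
```

At the end of kameleo_API.py you could call `save_profile_id(str(profile.id))`, and at the start of the scraper `profile_id = load_profile_id()`.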

With the profile ID in hand, we can move on to building the scraper itself, the script canadagoose.py.