The Kallax Index - Scraping IKEA websites

Common issues when tracking a product's price across different countries

The Kallax Index

If you're at least a bit interested in economics, you've surely heard about the Big Mac Index by The Economist.

Invented in 1986, it's a simplified way to judge whether currencies trade at a "fair" exchange rate, based on the theory of purchasing-power parity: in the long run, a Big Mac should cost the same everywhere.

For example, if a Big Mac costs 1 dollar in the US and 4 yuan in China, the implied exchange rate is 1:4. If the market rate is 1:6 instead, the yuan is undervalued.
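The arithmetic behind that example can be sketched in a few lines of Python. This is only an illustration of the purchasing-power-parity reasoning, not The Economist's actual methodology; the function names are made up for this post.

```python
def implied_rate(price_local: float, price_base: float) -> float:
    """Exchange rate (local units per base unit) at which the item costs the same."""
    return price_local / price_base


def valuation_gap(implied: float, market: float) -> float:
    """Relative gap between implied and market rate.

    Negative means the local currency is undervalued against the base one.
    """
    return implied / market - 1.0


# Numbers from the example: 1 dollar in the US, 4 yuan in China, market rate 1:6.
implied = implied_rate(price_local=4.0, price_base=1.0)
gap = valuation_gap(implied, market=6.0)
print(f"implied rate: {implied:.2f}, valuation gap: {gap:+.1%}")
```

With these numbers the gap comes out at roughly -33%, meaning the yuan buys about a third less on the currency market than the burger prices suggest it should.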

But what's true for a Big Mac isn't true for most of the retail world. Prices for the same item vary considerably from country to country, depending on where it is produced, the logistics costs to reach the store and then the final customer, taxation, import/export duties, and exchange rates.

Let's take as an example another global brand, IKEA, with stores in 61 countries. While its products are designed mainly in Sweden, they are manufactured mainly in China, South Asia, and Eastern Europe, and then have to be shipped to retail locations around the globe, which shapes the final cost.

To measure how big this impact is, and do some web scraping at the same time, let's take one of IKEA's bestsellers, available in every country: the Kallax bookshelf.

Mapping the sites

Our first task (and the most boring one) is to map all the country versions of the IKEA website. Unfortunately, there isn't a page listing all the country localizations, so we need to check manually how each one works and whether the Kallax is in its catalog.

And then the doors of the multi-country retail hell opened.

Different product codes

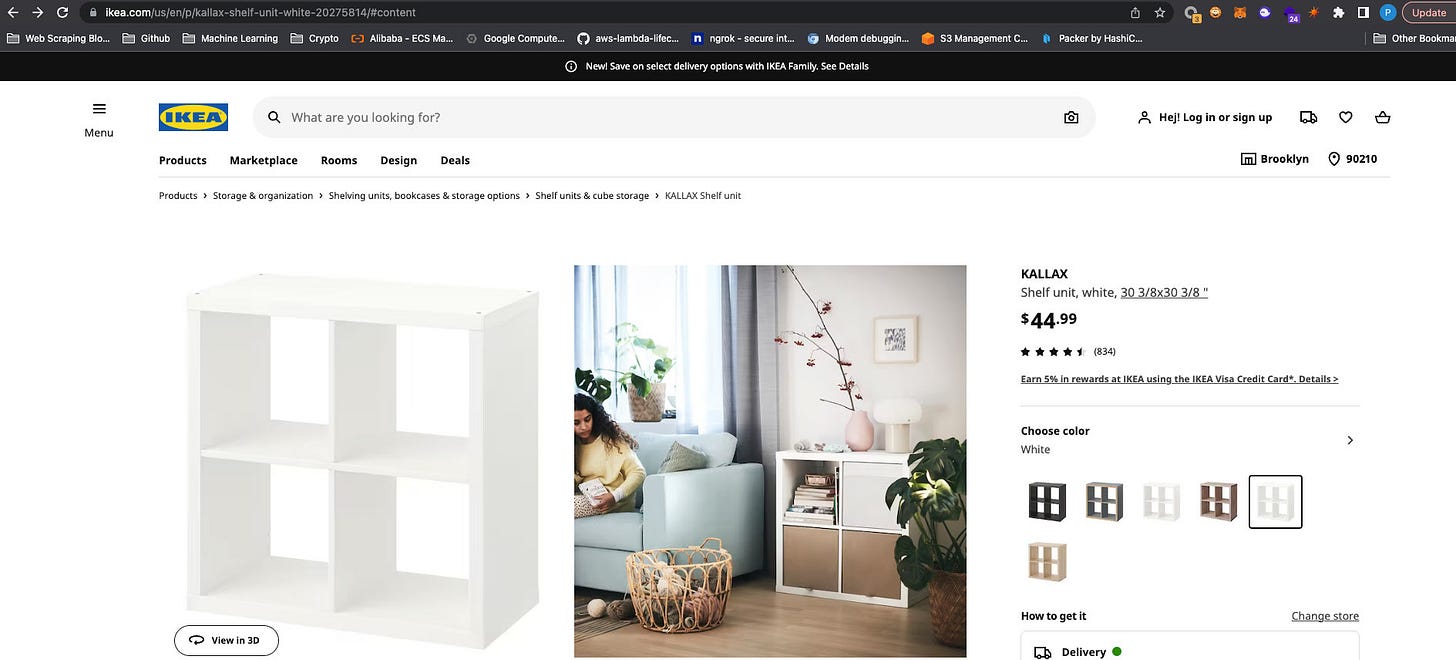

To be sure we compare apples with apples, the Kallax model we're looking for on each website is the white one, 77x77 cm, which has the product code 20275814 (or 202.758.14 in the dotted format).

Having chosen the product and found its code, we should be able to find it easily in every country.

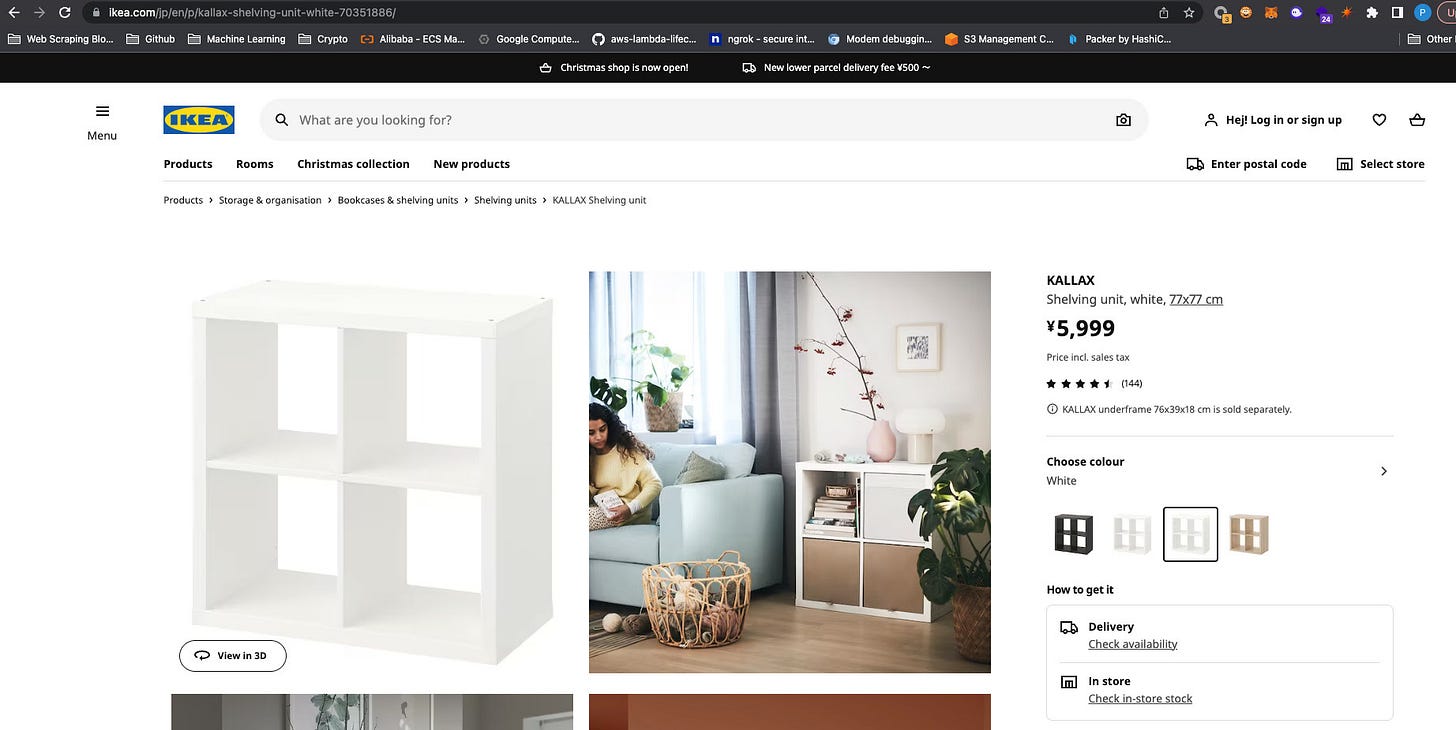

Well, almost every country: I soon discovered that in Japan and other Eastern countries there's no product with the code 20275814, but the exact same Kallax variant exists under the code 70351886.

Luckily, there are only these two codes and they are mutually exclusive: there's no product 70351886 in the US and no product 20275814 in Japan. So, without worrying about picking the wrong item, we can programmatically look for both codes in every country to be sure we're not missing anything.

China has a third code, 90471717, which we'll use only for its website.
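As a rough sketch, the code-selection rule described above could look like this in Python. The three product codes come from this post; the `"cn"` country key and the helper names are assumptions made for the example.

```python
from typing import List

# Codes taken from the post; the two default ones never coexist on one site.
DEFAULT_CODES = ["20275814", "70351886"]
CHINA_CODES = ["90471717"]


def dotted(code: str) -> str:
    """Format an 8-digit code the way the website prints it, e.g. 202.758.14."""
    if len(code) != 8 or not code.isdigit():
        raise ValueError(f"expected 8 digits, got {code!r}")
    return f"{code[:3]}.{code[3:6]}.{code[6:]}"


def codes_for(country: str) -> List[str]:
    """Return the product codes to try for a country ('cn' is an assumed key)."""
    if country.lower() == "cn":
        return list(CHINA_CODES)
    return list(DEFAULT_CODES)


print(dotted("20275814"))
print(codes_for("us"))
```

Trying both default codes everywhere costs one extra request per country, which is cheap compared with keeping a per-country mapping up to date.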

Different website versions

Once I understood which product codes to ask for, I looked at how the website works in each country, to get the price information we need as efficiently as possible.

For most countries, a simple API call is enough. Using the internal product search API, found through the Network tab of Chrome's developer tools, we can easily get the product price, its currency, and some other data we don't actually need.

But for 14 countries out of 61 this is not the case: their websites are built on a different tech stack, and the same API isn't available.

Bulgaria, Cyprus, and Greece use one variant of the website, without an API.

Hong Kong, Taiwan, and Indonesia use a second variant, which sends a POST request to an Algolia endpoint to fetch data.

Estonia, Latvia, Lithuania, and Iceland use a third variant.

Puerto Rico and Santo Domingo use a fourth variant.

Turkey and China each have their own website version.
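One way to keep track of this mapping in code is a small lookup table. The country groupings are the ones listed above; the variant labels and the `"search_api"` default are names invented for this sketch.

```python
# Groupings from the list above; the dictionary keys are made-up labels.
SITE_VARIANTS = {
    "no_api": ["Bulgaria", "Cyprus", "Greece"],
    "algolia": ["Hong Kong", "Taiwan", "Indonesia"],
    "variant_3": ["Estonia", "Latvia", "Lithuania", "Iceland"],
    "variant_4": ["Puerto Rico", "Santo Domingo"],
    "turkey": ["Turkey"],
    "china": ["China"],
}

# Reverse index: country name -> variant label.
VARIANT_BY_COUNTRY = {
    country: variant
    for variant, countries in SITE_VARIANTS.items()
    for country in countries
}


def variant_of(country: str, default: str = "search_api") -> str:
    """Return the site variant for a country, falling back to the common API."""
    return VARIANT_BY_COUNTRY.get(country, default)


print(len(VARIANT_BY_COUNTRY), "countries need special handling")
print(variant_of("Taiwan"), variant_of("Sweden"))
```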

In my experience scraping e-commerce websites, this situation is quite common and has several causes. For Greater China, Japan, and occasionally South Korea, retailers usually hand e-commerce development to local teams, which understand the local UX habits, culture, and society better than a Western team would.

There are also countries where the e-commerce site launched earlier and is lagging behind on an older software version. Or, on the contrary, the most recent version runs on a new stack that is tested in only a few countries before being rolled out to the others.

In our case, since we need only one product per website rather than all the data from every country, we'll build the scraper for the most common version and fill the gaps for the other countries manually.

Creating the scraper

The scraper itself is quite basic: we need to call the search API endpoint for 46 countries.

The endpoint is the following:

https://sik.search.blue.cdtapps.com/us/en/search-result-page?max-num-filters=8&q=20275814

It returns a JSON document with the product details and price. To repeat this request for the different countries, we need the two-letter country code and the related locale.
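A minimal sketch of how that URL can be assembled per country, using only the standard library. It assumes the other countries follow the same path pattern as the US URL shown above; the function name is made up.

```python
from urllib.parse import urlencode

BASE_URL = "https://sik.search.blue.cdtapps.com"


def search_url(country: str, language: str, code: str, max_filters: int = 8) -> str:
    """Build the search URL for one country/language pair and one product code.

    Assumes every country uses the same path layout as the US example.
    """
    query = urlencode({"max-num-filters": max_filters, "q": code})
    return f"{BASE_URL}/{country}/{language}/search-result-page?{query}"


print(search_url("us", "en", "20275814"))
```

Called with `us`, `en`, and `20275814`, it reproduces the URL above exactly.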

I'll then create an input file with these two values, one row per country. Inside the scraper, we'll query both product codes we're looking for and parse the JSON response.

I've developed the scraper using Scrapy, but since it's so basic it could probably be done with a few simple curl requests. You can find the code on our GitHub repository for free readers.
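Here is a hedged sketch of that flow without Scrapy, not the code from the repository. It assumes the input file is a two-column CSV (country code, language code), and because the response schema isn't described here, the parsing helper avoids guessing field names: it just walks the decoded JSON and returns the first object that holds the product code as a value. The `jp,ja` row and the fake response are invented for the demonstration.

```python
import csv
import io
from typing import Any, Iterator, List, Optional, Tuple

PRODUCT_CODES = ["20275814", "70351886"]


def load_countries(handle) -> List[Tuple[str, str]]:
    """Read (country, language) pairs from a CSV file, one row per country."""
    return [
        (row[0].strip(), row[1].strip())
        for row in csv.reader(handle)
        if len(row) >= 2
    ]


def plan_requests(countries) -> Iterator[Tuple[str, str, str]]:
    """Yield one (country, language, code) job per country and product code."""
    for country, language in countries:
        for code in PRODUCT_CODES:
            yield country, language, code


def find_object_with_value(node: Any, wanted: str) -> Optional[dict]:
    """Depth-first search for the first JSON object that has `wanted` as a value."""
    if isinstance(node, dict):
        if wanted in node.values():
            return node
        children = list(node.values())
    elif isinstance(node, list):
        children = node
    else:
        return None
    for child in children:
        found = find_object_with_value(child, wanted)
        if found is not None:
            return found
    return None


# Stand-in for the input file; the rows are illustrative.
countries = load_countries(io.StringIO("us,en\njp,ja\n"))
jobs = list(plan_requests(countries))

# Made-up response shape, only to exercise the helper.
fake_response = {"results": [{"items": [{"id": "20275814", "label": "KALLAX"}]}]}
print(jobs)
print(find_object_with_value(fake_response, "20275814"))
```

Once the real response has been inspected, the generic search can be swapped for direct field access, which is both faster and safer.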

Visualizing the outcome

I've copied the results into an open Google Spreadsheet and added the data from the missing countries.

We were lucky that the API response already contained the currency ISO code; otherwise, another data quality and standardization step would have been needed before comparing the results.

The Google Spreadsheet contains the formula for the currency conversion, but this is usually a data enrichment step done in the database after the data is scraped and loaded.

Thanks to these two elements, we can get some insights immediately, and one thing is absolutely stunning: the same item can cost anywhere from 25 to 130 USD around the globe.

Even allowing for the markup applied to local prices to cover logistics and taxation costs, such gaps are usually a symptom of something that needs adjusting. Generally speaking, a currency may have depreciated or appreciated too much without the local price being adjusted in time.
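Outside a spreadsheet, the same enrichment step could be sketched as below. The exchange rates and prices are placeholders invented for the example, not scraped values or real quotes; in practice they would come from a rates table loaded alongside the scraped data.

```python
from decimal import Decimal

# Placeholder rates (USD per one unit of currency), NOT real market quotes.
USD_PER_UNIT = {
    "USD": Decimal("1"),
    "EUR": Decimal("1.10"),
    "JPY": Decimal("0.0070"),
}


def to_usd(amount: str, currency: str) -> Decimal:
    """Convert a local price to USD using the ISO currency code as the key."""
    try:
        rate = USD_PER_UNIT[currency.upper()]
    except KeyError:
        raise ValueError(f"no exchange rate for {currency!r}") from None
    return (Decimal(amount) * rate).quantize(Decimal("0.01"))


# Illustrative prices only.
for amount, currency in [("50", "EUR"), ("5000", "JPY")]:
    print(currency, to_usd(amount, currency))
```

Keying the table on the ISO code is what makes the currency field in the API response so convenient: no symbol parsing or guessing is needed before the conversion.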

Final remarks

In this post, we've seen what it means to scrape prices from a multi-country perspective.

Technically speaking, it introduces a new order of difficulties:

Several variants for the same website

Different standards between the different websites (like the product code)

Data quality and standardization needed to compare apples with apples

From the business side, we have seen the price differences for just one product on one website across 60 countries. But in my work at Re Analytics I've seen how important it is for our customers to look at this pricing data for hundreds of websites and millions of products. With the bigger picture, managers can make decisions based on much more data and rely less on gut feeling.

If you’re into pricing topics and always looking for new data, you may be interested in our new project, Databoutique.com. It’s free and still in private beta, but feel free to sign up.
