THE LAB #19: How to mask your device fingerprint

Beating Cloudflare’s fingerprinting is possible

We’ve already seen in previous posts what a device fingerprint is and how it is created: from an IP address and the information derived from it, from the ciphers used by the HTTPS connection and, most importantly, from the information passed by your browser.
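As a rough illustration of the idea, the three families of signals can be thought of as one record that gets collapsed into a single identifier. The sketch below is only that: the function name `device_fingerprint`, the field names and the sample values are made up for the example, and real anti-bot vendors use their own proprietary signals and scoring rather than a plain hash.

```python
import hashlib
import json


def device_fingerprint(ip_info: dict, tls_info: dict, browser_info: dict) -> str:
    """Collapse the three signal families into one stable identifier."""
    signals = {"ip": ip_info, "tls": tls_info, "browser": browser_info}
    # sort_keys gives a deterministic serialization, so identical signals
    # always hash to the same value.
    payload = json.dumps(signals, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


# Hypothetical values: the same script seen from two different environments.
tls = {"ciphers": ["TLS_AES_128_GCM_SHA256", "TLS_AES_256_GCM_SHA384"]}
laptop = device_fingerprint(
    {"network": "residential"}, tls,
    {"platform": "MacIntel", "cores": 8, "screen": "1920x1080"},
)
server = device_fingerprint(
    {"network": "datacenter"}, tls,
    {"platform": "Linux x86_64", "cores": 2, "screen": "1280x720"},
)
print(laptop == server)  # False
```

Even with identical TLS settings, a change in the network type or in the browser environment is enough to produce a different identifier.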

When we tackle modern anti-bot software, we mostly need a headful browser. This makes our scraper look more human, but it also exposes a great variety of data about the environment where the scraper is running, and sometimes this approach is counterproductive.

Device fingerprinting at work

This can happen, for example, when Cloudflare is configured at its highest level of paranoia.

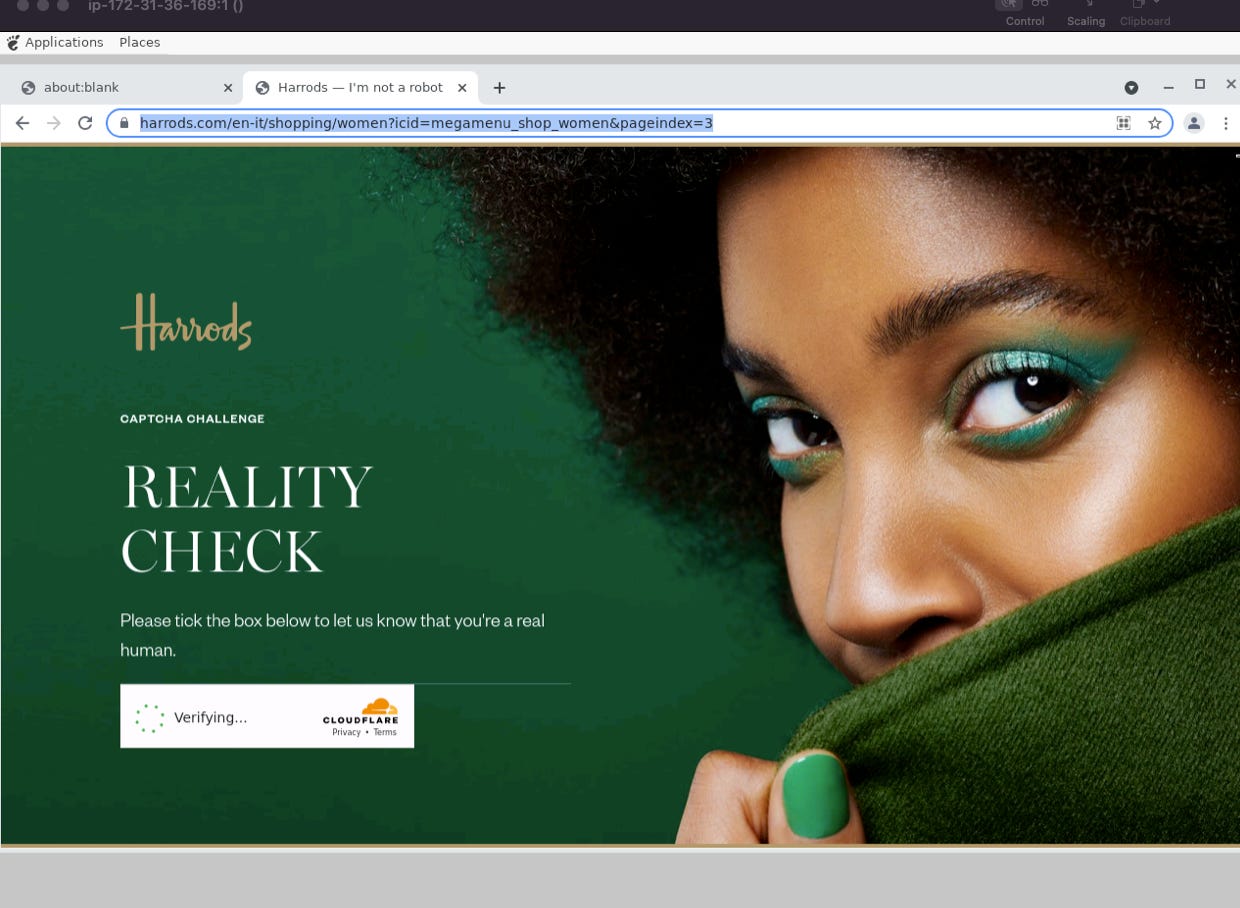

A well-known example of this situation is the harrods.com website. In the GitHub repository of The Lab, reserved for our paying readers, you will find a file called tests_pl_chrome.py.

It’s basically a Playwright browser session with Chrome and some flags to make it more human-like. Within this browser session, we open several pages of Harrods’ website.

If we run this script from a local machine, it bypasses the Cloudflare challenge and we can browse the website. If the same script runs from a virtual machine in a datacenter, it won’t pass the test.

And it’s not a problem with the IP: even if we use Playwright with a residential proxy, as in the following snippet, the result is the same.

    from playwright.sync_api import sync_playwright

    # CHROMIUM_ARGS is the list of Chrome flags passed to the browser (defined elsewhere in the script)
    with sync_playwright() as p:
        browser = p.chromium.launch_persistent_context(
            user_data_dir='./userdata/', channel="chrome", headless=False, slow_mo=200,
            args=CHROMIUM_ARGS, ignore_default_args=["--enable-automation"],
            proxy={
                'server': 'proxyserver',
                'username': 'userl',
                'password': 'pwd',
            },
        )
        page = browser.new_page()
        page.goto('https://www.harrods.com/en-it/', timeout=0)

This means that the browser leaks some pieces of information that are seen as red flags by Cloudflare. Fundamentally, we’re getting blocked by the fingerprint of our server.
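To see which pieces of information differ between the two environments, one option is to dump a few browser properties on the local machine and on the server and compare them. The sketch below assumes a Playwright `page` object; the helper names (`take_snapshot`, `diff_snapshots`), the choice of properties and the sample values are illustrative, and nothing here tells us which of them Cloudflare actually scores.

```python
# JavaScript evaluated inside the page; these are standard Web APIs.
SNAPSHOT_JS = """() => ({
    webdriver: navigator.webdriver,
    platform: navigator.platform,
    hardwareConcurrency: navigator.hardwareConcurrency,
    languages: navigator.languages,
    screen: [screen.width, screen.height, screen.colorDepth],
    timezone: Intl.DateTimeFormat().resolvedOptions().timeZone,
})"""


def take_snapshot(page) -> dict:
    """Run SNAPSHOT_JS in a Playwright page and return the values as a dict."""
    return page.evaluate(SNAPSHOT_JS)


def diff_snapshots(local: dict, server: dict) -> dict:
    """Return {property: (local_value, server_value)} for every mismatch."""
    keys = sorted(set(local) | set(server))
    return {
        key: (local.get(key), server.get(key))
        for key in keys
        if local.get(key) != server.get(key)
    }


# Hypothetical snapshots, as take_snapshot() might return them.
local = {"webdriver": False, "hardwareConcurrency": 8,
         "screen": [1920, 1080, 24], "timezone": "Europe/Rome"}
server = {"webdriver": False, "hardwareConcurrency": 2,
          "screen": [1280, 720, 24], "timezone": "UTC"}

differences = diff_snapshots(local, server)
for name, (local_value, server_value) in differences.items():
    print(f"{name}: local={local_value!r} server={server_value!r}")
```

Saving the snapshot from each machine as JSON and diffing the two files gives a short list of candidates to investigate, rather than guessing.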

Trying to hide from fingerprinting

Since fingerprinting is a well-known issue, also because of its privacy implications, several browsers with stronger privacy features have appeared on the market in recent years. Chrome is known for leaking a lot of data, while Firefox and Safari have started to differentiate themselves by putting more focus on the user’s privacy.

Let’s first try using Firefox instead of Chrome. This is also my general advice when using Playwright: since it leaks less information, it usually performs better against anti-bots.

But that is not the case here. Even our tests_pl_firefox.py script fails if we run it from a virtual machine on a server.

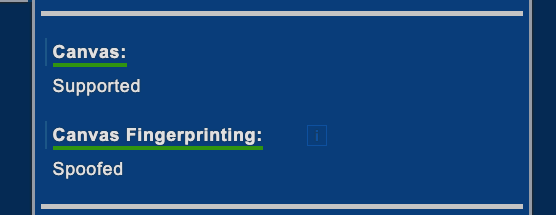

Let’s try another browser, Brave. I actually love it and use it daily for browsing: it has a built-in ad blocker and spoofs some types of fingerprints, as you can see from the results of the deviceinfo.me test.

Unfortunately, the tests_pl_brave.py script fails too. I’ve also tried to launch an incognito window with Playwright, but I noticed a strange behavior.

    from playwright.sync_api import sync_playwright

    CHROMIUM_ARGS = [
        '--no-sandbox',
        '--disable-setuid-sandbox',
        '--no-first-run',
        '--disable-blink-features=AutomationControlled',
        '--incognito',
    ]

    with sync_playwright() as p:
        # Point Playwright at the Brave binary (macOS path)
        browser = p.chromium.launch(
            executable_path='/Applications/Brave Browser.app/Contents/MacOS/Brave Browser',
            headless=False, slow_mo=200,
            args=CHROMIUM_ARGS, ignore_default_args=["--enable-automation"],
            proxy={
                'server': 'server',
                'username': 'user',
                'password': 'pwd',
            },
        )
        page = browser.new_page()
        page.goto('https://www.harrods.com/en-it/', timeout=0)

Using this script, a first window is opened in incognito mode, but the new_page() command opens a new window that is not in the same context, which makes the --incognito flag useless. I’ve seen there’s an open issue on the Brave GitHub, but has anyone found a solution or workaround for it? Please let me know in the comments below.
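This is not a confirmed fix for the Brave behavior, but one thing that may be worth trying is dropping the flag and creating the browser context explicitly. In Playwright’s model each context created with `new_context()` is an isolated, non-persistent session, and `browser.new_page()` creates its page in a new context of its own. The helper name `open_in_fresh_context` below is made up for illustration, and whether Brave applies the same protections inside such a context as in a real private window is an untested assumption.

```python
def open_in_fresh_context(browser, url: str):
    """Open url in an explicitly created, isolated browser context."""
    # new_context() returns a non-persistent context with its own cookies
    # and storage, independent of any window opened at launch.
    context = browser.new_context()
    page = context.new_page()
    # timeout=0 disables the navigation timeout, as in the scripts above.
    page.goto(url, timeout=0)
    return context, page


# Usage, with the `browser` object returned by p.chromium.launch(...):
#     context, page = open_in_fresh_context(browser, 'https://www.harrods.com/en-it/')
#     ...
#     context.close()
```

Keeping a reference to the context also makes it possible to open further pages in the same session with `context.new_page()`.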

A working solution, finally

There’s another class of browsers, called anti-detect browsers, which I discovered a few months ago. They are specifically built to spoof the fingerprints they send.