THE LAB #88: Fuel Your Content Machine with LLM Scraping

Fill your Obsidian Vault with daily inspiration from the web

Finding new ideas for your content machine – whether it’s a corporate blog or a newsletter – can be hard.

New content appears on the internet every day, but manually checking dozens of websites for inspiration is tedious and time-consuming.

One way to discover what people are reading right now is to monitor a curated set of interesting sources and glean insights from their latest posts. I already do this by hand now and then, but for this article, I decided to automate the process.

In the past few days, I’ve started taking notes in Obsidian again. I used it some years ago, but I found it too complicated to connect the dots, so I stopped. Thanks to some YouTube videos I’ve seen recently, I gave it another try, and the current version makes note-taking much smoother, so I’m rebuilding my second brain on it.

In this article, I’m building an engine that creates a daily “web scraping press review”, so I can stay up to date with the latest trends and news in the industry.

Before proceeding, let me thank NetNut, the platinum partner of the month. They have prepared a juicy offer for you: up to 1 TB of web unblocker for free.

The project is quite simple: I set some sources, extract the URLs of the latest articles, and then, using LLM Scraping tools like ScrapeGraphAI and Firecrawl, I ask for a summary of each article. For each article, I’ll create a small note in Obsidian and, if it seems promising, I’ll read the full piece and add my reflections on it to my second brain.

But first of all, what is Obsidian, and what do we mean by a “second brain”?

Building a "Second Brain" with Obsidian

A “second brain” is essentially a personal knowledge management system – an external, organized repository of knowledge that supplements your own memory. The term (popularized by productivity expert Tiago Forte) refers to using technology to capture and organize information so that it’s available for future use. The idea is that we’re constantly bombarded with information, far too much to remember, and a second brain helps “translate that knowledge into something useful” by storing and structuring it outside our head.

In practical terms, your second brain could be a set of digital notes, documents, or any system where you offload ideas and facts for later retrieval. This concept is especially powerful for anyone in content creation or technology, where you need to absorb and recall a large number of details from various sources over time.

Obsidian is a popular app for building such a second brain. It’s a note-taking tool that works on local Markdown files, giving you full control over your notes. Obsidian bills itself as a “personal knowledge base” or “second brain” app, and for good reason.

Notes in Obsidian are plain text Markdown, which makes them easy to create, organize, and link together. In other words, Obsidian serves as a long-term memory bank: you input ideas or summaries, and later you can search or navigate through them via internal links (its graph view visually shows connections between notes). It’s offline-first and extensible, meaning your notes are saved on your device (you own your data) and you can enhance functionality with community plugins. All these features make Obsidian an ideal place to accumulate insights from articles you’ve read or summarized.

The fact that Obsidian uses Markdown and YAML standards makes it easy for us to also create notes programmatically, as we’ll see later in the code section.

This episode is brought to you by our Gold Partners. Be sure to have a look at the Club Deals page to discover their generous offers available for the TWSC readers.

💰 - 50% off promo on residential proxies using the code RESI50

💰 - 50% Off Residential Proxies

🧞 - Scrapeless is a one-stop shop for your anti-bot bypassing needs.

Automating Daily Inspiration with LLM Scraping

The goal is to create a pipeline that, every day, fetches new articles from your favorite websites, summarizes them with AI, and drops those summaries into your Obsidian vault as notes. This way, your second brain gets a constant infusion of up-to-date knowledge and trend insights without you having to manually hunt them down. Here’s how the workflow can be set up.

Curate Your Sources

First, identify a list of blogs, news sites, or forums that consistently publish valuable content in your niche. These could be popular industry blogs, competitor websites, or content aggregators related to your field. The key is that you find their content useful or inspiring. (Many sites have RSS feeds or newsletters – those can help you detect new posts, but our AI scraper can also directly check the sites.)

In this example, I’m looking for posts about fingerprinting on the Black Hat World forum and for new articles on a web scraping blog called WebScraping.pro.

In the first case, I need to scrape the search results, keep only the newest posts, and store the URLs of those that were not fetched in previous runs.
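The "not fetched in previous runs" part can be handled with a small state file. Here is a minimal sketch, assuming the seen URLs are kept in a local JSON file; the function names and the file format are hypothetical and not taken from the repository scripts.

```python
import json
from pathlib import Path


def load_seen(path: Path) -> set[str]:
    """Return the URLs processed in earlier runs (empty set on the first run)."""
    if path.exists():
        return set(json.loads(path.read_text(encoding="utf-8")))
    return set()


def filter_new(urls: list[str], seen: set[str]) -> list[str]:
    """Keep only unseen URLs, preserving order and dropping duplicates."""
    fresh: list[str] = []
    for url in urls:
        if url not in seen and url not in fresh:
            fresh.append(url)
    return fresh


def save_seen(path: Path, seen: set[str]) -> None:
    """Persist the updated set so the next run can skip these URLs."""
    path.write_text(json.dumps(sorted(seen), indent=2), encoding="utf-8")
```

A daily run would call `load_seen`, pass the scraped URLs through `filter_new`, process the result, and then call `save_seen` with the union of old and new URLs.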

For the WebScraping.pro blog, instead, I can read the sitemap and extract the article URLs from there, again keeping only the URLs that have not been processed before.
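The sitemap approach could look roughly like the sketch below. It assumes a standard sitemaps.org XML file with `<loc>` and optional `<lastmod>` entries, and that `lastmod` values share one ISO 8601 format so they sort correctly as plain strings. The function names are hypothetical, and downloading the sitemap is left out.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemap files (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_sitemap(xml_text):
    """Return (url, lastmod) pairs; lastmod is '' when the entry has none."""
    root = ET.fromstring(xml_text)
    entries = []
    for node in root.findall("sm:url", SITEMAP_NS):
        loc = node.findtext("sm:loc", default="", namespaces=SITEMAP_NS).strip()
        lastmod = node.findtext("sm:lastmod", default="", namespaces=SITEMAP_NS).strip()
        if loc:
            entries.append((loc, lastmod))
    return entries


def new_urls(xml_text, seen):
    """Return unseen URLs, most recently modified first."""
    entries = [entry for entry in parse_sitemap(xml_text) if entry[0] not in seen]
    # Assumption: lastmod strings are comparable lexicographically.
    entries.sort(key=lambda entry: entry[1], reverse=True)
    return [url for url, _ in entries]
```

Entries without a `lastmod` end up at the bottom of the list, which is usually acceptable for a daily digest.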

Summarize the Content with LLMs

Rather than storing full articles (which can be very lengthy), we’ll use LLMs to summarize them. For this project, we’re using ScrapeGraphAI and Firecrawl, which work in a similar way: you define the prompt and the output schema, call an API, and get back a JSON or Markdown result.

In our case, the result would be a concise summary generated by the LLM, instead of the raw article text. The idea is to condense each piece into an actionable blurb – something that tells you what the article is about and what insights it offers. This step is crucial because it saves you time: you only spend effort reading full articles that sound particularly relevant, while still capturing the gist of everything else.

We’re also asking for some tags, in case we want to navigate these notes by topic, and, of course, for the URL of the full article, so we can read in full the ones that seem interesting.
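To make the "prompt plus output schema" idea concrete, here is a minimal sketch of what we might send and how the answer could be validated. The prompt wording, the field names (`title`, `summary`, `tags`, `url`), and the `parse_summary` helper are assumptions for illustration. The actual ScrapeGraphAI or Firecrawl call is deliberately left out, since each tool has its own client and parameters.

```python
import json
from dataclasses import dataclass, field
from typing import Union

# Hypothetical prompt; adapt the wording to your niche.
PROMPT = (
    "Summarize this article in 3-5 sentences for a web scraping professional. "
    "Return the title, the summary, up to five topic tags, and the article URL."
)

# JSON Schema describing the structured output we ask the LLM to return.
OUTPUT_SCHEMA = {
    "type": "object",
    "properties": {
        "title": {"type": "string"},
        "summary": {"type": "string"},
        "tags": {"type": "array", "items": {"type": "string"}},
        "url": {"type": "string"},
    },
    "required": ["title", "summary", "tags", "url"],
}


@dataclass
class ArticleSummary:
    title: str
    summary: str
    url: str
    tags: list[str] = field(default_factory=list)


def parse_summary(raw: Union[str, dict]) -> ArticleSummary:
    """Validate the LLM response and normalize tags to lowercase slugs."""
    data = json.loads(raw) if isinstance(raw, str) else raw
    missing = [key for key in OUTPUT_SCHEMA["required"] if key not in data]
    if missing:
        raise ValueError(f"LLM response is missing fields: {missing}")
    tags = [
        str(tag).strip().lower().replace(" ", "-")
        for tag in data["tags"]
        if str(tag).strip()
    ]
    return ArticleSummary(
        title=data["title"].strip(),
        summary=data["summary"].strip(),
        url=data["url"].strip(),
        tags=tags,
    )
```

Validating the response before writing anything to the vault keeps malformed LLM output from turning into broken notes.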

This is an ideal use case for LLM scraping: we may want to add hundreds of sources, but we don’t want to write hundreds of scrapers to extract the full articles. By asking LLMs to summarize each article, we let them do the dirty work for us, without building custom scrapers.

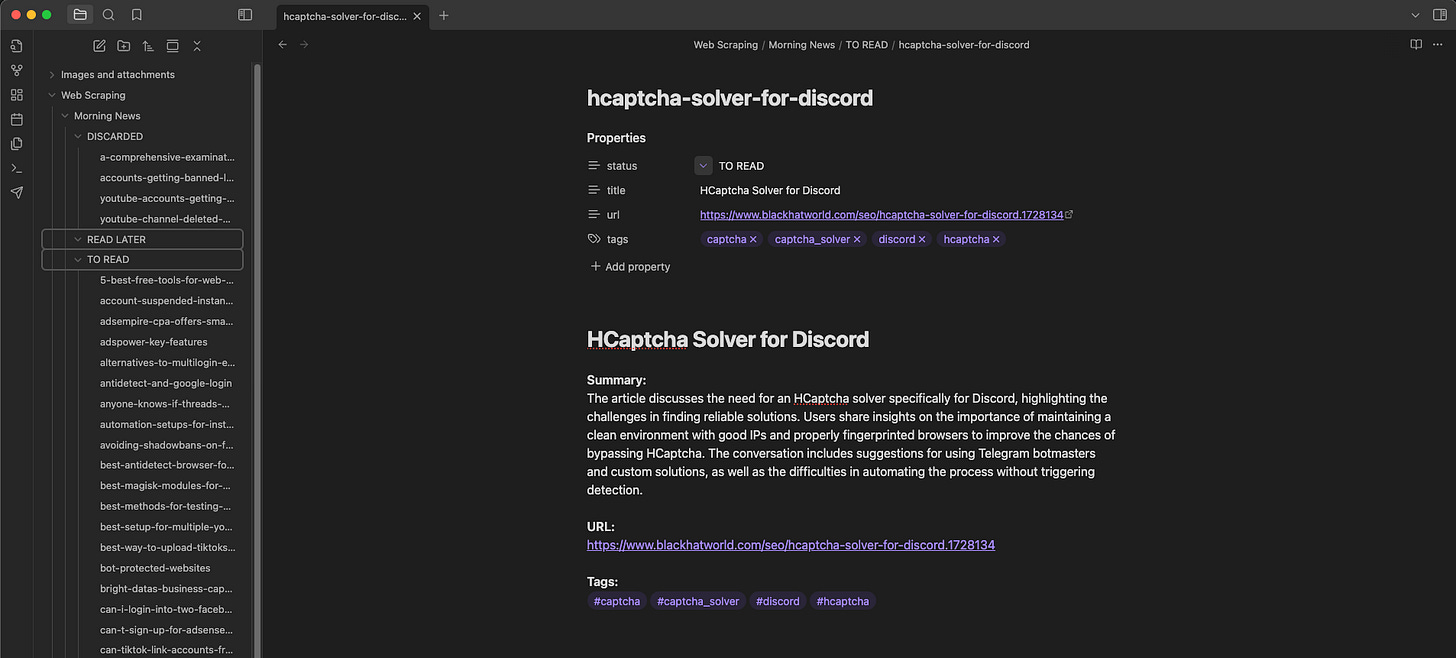

Create Markdown Notes in Obsidian

Once the AI returns a summary, our script will create a Markdown file with a formatting standard that makes it readable in Obsidian. A good practice is to include the article title, source, and a link back to the original post at the top of the note, followed by the summary text. You might also tag the note or put it in a specific folder (for example, an “Inbox” or “Daily Digests” folder in your Obsidian vault). Since Obsidian works with local Markdown files, this step could be as simple as saving the .md file to the vault directory. Some users treat their Obsidian “Inbox” as a holding area for new notes to review, which fits our use case well.
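As a rough illustration of this step, the sketch below renders a note with YAML front matter and saves it into an inbox folder. The front matter keys, the file naming scheme, and the helper names are assumptions, not the layout used in the repository. Tags are assumed to be simple slugs without special YAML characters.

```python
import re
from datetime import date
from pathlib import Path


def slugify(title: str) -> str:
    """Turn a title into a filesystem-friendly file name fragment."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    return slug[:80] or "untitled"


def yaml_quote(value: str) -> str:
    """Double-quote a YAML scalar, escaping backslashes and quotes."""
    return '"' + value.replace("\\", "\\\\").replace('"', '\\"') + '"'


def render_note(title, source, url, summary, tags, created: date) -> str:
    """Build the Markdown text: front matter, heading, source link, summary."""
    lines = [
        "---",
        f"title: {yaml_quote(title)}",
        f"source: {yaml_quote(source)}",
        f"url: {yaml_quote(url)}",
        f"created: {created.isoformat()}",
    ]
    if tags:
        lines.append("tags:")
        lines += [f"  - {tag}" for tag in tags]
    else:
        lines.append("tags: []")
    lines += ["---", "", f"# {title}", "", f"Source: [{source}]({url})", "", summary, ""]
    return "\n".join(lines)


def write_note(inbox: Path, note_text: str, title: str, created: date) -> Path:
    """Save the note as <date>-<slug>.md inside the given vault folder."""
    inbox.mkdir(parents=True, exist_ok=True)
    path = inbox / f"{created.isoformat()}-{slugify(title)}.md"
    path.write_text(note_text, encoding="utf-8")
    return path
```

Pointing `inbox` at a folder inside your vault is enough for the note to show up in Obsidian, since the app simply reads the Markdown files on disk.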

Review and Organize (Keep, Discard, or Read Later)

Now comes the human in the loop. With minimal effort each day, you can open Obsidian and see a set of new notes – each one a distilled summary of an article that came out recently on your chosen sources. Skim through these summaries. If a summary sparks an idea or seems particularly relevant, you might move that note into your permanent knowledge base, adding your two cents and connecting the dots with your other notes.

This is the “keep” scenario – you’re adding it to your second brain permanently. If a summary is not useful or off-topic, you can just delete that note (discard). And if a summary is intriguing but you want to dive deeper into the full article later, mark that note as “Read later”.

In effect, the AI has served as a first-pass filter, pre-reading everything for you and surfacing the main points so you can decide what deserves your limited attention.

By following the above steps, you essentially have an AI research assistant feeding your second brain with fresh knowledge every day. This not only keeps you informed about what’s trending or being talked about in your industry, but also helps you spot patterns across different sources. For example, if five different tech blogs in your list all started discussing a new web scraping technique or a browser update in the same week, that’s a strong signal of a hot topic – potentially something you might want to write about in your next blog post or newsletter. Your second brain notes will make these connections more visible, especially if you link related notes together or use tags for recurring themes.
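Spotting a topic that several sources discuss at once can also be approximated in code. The sketch below counts how many distinct sources used each tag and keeps the tags above a threshold. The `(source, tags)` input shape and the `min_sources` parameter are hypothetical, and the time window is assumed to be applied before calling the function.

```python
from collections import defaultdict


def trending_tags(notes, min_sources=3):
    """Return (tag, source_count) pairs for tags used by enough distinct sources.

    `notes` is an iterable of (source, tags) pairs, one per summarized article.
    """
    sources_by_tag = defaultdict(set)
    for source, tags in notes:
        for tag in tags:
            sources_by_tag[tag].add(source)
    hot = {
        tag: len(sources)
        for tag, sources in sources_by_tag.items()
        if len(sources) >= min_sources
    }
    # Most widely covered tags first, ties broken alphabetically.
    return sorted(hot.items(), key=lambda item: (-item[1], item[0]))
```

Counting distinct sources rather than raw mentions avoids one prolific blog making a topic look bigger than it is.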

Before continuing with the article, I wanted to let you know that I've started my community in Circle. It’s a place where we can share our experiences and knowledge, and it’s included in your subscription. Give it a try at this link.

The outcome of this process will look like the following

Now that we’ve seen the process, let’s move on to the practical part.

The scripts mentioned in this article are in the GitHub repository's folder 87.SECONDBRAIN, available only to paying readers of The Web Scraping Club.

If you’re one of them and cannot access it, please use the following form to request access.

Integrating the sources