How scraping a single website cost thousands of dollars in proxies

A story about the precautions you should always take when using proxies in your scraping projects.

Last month I had the great opportunity to test Nimble’s professional plan for free in our scraping operations at Databoutique.com, a marketplace for web-scraped data.

This great package, worth 3,600 USD, included Nimble IP (their proxy solution), the Nimble Browser (an AI-powered browser designed for web scraping), and the Nimble API, which collects and parses data directly, saving time for the end user.

Given all this bonanza, I started integrating the package into our daily operations, beginning with the low-hanging fruit.

First experience with residential proxies

As a first step, I switched some Scrapy scrapers that were already in production from our previous proxy provider to Nimble.

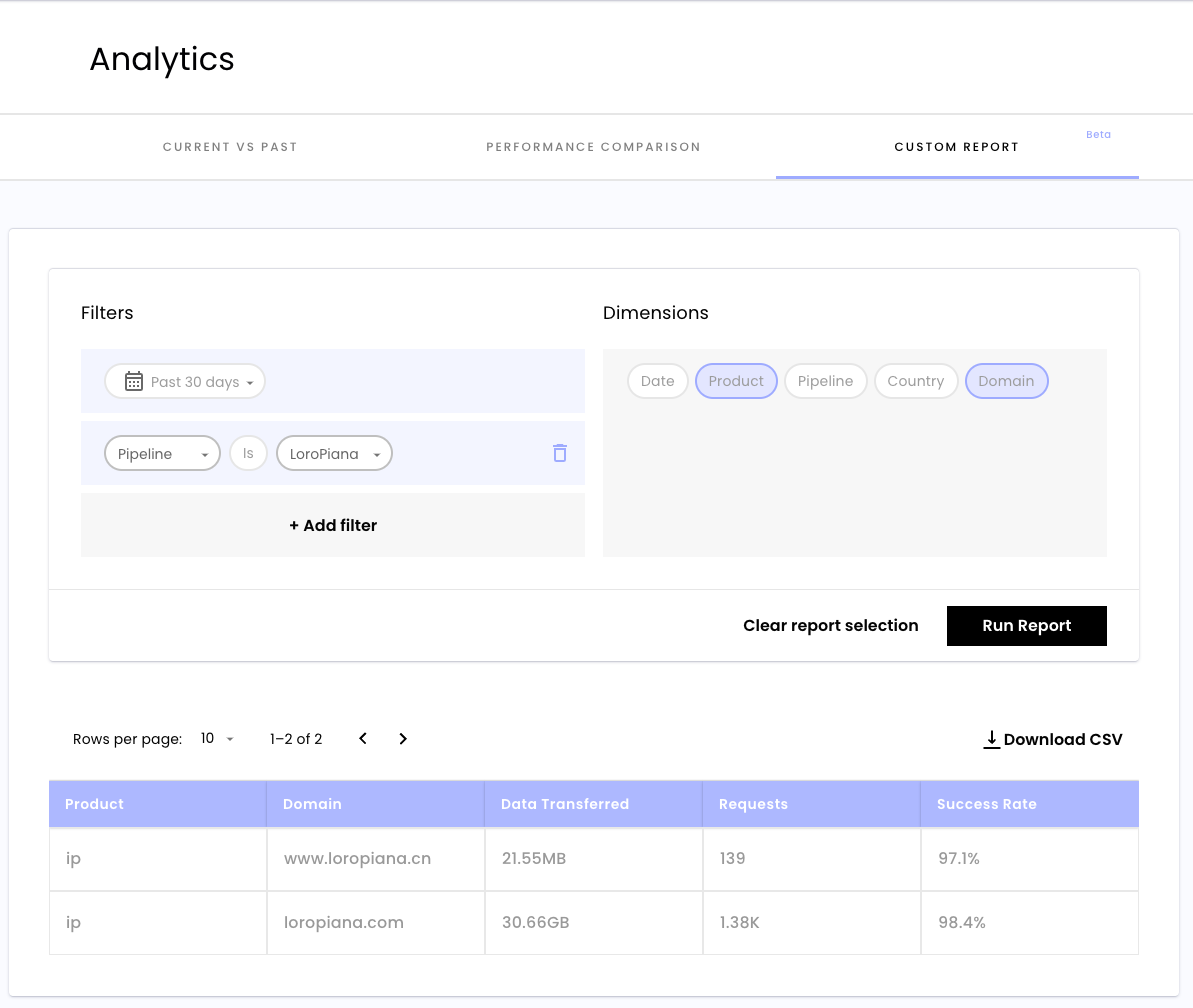

Nimble lets you create as many pipelines as you need for your data extractions, each with its own credentials and dashboard. In the case shown in the picture, I created a residential proxy pipeline to use on two similar websites. This makes accounting for the GB spent per website much easier, and optimizing your operations becomes easier too, thanks also to the advanced custom dashboard, currently in beta, which lets you create usage reports pipeline by pipeline.
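To make per-website accounting concrete in code, here is a minimal sketch assuming each target website gets its own pipeline with its own proxy username and password. The gateway host, port, and credentials are hypothetical placeholders, not Nimble’s real values. The function builds a URL in the `http://user:password@host:port` form that Scrapy’s `HttpProxyMiddleware` reads from `request.meta["proxy"]`.

```python
from urllib.parse import quote, urlsplit

# Hypothetical per-website pipelines: one set of proxy credentials per target
# site, so GB usage can be read per website on the provider's dashboard.
# All hosts, ports, usernames and passwords here are placeholders.
PIPELINE_CREDENTIALS = {
    "www.stoneisland.com": ("pipeline-stoneisland", "secret-1"),
    "www.neimanmarcus.com": ("pipeline-neimanmarcus", "secret-2"),
}
PROXY_GATEWAY = "proxy.example.com:8000"  # hypothetical host:port


def proxy_url_for(target_url: str) -> str:
    """Return the proxy URL of the pipeline dedicated to target_url's host."""
    host = urlsplit(target_url).hostname
    if host not in PIPELINE_CREDENTIALS:
        # Fail loudly rather than silently falling back to a shared pipeline
        raise KeyError(f"no dedicated proxy pipeline for {host!r}")
    user, password = PIPELINE_CREDENTIALS[host]
    # Percent-encode credentials so characters like '@' or ':' can't break the URL
    return f"http://{quote(user, safe='')}:{quote(password, safe='')}@{PROXY_GATEWAY}"
```

In a spider, a request could then be built as `scrapy.Request(url, meta={"proxy": proxy_url_for(url)})`. Raising on an unknown host is a deliberate choice: a new website should get its own pipeline instead of quietly sharing one.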

On average, I got a 99.3% success rate on residential proxies, which is pretty good, and everything was running smoothly.

The need for bypassing Akamai

I had an open issue in my backlog on one of our scrapers, which targets a website protected by Akamai.

Usually a good proxy is enough, but in this case things were different: the Scrapy spider kept being redirected to a JS challenge until it threw a “max redirections reached” error.

```
https://www.stoneisland.com/za/stone-island-junior/kid?bm-verify=AAQAAAAI_____7zyMRqP2v7uHtLz0nnWmJ4KaBcGazEmN9C6y_soSJRnaE3ArQc-7ikCEWWjYhLaSjLlDPkWLZRauZp6CZ9dVPTDCDpdo4iDI7j2q6AjzX47SFJv0EE5rB0pwqxnzH8vcRKD4n6QfkGe17QTT4qdKMGXG8nXQdT0I1Wsn8mae1j5Mg5WLHRjKGCXEu-4ue8LG_W2gObLTqJuUjYeA5ZulbRHEVy6aD1OIY4KWFWFsp18So_QyKuyOFv0h4Z93vKeLCc9YFCqnkwEvNb56T9htw>: max redirections reached
```

This looked like a job for the Nimble Browser, with its JS rendering engine.
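The `bm-verify` query parameter in that redirect is a handy signal. Here is a minimal sketch, assuming (from this single log line, not from Akamai documentation) that the parameter’s presence marks the challenge page. A check like this lets a spider record such a response as blocked instead of following redirects until Scrapy’s `REDIRECT_MAX_TIMES` limit is hit.

```python
from urllib.parse import parse_qs, urlsplit


def is_akamai_challenge(url: str) -> bool:
    """Heuristic: True if the URL carries the bm-verify query parameter."""
    # Assumption: bm-verify marks Akamai's JS challenge redirect, as seen in
    # the log line above. This is an observation, not a documented contract.
    query = parse_qs(urlsplit(url).query)
    return "bm-verify" in query
```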

Solution 1: Nimble Browser

I created a new pipeline for this website using the Nimble Browser solution and adapted the scraper to the new credentials, just as if I were plugging in a new browser. Easy win!

Maybe.

In fact, my Nimble Browser credits ran out before I could complete a single run. The dashboard showed 100k requests, more than expected but not dramatically so, and I wrongly didn’t give this discrepancy much importance.

Solution 2: Playwright with residential proxies

Then I moved to Playwright together with residential proxies. Since rendering the page was not an issue, I just added residential proxies so the scraper would also work from a data center.

To save some GB, I blocked the loading of all images on the website using Playwright’s routing feature.

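```python
from playwright.sync_api import Route

# Register on a page with: page.route("**/*", route_intercept)
def route_intercept(route: Route):
    # Abort image requests so they never consume proxy traffic
    if route.request.resource_type == "image":
        print(f"Blocking the image request to: {route.request.url}")
        return route.abort()
    # Let every other request through unchanged
    return route.continue_()
```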

Additionally, since I was not sure how to grab all the items from this website, which is technically peculiar, I decided to change the scraper’s behavior.

Instead of using the product list pages of this e-commerce website, I made the scraper read its sitemaps and then visit every product URL listed there. This increased the number of requests, but we’re talking about a website with fewer than 1k items per country, so relatively small, and I didn’t worry too much about it.
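Extracting product URLs from a sitemap needs only the standard library. This sketch assumes a plain `<urlset>` sitemap in the standard sitemaps.org namespace. The `is_product` predicate is a hypothetical stand-in, since the article doesn’t say how Stone Island’s product URLs can be told apart from other pages.

```python
import xml.etree.ElementTree as ET
from typing import Callable

# Namespace defined by the sitemaps.org protocol
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def product_urls_from_sitemap(xml_text: str, is_product: Callable[[str], bool]) -> list[str]:
    """Return the <loc> URLs of a <urlset> sitemap that pass is_product."""
    root = ET.fromstring(xml_text)
    urls = [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]
    return [url for url in urls if is_product(url)]


# Hypothetical rule: treat URLs ending in ".html" as product pages
sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://shop.example.com/za/jacket-123.html</loc></url>
  <url><loc>https://shop.example.com/za/about</loc></url>
</urlset>"""
print(product_urls_from_sitemap(sample, lambda u: u.endswith(".html")))
# ['https://shop.example.com/za/jacket-123.html']
```

Large sites often publish a `<sitemapindex>` pointing to several child sitemaps. In that case, you would first collect its `sm:sitemap/sm:loc` entries and fetch each child sitemap before applying the function.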

This solution worked like a charm on my laptop, so I pushed it to production. I kept testing it in production for a few days because Playwright got stuck at some point, so I optimized its usage, and this is the result:

Holy S*it!

I depleted my whole account and still didn’t have a single decent run!

OK, this is definitely something we can learn from.

What happened and how to avoid these mistakes

After some investigation, I found out that what happened was a chain of errors in how I planned proxy usage, combined with a strange behavior of the website that may or may not be intentional.

I was using a single pipeline that grouped 4 websites sharing the same residential proxy credentials, including Stone Island, the target of this scraper.

And this is the first error: if your provider allows it, use different credentials for each website. This way you can easily detect outliers and set thresholds per website, something I didn’t do.

I went through the dashboard to understand what happened, and something jumped out at me.

The websites in the green squares are the legitimate ones, while all the others are third-party services called by the Stone Island website when loading its pages.

For every GB spent on the real target website, I used at least another 5 GB on third-party services I didn’t care about at all.
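Turning a per-domain usage report into this ratio is simple arithmetic, and computing it for each website is one way to spot outliers early. In this sketch, both the report format (hostname mapped to GB) and the figures are hypothetical. They were chosen only to illustrate a 1:5 split like the one above.

```python
def third_party_ratio(usage_gb: dict[str, float], target_hosts: set[str]) -> float:
    """GB spent on non-target hosts for every GB spent on the target hosts."""
    target = sum(gb for host, gb in usage_gb.items() if host in target_hosts)
    third_party = sum(gb for host, gb in usage_gb.items() if host not in target_hosts)
    if target == 0:
        raise ValueError("no traffic recorded on the target hosts")
    return third_party / target


# Hypothetical report: hostname -> GB consumed through the proxy
report = {
    "www.stoneisland.com": 2.0,
    "tracker.example.com": 6.0,
    "cdn.thirdparty.example": 4.0,
}
print(third_party_ratio(report, {"www.stoneisland.com"}))  # 5.0
```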

For this reason, I should have been more aggressive in blocking third-party content besides images, adding this check to the route_intercept function:

```python
# Inside route_intercept, before the final route.continue_()
if "stoneisland" not in route.request.url:
    print(f"Blocking {route.request.url} as it does not contain Stone Island")
    return route.abort()
```

So the second lesson is to be more selective about the resources your browser loads when using Playwright, by writing different routing rules.
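One weakness of the `"stoneisland" not in route.request.url` check is that it inspects the whole URL. Third-party trackers often carry the current page URL in their query string, so a request to an analytics domain can still contain “stoneisland” and slip through. The sketch below is a stricter rule that matches on the hostname and also includes the image rule. It assumes `stoneisland.com` and its subdomains are the only hosts worth loading. `should_block` is a hypothetical helper name.

```python
from urllib.parse import urlsplit

# Assumption: only the target site's own domain (and its subdomains) is needed
ALLOWED_DOMAINS = ("stoneisland.com",)


def should_block(url: str, resource_type: str) -> bool:
    """Decide whether a browser request should be aborted to save proxy traffic."""
    if resource_type == "image":
        return True
    host = urlsplit(url).hostname or ""
    # Match on the hostname, not the whole URL: a tracker request whose query
    # string contains the page URL would fool a plain substring check.
    allowed = any(host == d or host.endswith("." + d) for d in ALLOWED_DOMAINS)
    return not allowed
```

Inside the Playwright handler, this becomes `return route.abort() if should_block(route.request.url, route.request.resource_type) else route.continue_()`. If the site serves its own scripts or stylesheets from a CDN on a different domain, that domain would have to be added to the allowlist. Otherwise, the page may not render.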

This does not apply to plain Scrapy spiders, since these third-party requests are all triggered by loading the page inside a browser.

But even looking only at traffic on the legitimate websites, the numbers didn’t match my expectations. Stone Island is a much smaller website than Neiman Marcus, so why was it using 5 times more GB?

I found out that when the scraper runs on a machine in a data center, some random pages of the Stone Island website keep reloading in an endless loop, generating a lot of traffic.

I don’t know if it’s a bug or a feature, but the result is that this cost me a lot of GB.
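A reload loop like this can at least be detected from inside the scraper. Here is a minimal sketch that counts navigations per URL and flags a page once a limit is exceeded. It assumes that more than a few loads of the same URL in one session means something is wrong. The default limit of 3 is an arbitrary choice, and `ReloadLoopGuard` is a hypothetical class.

```python
from collections import Counter


class ReloadLoopGuard:
    """Flags a URL once it has been loaded more than max_loads times."""

    def __init__(self, max_loads: int = 3):
        self.max_loads = max_loads
        self.loads = Counter()  # URL -> number of navigations seen

    def record(self, url: str) -> bool:
        """Record one navigation to url; return True if it looks like a loop."""
        self.loads[url] += 1
        return self.loads[url] > self.max_loads
```

With Playwright, `record` could be called from a `page.on("framenavigated", ...)` handler for navigations of `page.main_frame`. When it returns `True`, the page would be abandoned.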

While this behavior is unpredictable, it teaches the third lesson: even if you think you’re working on a trivial scraper, set a threshold on its proxy usage.
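A hard cap can also live inside the scraper itself. Here is a minimal sketch of a per-run byte budget. It counts only what the scraper sees (for example, response body sizes), so it only approximates what the provider bills and works best alongside any limits the provider offers. `ProxyBudget` and the cap value are hypothetical.

```python
class ProxyBudget:
    """Stops a scraper once the traffic it has counted exceeds a hard cap."""

    def __init__(self, max_bytes: int):
        self.max_bytes = max_bytes
        self.used_bytes = 0

    def charge(self, nbytes: int) -> None:
        """Add nbytes to the running total; raise once the cap is exceeded."""
        self.used_bytes += nbytes
        if self.used_bytes > self.max_bytes:
            raise RuntimeError(
                f"proxy budget exceeded: {self.used_bytes} > {self.max_bytes} bytes"
            )


# Example: a 2 GB cap for one run
budget = ProxyBudget(max_bytes=2 * 1024**3)
```

In a Scrapy spider, each callback could call `budget.charge(len(response.body))` and turn the `RuntimeError` into `scrapy.exceptions.CloseSpider` to stop the crawl cleanly.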

Thanks to Nimble for letting me test their tools, even if the trial ended in an unpredictable way. I could still appreciate the quality of their tools, and thanks to their reporting system, I could easily find where the issues were hidden. I hope this article helps you save money and avoid the same errors I made.