THE LAB #90: Camoufox Server in AWS

How to create a cluster of Camoufox instances on AWS

In the latest episode of The Lab, we created a working Docker image for Camoufox. That is less trivial than it might seem, judging by the questions on the TWSC Discord server.

Before proceeding, let me thank Decodo, the platinum partner of the month. They are currently running a 50% off promo on their residential proxies using the code RESI50.

In fact, it took several hours to get to a working solution. Now that we have it, let’s deploy it on AWS and add a cluster of Camoufox instances to our proxy infrastructure.

Building and Tagging the Docker Image

Now that we have our Camoufox Docker image built and pushed to Amazon ECR, the next step is to configure the AWS infrastructure to deploy and scale it. This follow-up will focus on how to set up the AWS services (like EC2, Auto Scaling, and Load Balancing) to run multiple Camoufox containers, and will highlight alternatives (ECS, EKS, etc.) with their differences. We assume you have a basic understanding of AWS, so we’ll dive straight into the architecture and setup.

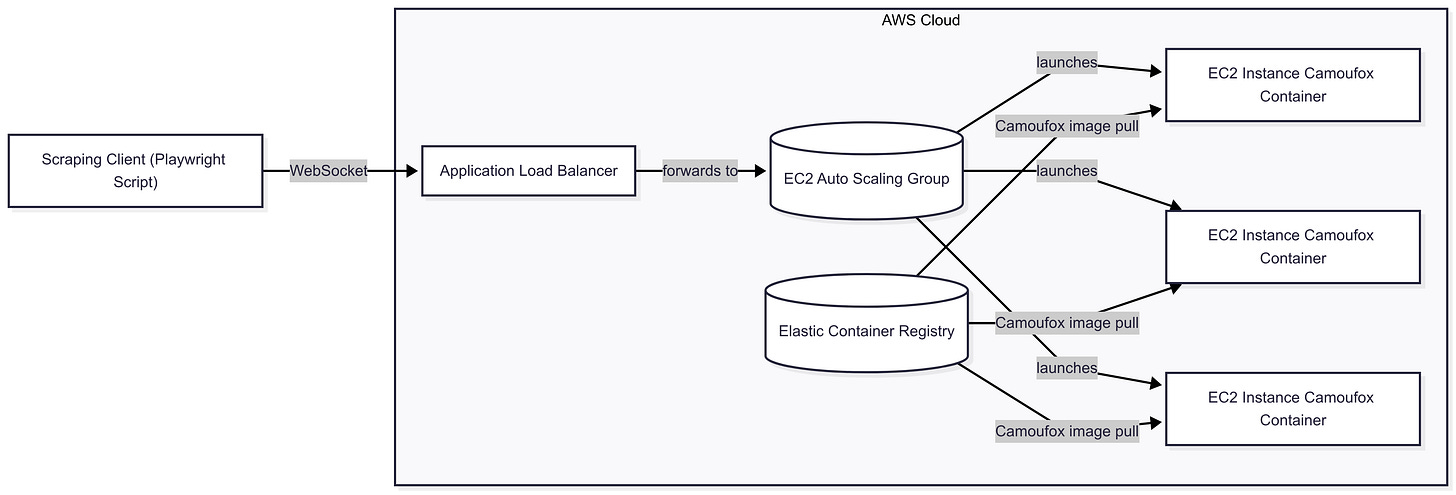

AWS Architecture Overview

Our deployment will use several AWS components working together:

Amazon ECR (Elastic Container Registry): Stores the Camoufox Docker image, allowing EC2 instances or other services to pull it.

EC2 Instances: Virtual machines that will run the Camoufox container. Each instance will host one Docker container running Camoufox’s headless browser server.

Auto Scaling Group (ASG): Manages a fleet of EC2 instances, automatically launching or terminating instances based on demand. This provides the elasticity to handle variable scraping load.

Application Load Balancer (ALB): Distributes incoming client connections (including WebSocket connections) across the EC2 instances. The ALB serves as a single entry point for the scraping clients and balances the load. Later in the article we compare it with the Network Load Balancer (NLB), which is what we ended up using.

IAM Roles and Security: An IAM role attached to the EC2 instances will grant permission to pull images from ECR. Security Groups will control network traffic (e.g., ALB allowing inbound web traffic, instances allowing traffic from the ALB).

In essence, the client’s scraper connects to an ALB, which forwards the traffic to one of the Camoufox containers running in an EC2 Auto Scaling Group. As load increases, the ASG can add more instances (each with a Camoufox container), and scale down when load decreases to save costs. This design ensures both load distribution via the ALB and automatic elasticity via the ASG.

Architecture Diagram

Below is a Mermaid diagram illustrating the AWS infrastructure for our Camoufox deployment:

In this diagram, the scraping client (your scraping script) connects via WebSocket (ws://...) to the ALB, which then routes the connection to one of the EC2 instances running a Camoufox container.

All EC2 instances retrieve the Docker image from ECR (which holds our Camoufox image). The Auto Scaling Group manages the EC2 pool, ensuring there are enough instances to handle the load. AWS’s Application Load Balancer natively supports WebSocket traffic, so it can handle the bi-directional connections required by Camoufox’s remote browser server.

Step-by-Step AWS Setup

Let’s go through the setup process, assuming the Docker image is already in ECR:

ECR Repository & Image

Prerequisite: You should have an ECR repository containing your Camoufox image (e.g., your-account.dkr.ecr.region.amazonaws.com/camoufox:latest). Make sure the image is pushed and available. This repository will be used by our instances to pull the container image.

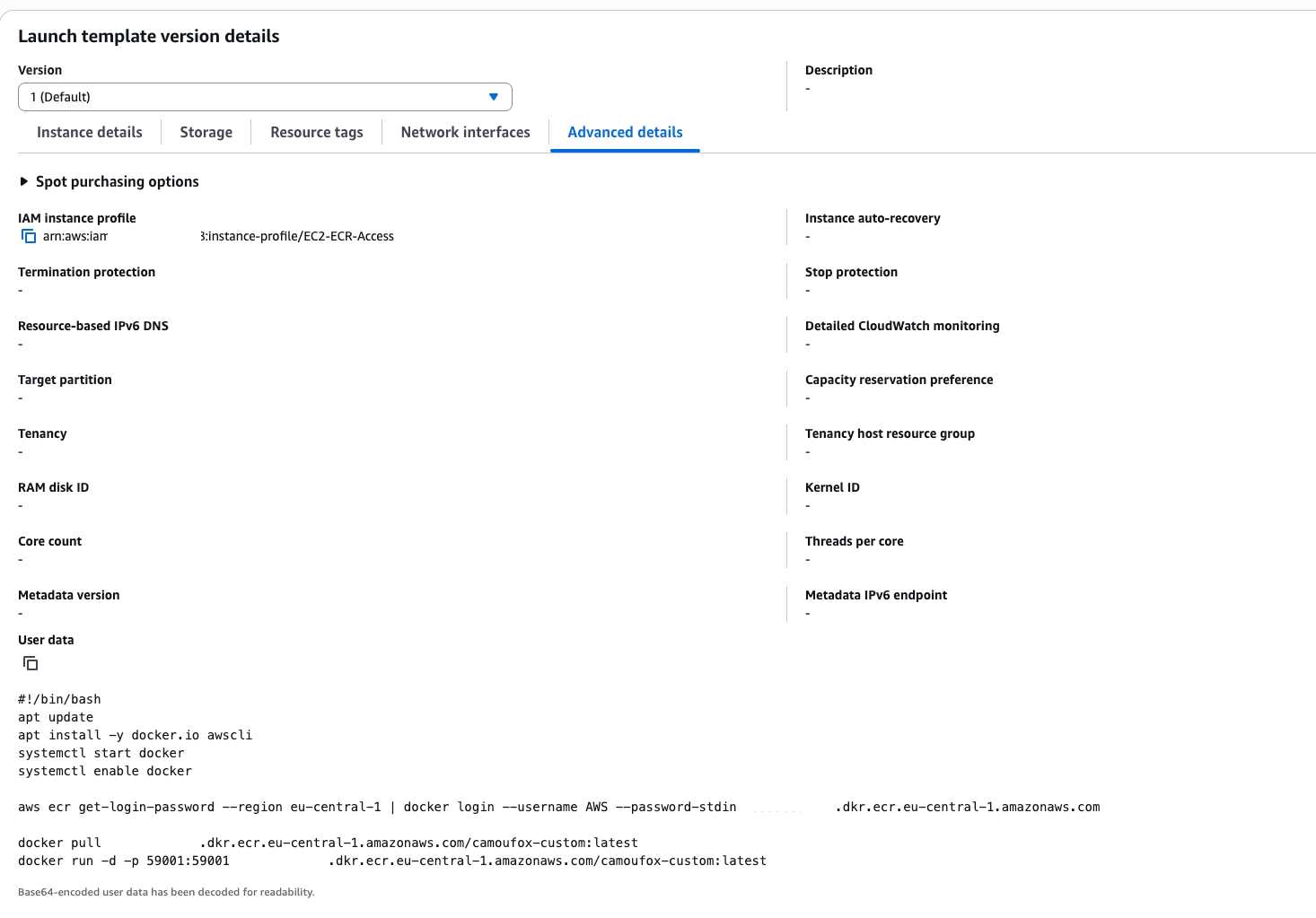
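Before building anything on top of the repository, it can help to confirm that the image tag is really there. The snippet below is a minimal sketch: the account ID, region, repository name, and the helper names `image_uri` and `check_image` are placeholders made up for illustration, not values from this setup.

```shell
# Hypothetical values: replace with your own account ID, region, and repository.
ACCOUNT_ID="123456789012"
REGION="eu-west-1"
REPO="camoufox"
TAG="latest"

# Build the full image URI: <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>
image_uri() {
  printf '%s.dkr.ecr.%s.amazonaws.com/%s:%s\n' "$1" "$2" "$3" "$4"
}

# Ask ECR for the push timestamp of the tag; fails if the tag does not exist.
check_image() {
  aws ecr describe-images \
    --region "$REGION" \
    --repository-name "$REPO" \
    --image-ids imageTag="$TAG" \
    --query 'imageDetails[0].imagePushedAt' \
    --output text
}

echo "Expecting image: $(image_uri "$ACCOUNT_ID" "$REGION" "$REPO" "$TAG")"
# check_image   # uncomment once the AWS CLI is configured with access to ECR
```

If `check_image` prints a timestamp, the tag exists and the instances should be able to pull it, provided their role has read access to ECR.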

EC2 Launch Template/User Data

Create an EC2 Launch Template that defines how to launch your instances (AWS now recommends Launch Templates over the older Launch Configurations):

AMI: Use a Linux AMI with Docker installed (for example, Amazon Linux 2 or Ubuntu with Docker). If the AMI doesn’t have Docker, include installation commands in user data.

Instance Role: Attach an IAM Role to the instances that grants read access to ECR. For example, use the AWS-managed policy AmazonEC2ContainerRegistryReadOnly on the role. This avoids needing to embed AWS credentials in the instance.

Security Group: Set up a security group for instances allowing inbound traffic from the ALB on the Camoufox port (e.g., 59001), and allowing the instance to reach out to ECR (HTTPS calls to ECR endpoints).

User Data Script: Write a user-data bash script that will run on instance launch to start the Camoufox container. This script should:

Log in to ECR and pull the Docker image. For example, use the AWS CLI command to authenticate Docker to ECR:

    aws ecr get-login-password --region <region> | \
      docker login --username AWS --password-stdin <your-account>.dkr.ecr.<region>.amazonaws.com

This command gets an auth token and pipes it to docker login, as recommended by AWS. With the IAM role in place, the instance can do this without explicit credentials.

Run the Docker container. For instance:

    docker pull <your-account>.dkr.ecr.<region>.amazonaws.com/camoufox:latest
    docker run -d --name camoufox-server -p 59001:59001 <your-account>.dkr.ecr.<region>.amazonaws.com/camoufox:latest

Here’s the full list of commands I added to the user data script, where I also installed and started Docker at every launch.
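As a rough sketch of what such a user-data script could look like (an illustration under assumptions, not necessarily the exact commands used for this article): it assumes an Amazon Linux 2 AMI with a recent AWS CLI, Docker installable through yum, and an ECR repository in the same account as the instance. The file name `camoufox-user-data.sh` is arbitrary.

```shell
# Write a sketch of the user-data script to a local file.
cat > camoufox-user-data.sh <<'EOF'
#!/bin/bash
set -euo pipefail

# Assumption: Amazon Linux 2, where Docker is available from the yum repos.
yum install -y docker
systemctl enable --now docker

# Discover the region (IMDSv2) and the account ID instead of hard-coding them.
TOKEN=$(curl -s -X PUT "http://169.254.169.254/latest/api/token" \
  -H "X-aws-ec2-metadata-token-ttl-seconds: 300")
REGION=$(curl -s -H "X-aws-ec2-metadata-token: $TOKEN" \
  http://169.254.169.254/latest/meta-data/placement/region)
ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
REGISTRY="${ACCOUNT_ID}.dkr.ecr.${REGION}.amazonaws.com"

# Authenticate Docker to ECR with the instance role, then start Camoufox.
aws ecr get-login-password --region "$REGION" | \
  docker login --username AWS --password-stdin "$REGISTRY"
docker pull "$REGISTRY/camoufox:latest"
docker run -d --name camoufox-server --restart unless-stopped \
  -p 59001:59001 "$REGISTRY/camoufox:latest"
EOF

# Catch syntax errors locally before the script ever reaches an instance.
bash -n camoufox-user-data.sh && echo "user-data script parses cleanly"
```

Generating the file locally keeps the script under version control and lets you paste it into the Launch Template's user data field. The `--restart unless-stopped` flag is an addition of this sketch, so the container comes back if the Docker daemon restarts.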

Application vs Network Load Balancer

When routing external traffic to your EC2 instances, AWS provides two main types of load balancers: Application Load Balancer (ALB) and Network Load Balancer (NLB). Understanding the differences is key to choosing the right one for your scraping infrastructure.

Application Load Balancer (ALB) works at Layer 7 (HTTP/HTTPS) of the OSI model. It parses incoming requests and offers advanced features like path-based routing, host-based routing, content rewriting, and native WebSocket support. It's ideal for traditional web apps and REST APIs, especially when you need routing logic based on headers or URLs.

Network Load Balancer (NLB) operates at Layer 4 (TCP/UDP). It doesn’t inspect application-layer content and simply forwards raw TCP packets to your targets. NLBs are designed for extreme performance: they handle millions of concurrent connections with low latency and are better suited for non-HTTP protocols or long-lived connections like WebSockets or gRPC.

We selected NLB for our architecture for three main reasons:

Performance: Camoufox clients initiate persistent WebSocket connections. NLB handles these at the TCP level without interfering with the upgrade handshake, resulting in lower latency and better throughput.

Simplicity: We didn’t need URL-based routing or HTTPS termination; just a TCP proxy on port 59001.

Transparency: NLB does no smart routing or rewriting, which guarantees that what reaches Camoufox is exactly what the client sent.

Unless you're deploying a frontend-facing web app or need HTTP-layer features, NLB is often a more robust choice for infrastructure-oriented services like headless browsers.

After deciding on the right type of load balancer, we need to set up its components.

Listeners: When setting up your NLB, create a TCP listener on port 59001, or whatever port your Camoufox container exposes. Since the WebSocket connection is handled at the TCP level, there’s no need to configure protocols like HTTP or HTTPS. The NLB simply forwards the TCP connection as-is to one of the registered EC2 targets.

This simplicity is exactly why we chose NLB over ALB: no WebSocket upgrade headers, no path-based routing, no protocol guessing, just a raw, persistent connection between your Playwright client and Camoufox.

Target Group: Create a target group for the NLB, using the Instance target type and TCP protocol, on port 59001. Once the target group is attached to your Auto Scaling Group, each EC2 instance will be registered automatically and receive traffic on that port.

For health checks, choose TCP health checks on port 59001. This tells the NLB to consider a target healthy as long as the port is open and accepting connections. NLB target groups can also run HTTP or HTTPS health checks against a path, but a TCP check is enough for our setup, since we only need to verify the container is listening on the correct port.

This minimalistic health check works well with our lightweight headless browser service.
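The load balancer, target group, and listener described above can also be created from the AWS CLI. This is a minimal sketch, not the exact configuration used here: the VPC and subnet IDs, the resource names `camoufox-nlb` and `camoufox-tg`, and the function name `create_nlb_stack` are placeholders.

```shell
# Hypothetical identifiers: substitute your own VPC and public subnets.
VPC_ID="vpc-0123456789abcdef0"
PUBLIC_SUBNETS="subnet-aaaa1111 subnet-bbbb2222"
PORT=59001

create_nlb_stack() {
  # Internet-facing Network Load Balancer in the public subnets.
  # $PUBLIC_SUBNETS is left unquoted on purpose so each ID becomes one argument.
  nlb_arn=$(aws elbv2 create-load-balancer \
    --name camoufox-nlb --type network --scheme internet-facing \
    --subnets $PUBLIC_SUBNETS \
    --query 'LoadBalancers[0].LoadBalancerArn' --output text)

  # TCP target group with a TCP health check on the Camoufox port.
  tg_arn=$(aws elbv2 create-target-group \
    --name camoufox-tg --protocol TCP --port "$PORT" \
    --vpc-id "$VPC_ID" --target-type instance \
    --health-check-protocol TCP --health-check-port "$PORT" \
    --query 'TargetGroups[0].TargetGroupArn' --output text)

  # TCP listener that forwards every connection to the target group.
  aws elbv2 create-listener \
    --load-balancer-arn "$nlb_arn" --protocol TCP --port "$PORT" \
    --default-actions "Type=forward,TargetGroupArn=$tg_arn" > /dev/null

  # Print the target group ARN, which the Auto Scaling Group needs later.
  echo "$tg_arn"
}

# Usage, once the AWS CLI is configured: TG_ARN=$(create_nlb_stack)
```

Keeping the target group ARN around matters because attaching it to the Auto Scaling Group is what makes new instances register themselves automatically.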

If your NLB has no security group attached (NLBs have supported security groups since 2023, but only when one is associated at creation time), access control is handled by the security groups attached to your EC2 instances. Ensure those groups allow inbound TCP traffic on port 59001 from the NLB’s IP range (or from 0.0.0.0/0 if your clients connect from the public internet). Also, verify that outbound traffic to ECR and the web is permitted so the instances can pull the image and the containers can perform scraping.
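Opening the Camoufox port on the instance security group is a single CLI call. The sketch below uses a made-up security group ID and a documentation-only CIDR block as the client range; `allow_camoufox_port` is just a hypothetical wrapper name.

```shell
INSTANCE_SG="sg-0123456789abcdef0"   # hypothetical security group of the EC2 instances
CLIENT_CIDR="203.0.113.0/24"         # hypothetical client range (a documentation block)
PORT=59001

# Allow inbound TCP on the Camoufox port from one CIDR range.
# $1 = security group ID, $2 = source CIDR
allow_camoufox_port() {
  aws ec2 authorize-security-group-ingress \
    --group-id "$1" --protocol tcp --port "$PORT" --cidr "$2"
}

# Usage, once the AWS CLI is configured:
# allow_camoufox_port "$INSTANCE_SG" "$CLIENT_CIDR"
```

Restricting the rule to the ranges your scrapers actually connect from is usually preferable to 0.0.0.0/0, since anyone who can reach the port can drive the browser.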

This article has no accompanying code in The Web Scraping Club repository, since it’s all about AWS configuration. If you’re looking for the code from the previous articles, you can find it there.

Auto Scaling Group (EC2)

To deploy Camoufox at scale, we use an Auto Scaling Group (ASG) to manage EC2 capacity dynamically.

VPC & Subnets

Attach the ASG to the same VPC and subnets as your NLB. Typically, EC2 instances live in private subnets, while the NLB is placed in public subnets to expose it to the internet. Make sure your instances have outbound internet access (either via public IP or NAT Gateway) so they can pull the Docker image from ECR and perform web requests during scraping.
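To make the subnet and target group wiring concrete, here is a minimal sketch of creating the group from the CLI. Everything named here is an assumption for illustration: the ASG and Launch Template names, the private subnet IDs, the capacity numbers, and the `create_asg` wrapper. `TG_ARN` stands for the ARN of the NLB target group.

```shell
# Hypothetical names, IDs, and sizes: adjust to your own environment.
ASG_NAME="camoufox-asg"
LAUNCH_TEMPLATE="camoufox-lt"
PRIVATE_SUBNETS="subnet-cccc3333,subnet-dddd4444"   # comma-separated list
TG_ARN="<target-group-arn>"

create_asg() {
  # \$Latest is passed literally so the group always uses the newest template version.
  aws autoscaling create-auto-scaling-group \
    --auto-scaling-group-name "$ASG_NAME" \
    --launch-template "LaunchTemplateName=$LAUNCH_TEMPLATE,Version=\$Latest" \
    --min-size 1 --max-size 5 --desired-capacity 2 \
    --vpc-zone-identifier "$PRIVATE_SUBNETS" \
    --target-group-arns "$TG_ARN" \
    --health-check-type ELB --health-check-grace-period 300
}

# Usage, once the AWS CLI is configured: create_asg
```

Setting the health check type to ELB lets the group replace instances that fail the load balancer's TCP check, and the grace period gives a fresh instance time to install Docker and pull the image before it is judged.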