The Lab #53: Bypassing AWS WAF

Scraping websites protected by AWS WAF using an hybrid approach

Some days ago, one member of the community of The Web Scraping Club asked for some advice on how to bypass the protection by AWS WAF to scrape data from an API endpoint.

Given that we’re talking about scraping public data, I thought it could be interesting to share with you the solution I’ve worked on.

Of course, the following technique must be used for legitimate purposes and not to harm the target website or its business.

Before diving into the solution, what is a WAF and what are the peculiarities of the AWS WAF?

What is a WAF?

A Web Application Firewall (WAF) is a specialized security system designed to protect web applications by filtering and monitoring HTTP traffic between a web application and the Internet. It operates by analyzing the data packets and requests sent to a web application and filtering out potentially harmful requests based on predefined security rules. The primary function of a WAF is to protect against common web exploits and vulnerabilities, such as SQL injection, cross-site scripting (XSS), cross-site request forgery (CSRF), and other OWASP Top 10 threats.

A WAF typically works by sitting in front of a web application, serving as an intermediary between the client and the server. It inspects incoming and outgoing traffic, allowing legitimate traffic to pass through while blocking malicious traffic. This inspection can be done based on various factors, such as IP addresses, HTTP headers, URL patterns, and the payload content.

How do we identify the AWS WAF?

While this technology is not identifiable by Wappalyzer, you can easily understand if a website is using it by inspecting the session cookies.

If you find the key aws-waf-token, it’s pretty self-explanatory that the website is using the AWS WAF.

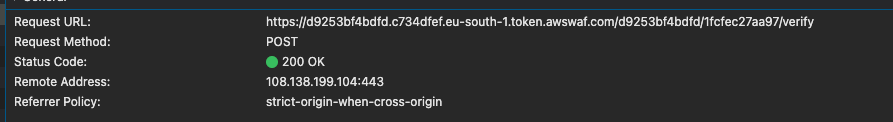

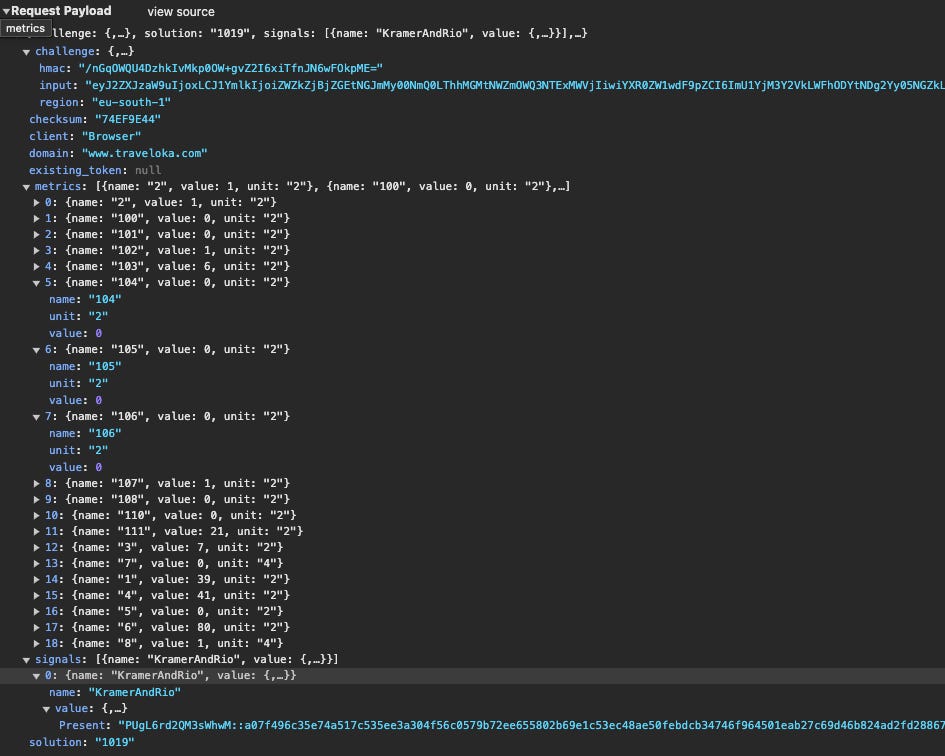

From what I understood by analyzing the behavior of some websites, a request is sent to the AWS servers to validate your browser configuration at the beginning of the browsing session.

As you can see in the payload, it’s not clear what is going to be sent to the server and a reverse engineering process is required to understand what’s happening under the hood.

If you’ve been following this newsletter for some time, you already know what I’m saying now: this process is time consuming and requires continuous updates, so it’s a task for companies selling commercial solutions to web scrapers.

If our main business is selling data, we have two options: buying commercial solutions or being creative with the tools we have to make our scrapers more similar to humans.

In fact, instead of reverse engineering the anti-bot solution, mimicking a human interacting with a website using a legit hardware and software stack is a more effective approach, capable of lasting for longer periods since it should not be affected by the releases of anti-bots.

How AWS WAF works

As we have seen in the previous paragraph, AWS WAF throws a challenge in the background in our browser as soon as we visit the website. If the configuration seems legit, we receive a cookie that certifies that we’ve passed the test and we can keep browsing without any other control, until the cookie deprecation.

After it expires, a new challenge is thrown and the wheel keeps spinning. The frequency of this test depends probably on the website configuration and it may differ from case to case.

It’s an approach common to other bot protection measures as we just saw with the Bearer Token some weeks ago.

While it’s a lightweight approach for the website, since there’s no constant monitoring of the visitor’s activity, this methodology could be exploited to write an efficient scraper, as we’ll see now in the case of Traveloka, a flights and hotel fares aggregator for the APAC world.

As always, if you want to have a look at the code, you can access the GitHub repository, available for paying readers. You can find this example inside the folder 53.AWS_WAF

If you’re one of them but don’t have access to it, please write me at pier@thewebscraping.club to get it.

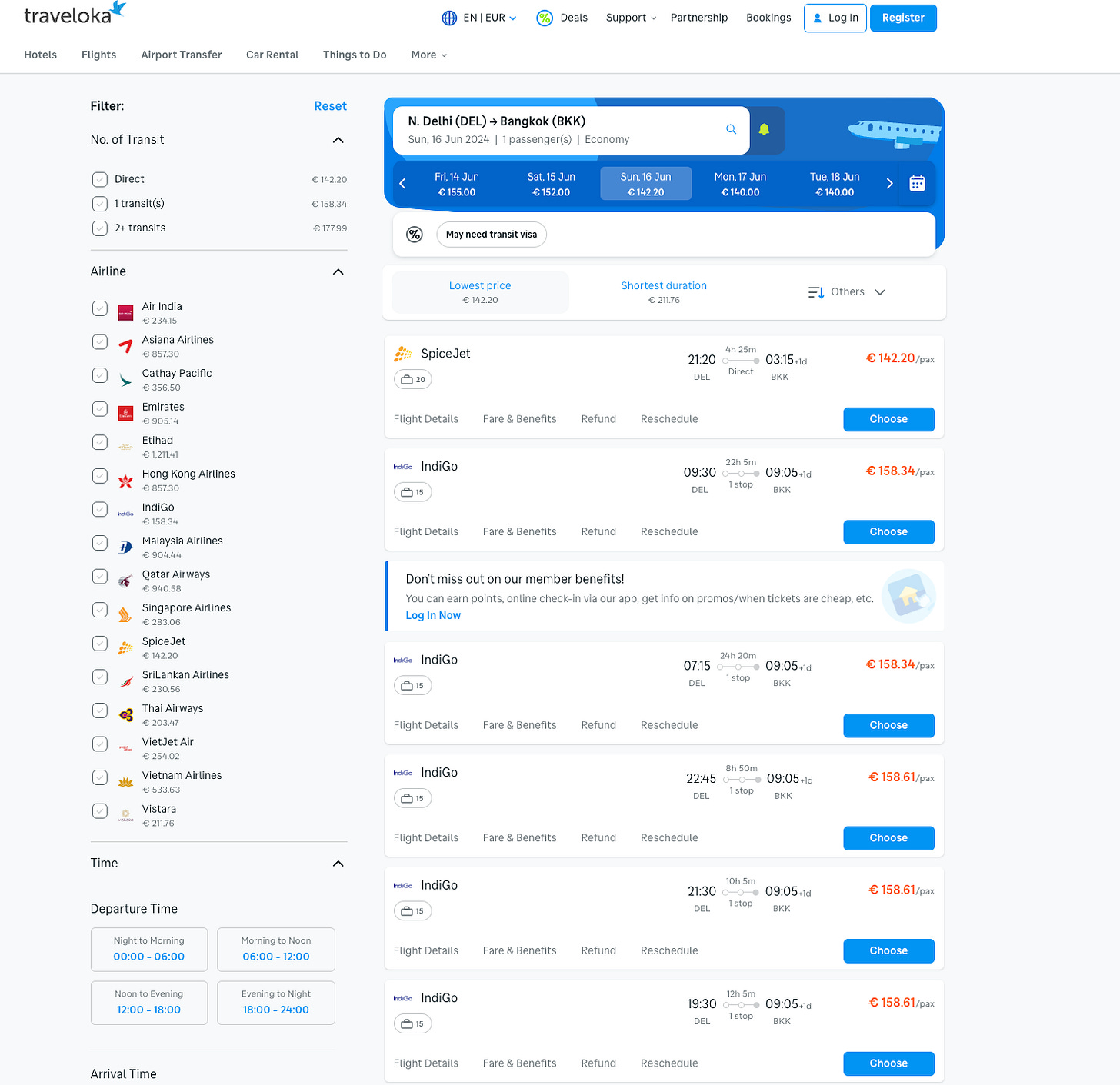

Examining the Traveloka website

Traveloka’s website has the traditional architecture of the industry: a home page, a search bar for hotels or flight routes, and, once we select the route and a time for our departure, the list of offers from different airline companies.

In order to load this data, usually there’s an API behind the front end and Traveloka makes no exception.

We have a POST request with the route information and some additional info in the payload.

curl 'https://www.traveloka.com/api/v2/flight/search/oneway' \

-H 'accept: */*' \

-H 'accept-language: en-US,en;q=0.9' \

-H 'content-type: application/json' \

-H 'cookie: selectedCurrency=EUR; currentCountry=IT; tv-repeat-visit=true; countryCode=IT; aws-waf-token=b6ac2c13-29d5-4f86-95a5-73903c784c47:DgoAhDUoz7YDAAAA:lkKEO0jAbax0/WqI1zMHelpnt/rI3wGD+t+66c+UWYsRD9lPdvjz+Z2cRCSFSeXTSA4zbzakIrab0/ckkvsPKH1dWoGYjUfDTLlsZVBcTTSJrH8KCkE9ZhdlDg5euQQzEmOu074HbFQ0GlbAc4VQsHmRk7Xe5XQvYtYz0Jc/u5grIqwbw4p01TEHKsAGEGVuRc8=; tvl=A3IvxWd/GJSwQafHmC3w6G8ICzVtxyfiYeyuSJlhufE6pzKOC7QVMHH+jpKIeDLow+AT8npt8+iEbJ1BLVsP6RiBKGEWVBZkEO1axIeYcyrnR8L6zqiyLXL0w/O6kFJLtEIhxFAMDSrD5WCsfwMHSrzME0HNmMOjNr0lQfzMUfpIaFVPwPCxQefTLSs9kReGBfi1NjCikXdEeJc6ZJJKY4sMbejHr0QQfqAFOCYNBWPh2luex9wS4XFCiVupQ6ddUB0WEfH/Fm8=~djAy; tvs=bPqvVC1YfJBQjiihg7CLqsjuzDqXHA/FddWAXUK1S2YIFIYikdiRXT+S9MUEPFWf9jwKwywLLRwG/RDWJcxWk8wgL1JyYgbN+V/RmnEGgVk16FMMqrwcGWzYU/R6IiudR24D8oM7l+aAnoMIbMxTsdLrxOUpUEDJryabfA14+t2UpzgCsa4J54MfO9eZUqAjj1N3d3ifKRvxTUX5egZK6DrLPO/cO0pLSaUvZ2PfEyQgVkqmM0/COREh02kmGCZ3OuH8m4Mca1L3SrSiWgRAdp9q3jRE0/7HhVU=~djAy; _dd_s=logs=1&id=65e1ce4a-ac32-45b2-bf1d-25b0eb10afb0&created=1717653197178&expire=1717654107146&rum=0' \

-H 'origin: https://www.traveloka.com' \

-H 'priority: u=1, i' \

-H 'referer: https://www.traveloka.com/en-en/flight/fullsearch?ap=DEL.BKK&dt=16-6-2024.NA&ps=1.0.0&sc=ECONOMY' \

-H 'sec-ch-ua: "Brave";v="125", "Chromium";v="125", "Not.A/Brand";v="24"' \

-H 'sec-ch-ua-mobile: ?0' \

-H 'sec-ch-ua-platform: "macOS"' \

-H 'sec-fetch-dest: empty' \

-H 'sec-fetch-mode: cors' \

-H 'sec-fetch-site: same-origin' \

-H 'sec-gpc: 1' \

-H 'user-agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36' \

-H 'x-domain: flight' \

-H 'x-route-prefix: en-en' \

--data-raw '{"fields":[],"clientInterface":"desktop","data":{"currency":"EUR","isReschedule":false,"locale":"en_EN","numSeats":{"numAdults":1,"numChildren":0,"numInfants":0},"seatPublishedClass":"ECONOMY","destinationAirportOrArea":"BKK","flexibleTicket":false,"flightDate":{"year":2024,"month":6,"day":16},"sourceAirportOrArea":"DEL","newResult":true,"seqNo":null,"searchId":"e55da74a-7ee0-4ede-8f34-1824f0d47d4c","visitId":"300d87cc-99fb-4d4f-9da1-ab767ca06ab5","utmId":null,"utmSource":null,"searchSpecRoutesTotal":1,"trackingContext":{"entrySource":""},"searchSpecRouteIndex":0,"journeyIndex":0}}'Playing around with Curl and the API endpoint, I’ve deduced that the Cookies relevant to our scope are:

selectedCurrency

currentCountry

tv-repeat-visit

countryCode

aws-waf-token

tvs

tvl

The first four can be set with fixed values while the last three are assigned to our browser session as we start navigating the website.

For our test purposes, we’re using the same payload for the requests, since it’s not important on this occasion to change routes and dates of flights.

First try: using only Scrapy

Let’s see how the website behaves if we use Scrapy to get data, checking if we’re able to get the cookies we need.

In the Scrapy folder of the repository, you can find the full scraper, where we basically use a legitimate set of headers for our requests, load the home page, and then go to the list of the offers for a certain travel route.

HEADER={

"accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8",

"accept-language": "en-US,en;q=0.5",

"cache-control": "max-age=0",

"priority": "u=0, i",

"sec-ch-ua": "\"Chromium\";v=\"124\", \"Brave\";v=\"124\", \"Not-A.Brand\";v=\"99\"",

"sec-ch-ua-mobile": "?0",

"sec-ch-ua-platform": "\"macOS\"",

"sec-fetch-dest": "document",

"sec-fetch-mode": "navigate",

"sec-fetch-site": "none",

"sec-fetch-user": "?1",

"sec-gpc": "1",

"upgrade-insecure-requests": "1",

}

LOCATIONS = location_file.readlines()

def start_requests(self):

for i, url in enumerate(self.LOCATIONS):

yield Request(url, callback=self.get_flights, headers=self.HEADER, dont_filter=True)

def get_flights_page(self, response):

url='https://www.traveloka.com/en-en/flight/fullsearch?ap=DEL.BKK&dt=16-6-2024.NA&ps=1.0.0&sc=ECONOMY'

yield Request(url, callback=self.read_token, headers=self.HEADER, dont_filter=True)

def read_cookies(self, response):

print("End of scraper. No cookies get and cannot use the API")While we’re not blocked by getting the HTML of these pages, this is pretty unuseful. In the offers page code, there’s no information about the different fares, since they’re dynamically loaded from the API call response.

Additionally, we don’t get any interesting cookie we can use to call the API, so this scraper leads to nowhere. We need to change our approach.

Second try: Scrapy + Playwright

To scrape the travel fares from Traveloka we need to get the cookies required by the API endpoint, especially the aws-waf-token, and use them in our post request.

Since these cookies are assigned when we browse the website for the first time, why don’t we use Playwright to collect them and then inject them in a Scrapy POST call?

In this way, we can use a headful solution just to make one request and then use Scrapy and its ability to handle parallelism to create an efficient scraper capable of hundreds of requests in a few minutes.

In fact, once we get the aws-waf-token, at least for Traveloka, this will be valid for four days, so potentially we could use only one token for all our scraping needs.

But how can we mix Playwright and Scrapy in the same spider?

Well, this is the task of the scrapy-Playwright library and you can find the full code inside the directory 53.AWS-WAF/Scrapy-Playwright of the repository, available only for paying readers.