Hands on #3: Building a price comparison tool with Nimble

Using the Nimble E-commerce API and Nimble Browser to build a price comparison tool

This is the month of AI on The Web Scraping Club, in collaboration with Nimble.

During this period, we’ll learn more about how AI can be used for web scraping and about its state of the art. I’ll try some cool products and imagine what the future of the industry could look like, and at the end of the month there will be a great surprise for all the readers.

And we couldn’t have a better partner for this journey: AI applied to web scraping is the company’s core expertise, and I’m sure we will learn something new by the end of the month.

A little bit of self-promotion before starting the article: I was a guest on the Ethical Data Explained podcast by Soax.

If you want to listen, you can find the episode at this link. If you want to try out Soax services, you can use the discount code WEBSCRAPINGCLUB40 to get a 40% discount on the first month of any plan.

In this episode of the “Hands On” series, in collaboration with our AI month partner Nimble, we’re testing their APIs by building a price monitoring tool.

What is price monitoring and why is it important for brands?

Let’s go back to the 80s for a moment and suppose you want to buy a pair of running shoes from your favorite brand.

Typically, you go to the closest store, look at the displayed price, and decide whether to buy. If there are several stores nearby, you can visit them to compare prices for the same pair of shoes, but that’s where your market research ends.

And after you buy the shoes, unless you’re a data nerd like me, I don’t think you’ll go back to the store just to check whether they’ve been discounted.

Today, thanks to the internet and e-commerce, the whole purchasing process is completely different.

First, you look online for the best price across different websites: the brand’s website, third-party sellers, marketplaces like Amazon, and so on. And you can postpone your purchase, perhaps waiting for a discount that, in most cases, will sooner or later show up somewhere.

This translates to a greater variety of buying opportunities for the customer but also a great mess for the brand itself.

The price a brand puts on its running shoes is a statement: given all the effort we put into them, this is what these shoes are worth. But the statement loses credibility if the same pair of shoes has different prices in different places at the same time. And the higher the price, as in luxury, the more credible this statement must be.

That’s why some brands nowadays prefer the direct-to-consumer (DTC) channel, selling only through their own stores, both online and physical, rather than through third-party distributors. One example is Nike, which in recent years has shifted its sales efforts toward DTC, as described in this article, but, given the pros and cons of this approach, is also focusing on a network of selected third-party retailers.

Building a price monitoring tool with Nimble

Let’s use Nike’s case as the example for this episode of the “Hands On” series and to stress-test the Nimble E-commerce API and Nimble Browser.

Setting the baseline

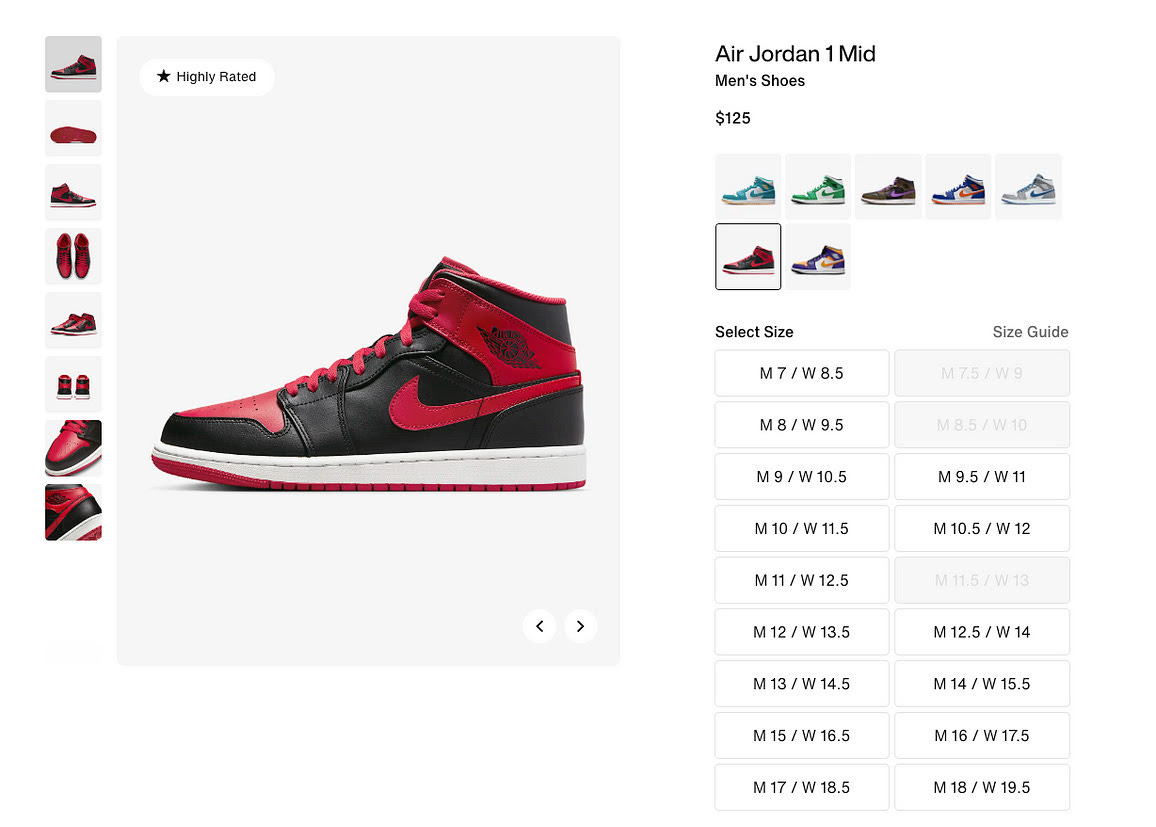

First, let’s establish the baseline price by scraping the Nike website: we’re going to monitor the Air Jordan 1 Mid model across several countries and colors.

Since the Nimble E-commerce API currently supports only the Walmart and Amazon websites, I’ll use the Nimble Browser to retrieve the data, integrating it into a small Scrapy project.

As always, the code is available in the GitHub repository for all readers.

I had to write a custom middleware for handling proxies, because the username required to log in to the Nimble Browser API contains an “@” character, which was not interpreted correctly.
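The repository’s middleware isn’t reproduced here, but a minimal sketch of the idea, assuming the Nimble Browser is reached as an HTTP proxy, could send the credentials in a `Proxy-Authorization` header so the “@” never ends up inside the proxy URL. The class name, the setting names and the proxy address are hypothetical placeholders.

```python
import base64


class NimbleProxyMiddleware:
    """Hypothetical Scrapy downloader middleware: it sends proxy credentials
    in a Proxy-Authorization header instead of embedding them in the proxy
    URL, so an "@" inside the username cannot be confused with the separator
    between the credentials and the host."""

    def __init__(self, proxy_url, username, password):
        # proxy_url must NOT contain credentials, e.g. "http://host:port".
        self.proxy_url = proxy_url
        token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
        self.auth_header = b"Basic " + token

    @classmethod
    def from_crawler(cls, crawler):
        # Placeholder setting names: define them in your settings.py.
        settings = crawler.settings
        return cls(
            settings.get("NIMBLE_PROXY_URL"),
            settings.get("NIMBLE_USERNAME"),
            settings.get("NIMBLE_PASSWORD"),
        )

    def process_request(self, request, spider):
        request.meta["proxy"] = self.proxy_url
        request.headers["Proxy-Authorization"] = self.auth_header
        return None  # let Scrapy continue processing the request
```

To enable it, something like `DOWNLOADER_MIDDLEWARES = {"myproject.middlewares.NimbleProxyMiddleware": 350}` (module path hypothetical) should work: a value below 750 makes it run before Scrapy’s built-in `HttpProxyMiddleware`, which should leave a header set this way in place when the proxy URL carries no credentials. Percent-encoding the “@” as `%40` in the proxy URL is another option worth trying.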

I’ll use the parsed content returned by the API, since the page’s schema.org markup already contains everything we need, such as the price of each color of the shoe.
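The exact shape of the Nimble response isn’t shown here, so the sketch below assumes the page’s schema.org objects are already available as Python dicts (JSON-LD style). It keeps only `Product` entries and flattens their offers into one row each, whether `offers` holds a single `Offer`, a list, or an `AggregateOffer`, where schema.org uses `lowPrice` instead of `price`.

```python
def extract_offers(schema_items):
    """Flatten schema.org Product objects (as dicts) into one row per offer."""
    rows = []
    for item in schema_items:
        types = item.get("@type", [])
        if isinstance(types, str):  # "@type" may be a string or a list
            types = [types]
        if "Product" not in types:
            continue
        offers = item.get("offers", [])
        if isinstance(offers, dict):  # a single Offer or AggregateOffer
            offers = [offers]
        for offer in offers:
            # Offer uses "price"; AggregateOffer exposes "lowPrice" instead.
            price = offer.get("price", offer.get("lowPrice"))
            if price is None:
                continue
            rows.append({
                "name": item.get("name"),
                "color": item.get("color"),
                "sku": offer.get("sku", item.get("sku")),
                "price": float(price),
                "currency": offer.get("priceCurrency"),
            })
    return rows
```

Non-product objects such as breadcrumbs are skipped, so the whole list of objects found on a page can be passed in as-is.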

The scraping part is straightforward, since the Nimble Browser returns the product schema for each input page; the real time sink is the website itself. Some countries, like the USA, show all the available colors of the shoe on a single page, while others, like Canada, have one page per color, and therefore a different schema to parse. On top of that, Saudi Arabia uses different product IDs, something more common than you might think in multi-country e-commerce.
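One way to absorb those differences, sketched here under assumptions, is to merge every parsed page of a country into a single dictionary keyed by (canonical ID, color), and to translate country-specific IDs through a hand-maintained crosswalk. The crosswalk entry, the IDs and the row field names below are hypothetical.

```python
# Hypothetical crosswalk: (country, local product ID) -> canonical ID.
# In practice it would be built by hand or with a matching step.
ID_CROSSWALK = {
    ("SA", "SA-0001"): "AJ1-MID",
}


def canonical_id(country, product_id):
    # Fall back to the local ID when no mapping exists.
    return ID_CROSSWALK.get((country, product_id), product_id)


def merge_country_pages(country, pages):
    """Merge the parsed pages of one country into
    {(canonical_id, color): (price, currency)}.

    `pages` is a list of row lists, so the same code handles one page with
    every color (one list, many rows) and one page per color (many lists)."""
    merged = {}
    for rows in pages:
        for row in rows:
            key = (canonical_id(country, row["product_id"]), row["color"])
            merged[key] = (row["price"], row["currency"])
    return merged
```

Because the key doesn’t depend on the page layout, a single-page country and a page-per-color country end up in the same shape and can be compared directly.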

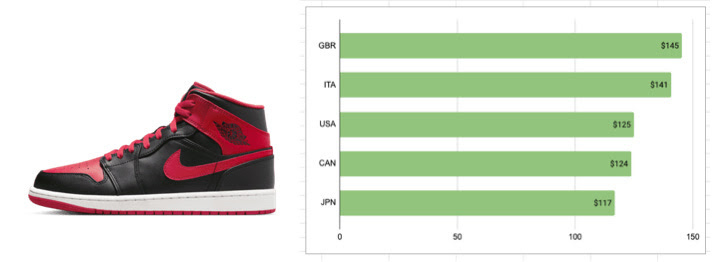

Despite the website’s quirks, the scraper was a piece of cake to set up, and once we gathered the data and converted the prices to USD, we got our first insight.
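Converting prices to USD is a multiplication by an exchange rate, but it’s worth doing with `Decimal` to avoid floating-point rounding surprises on money. The rates below are illustrative placeholders, not real quotes: a real run should use the rates for the scrape date.

```python
from decimal import Decimal, ROUND_HALF_UP

# Illustrative rates only (USD per one unit of local currency), not real
# quotes. In practice, fetch the rates for the scrape date from a provider.
USD_PER_UNIT = {
    "USD": Decimal("1"),
    "CAD": Decimal("0.75"),
    "EUR": Decimal("1.10"),
}


def to_usd(amount, currency, rates=USD_PER_UNIT):
    """Convert a local price to USD, rounded to cents."""
    if currency not in rates:
        raise KeyError(f"no exchange rate for {currency}")
    # str() first, so a float like 129.99 is not carried over with binary noise.
    usd = Decimal(str(amount)) * rates[currency]
    return usd.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
```

Raising on a missing currency is deliberate: silently skipping a country would distort the comparison.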

The iconic shoe in the Black/White/Fire Red version is priced differently around the globe on the same website. There are many possible reasons, including currency fluctuations, logistics, and different taxes across countries, but it can also be a deliberate strategy by the brand. What we’ve calculated is essentially the geo-pricing of this Nike model, a common analysis made possible by web data.
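Once every price is in USD, a simple way to express geo-pricing is each country’s premium or discount relative to a reference market. This sketch uses the US as the reference (an arbitrary choice) and returns percentages rounded to one decimal place.

```python
def geo_price_index(usd_prices, reference="US"):
    """Return each country's premium (+) or discount (-) versus the
    reference country, in percent, from a {country: USD price} dict."""
    base = usd_prices[reference]
    return {
        country: round((price / base - 1) * 100, 1)
        for country, price in usd_prices.items()
    }
```

For example, with made-up prices, `geo_price_index({"US": 125.0, "CA": 112.5, "IT": 150.0})` returns `{"US": 0.0, "CA": -10.0, "IT": 20.0}`.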

Monitoring wholesalers’ pricing policies

As we’ve said before, the same shoe model can be found on several other websites, from Amazon to Farfetch and other retailers.

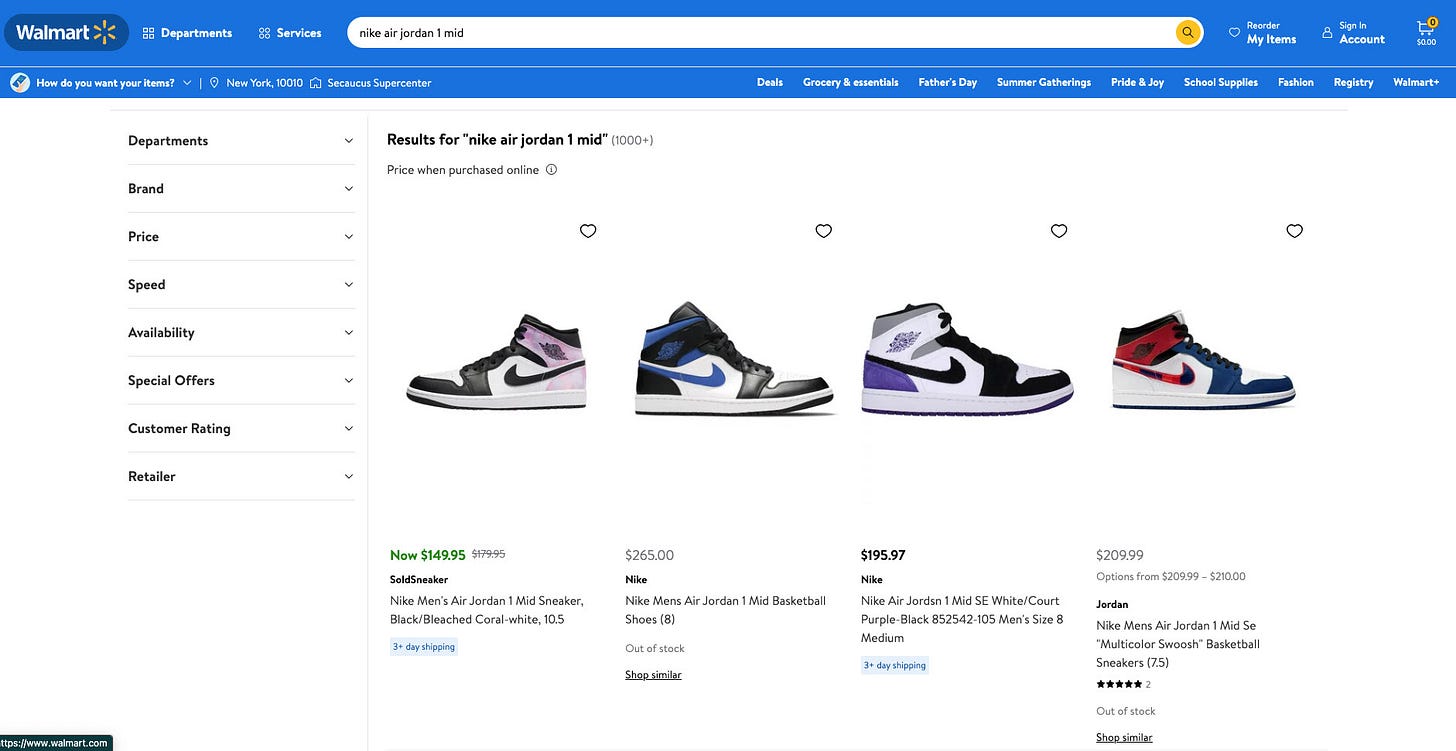

Let’s start by getting the products from the Walmart website. For this task, we’ll use the Nimble E-commerce API, which is specifically designed for Walmart and Amazon.

We’ll feed the scraper the product list page returned by a search for the Air Jordan 1 Mid and stop after 25 pages; otherwise, we’d get too much noise in the dataset.
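The page cap is easy to enforce when building the start URLs. This sketch assumes Walmart search pages follow the `https://www.walmart.com/search?q=...&page=N` pattern; verify it against the live site before relying on it.

```python
from urllib.parse import urlencode

MAX_PAGES = 25  # beyond this, results drift away from the searched model


def walmart_search_urls(query, max_pages=MAX_PAGES):
    """Build the search-result page URLs to feed the scraper.

    Assumption: Walmart search pages use the ?q=...&page=N pattern."""
    return [
        "https://www.walmart.com/search?" + urlencode({"q": query, "page": page})
        for page in range(1, max_pages + 1)
    ]
```

`urlencode` takes care of escaping the query, turning spaces into `+`.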

As you can see from the Nimble E-commerce API documentation, it’s so easy to use that we can extract everything we need with a Python script using requests (again, it’s in the GitHub repository).
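The script in the repository uses `requests`; the sketch below uses only the standard library so it runs without extra dependencies. The endpoint URL and the payload field names (`vendor`, `url`, `parse`) are placeholders rather than Nimble’s documented API, and Basic authentication is an assumption: check the Nimble E-commerce API documentation for the real values.

```python
import base64
import json
import urllib.request

# Placeholder endpoint and field names: replace them with the ones in the
# Nimble E-commerce API documentation.
API_ENDPOINT = "https://api.example.invalid/ecommerce"


def build_request(username, password, page_url):
    """Prepare a POST request asking the API to fetch and parse one page."""
    payload = {"vendor": "walmart", "url": page_url, "parse": True}
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        API_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def fetch_json(request, timeout=60):
    # Send the request and decode the JSON body (raises on HTTP errors).
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Splitting request construction from sending keeps the payload easy to inspect before spending API credits on it.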

After a few seconds, we get the results, including interesting details about the sellers on the Walmart Marketplace.
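To get a first view of the marketplace, the parsed results can be grouped by seller. The `seller` and `price` keys are hypothetical names; adapt them to the fields the API actually returns.

```python
from collections import defaultdict


def summarize_sellers(products):
    """Group product rows by seller: number of listings and lowest price.

    Assumption: each row has "seller" and "price" keys (hypothetical names)."""
    summary = defaultdict(lambda: {"listings": 0, "min_price": float("inf")})
    for row in products:
        seller = row.get("seller") or "unknown"  # missing seller info
        entry = summary[seller]
        entry["listings"] += 1
        entry["min_price"] = min(entry["min_price"], float(row["price"]))
    return dict(summary)
```

Sellers with many listings or unusually low minimum prices are natural candidates for a closer look.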

It would be off-topic to cover it here, but with some AI and human supervision we could now match each item on the Nike website to its counterpart on Walmart and spot any unusual prices.
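As a much simpler stand-in for the AI-assisted matching, plain string similarity from Python’s `difflib` can shortlist candidate matches and flag prices that deviate from the Nike baseline. The 0.8 similarity threshold and the 20% deviation limit are arbitrary assumptions, and low-similarity items are skipped here where a real pipeline would send them to human review.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    # Ratio in [0, 1]; 1.0 means the lowercased titles are identical.
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def flag_unusual_prices(baseline, listings, min_score=0.8, max_deviation=0.2):
    """baseline: {official title: USD price}; listings: [(title, USD price)].

    Returns (listing title, matched official title, relative deviation) for
    confidently matched listings whose price deviates beyond max_deviation."""
    flagged = []
    for title, price in listings:
        best = max(baseline, key=lambda ref: similarity(ref, title))
        if similarity(best, title) < min_score:
            continue  # weak match: leave it for human review
        deviation = (price - baseline[best]) / baseline[best]
        if abs(deviation) > max_deviation:
            flagged.append((title, best, round(deviation, 3)))
    return flagged
```

String similarity alone will confuse sizes, colors and kids’ versions, which is exactly where the AI and human supervision mentioned above earn their keep.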

The same approach works for Amazon.com too. Here the product list page gives us less information, but we can then loop through the product detail pages to retrieve all the sellers and offers for each item shown on the website, without worrying about the technical infrastructure.
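The two-step Amazon flow can be sketched as a loop over the list results that opens each product detail page once. The `asin` and `offers` field names are assumptions about the parsed output, and `fetch_detail` stands for whatever function calls the API; passing it in keeps the sketch testable without network access.

```python
def crawl_amazon_offers(list_results, fetch_detail):
    """Two-stage crawl: product list results -> detail pages -> offers.

    `list_results` are dicts with an "asin" key; `fetch_detail` takes a
    product URL and returns the parsed page as a dict with an "offers" list
    (both shapes are assumptions)."""
    offers = []
    seen = set()
    for item in list_results:
        asin = item.get("asin")
        if not asin or asin in seen:  # skip missing and duplicate ASINs
            continue
        seen.add(asin)
        detail = fetch_detail(f"https://www.amazon.com/dp/{asin}")
        for offer in detail.get("offers", []):
            offers.append({"asin": asin, **offer})  # tag each offer with its item
    return offers
```

Deduplicating ASINs before fetching matters because the same item often appears on several list pages, and every detail request costs time and credits.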

Final remarks

Of course, a real production project would need to monitor more websites and do more data cleaning, but that was beyond the scope of this article.

I wanted to stress-test the Nimble services and find out whether these AI-powered web scraping tools could be useful in a real-world use case.

I first tested the Nimble Browser API, and it confirmed the impression I had in the previous article: it’s a powerful tool with plenty of room for improvement, which should come as it’s trained on more and more websites with different structures and formats.

The E-commerce API, instead, targets only Walmart and Amazon USA (I tried it on Amazon UK with no luck, but I suppose it’s just a matter of enabling the domain). On top of the Browser API’s features, it lets you select the US state and Walmart store from which to check prices and delivery times, and the zip code for Amazon deliveries. If you’re studying this kind of information, it’s a great feature in a stunningly easy-to-use solution.

At the risk of repeating myself, I can definitely say that these Nimble services are already great, still have room for improvement, and are designed for a professional audience.