Botasaurus: an anti-ban web scraping framework

Could it be your new friend for your web scraping operations?

While browsing GitHub in search of new web scraping tools, I came across the Botasaurus repository, and this claim caught my attention:

Most Stealthiest Framework LITERALLY: Based on the benchmarks, which we encourage you to read here, our framework stands as the most stealthy in both the JS and Python universes. It is more stealthy than the popular Python library undetected-chromedriver and the well-known JavaScript library puppeteer-stealth

It seems it’s time for a new stress test!

Before continuing with the article, I’m happy to invite you to another webinar in March.

On March 28th, I'll be hosted by NetNut for the launch of their new Website Unblocker.

It will be a good occasion to talk about the challenges in the web scraping industry and how web unblockers can help solve them.

Link to the webinar: https://netnut.io/lp/website-unblocker-webinar/

Setting up and creating our first basic scraper

After installing it with pip,

```shell
python -m pip install botasaurus --upgrade
```

we can create our first scraper, following the documentation.

```python
from botasaurus import *

@browser
def scrape_heading_task(driver: AntiDetectDriver, data):
    # Navigate to the Omkar Cloud website
    driver.get("https://www.omkar.cloud/")

    # Retrieve the heading element's text
    heading = driver.text("h1")

    # Save the data as a JSON file in output/scrape_heading_task.json
    return {
        "heading": heading
    }

if __name__ == "__main__":
    # Initiate the web scraping task
    scrape_heading_task()
```

In the output folder of the project, we'll find the scrape_heading_task.json file, with the results of the scraping, in this case, the heading field.

If we run this scraper, which you can find in the file first_scraper.py in The Web Scraping Club's GitHub repository, you'll see a browser window open and navigate to the website.

As the documentation says, by using AntiDetectDriver we're telling Botasaurus to automatically provide the scraper with an anti-detection Selenium driver.
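Conceptually, the decorator does the plumbing for you: it builds the driver, hands it to your function together with the input data, and saves whatever the function returns. The sketch below illustrates that pattern with the standard library only. It is not Botasaurus's implementation: `with_fake_driver` and `FakeDriver` are hypothetical names, and the fake driver just records the URLs it is asked to visit instead of launching Chrome.

```python
import functools
import json
from pathlib import Path


class FakeDriver:
    """Hypothetical stand-in for a browser driver: it only records visited URLs."""

    def __init__(self):
        self.visited = []

    def get(self, url):
        self.visited.append(url)

    def quit(self):
        pass  # a real driver would close the browser here


def with_fake_driver(output_dir="output"):
    """Hypothetical decorator: inject a driver, run the task, save its result as JSON."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(data=None):
            driver = FakeDriver()  # a real framework would launch a configured browser
            try:
                result = func(driver, data)
            finally:
                driver.quit()  # release the driver even if the task raises
            out_dir = Path(output_dir)
            out_dir.mkdir(parents=True, exist_ok=True)
            # Mirrors the output/<function_name>.json convention described above
            (out_dir / f"{func.__name__}.json").write_text(json.dumps(result, indent=2))
            return result

        return wrapper

    return decorator


@with_fake_driver()
def scrape_heading_task(driver, data):
    driver.get("https://www.omkar.cloud/")
    return {"visited": driver.visited}
```

Calling `scrape_heading_task()` here would return the dict and write `output/scrape_heading_task.json`; the task function itself never has to create, configure, or close the driver.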

```python
@browser(
    headless=True,
    profile='Profile1',
)
def scrape_heading_task(driver: AntiDetectDriver, data):
    ...  # same scraping logic as in the first scraper
```

We can also set the headless mode and a profile to use in our projects.

We could also use anti-detect requests instead of Selenium:

```python
from botasaurus import *

@request(use_stealth=True)
def scrape_heading_task(request: AntiDetectRequests, data):
    response = request.get('https://www.omkar.cloud/')
    print(response.status_code)
    return response.text

scrape_heading_task()
```

Bypassing Cloudflare with Botasaurus

Let’s see whether Botasaurus can bypass Cloudflare-protected websites like Harrods.com. As suggested in the documentation, we’re using the requests module.

I created a small scraper you can find in the GitHub repository, under the folder Botasaurus, file cloudflare.py.

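```python
import json
import time
from random import randrange

from lxml import etree
from botasaurus import *

@request(use_stealth=True)
def scrape_heading_task(request: AntiDetectRequests, data):
    # Load the home page first, then wait a random 0-9 seconds
    soup = request.bs4("https://www.harrods.com/")
    interval = randrange(10)
    time.sleep(interval)

    # Load the category page (returned as a BeautifulSoup object)
    category = request.bs4("https://www.harrods.com/en-it/shopping/women-clothing-dresses")

    # Parse it with lxml and read the JSON-LD ItemList holding the product URLs
    dom = etree.HTML(str(category))
    json_data = json.loads(dom.xpath('//script[contains(text(), "ItemList")]/text()')[0])

    for url_list in json_data['itemListElement']:
        url = 'https://www.harrods.com' + url_list['url'].replace('/shopping/shopping', '/shopping')
        print(url)
        # Fetch each product page with a plain stealth request
        product_page = request.get(url)

scrape_heading_task()
```

Unfortunately, the documentation doesn’t explain much about what happens under the hood, so you need to read the source code to get a better understanding.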

With the use_stealth=True parameter, you force the scraper to send browser-like requests, increasing the chances of success.
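For intuition, the header part of “browser-like requests” can be sketched with the standard library alone. The header values below are illustrative assumptions, not what Botasaurus actually sends, and a header-only approach does not reproduce lower-level signals such as the TLS handshake, which anti-bots can also inspect.

```python
import urllib.request

# Illustrative browser-like headers (assumed values, not Botasaurus's actual set).
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
    "Referer": "https://www.google.com/",
}


def build_browser_like_request(url: str) -> urllib.request.Request:
    """Build (but do not send) a GET request carrying browser-like headers."""
    return urllib.request.Request(url, headers=BROWSER_HEADERS, method="GET")


req = build_browser_like_request("https://www.harrods.com/")
# urllib normalizes header names with str.capitalize(), e.g. "User-agent".
print(req.get_header("User-agent"))
# To actually send it: urllib.request.urlopen(req, timeout=30)
```

Keeping the headers in one dictionary makes it easy to keep them mutually consistent (for example, a Chrome user agent with Chrome-style Accept values), which matters more than any single header.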

In the rest of the scraper, I’ve used both BeautifulSoup (via request.bs4) and plain requests (via request.get) to show the two ways you can fetch and parse the results.
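The key parsing step of cloudflare.py, finding the script tag that contains the ItemList JSON and turning its relative url fields into absolute product URLs, can also be done with the standard library alone. This is a minimal sketch that assumes, like the scraper, a schema.org ItemList whose itemListElement entries carry a relative url; `ScriptCollector` and `product_urls` are hypothetical names and the sample HTML is made up.

```python
import json
from html.parser import HTMLParser


class ScriptCollector(HTMLParser):
    """Collect the text content of every <script> tag in a page."""

    def __init__(self):
        super().__init__()
        self.scripts = []
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self._in_script = True
            self.scripts.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_script = False

    def handle_data(self, data):
        if self._in_script:
            self.scripts[-1] += data


def product_urls(html: str, base: str = "https://www.harrods.com") -> list[str]:
    parser = ScriptCollector()
    parser.feed(html)
    # Same idea as the XPath //script[contains(text(), "ItemList")]: take the first match
    item_list = next((s for s in parser.scripts if "ItemList" in s), None)
    if item_list is None:
        raise ValueError("no ItemList script found")
    data = json.loads(item_list)
    # Build absolute URLs and collapse the duplicated /shopping segment
    return [
        base + entry["url"].replace("/shopping/shopping", "/shopping")
        for entry in data["itemListElement"]
    ]


sample = """<html><head>
<script type="application/ld+json">
{"@type": "ItemList", "itemListElement": [
  {"url": "/en-it/shopping/shopping/dress-a"},
  {"url": "/en-it/shopping/dress-b"}
]}
</script></head><body></body></html>"""

print(product_urls(sample))
```

Reading the embedded JSON instead of the rendered product grid tends to be more robust, since it does not depend on CSS classes that change with every redesign.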

In any case, with this simple scraper, I was able to load the first 60 product pages of a category in a headless way, without opening a browser window.

This is great because the scraper not only works but is also not as resource-intensive as a Playwright solution would be.

The Cloudflare test is passed with flying colors; let’s see what happens with Datadome.

Bypassing Datadome with Botasaurus

Datadome is known for collecting events from the browser session, so I’m skeptical that the same solution will work here, since it doesn’t use a browser.

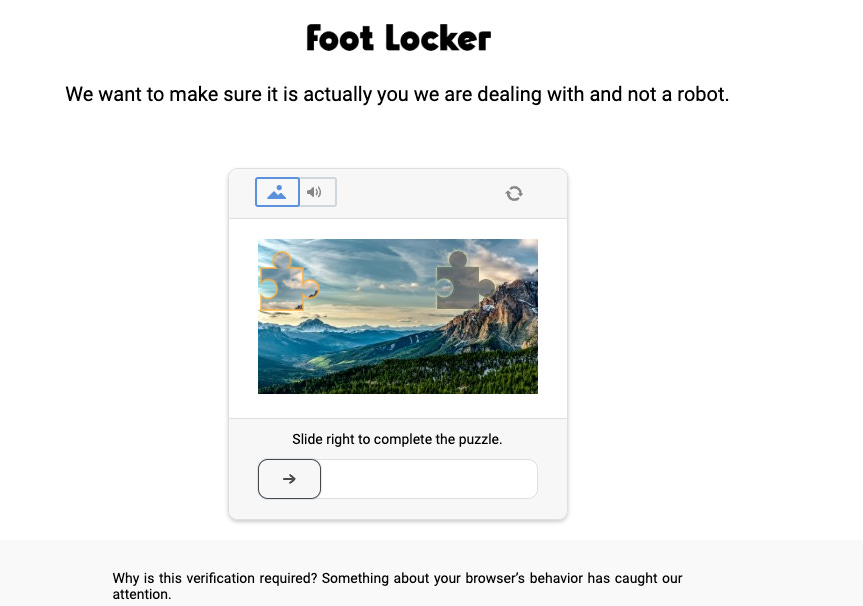

We’re going to test Botasaurus on the Footlocker.it website, trying to scrape the first page of the sneakers category.

As I expected, while we can load the PLP (product list page) of the sneakers category, we get blocked as soon as we try to open the detail page of the first product.

Even when using a browser, I got the Datadome CAPTCHA immediately, as soon as I browsed the PLP.

Reading further in the Botasaurus documentation, I found that I could create a “stealth instance” of a browser and tweak its headers and screen resolution to make it look more realistic.

While using only the stealth browser didn’t solve the issue, adding the user_agent and window_size parameters increased the scraping success rate.

```python
@browser(
    user_agent=bt.UserAgent.REAL,
    window_size=bt.WindowSize.REAL,
    create_driver=create_stealth_driver(
        start_url="https://www.footlocker.it",
    ),
)
def scrape_heading_task(driver: AntiDetectDriver, data):
    ...  # scraping logic, see datadome.py in the repository
```

With good proxy rotation, I could make the datadome.py scraper run until it hit the CAPTCHA, and then restart it from the last URL read successfully.
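Restarting from the last URL read correctly is essentially checkpointing. Here is a minimal, framework-agnostic sketch under these assumptions: `fetch` is a hypothetical function you supply (in datadome.py it would wrap the Botasaurus driver), it raises the hypothetical `CaptchaError` when the CAPTCHA appears, progress is stored in a local JSON file, and the proxies are rotated round-robin whenever a page is blocked.

```python
import itertools
import json
from pathlib import Path


class CaptchaError(Exception):
    """Hypothetical exception raised by fetch() when a CAPTCHA page is detected."""


def scrape_with_checkpoint(urls, fetch, proxies, checkpoint="progress.json", max_attempts=3):
    """Scrape urls in order, saving progress so a blocked run can resume later.

    fetch(url, proxy) is supplied by the caller and should raise CaptchaError when blocked.
    Returns (results, blocked_url), where blocked_url is None if every URL succeeded.
    """
    path = Path(checkpoint)
    done = json.loads(path.read_text()) if path.exists() else {}
    proxy_pool = itertools.cycle(proxies)  # simple round-robin rotation
    proxy = next(proxy_pool)
    for url in urls:
        if url in done:
            continue  # already scraped in a previous run
        for _ in range(max_attempts):
            try:
                done[url] = fetch(url, proxy)
                break
            except CaptchaError:
                proxy = next(proxy_pool)  # switch proxy before the next attempt
        else:
            # Still blocked after all attempts: persist progress and stop here
            path.write_text(json.dumps(done))
            return done, url
        path.write_text(json.dumps(done))  # checkpoint after every successful page
    return done, None
```

Rerunning the function with the same checkpoint file skips every URL already saved and resumes at the one that was blocked.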

There’s also the option to install the Capsolver browser extension on the fly and use it in the browser, but that will be the topic of another post.

Bypassing Kasada with Botasaurus

New anti-detect tools and unblockers are usually unable to bypass Kasada. It is not as widespread as other anti-bots, but it is still an effective solution, with a tough challenge to pass as soon as you connect to the website.

I first tried a scraper similar to the one used for Cloudflare, with no success.

Let’s see whether the same configuration used for Datadome can bypass it.

The scraper can be found in the kasada.py file in the repository, and it works like a charm.

```python
import time
from random import randrange

from selenium.webdriver.common.by import By
from botasaurus import *

@browser(
    user_agent=bt.UserAgent.REAL,
    window_size=bt.WindowSize.REAL,
    create_driver=create_stealth_driver(
        start_url="https://www.canadagoose.com/us/en/",
    ),
)
def scrape_heading_task(driver: AntiDetectDriver, data):
    # Random pause (0-9 s) after the stealth driver has opened the start URL
    interval = randrange(10)
    time.sleep(interval)

    driver.get("https://www.canadagoose.com/us/en/shop/women/outerwear/parkas/")
    interval = randrange(10)
    time.sleep(interval)

    # Collect the product URLs from the category page (PLP)
    url_list_data = driver.find_elements(By.XPATH, '//a[@itemprop="url"]')
    url_list_final = []
    for url_list in url_list_data:
        url = url_list.get_attribute('href')
        print(url)
        url_list_final.append(url)

    # Visit each product page and print the product name
    for url in url_list_final:
        driver.get(url)
        product_name = driver.find_element(By.XPATH, '//h4[@itemprop="name"]').text
        print(product_name)
        interval = randrange(10)
        time.sleep(interval)

scrape_heading_task()
```

How does Botasaurus manage browser fingerprinting?

I didn’t find any mention of browser fingerprinting in the repository’s documentation, so I assume the fingerprint is not spoofed.

All the tests so far ran on my laptop, but I suspected the results could be different on a server.

In fact, after configuring an AWS instance and uploading the Botasaurus scrapers there, both the Kasada and the Datadome scrapers stopped working, even though I used a residential proxy.

The reason is quite simple: we’re using standard ChromeDriver versions for our scraping, which means the browser’s APIs leak sensitive information about our running environment.
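To give an idea of what “leaking” means, here is a toy server-side heuristic over a few values a page can read through browser APIs. It is a hypothetical sketch, not how any specific anti-bot works: the dictionary keys are made-up names, and only two well-known signals are checked, navigator.webdriver (true in a browser controlled through WebDriver unless patched) and a software WebGL renderer such as SwiftShader or llvmpipe, which is typical of machines without a GPU, like many cloud instances.

```python
# CPU-only GL implementations that often show up on GPU-less servers
SOFTWARE_RENDERERS = ("swiftshader", "llvmpipe")


def environment_red_flags(fp: dict) -> list[str]:
    """Toy heuristic: list fingerprint values hinting at automation or a server.

    fp is a hypothetical dict of values collected by JavaScript in the page.
    """
    flags = []
    if fp.get("navigator.webdriver"):
        flags.append("navigator.webdriver is true (automation-controlled browser)")
    renderer = fp.get("webgl.renderer", "").lower()
    if any(name in renderer for name in SOFTWARE_RENDERERS):
        flags.append(f"software WebGL renderer: {fp['webgl.renderer']}")
    return flags
```

A residential proxy changes the IP address but none of these values, which is consistent with the scrapers failing on AWS even behind a residential proxy.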

This is exactly the topic of my next webinar with Tamas, CEO of Kameleo, on March 20.

We’ll talk about anti-detect browsers and their role in the web scraping industry.

Join us at this link: https://register.gotowebinar.com/register/8448545530897058397

Botasaurus lacks the advanced browser fingerprint management found in anti-detect browsers, which makes it a good tool for small projects running on consumer-grade hardware. It simplifies the customization of webdrivers and handles most of the configuration in the background, so you don’t have to go crazy recreating a working setup every time you need to bypass an anti-bot.

Unfortunately, it’s almost useless in a server environment, since most anti-bots nowadays can easily recognize such setups and consequently block every access to the website coming from a datacenter.

On the other hand, one killer feature is the ability to install Chrome extensions on the fly by adding just one line of code.

Even without server capability, it is pretty good for small scraping jobs. Thanks for sharing!

AntiDetectDriver: there is no such module in the library, so it doesn’t work.

From docs:

```Python
from botasaurus import *
from chrome_extension_python import Extension

@browser(
    extensions=[Extension("https://chromewebstore.google.com/detail/adblock-%E2%80%94-best-ad-blocker/gighmmpiobklfepjocnamgk")],
)
def open_chrome(driver: AntiDetectDriver, data):
    driver.prompt()

open_chrome()
```

```Output
❯ /home/puma/Development/Libgen/libgen/bin/python /home/puma/Development/Libgen/main.py
Traceback (most recent call last):
  File "/home/puma/Development/Libgen/main.py", line 5, in <module>
    @browser(
     ^^^^^^^
NameError: name 'browser' is not defined
(libgen)
```

With some changes:

```Python
from botasaurus import *
from botasaurus.browser import browser, Driver, Wait
from chrome_extension_python import Extension

@browser(
    extensions=[Extension("https://chromewebstore.google.com/detail/adblock-%E2%80%94-best-ad-blocker/gighmmpiobklfepjocnamgk")],
)
def open_chrome(driver: AntiDetectDriver, data):
    driver.prompt()

open_chrome()
```

```Output
❯ /home/puma/Development/Libgen/libgen/bin/python /home/puma/Development/Libgen/main.py
Traceback (most recent call last):
  File "/home/puma/Development/Libgen/main.py", line 9, in <module>
    def open_chrome(driver: AntiDetectDriver, data):
                            ^^^^^^^^^^^^^^^^
NameError: name 'AntiDetectDriver' is not defined
(libgen)
```

What do I need to do to make it work?