Web Scraping and Coding: Five Programming Languages to Check Out

What's a good programming language to learn for web scraping?

Today, with our partner IPRoyal, we're talking about which programming languages are worth learning for web scraping. For all The Web Scraping Club readers, IPRoyal has prepared a 50% discount on Royal Residential Proxies with the code WSC50.

If you visit The Web Scraping Club Substack, I'm guessing you're interested in online data gathering. Some of you are experienced and familiar with web scraping, APIs, proxies, and many other nuances of online data gathering. Others may be just starting out; for them, I recommend the Web Scraping From 0 to Hero course.

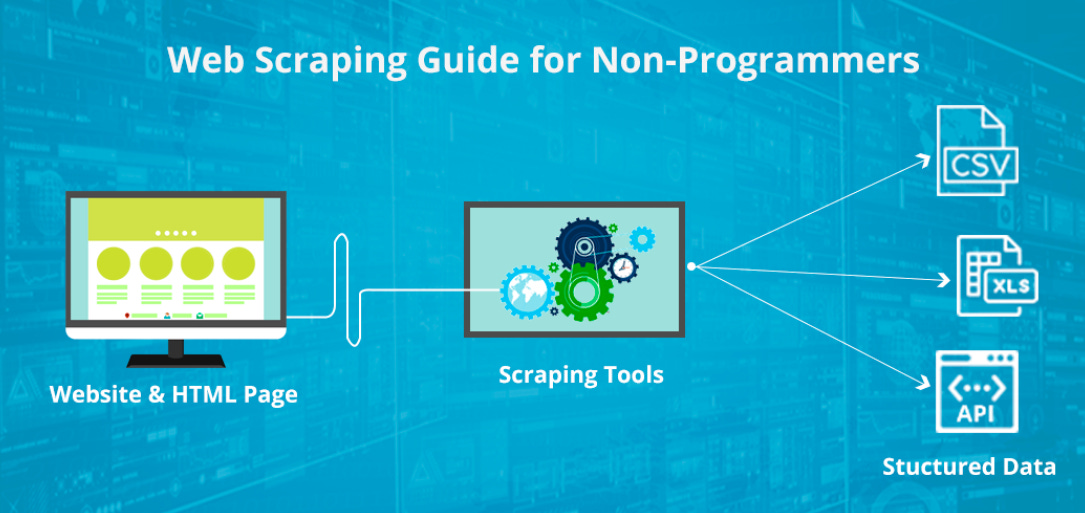

The more you learn about web scraping, the closer you get to programming languages. That does not necessarily mean you have to be a coder to scrape the web: there are no-code solutions for getting the data you need. However, knowing a programming language like Python can significantly expand your capabilities.

Why Should You Learn to Code for Web Scraping?

In short, learning a programming language lets you accomplish more in the digital world. For web scraping, it opens the door to numerous customization options, letting you target the specific page elements that hold relevant information. And I'm not talking solely about data scientists. Here's a good piece in The Economist about a journalist who learned to scrape the web to enhance his articles.

Nowadays, information is everywhere. Imagine you are looking to rent an apartment. You could go through dozens of rental sites manually, looking for good options within your price range. Or you could write a scraper, a few lines of code that visit these sites and collect all matching options in one organized file. You have just achieved in minutes what would have taken you several hours.
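To make the apartment example concrete, here is a minimal sketch that uses only Python's standard library. It assumes a hypothetical rental site whose listings are marked up as `<li class="listing" data-price="...">`; real sites use different markup, and in practice you would download the pages first instead of using the inline `SAMPLE_HTML` string. The names `ListingParser`, `listings_in_budget`, and `to_csv` are made up for illustration.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical markup: each listing is an <li class="listing"> whose
# data-price attribute holds the monthly rent and whose text is the title.
SAMPLE_HTML = """
<ul>
  <li class="listing" data-price="950">Studio near the park</li>
  <li class="listing" data-price="1400">Two-bedroom flat</li>
  <li class="listing" data-price="1100">One-bedroom with balcony</li>
</ul>
"""


class ListingParser(HTMLParser):
    """Collects {"title", "price"} dicts from <li class="listing"> elements."""

    def __init__(self):
        super().__init__()
        self.listings = []
        self._price = None  # price of the listing currently being read, if any
        self._text = []     # text chunks belonging to that listing

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # Exact match on the class attribute; good enough for this sample markup.
        if tag == "li" and attributes.get("class") == "listing":
            self._price = int(attributes["data-price"])
            self._text = []

    def handle_data(self, data):
        if self._price is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "li" and self._price is not None:
            title = "".join(self._text).strip()
            self.listings.append({"title": title, "price": self._price})
            self._price = None


def listings_in_budget(html, min_price, max_price):
    """Parse the page and keep only listings within the price range (inclusive)."""
    parser = ListingParser()
    parser.feed(html)
    return [item for item in parser.listings if min_price <= item["price"] <= max_price]


def to_csv(listings):
    """Serialize the listings as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "price"])
    writer.writeheader()
    writer.writerows(listings)
    return buffer.getvalue()


if __name__ == "__main__":
    print(to_csv(listings_in_budget(SAMPLE_HTML, 900, 1200)))
```

To save the result to disk instead of printing it, you could write the CSV text to a file opened with `open("apartments.csv", "w", newline="")`.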

To write your own web scraping scripts, you need to know a programming language. But which one should you choose? Python, PHP, JavaScript, Ruby, or any of the hundreds of other options out there? No single language is the best choice for everybody. Instead, to help you get started, I'll list five programming languages for web scraping that are popular, widely used, have helpful libraries and frameworks, or are easy to learn.

Python

Anyone even briefly familiar with scraping will not be surprised to see Python at the top of this list. Python is the most popular choice for web scraping and one of the most widely used programming languages overall. Knowing it also opens the door to plenty of well-paid job opportunities.

One of the reasons for Python's popularity is its readability. Its syntax resembles plain English, which makes it easier for beginners to learn. Furthermore, it is open source and has an active online community you can consult if you have any questions.

Best of all, Python has some of the best libraries for scraping. BeautifulSoup is excellent for parsing HTML and XML documents and efficiently extracting information from the pages of most websites. Meanwhile, the Requests library, which downloads those pages, can route your traffic through rotating residential proxies, letting you target more websites with an extra layer of obfuscation.
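Here is a minimal sketch of how the two libraries fit together, assuming `requests` and `beautifulsoup4` are installed. The proxy URLs, the `h2.title` selector, and the helper names `fetch` and `extract_titles` are hypothetical placeholders. Rotation here is done client-side with `itertools.cycle`; some providers instead rotate IPs for you behind a single gateway endpoint, in which case one proxy URL is enough.

```python
import itertools

import requests
from bs4 import BeautifulSoup

# Hypothetical proxy endpoints: replace with the addresses and credentials from your provider.
PROXIES = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
]
proxy_pool = itertools.cycle(PROXIES)  # yields the proxies in turn, forever


def fetch(url):
    """Download a page, routing each request through the next proxy in the pool."""
    proxy = next(proxy_pool)
    response = requests.get(
        url,
        proxies={"http": proxy, "https": proxy},
        # Browser-like User-Agent; some sites block Requests' default one.
        headers={"User-Agent": "Mozilla/5.0"},
        timeout=10,
    )
    response.raise_for_status()  # raise an exception on 4xx/5xx status codes
    return response.text


def extract_titles(html):
    """Parse the HTML and return the text of every <h2 class="title"> element."""
    soup = BeautifulSoup(html, "html.parser")
    return [tag.get_text(strip=True) for tag in soup.select("h2.title")]
```

Usage would look like `extract_titles(fetch("https://example.com/listings"))`, with the URL swapped for a page you are allowed to scrape. Keeping downloading and parsing in separate functions makes the parser easy to test on saved HTML.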

Another advantage is the Scrapy framework. This robust tool lets you handle website authentication, parse content, manage concurrent requests, and target more complex websites. It has a built-in cookie handler, filters out duplicate requests, and helps you fill in and submit forms, saving you time.

JavaScript (Node.js)

JavaScript started out as a front-end language and, for a long time, ran almost exclusively in the browser. However, the Node.js runtime environment enables back-end and server-side scripting, making JavaScript a powerful web scraping tool.

If you master JavaScript, you will find writing scraping scripts much easier. Such numbers are never exact, but surveys suggest that JavaScript runs on about 98.9% of all websites. Understanding it therefore helps you scrape almost anything you find online, be it rental prices, financial data, travel expenses, etc.

Node.js also boasts a vast collection of libraries managed through npm (Node Package Manager). Its Puppeteer library controls a headless Chromium browser that can imitate a real user, which can help bypass some anti-scraping systems. Because it renders pages like a real browser, it also excels at scraping websites built with popular JavaScript frameworks such as Angular or React. Another nifty tool is Cheerio, a library for parsing HTML and XML that plays a role similar to Python's BeautifulSoup, so it should feel familiar if you are switching from Python.

PHP

Web scraping with PHP is not for beginners; I recommend this path for those who already know PHP or have a specific reason to use it for data gathering. Most often, you will be using PHP's cURL extension, which wraps libcurl, the library behind the curl command-line tool, to manage data transfers over the Internet.

cURL lets you send HTTP requests to retrieve the content you need. You can set custom HTTP headers on each request, and the curl_multi functions let you run several transfers in parallel to target multiple sites at once, which speeds up the process. cURL can handle authentication, whether by password, bearer token, or API key, and lets you check HTTP response codes, including errors. Note, however, that cURL does not follow redirects by default: either enable the CURLOPT_FOLLOWLOCATION option or request the exact final URL you want to scrape.

PHP has somewhat limited parsing features. You can use the third-party Simple HTML DOM Parser, which does exactly what its name says: it parses HTML documents. Alternatively, you can use regular expressions to match patterns within HTML and extract information, but that approach breaks down on more complex websites.

Ruby

Just like Python, Ruby is a great choice for beginners thanks to its concise and straightforward syntax. It is also open source and has an active, helpful community. Drop by the community section of Ruby's website to see all the ways you can mingle, learn from experienced Ruby developers, or contribute to the open-source project yourself.

Ruby also offers a plethora of additional tools, playfully called gems, to enhance your web scraping tasks. Nokogiri is used for parsing HTML and XML. Scrapy itself is a Python framework, but Ruby has comparable scraping frameworks, such as Kimurai. Another Ruby gem is HTTParty, which streamlines HTTP requests with a simple API. You can also use Ruby's built-in Logger class to monitor how your scraping scripts perform and to identify and fix bugs.

Ruby boasts over 170,000 gems, which are essentially packaged chunks of code that include test files and usage documentation. With so many gems available, you can often use an existing one instead of writing your own code from scratch. Beginners can also inspect a gem's code to learn from it, or rely on gems for more complex tasks until they develop sufficient knowledge.

R

R, another great open-source programming language, offers data analysts and scientists numerous benefits for web scraping. It excels at handling large data sets and has built-in data visualization features, which make things much more manageable. If you're into statistical analysis, go straight for R, as it was primarily developed for this purpose.

However, R is not for the faint of heart. Its syntax is more complex than that of Python or JavaScript, and its scalability and error handling are only average. On the other hand, it offers outstanding packages (similar to gems and libraries), like rvest or RSelenium, for extracting online data that integrate easily with the rest of its ecosystem for further analysis.

Remember that data analysts and scientists are in high demand, and that demand is predicted to continue for the foreseeable future. If you have a sharp mind and an affinity for statistics, R combines perfectly with web scraping and lets you focus on the analysis and visualization.

I Learned to Code: What's Next?

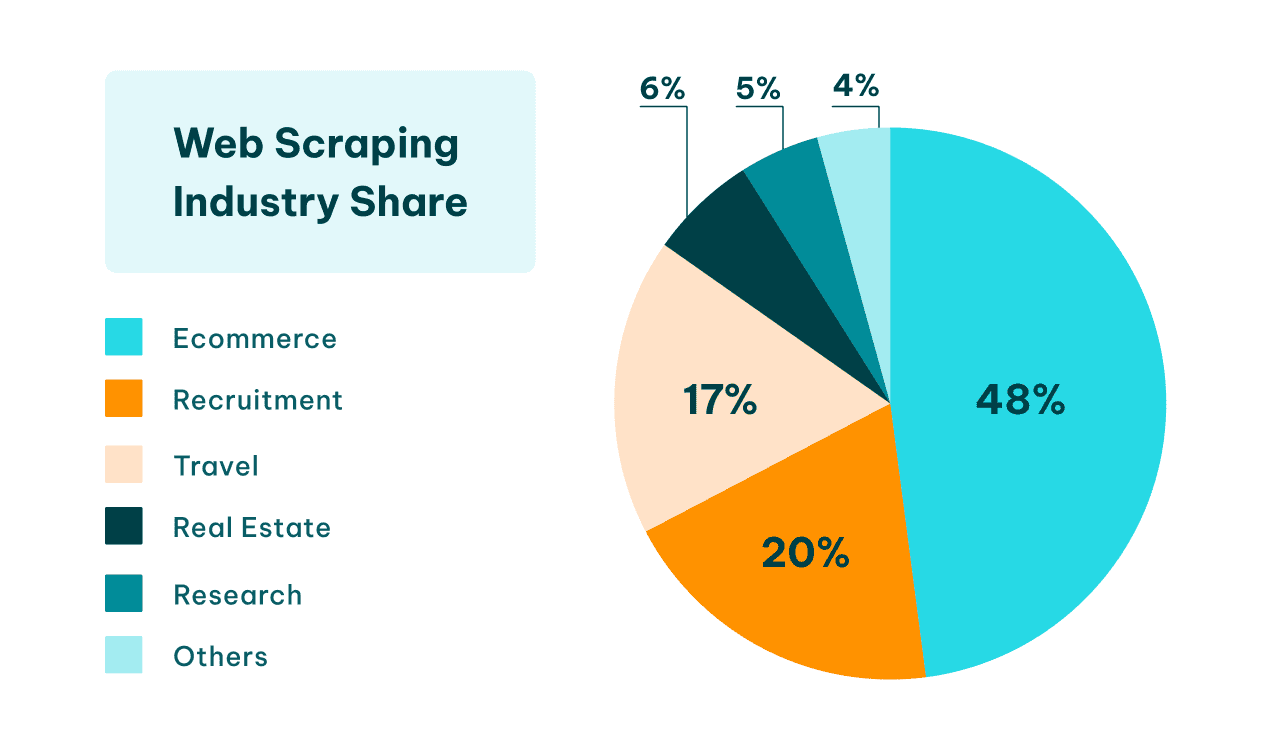

Many programmers agree that working on an actual project is the best way to learn to code. Whether you're building a website with JavaScript or creating databases with Python, pick a project that involves gathering and processing online data. Of course, you must be wondering about employment, so take a look at the chart showing web scraping usage by industry.

As you can see, e-commerce is the largest sector, followed by recruitment and travel. HR professionals who know how to scrape data can process thousands of candidate profiles to spot the best talent. Meanwhile, digital marketing specialists can scrape commodity prices and user reviews to improve their product placement. If you learn R and enjoy biology, consider working in the healthcare sector, which uses Big Data to improve diagnostics and track social well-being trends.

Nowadays, data is a valuable commodity, so employment opportunities abound. The same applies to coding. Although it is certainly possible to scrape the web without knowing any programming language, you will significantly expand your opportunities if you learn one.